In the previous articles [1][2][3][4][5], we saw how to easily and quickly deploy an application server and a database to OCI. We also noticed that we have multiple programming languages to choose from.

In this article, we will see how to use the OCI GenAI Service (some of its features are also available with the always-free tier).

The goal is to use GenAI capabilities directly in our code. We provide SDKs and sample code for several programming languages, including Java and Python.

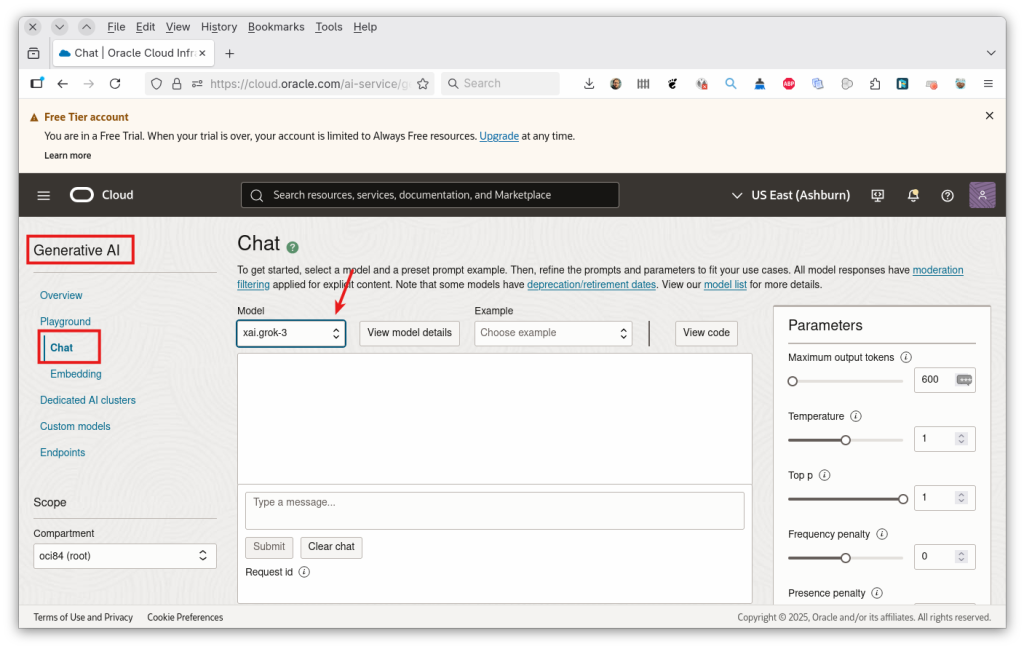

From the OCI console, we go to the GenAI section like this:

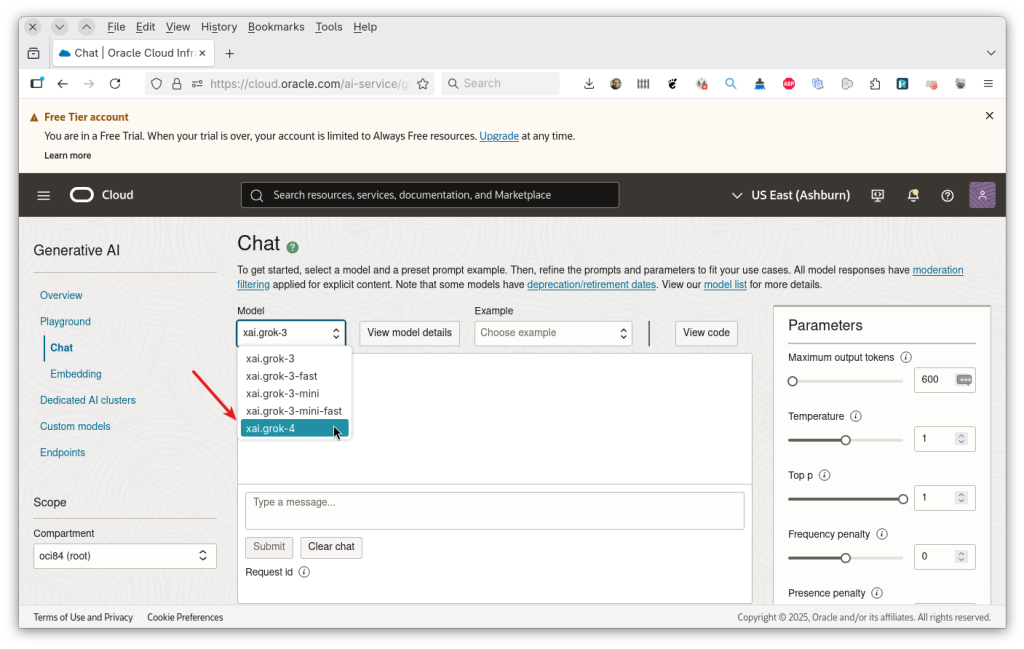

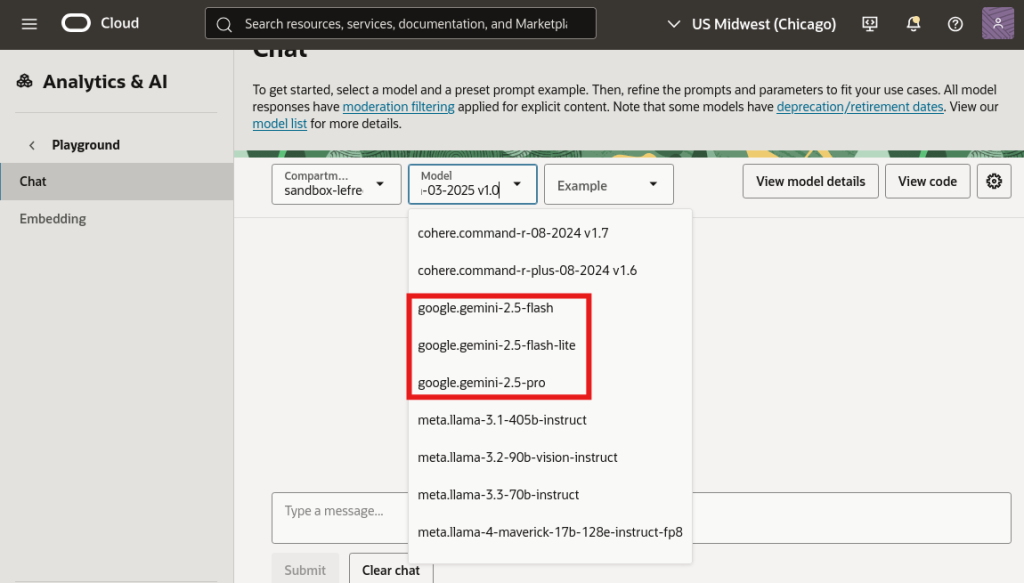

Different models are available depending on your tenancy’s region:

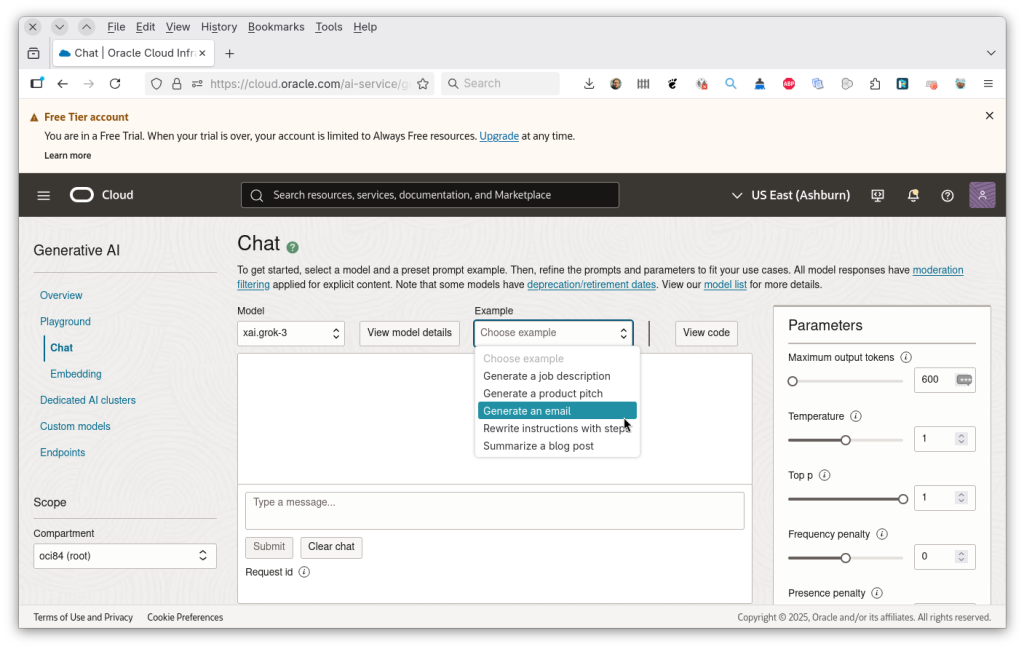

Example prompts are provided, or you can leave the prompt blank and write your own:

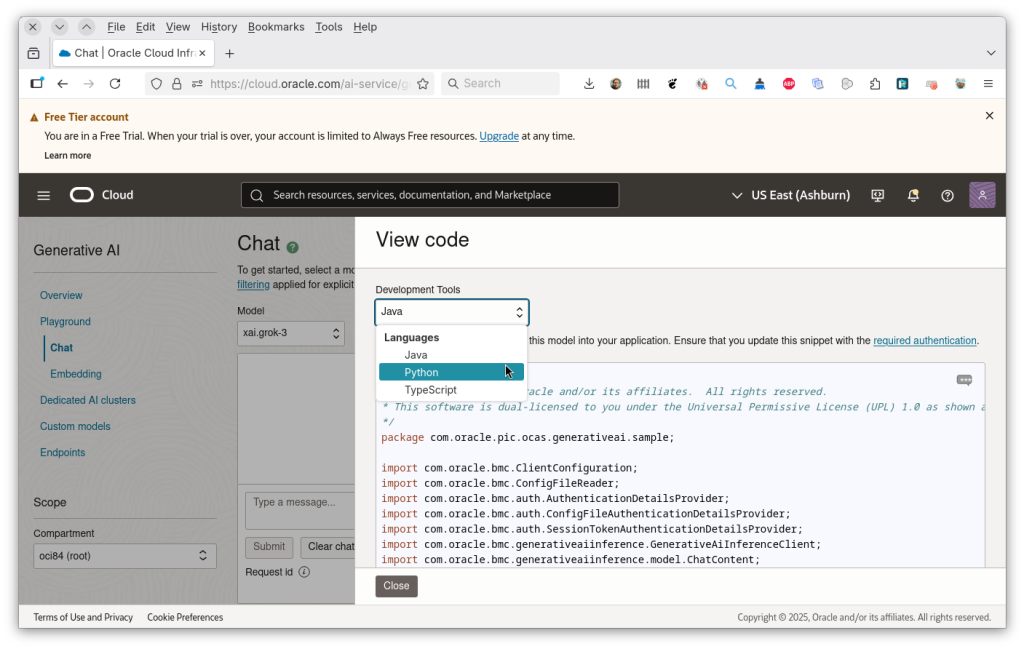

And we can access the code to use for the programming language of our choice:

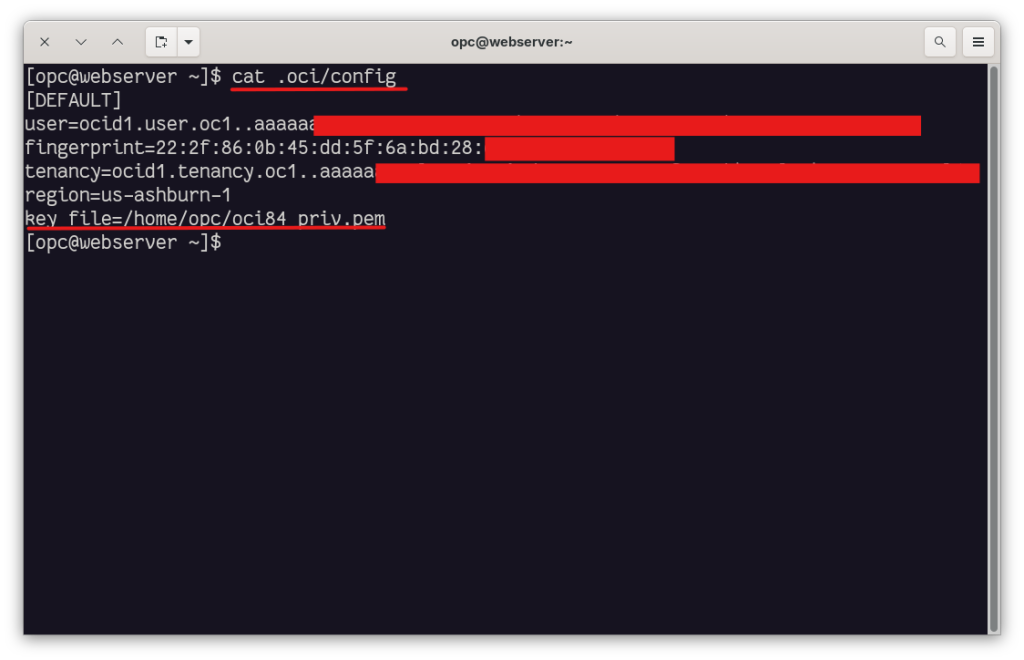

To be able to use these examples, you need to fill in your OCI configuration file (~/.oci/config) with your account settings (the same as we did in MySQL Shell for Visual Studio Code, but this time on our compute instance):

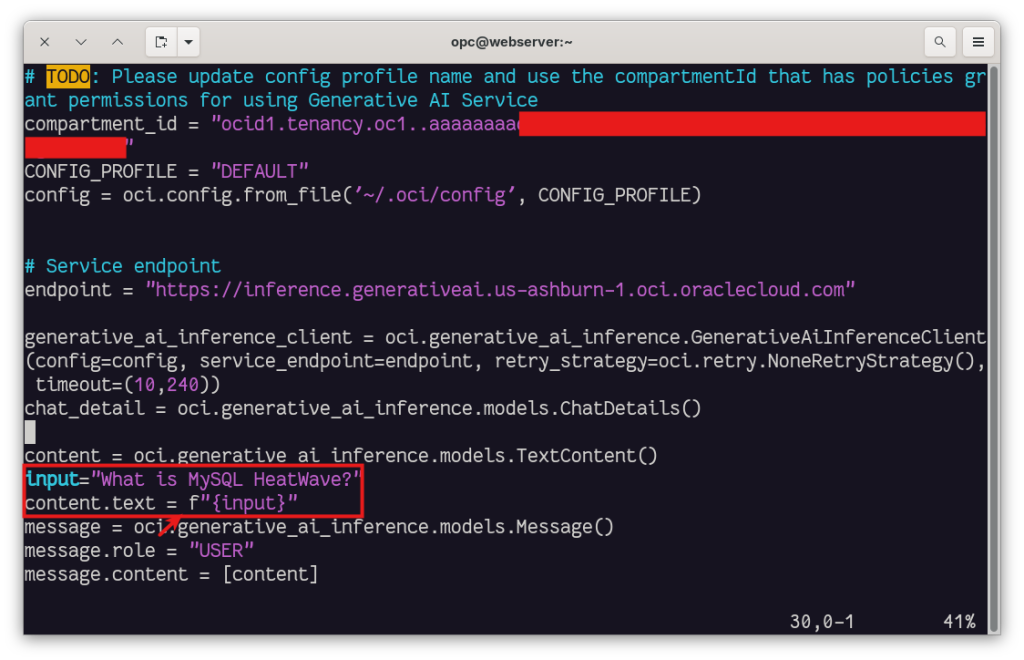
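Before running any SDK code, it can help to check that the configuration file is complete. The following is a minimal sketch using only the Python standard library, assuming an API-key based profile with the usual `user`, `fingerprint`, `tenancy`, `region`, and `key_file` entries. The `missing_keys` helper and the sample values are hypothetical and only serve as an illustration.

```python
import configparser
from pathlib import Path
from typing import List

# Keys an API-key based profile normally contains (assumption: no token-based auth).
REQUIRED_KEYS = ("user", "fingerprint", "tenancy", "region", "key_file")


def missing_keys(config_text: str, profile: str = "DEFAULT") -> List[str]:
    """Return the required keys that are absent or empty in the given profile."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    if profile != "DEFAULT" and not parser.has_section(profile):
        return list(REQUIRED_KEYS)
    section = parser[profile]
    return [key for key in REQUIRED_KEYS if not section.get(key)]


# Placeholder values for illustration only; key_file is left out on purpose.
SAMPLE = """
[DEFAULT]
user=ocid1.user.oc1..example
fingerprint=aa:bb:cc:dd
tenancy=ocid1.tenancy.oc1..example
region=us-chicago-1
"""

if __name__ == "__main__":
    print("sample is missing:", missing_keys(SAMPLE))
    path = Path.home() / ".oci" / "config"
    if path.exists():
        print("your config is missing:", missing_keys(path.read_text()))
```

An empty list means the profile has every entry the check looks for; it does not verify that the values themselves are valid.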

We copied the Python code and modified it slightly to add our input question:

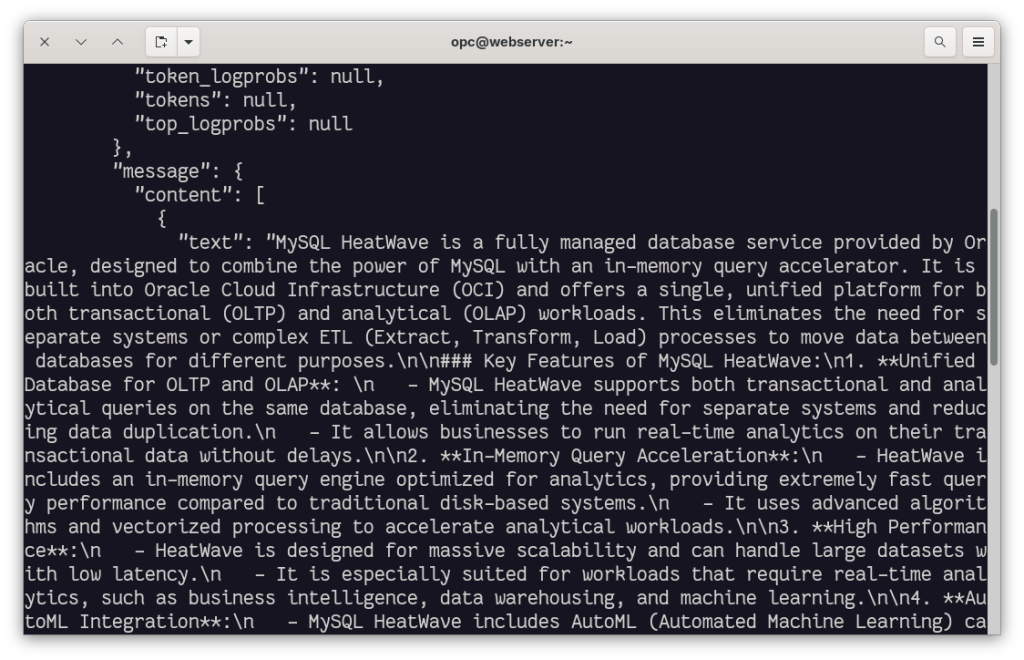
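For readers who cannot make out the screenshot, here is a rough sketch of what such a script can look like. It is not the exact code generated by the console: it assumes the `oci` Python SDK is installed, that the chosen model uses the generic chat format, and that the region is `us-chicago-1`. The `ask` and `inference_endpoint` helpers, the environment variable names, and the sample question are hypothetical; the compartment and model identifiers must come from your own tenancy.

```python
import os


def inference_endpoint(region: str) -> str:
    """Build the regional GenAI inference endpoint URL."""
    return f"https://inference.generativeai.{region}.oci.oraclecloud.com"


def ask(question: str, compartment_id: str, model_id: str,
        region: str = "us-chicago-1", profile: str = "DEFAULT") -> str:
    """Send one user message to an on-demand chat model and return the reply text."""
    import oci  # imported lazily so the helper above works without the SDK

    models = oci.generative_ai_inference.models
    config = oci.config.from_file("~/.oci/config", profile)
    client = oci.generative_ai_inference.GenerativeAiInferenceClient(
        config=config,
        service_endpoint=inference_endpoint(region),
    )

    # Wrap the question in a single user message.
    content = models.TextContent()
    content.text = question
    message = models.Message()
    message.role = "USER"
    message.content = [content]

    # Generic chat format; Cohere models use a different request type.
    chat_request = models.GenericChatRequest()
    chat_request.api_format = models.BaseChatRequest.API_FORMAT_GENERIC
    chat_request.messages = [message]
    chat_request.max_tokens = 600
    chat_request.temperature = 1

    chat_detail = models.ChatDetails()
    chat_detail.compartment_id = compartment_id
    chat_detail.serving_mode = models.OnDemandServingMode(model_id=model_id)
    chat_detail.chat_request = chat_request

    response = client.chat(chat_detail)
    return response.data.chat_response.choices[0].message.content[0].text


if __name__ == "__main__":
    # Hypothetical environment variable names, used here to avoid hard-coding OCIDs.
    compartment = os.environ.get("OCI_COMPARTMENT_ID")
    model = os.environ.get("OCI_GENAI_MODEL_ID")
    if compartment and model:
        print(ask("What is Oracle Cloud Infrastructure?", compartment, model))
```

Keeping the question as a function argument means the same script can be reused for other prompts without touching the request-building code.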

We can now execute this script:

[opc@webserver ~]$ python demo.py

Let’s see the complete steps in the following video:

Google Gemini

We recently added the Gemini 2.5 models in the US regions.

The “2.5” generation introduces “thinking”/reasoning capabilities: rather than just producing the most likely next words, Gemini 2.5 attempts to reason “step-by-step” internally before returning a response. This helps with more complex reasoning tasks.

Gemini 2.5 is multimodal, meaning it accepts not just text but also images, audio, video, and code as input (depending on the variant).

The 2.5 series offers a large context window, which makes it capable of processing large documents, long conversations, or extensive codebases.

According to Google, 2.5 is “the most intelligent” Gemini generation to date, showing state-of-the-art performance in reasoning, coding, science/math, and general multimodal tasks.

You can now use Gemini 2.5 models easily within OCI GenAI:

Conclusion

As you can see, it’s very easy to use the GenAI service from your code.

OCI Generative AI provides access to recent large and powerful LLMs, such as Gemini 2.5.

If you need more advanced LLMs with capabilities such as reranking, you can also deploy your own dedicated AI cluster.