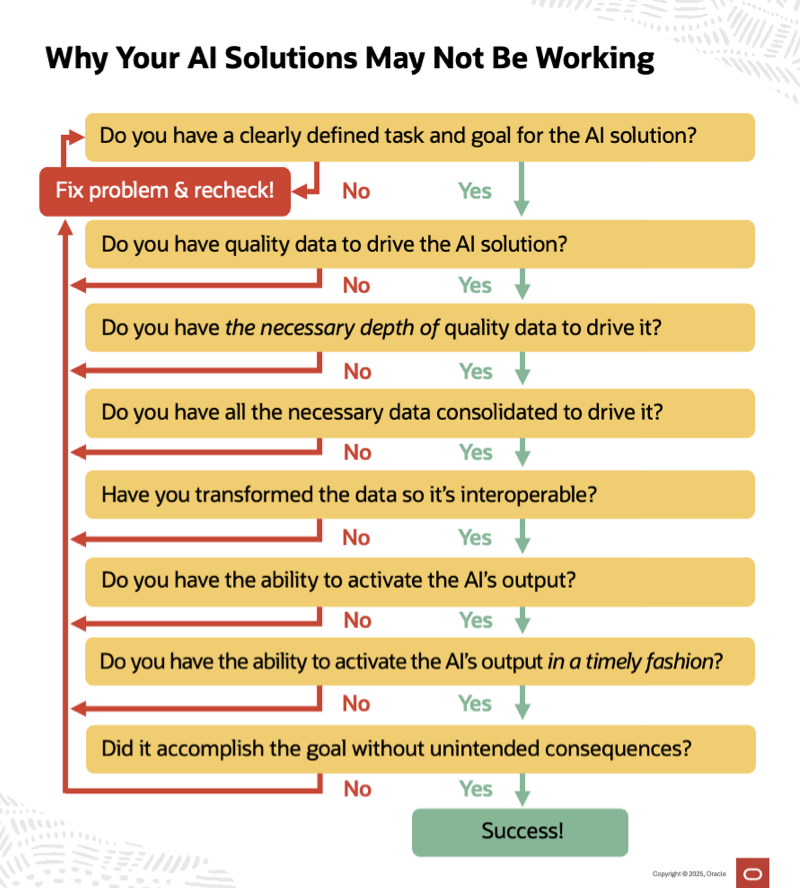

Every organization is excited about AI's potential to improve its operations, but many are finding it difficult to make AI at scale a reality. If your brand isn't getting the results it expected from AI, answering the following questions can help you identify the most common reasons AI initiatives fail.

Do you have a clearly defined task and goal for the AI solution?

When crafting use cases for AI, it’s critical to understand the relationship between data, behaviors, and your goals. While some AI solutions can help you uncover relationships you weren’t aware of, in most cases you’ll need to understand those relationships from the beginning so you can be sure to provide the right data to the model.

Do you have quality data to drive the AI solution?

If you have unreliable, low-quality data, then the output of your AI solution will be similarly unreliable and low-quality. Or you might not have the data you need at all.

For example, we had a client that wanted to drive cross-sells using AI. However, they'd never cross-sold their products before and hadn't even cataloged their services. They also couldn't identify competing products, complementary products, or related products sold in bundles. So not only did they lack any data for the AI solution to learn from, they also lacked a data structure or strategy for collecting it. To make an AI solution feasible, they first had to put a strategy in place to try a variety of cross-selling approaches and collect the resulting data in a form the AI solution could learn from.

Do you have the necessary depth of quality data to drive the AI solution?

It’s not enough to have high-quality data. You generally need lots of it.

For instance, if you're using AI to drive seasonal product promotions but only have data for the past 12 months, the model has seen each season only once. Its output will be heavily biased toward last year's buying patterns, which may or may not be representative of longer-term trends.
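
A quick way to gauge depth before training a seasonal model is to count how many distinct years of history cover each calendar month. The sketch below assumes order dates are available as a plain list of `date` objects; the function names and the two-cycle minimum are hypothetical choices for illustration, not an industry standard.

```python
from collections import defaultdict
from datetime import date


def seasonal_coverage(order_dates: list[date]) -> dict[int, int]:
    """Return, for each calendar month (1-12), how many distinct years have orders in it."""
    years_by_month: dict[int, set[int]] = defaultdict(set)
    for d in order_dates:
        years_by_month[d.month].add(d.year)
    # Months with no orders at all report 0 years of coverage.
    return {month: len(years_by_month[month]) for month in range(1, 13)}


def thin_months(order_dates: list[date], min_cycles: int = 2) -> list[int]:
    """List months observed in fewer than `min_cycles` distinct years (hypothetical threshold)."""
    coverage = seasonal_coverage(order_dates)
    return [month for month, cycles in coverage.items() if cycles < min_cycles]


# Twelve months of history: every calendar month appears in exactly one year.
history = [date(2023, m, 15) for m in range(7, 13)] + [date(2024, m, 15) for m in range(1, 7)]
print(thin_months(history))  # → [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12]
```

With only one year of data, every month is flagged, which is exactly the bias described above: each season has been observed once.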

This is a snag that affects many AI applications that are supposed to work out of the box. For example, if a Next Best Offer model was built for the short sales cycles of the average retailer and you sell manufacturing equipment where sales cycles are over a year long, this model won’t function properly for you.

Do you have all the necessary data consolidated to drive the AI solution?

Your organization may have the right data, but it's trapped in various systems the AI solution can't access, or in systems that impose large usage fees for retrieving data every time an AI model needs rescoring. You'll want to assemble it all in one place where it can be collected, standardized, normalized, and managed for consistency, so the AI solution can act on it and draw correct conclusions from it.

In some cases, the operational platforms where this data is siloed charge per API call, which makes your AI output much more expensive. Addressing that may mean migrating to a platform with a more AI-friendly billing model, or to one that can push data onto a service bus where it can be accessed without impacting business-critical operations.

Have you transformed the data so it’s interoperable?

Different systems store the same information differently, so you'll need to transform your data so it all follows the same format and uses the same values. For example, several systems might each have a Status field but reuse certain values to mean different things.

Rather than forcing your AI's interpretation layer to constantly adjust for each system's usage patterns, use a centralized data platform to standardize the data before it's fed into the model, eliminating these issues at the source.
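
As a minimal sketch of that standardization step, assume two hypothetical source systems, "crm" and "billing", whose Status fields both use the value "A" but with different meanings. The mapping table and canonical values below are illustrative assumptions; in practice they would be maintained in your data platform rather than hard-coded.

```python
# Hypothetical per-system mappings from raw Status codes to one canonical vocabulary.
STATUS_MAP: dict[str, dict[str, str]] = {
    "crm": {"A": "active", "I": "inactive", "P": "prospect"},
    "billing": {"A": "past_due", "C": "current", "X": "cancelled"},
}


def normalize_status(source: str, raw_status: str) -> str:
    """Translate a source system's raw Status value into the canonical vocabulary.

    Raises KeyError for unknown systems or values, so unmapped data is caught
    before it reaches the model instead of being passed through silently.
    """
    try:
        return STATUS_MAP[source][raw_status.strip().upper()]
    except KeyError:
        raise KeyError(f"Unmapped status {raw_status!r} from system {source!r}") from None


records = [
    {"customer_id": 1, "source": "crm", "status": "A"},
    {"customer_id": 1, "source": "billing", "status": "A"},
]
for r in records:
    r["status"] = normalize_status(r["source"], r["status"])
print([r["status"] for r in records])  # → ['active', 'past_due']
```

The same raw "A" resolves to two different canonical values depending on its source, so the model only ever sees one consistent vocabulary.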

Do you have the ability to activate the AI’s output?

It's not enough to get high-quality output from an AI solution. You need to activate it. There are three primary destinations you might be passing that output along to.

First, you could be passing it to a sales rep, customer service rep, or other people who need to act on the intelligence. In these instances, make sure they understand what the output means and how to use it. That's much easier if the model's output is translated into everyday language and clear actions for the end user to take. This is an often-overlooked part of experience design that is critical to a successful launch.
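
To illustrate, a thin translation layer can turn a raw model score into a plain-language summary and a next step for a sales rep. The churn-risk score, the thresholds, and the wording below are all hypothetical; real cutoffs should come from validating your own model.

```python
from dataclasses import dataclass


@dataclass
class Guidance:
    summary: str  # what the output means, in everyday language
    action: str   # the concrete step the rep should take


def explain_churn_score(customer_name: str, score: float) -> Guidance:
    """Map a hypothetical churn-risk score in [0, 1] to plain-language guidance."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be between 0 and 1, got {score}")
    if score >= 0.7:  # hypothetical "high risk" threshold
        return Guidance(f"{customer_name} is at high risk of leaving.",
                        "Call this week and review their open support issues.")
    if score >= 0.4:  # hypothetical "medium risk" threshold
        return Guidance(f"{customer_name} shows some signs of disengagement.",
                        "Send a check-in email with relevant product tips.")
    return Guidance(f"{customer_name} looks stable.", "No action needed right now.")


print(explain_churn_score("Acme Corp", 0.82).action)
# → Call this week and review their open support issues.
```

Keeping the wording in one function also makes it easy for the people who use the output to review and refine the language.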

Second, you could be passing it to an AI agent, chatbot, marketing orchestration platform, inventory management system, or another system for activation. Make sure those systems are also properly interpreting and acting on the AI’s output.

And lastly, you could be passing it to another AI solution or set of AI solutions. This is often the case when solving more complex problems like managing cross-channel messaging or doing advanced personalization. In these instances, make sure that all of the involved AI systems are properly architected to work in concert to solve the problem.

Do you have the ability to activate the AI’s output in a timely fashion?

Some decisions don't need to be made within seconds of getting information, but some do. If one or more of your operational platforms can't support the high-concurrency, real-time data retrieval that some AI use cases require, that can be a major structural barrier.

This is where proper discovery and planning is again critical. If you take the time to define your use case and locate the systems that hold the data it needs, you'll uncover where you may face time constraints.

Did the AI solution accomplish the goal without unintended consequences?

Auditing AI is critical. Even if the AI solution clearly accomplished your goal, you'll want to check for unintended consequences, such as negative outcomes elsewhere in your business.

For example, we had a client who used AI to reduce email subscriber churn. The model quickly learned that the most churn came from new subscribers, so the model stopped emailing new subscribers. While the model achieved its goal of reducing churn, it did so at the cost of not engaging new subscribers. Obviously, that’s not a desirable outcome. The client had to go back and have the AI solution treat new subscribers and established subscribers differently.

In other cases, an AI solution may boost conversions for one product or service at the expense of others, so much so that overall revenue falls. Depending on the circumstances, that trade-off may or may not be acceptable.
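
One way to make this check routine is to compare the promoted product's results with a guardrail metric such as total revenue across all products. The sketch below uses made-up revenue figures and a hypothetical function name; it isn't a specific auditing tool, and real comparisons should use periods that control for seasonality.

```python
from typing import Optional


def audit_cannibalization(before: dict[str, float], after: dict[str, float],
                          promoted: str) -> Optional[str]:
    """Return a warning if the promoted product gained revenue while total revenue fell.

    `before` and `after` map product names to revenue for two comparable
    periods (hypothetical inputs).
    """
    if set(before) != set(after):
        raise ValueError("before and after must cover the same products")
    promoted_change = after[promoted] - before[promoted]
    total_change = sum(after.values()) - sum(before.values())
    if promoted_change > 0 and total_change < 0:
        return (f"{promoted} revenue rose by {promoted_change:,.0f} "
                f"but total revenue fell by {-total_change:,.0f}")
    return None


# Illustrative numbers only.
before = {"product_a": 100_000, "product_b": 80_000, "product_c": 60_000}
after = {"product_a": 130_000, "product_b": 55_000, "product_c": 45_000}
print(audit_cannibalization(before, after, "product_a"))
# → product_a revenue rose by 30,000 but total revenue fell by 10,000
```

The same pattern of pairing the model's target metric with a guardrail metric applies to the churn example above, where the guardrail would be engagement among new subscribers.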

Auditing over time is also important, because the model may fall out of sync with your customers' behaviors or your business operations.
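
A simple form of ongoing auditing is to track a model performance metric each period and flag periods where it falls meaningfully below the level measured at launch. The metric (precision), the 10% relative tolerance, and the function name below are hypothetical choices for illustration.

```python
def periods_needing_review(baseline: float, history: list[tuple[str, float]],
                           tolerance: float = 0.10) -> list[str]:
    """Return the periods whose metric fell more than `tolerance` (relative) below baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    floor = baseline * (1 - tolerance)
    return [period for period, value in history if value < floor]


# Illustrative monthly precision of a deployed model; 0.61 was measured at launch.
monthly_precision = [("2024-01", 0.62), ("2024-02", 0.60), ("2024-03", 0.51), ("2024-04", 0.48)]
print(periods_needing_review(0.61, monthly_precision))  # → ['2024-03', '2024-04']
```

A flagged period doesn't say why performance dropped, only that someone should check whether customer behavior or business operations have shifted.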

* * *

If you answered No to any of those questions, you've likely identified why your AI solution isn't delivering the results you expected. Even better, if you're asking these questions before implementing an AI solution, you've proactively identified pitfalls to avoid.

—————

Need help getting the most out of your AI solutions? Oracle Digital Experience Agency has hundreds of marketing and communication experts ready to help Responsys, Eloqua, Unity, and other Oracle customers create stronger connections with their customers and employees, even if they're not using an Oracle platform as the foundation of that experience. Our clients are thrilled with the award-winning work our creative, strategy, and other specialists do for them, giving us a 94% satisfaction rate and an outstanding NPS of 82.

For help overcoming your challenges or seizing your opportunities, talk to your Oracle account manager, visit us online, or email us at OracleAgency_US@Oracle.com.

To stay up to date on customer experience best practices and news, subscribe to Oracle Digital Experience Agency's award-winning, twice-monthly newsletter.