An existing Oracle Database installation can be integrated into a Kubernetes cluster as a native resource with a freely available open-source extension called OraOperator. This fits the database more naturally into modern DevOps practice and makes it a visible part of a Continuous Delivery approach. In this article, we show how such an integration can work and briefly describe which further options OraOperator offers, such as simplified operation of the Oracle Database inside the Kubernetes cluster.

The Oracle Operator for Kubernetes, or OraOperator, supports multiple flavors and modes of operation of an Oracle Database. For example, autonomous databases can be created and addressed in the Oracle Cloud, and Oracle databases with and without sharding can be created and operated within the Kubernetes cluster. Oracle Databases installed in any way on commodity hardware or Exadata systems can also be included and referenced. Since the latter case is probably the most common scenario, we describe this path in more detail below.

The OraOperator documentation on github.com explains this path in the chapter “Oracle Multitenant Database Controller”. We present it here slightly rearranged and explained differently, taking into account newer versions and features: OraOperator version 0.2.1 and Oracle REST Data Services (ORDS) version 23.1.

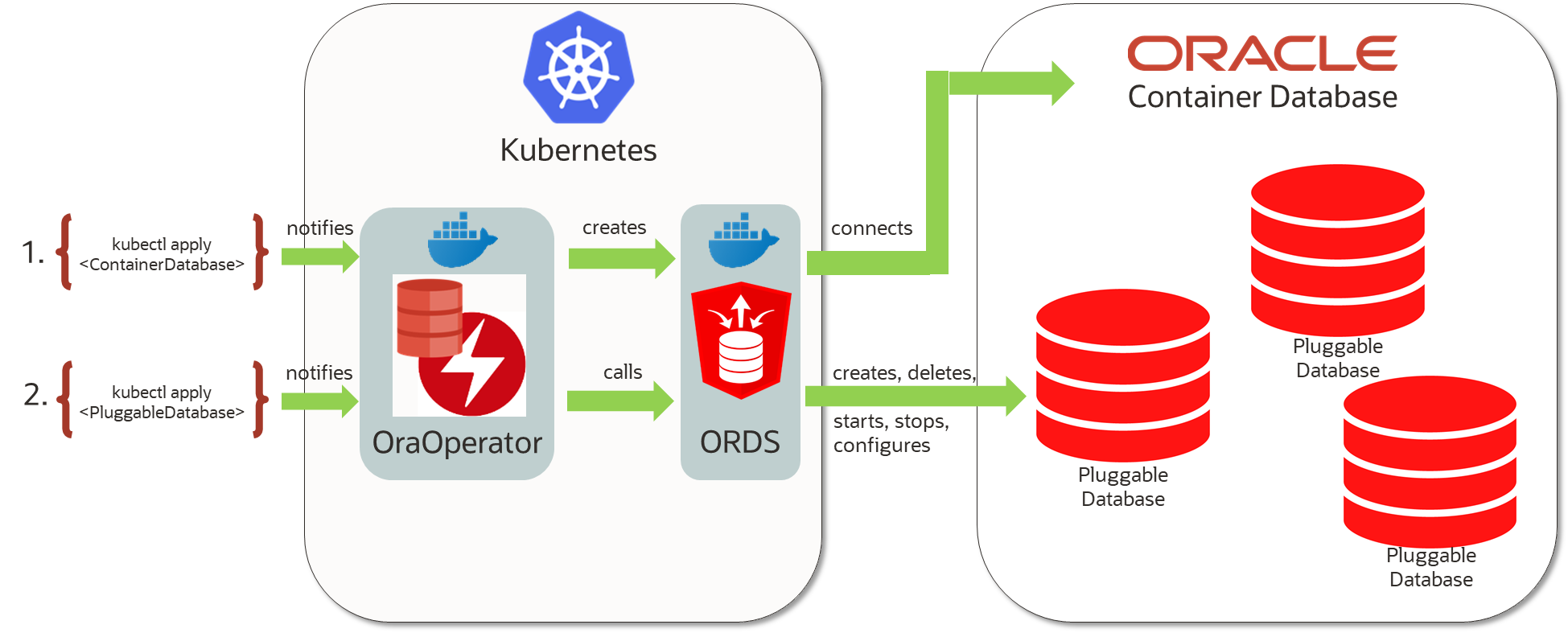

Functionality of OraOperator for Multitenant Databases (also works with only one tenant):

- A Kubernetes resource to be formulated in YAML format describes the access to a container database (CDB). The Kubernetes resource is characteristically of the type “ContainerDatabase” or “CDB” for short.

- When the resource is created (e.g. using the “kubectl apply” command), the OraOperator configures, links and starts a container with included Oracle REST Data Services (ORDS) for managing pluggable databases via a REST API.

- A Kubernetes resource to be formulated in YAML format describes a pluggable database (PDB) to be included, cloned or newly created. The Container Database (CDB) to be addressed for this purpose is referenced by name in the description. The Kubernetes resource is characteristically of the type “PluggableDatabase” or “PDB” for short.

- When the resource is created (“kubectl apply”), the OraOperator addresses the ORDS container and invokes the REST services necessary for cloning, creation, deletion, startup and shutdown. The status of the Kubernetes resource is updated accordingly and can be queried (“kubectl get pdb <pdbname>”).

OraOperator setup, linking container and pluggable database in Kubernetes.

1. Installation of the OraOperator

2. Creation and storage of an ORDS container image for the OraOperator

3. Preparation of the Container Database

4. “Security measures” for accessing the CDB in the Kubernetes cluster

5. Linking the Kubernetes cluster with the Container Database

6. Creating, stopping and deleting a Pluggable Database

7. Including both database and application in a tool for Continuous Deployment

8. Outlook: is there still more to come?

1. Installation of the OraOperator

The OraOperator software can be installed in an existing Kubernetes cluster with two short commands.

For the management of SSL certificates for the internal communication of the OraOperator, the component “cert-manager” should be installed first. If a container management platform such as Oracle Verrazzano is already installed, the cert-manager is already present and does not need to be taken into account.

$ kubectl apply -f https://github.com/jetstack/cert-manager/releases/latest/download/cert-manager.yaml

Next comes the command for a fresh installation (and also for an update) of the OraOperator software itself:

$ kubectl apply -f https://raw.githubusercontent.com/oracle/oracle-database-operator/main/oracle-database-operator.yaml

After the installation is complete, a new namespace oracle-database-operator-system should exist in the Kubernetes cluster, containing multiple pods of the same deployment oracle-database-operator-controller-manager. This can be checked as follows:

$ kubectl get pods -n oracle-database-operator-system
NAME                                                          READY   STATUS    RESTARTS      AGE
oracle-database-operator-controller-manager-775bb46fd-6smq7   1/1     Running   5 (24h ago)   33d
oracle-database-operator-controller-manager-775bb46fd-mkhxw   1/1     Running   6 (24h ago)   33d
oracle-database-operator-controller-manager-775bb46fd-wjxdn   1/1     Running   4 (24h ago)   33d
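Instead of checking the pod list by eye, the same check can be scripted. The following is only a sketch under some assumptions: cert-manager was installed from the manifest above into its default namespace cert-manager (this may differ if a platform such as Verrazzano provides it), and the function name and timeout values are our own illustrative choices.

```shell
# Sketch: block until cert-manager and the OraOperator deployment are rolled out.
# The function name and the timeouts are illustrative, not part of OraOperator.

OPERATOR_NS="oracle-database-operator-system"
OPERATOR_DEPLOYMENT="oracle-database-operator-controller-manager"

wait_for_operator() {
  # Assumption: cert-manager lives in the namespace "cert-manager".
  kubectl wait --for=condition=Available deployment --all \
    -n cert-manager --timeout=180s || return 1

  # "rollout status" returns non-zero if the deployment is not ready in time.
  kubectl rollout status "deployment/${OPERATOR_DEPLOYMENT}" \
    -n "${OPERATOR_NS}" --timeout=180s
}

# Usage:
#   wait_for_operator && echo "OraOperator is ready"
```

Such a function is handy as the first step of an installation script, so that the following steps only run once the operator can actually react to new resources.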

2. Creation and storage of an ORDS container image for the OraOperator

The downloadable container image for ORDS at container-registry.oracle.com is not sufficient for use with OraOperator. ORDS must be set up with some additional settings, for example enabling the REST management API for databases, setting up a database administration user in the integrated HTTP server, and setting up internal SSL communication. For this purpose, the GitHub pages of OraOperator provide a customized ORDS startup script and a corresponding Dockerfile to create the image. Please download the two files, e.g. on a Linux system with “docker” or “podman” preinstalled:

$ wget https://raw.githubusercontent.com/oracle/oracle-database-operator/main/ords/Dockerfile
$ wget https://raw.githubusercontent.com/oracle/oracle-database-operator/main/ords/runOrdsSSL.sh

The Dockerfile uses a container image pre-installed with Oracle Java as a base. If you want to use this, you do not need to customize the Dockerfile, but you should first log in to container-registry.oracle.com with a browser, click on the “Java” section and accept the software terms for the Java containers. Then please log in to container-registry.oracle.com with the same user via the “docker” or “podman” command (“podman login container-registry.oracle.com”).

Once this step is done, you can now create the container image and upload it to a write-authorized container registry of your choice, for example to docker.io:

$ podman build -t docker.io/meinbenutzer/pub_ordsrestmgmt:23.1 .
$ podman push docker.io/meinbenutzer/pub_ordsrestmgmt:23.1

A prepared image for pure testing purposes is currently available in the Oracle Cloud under the designation fra.ocir.io/frul1g8cgfam/pub_ordsrestmgmt:23.1.

3. Preparation of the Container Database

For the management of pluggable databases to work, the ORDS installation must connect directly to the container database and NOT to one of the managed pluggable databases. If at least one of the pluggable databases has its own, i.e. local, installation of the ORDS schema ORDS_PUBLIC_USER, the setup of the ORDS for managing pluggable databases will fail. Please use only a container database that has ORDS_PUBLIC_USER set up at the container level, or one without it entirely. The ORDS installation sets up or updates the database schema accordingly.

Further, create an extensively authorized database user capable of editing and querying Pluggable Databases. Typically the name C##DBAPI_CDB_ADMIN is used. The ORDS container will log on to the container database with this user. To create the power user C##DBAPI_CDB_ADMIN, please issue the following SQL commands against your container database:

SQL> conn /as sysdba

-- Create the below user at the database level:

ALTER SESSION SET "_oracle_script"=true;

DROP USER C##DBAPI_CDB_ADMIN cascade;

CREATE USER C##DBAPI_CDB_ADMIN IDENTIFIED BY <Password> CONTAINER=ALL ACCOUNT UNLOCK;

GRANT SYSOPER TO C##DBAPI_CDB_ADMIN CONTAINER = ALL;

GRANT SYSDBA TO C##DBAPI_CDB_ADMIN CONTAINER = ALL;

GRANT CREATE SESSION TO C##DBAPI_CDB_ADMIN CONTAINER = ALL;

-- Verify the account status of the below usernames. They should not be in locked status.

-- Missing ORDS_PUBLIC_USER is OK, will be created when running ORDS the first time.

col username for a30

col account_status for a30

select username, account_status from dba_users where username in ('ORDS_PUBLIC_USER','C##DBAPI_CDB_ADMIN','APEX_PUBLIC_USER','APEX_REST_PUBLIC_USER');

The database user is later automatically linked in ORDS to a different user of the HTTP server, for example sql_admin. This user must have the application role SQL Administrator for this purpose. This assignment is also done later automatically when the ORDS container is started for the first time.

4. “Security measures” for accessing the CDB in the Kubernetes cluster

The existing usernames and passwords of the container database as well as the desired username and password of the ORDS HTTP user must first be stored in a Kubernetes “Secret” so that they can be picked up by the OraOperator. Usernames and passwords are BASE64-encoded values. A corresponding YAML file with passwords in it, e.g. “cdb_secret.yaml”, could look like this:

apiVersion: v1
kind: Secret
metadata:
  name: cdb19c-secret
  namespace: oracle-database-operator-system
type: Opaque
data:
  # pwd for ORDS_PUBLIC_USER
  ords_pwd: "yadayadayumyum"
  # pwd for SYS AS SYSDBA
  sysadmin_pwd: "yadayadayumyum"
  # CDB Admin User, e.g. C##DBAPI_CDB_ADMIN
  cdbadmin_user: "QyMjREJBUElfQ0RCX0FETUlO"
  # pwd for cdbadmin_user
  cdbadmin_pwd: "yadayadayumyum"
  # HTTP user with "SQL Administrator" role, e.g. sql_admin
  webserver_user: "c3FsX2FkbWlu"
  # pwd for HTTP user
  webserver_pwd: "yadayadayumyum"

The values you still have to enter for the fields can be obtained, for example, by encoding them with the “base64” tool under Linux:

$ echo -n "C##DBAPI_CDB_ADMIN" | base64 ; echo
QyMjREJBUElfQ0RCX0FETUlO

A namespace other than the oracle-database-operator-system used here would also be conceivable for the Secret and for the resources that follow. For our example, however, we will stick with this one. Please now create the resource of type “Secret” in Kubernetes:

$ kubectl apply -f cdb_secret.yaml
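Encoding every value by hand is error-prone, so a few small helpers can be useful. The sketch below shows one function for encoding, one for decoding as a cross-check, and an alternative that lets kubectl do the encoding through its --from-literal option. The function names and the argument order are hypothetical; the key names are the ones used in cdb_secret.yaml above.

```shell
# Encode a value for the "data:" section of a Secret (no trailing newline,
# no line wrapping for long values).
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Decode a value again to double-check what a manifest really contains.
unb64() {
  printf '%s' "$1" | base64 --decode
}

# Alternative: let kubectl encode the values. This only prints the manifest
# (--dry-run=client); pipe it into "kubectl apply -f -" to create the Secret.
# Arguments: CDB admin user, CDB admin password, HTTP user, HTTP password,
#            ORDS_PUBLIC_USER password, SYS password
render_cdb_secret() {
  kubectl create secret generic cdb19c-secret \
    -n oracle-database-operator-system \
    --from-literal=cdbadmin_user="$1" \
    --from-literal=cdbadmin_pwd="$2" \
    --from-literal=webserver_user="$3" \
    --from-literal=webserver_pwd="$4" \
    --from-literal=ords_pwd="$5" \
    --from-literal=sysadmin_pwd="$6" \
    --dry-run=client -o yaml
}

# Usage:
#   b64 'C##DBAPI_CDB_ADMIN'      # prints QyMjREJBUElfQ0RCX0FETUlO
#   unb64 'c3FsX2FkbWlu'          # prints sql_admin
```

Note that passwords given as command-line arguments may end up in the shell history, so for anything beyond a test setup it is probably better to read them from a protected file.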

In the next step, two certificates are generated for the internal communication of OraOperator with the ORDS container and also stored as Kubernetes “Secret”. The self-signed certificates used here should be fully sufficient, since ORDS is not addressed outside the Kubernetes cluster. Communication normally takes place only between the OraOperator and the ORDS inside the cluster.

Please note that the server name for which the certificate is issued must be the same as the name of the ContainerDatabase resource yet to be created, with the addition of “-ords”. In our example the ContainerDatabase resource will have the name “cdb19c”, so the certificate must be issued to a server named cdb19c-ords. This is a special feature of ORDS from version 22.4 on: from this version on, the network stack of the HTTP server checks whether the called server name matches the one in the installed certificate. If not, an error message of the type “Invalid SNI” is returned and the REST services cannot be called.

$ openssl genrsa -out ca.key 2048
$ openssl req -new -x509 -days 365 -key ca.key -subj "/C=CN/ST=GD/L=SZ/O=oracle, Inc./CN=oracle Root CA" -out ca.crt
$ openssl req -newkey rsa:2048 -nodes -keyout tls.key -subj "/C=CN/ST=GD/L=SZ/O=oracle, Inc./CN=cdb19c-ords" -out server.csr
$ /usr/bin/echo "subjectAltName=DNS:cdb19c-ords,DNS:www.example.com" > extfile.txt
$ openssl x509 -req -extfile extfile.txt -days 365 -in server.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out tls.crt
$ kubectl create secret tls db-tls --key="tls.key" --cert="tls.crt" -n oracle-database-operator-system
$ kubectl create secret generic db-ca --from-file=ca.crt -n oracle-database-operator-system

So much for the security measures. In the next part we will connect everything together.
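Because a wrong server name in the certificate only shows up later as an “Invalid SNI” error, it can be worth checking the generated files right away. The following sketch uses two standard openssl subcommands; the function names are our own, and the file names tls.crt and ca.crt are the ones created above.

```shell
# Succeeds if certificate file $1 lists DNS name $2 as a subject alternative name.
cert_has_dns_name() {
  openssl x509 -in "$1" -noout -text \
    | grep -Eq "DNS:$2(,|[[:space:]]|\$)"
}

# Succeeds if certificate $2 was signed by the CA certificate $1.
cert_signed_by() {
  openssl verify -CAfile "$1" "$2" >/dev/null
}

# Usage:
#   cert_has_dns_name tls.crt cdb19c-ords && echo "server name ok"
#   cert_signed_by ca.crt tls.crt && echo "signature ok"
```

If the first check fails, the certificate should be regenerated with the correct name before the Secrets are created, since the ORDS container reads the certificate from them at startup.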

5. Linking the Kubernetes cluster with the Container Database

Now all the previous tasks come together: we create a Kubernetes resource of type CDB and specify in it everything that has been created so far: ORDS container image name, CDB resource name, usernames and passwords from one Kubernetes Secret, and SSL certificates from another Kubernetes Secret. The descriptive YAML file cdb.yaml might look like below – please adjust your server names, ports, container image and database service according to your environment. The passwords and usernames are dynamically read from the Kubernetes Secrets you defined in the previous step. Please note the respective names of your self-created Secrets there.

apiVersion: database.oracle.com/v1alpha1
kind: CDB
metadata:
  name: cdb19c
  namespace: oracle-database-operator-system
spec:
  cdbName: "DB19c"
  dbServer: "130.61.106.99"
  dbPort: 1521
  replicas: 1
  ordsImage: "fra.ocir.io/frul1g8cgfam/pub_ordsrestmgmt:23.1"
  ordsImagePullPolicy: "Always"
  # Uncomment Below Secret Format for accessing ords image from private docker registry
  # ordsImagePullSecret: ""
  serviceName: "DB19c_fra1gq.publicdevnet.k8snet.oraclevcn.com"
  sysAdminPwd:
    secret:
      secretName: "cdb19c-secret"
      key: "sysadmin_pwd"
  ordsPwd:
    secret:
      secretName: "cdb19c-secret"
      key: "ords_pwd"
  cdbAdminUser:
    secret:
      secretName: "cdb19c-secret"
      key: "cdbadmin_user"
  cdbAdminPwd:
    secret:
      secretName: "cdb19c-secret"
      key: "cdbadmin_pwd"
  webServerUser:
    secret:
      secretName: "cdb19c-secret"
      key: "webserver_user"
  webServerPwd:
    secret:
      secretName: "cdb19c-secret"
      key: "webserver_pwd"
  cdbTlsCrt:
    secret:
      secretName: "db-tls"
      key: "tls.crt"
  cdbTlsKey:
    secret:
      secretName: "db-tls"
      key: "tls.key"

Now you can use “kubectl apply -f cdb.yaml” to create the resource in Kubernetes, and the OraOperator should then do its work to configure and start an ORDS container. You can check whether this worked in several places. After about 20 seconds, there should be a pod whose name starts with cdb19c-ords next to the pods of the OraOperator, provided you named your CDB resource cdb19c:

$ kubectl get pods -n oracle-database-operator-system
NAME                                                          READY   STATUS    RESTARTS      AGE
cdb19c-ords-rs-ftd7b                                          1/1     Running   0             43h
oracle-database-operator-controller-manager-775bb46fd-6smq7   1/1     Running   5 (42h ago)   33d
oracle-database-operator-controller-manager-775bb46fd-mkhxw   1/1     Running   6 (42h ago)   33d
oracle-database-operator-controller-manager-775bb46fd-wjxdn   1/1     Running   4 (42h ago)   33d

Please also have a look at the log entries of the container, which prints its configuration in quite some detail and also gives information about database connectivity.

$ kubectl logs cdb19c-ords-rs-ftd7b -n oracle-database-operator-system
...
...
jdbc.InitialLimit=50
db.connectionType=basic
java.class.version=64.0
standalone.access.log=/home/oracle
2023-05-22T11:31:57.453Z INFO Mapped local pools from /etc/ords/config/databases: /ords/ => default => VALID
2023-05-22T11:31:57.578Z INFO Oracle REST Data Services initialized
Oracle REST Data Services version : 23.1.2.r1151944
Oracle REST Data Services server info: jetty/10.0.12
Oracle REST Data Services java info: Java HotSpot(TM) 64-Bit Server VM 20.0.1+9-29
2023-05-22T11:31:58.201Z INFO handleException /ords/_/db-api/stable/metadata-catalog/ org.eclipse.jetty.http.BadMessageException: 400: Invalid SNI

If you also see an error message of the “Invalid SNI” type, as in our environment, it is not a problem at this exact point: the container makes a test call to itself to determine whether the REST services respond at all, and only the server name check of that call fails. Unfortunately, this test call is made to a server named “localhost”, which is not in the SSL certificate.

To test the database access and to see if the correct server name is in the certificate, it is best to look directly into the pod. Connect to it with a shell and call a test URL. The test URL used here should give you a list of all PDBs in JSON format that already exist in your CDB:

$ kubectl exec -n oracle-database-operator-system -it cdb19c-ords-rs-ftd7b -- /bin/bash

[oracle@cdb19c-ords-rs-ftd7b ~]$ curl --user sql_admin https://cdb19c-ords:8888/ords/_/db-api/stable/database/pdbs/ --insecure

Enter host password for user 'sql_admin':

{"items":[{"inst_id":1,"con_id":2,"dbid":3834124154,"con_uid":3834124154,"guid":"5LG5f2Oe3DvgUzABCgrhSQ==","name":"PDB$SEED","open_mode":"READ ONLY","restricted":"NO","open_time":"2023-05-22T08:52:16.037Z","create_scn":2543153,"total_size":1092616192,"block_size":8192,"recovery_status":"ENABLED","snapshot_parent_con_id":null,"application_root":"NO","application_pdb":"NO","application_seed":"NO","application_root_con_id":null,"application_root_clone":"NO","proxy_pdb":"NO","local_undo":1,"undo_scn":268,"undo_timestamp":null,"creation_time":"2022-07-26T08:02:41Z","diagnostics_size":0,"pdb_count":0,"audit_files_size":0,"max_size":0,"max_diagnostics_size":0,"max_audit_size":0,"last_changed_by":"COMMON USER","template":"NO","tenant_id":null,"upgrade_level":1,"guid_base64":"5LG5f2Oe3DvgUzABCgrhSQA=","links":[{"rel":"self","href":"https://cdb19c-ords:8888/ords/_/db-api/stable/database/pdbs/PDB%24SEED/"}]},

...etc...

The --insecure parameter is required because only self-signed certificates are used. The HTTP user sql_admin has the name that you stored in the Kubernetes Secret in the previous step, so it may be called something other than sql_admin in your system.

The OraOperator containers should also have generated some log output when trying to create the resource and the pod. Quite common problems like failed attempts to download and start the container image (pod status CrashLoopBackOff, more information via “kubectl get events -n oracle-database-operator-system”) are not discussed further here.
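For repeated checks, the interactive session can be replaced by a single call from outside the pod. The sketch below assumes that curl is available in the ORDS container (as in the session shown above) and that the response is compact JSON like the excerpt above; the function names are hypothetical. Plain grep and cut are used so that no JSON tool is required.

```shell
# Print the PDB names found in an ORDS "pdbs" response read from stdin.
# This relies on the compact '"name":"..."' layout of the response.
pdb_names() {
  grep -o '"name":"[^"]*"' | cut -d'"' -f4
}

# Fetch the PDB list non-interactively through the ORDS pod.
# Arguments: pod name, HTTP user, HTTP password
list_pdbs() {
  kubectl exec -n oracle-database-operator-system "$1" -- \
    curl --silent --insecure --user "$2:$3" \
    "https://cdb19c-ords:8888/ords/_/db-api/stable/database/pdbs/" \
    | pdb_names
}

# Usage:
#   list_pdbs cdb19c-ords-rs-ftd7b sql_admin 'MyPassword'
```

Keep in mind that a password passed this way is visible in the process list for the duration of the call, so this form is better suited to test environments.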

Last but not least, Kubernetes will tell you if the new CDB resource was successfully created and started. Please check for this in the following way:

$ kubectl get cdb -n oracle-database-operator-system
NAME     CDB NAME   DB SERVER       DB PORT   TNS STRING   REPLICAS   STATUS   MESSAGE
cdb19c   DB19c      130.61.106.99   1521                   1          Ready
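In a script, the same check can be turned into a small polling loop. This is a sketch under one important assumption: that the STATUS column shown above is backed by the field .status.phase of the resource. If that does not hold in your OraOperator version, pass a different JSONPath expression as the fourth argument. The function name and the POLL_INTERVAL variable are our own.

```shell
NS="oracle-database-operator-system"

# Poll a resource until a status field has the expected value.
# Arguments: kind, name, expected value, [jsonpath], [max attempts]
wait_for_phase() {
  local kind="$1" name="$2" expected="$3"
  local path="${4:-}" attempts="${5:-30}"
  local phase="" i=0

  # Assumption: the STATUS column is read from .status.phase.
  [ -n "$path" ] || path='{.status.phase}'

  while [ "$i" -lt "$attempts" ]; do
    phase="$(kubectl get "$kind" "$name" -n "$NS" -o "jsonpath=$path" 2>/dev/null)"
    if [ "$phase" = "$expected" ]; then
      echo "$kind/$name is $expected"
      return 0
    fi
    i=$((i + 1))
    sleep "${POLL_INTERVAL:-5}"
  done

  echo "$kind/$name did not reach $expected (last value: '$phase')" >&2
  return 1
}

# Usage:
#   wait_for_phase cdb cdb19c Ready
```

The same function should work for other OraOperator resource kinds as long as they expose their state in a comparable field.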

Now the time has come – congratulations! Your container database is connected and we can start some tests with pluggable databases.

6. Creating, stopping and deleting a Pluggable Database

In order to create a new pluggable database (PDB) or to connect an existing PDB, existing or desired user names and passwords must first be stored in a Kubernetes Secret, very similar to the connection of the CDB. Here is an example file “testpdb-secret.yaml” with the BASE64-encoded username “pdbadmin” and a password you still have to enter in BASE64 format, similar to step 4 (echo -n "MyPassword" | base64 ; echo).

apiVersion: v1
kind: Secret
metadata:
  name: testpdb-secret
  namespace: oracle-database-operator-system
type: Opaque
data:
  # base64 encoded String for "pdbadmin"
  sysadmin_user: "cGRiYWRtaW4="
  # add Your own base64 encoded password here
  sysadmin_pwd: "yadayadayumyum"

Now create the Secret in your Kubernetes cluster:

$ kubectl apply -f testpdb-secret.yaml

Now it’s time – let’s create a new PDB. All we need is a small descriptive YAML file “testpdb.yaml” with the desired name of the PDB, admin user and password, size of the temp area, action to be performed with it (e.g. “Create”, “Modify”, “Status”) and other possible specifications, which are documented under the following link in the form of a Custom Resource Definition: https://github.com/oracle/oracle-database-operator/blob/main/config/crd/bases/database.oracle.com_pdbs.yaml.

apiVersion: database.oracle.com/v1alpha1
kind: PDB
metadata:
  name: testpdb
  namespace: oracle-database-operator-system
  labels:
    cdb: cdb19c
spec:
  cdbResName: "cdb19c"
  cdbName: "DB19C"
  pdbName: "testpdb"
  adminName:
    secret:
      secretName: "testpdb-secret"
      key: "sysadmin_user"
  adminPwd:
    secret:
      secretName: "testpdb-secret"
      key: "sysadmin_pwd"
  fileNameConversions: "NONE"
  totalSize: "1G"
  tempSize: "100M"
  action: "Create"
  pdbTlsCat:
    secret:
      secretName: "db-ca"
      key: "ca.crt"
  pdbTlsCrt:
    secret:
      secretName: "db-tls"
      key: "tls.crt"
  pdbTlsKey:
    secret:
      secretName: "db-tls"
      key: "tls.key"

The certificates referenced in the YAML file are the same ones that were generated and used when the CDB was connected.

Now you can send the YAML resource description to your Kubernetes cluster:

$ kubectl apply -f testpdb.yaml

Within a short time, a new PDB should be created, the corresponding Kubernetes resource should change its state from “Creating” to “Ready”, and the “PDB STATE” should be set to “READ WRITE”.

$ kubectl get pdbs -n oracle-database-operator-system
NAME      CONNECT_STRING               CDB NAME   PDB NAME   PDB STATE    PDB SIZE   STATUS   MESSAGE
testpdb   130.61.106.99:1521/testpdb   DB19C      testpdb    READ WRITE   1.08G      Ready    Success

Something should have happened on the database server as well. For example, the database listener now knows about another service. You can get a list of all registered database services directly on the database server for example with “lsnrctl status” command.

If you now want to stop the PDB, the desired state with 2 additional parameters must be entered into the resource description and sent to Kubernetes. The OraOperator then restores the desired state. In the YAML file testpdb.yaml you can now change the field action from Create to Modify and add parameter fields for the desired state (pdbState) and an addition for the type of execution (modifyOption):

apiVersion: database.oracle.com/v1alpha1
kind: PDB
metadata:
  name: testpdb
  namespace: oracle-database-operator-system
  labels:
    cdb: cdb19c
spec:
  cdbResName: "cdb19c"
  cdbName: "DB19C"
  pdbName: "testpdb"
  adminName:
    secret:
      secretName: "testpdb-secret"
      key: "sysadmin_user"
  adminPwd:
    secret:
      secretName: "testpdb-secret"
      key: "sysadmin_pwd"
  fileNameConversions: "NONE"
  totalSize: "1G"
  tempSize: "100M"
  action: "Modify"
  pdbState: "CLOSE"
  modifyOption: "IMMEDIATE"
  pdbTlsCat:
    secret:
      secretName: "db-ca"
      key: "ca.crt"
  pdbTlsCrt:
    secret:
      secretName: "db-tls"
      key: "tls.crt"
  pdbTlsKey:
    secret:
      secretName: "db-tls"
      key: "tls.key"

Another kubectl apply followed by a kubectl get pdb should quickly show the change in state of the PDB from “READ WRITE” to “MOUNTED”:

$ kubectl apply -f testpdb.yaml
$ kubectl get pdb -n oracle-database-operator-system
NAME      CONNECT_STRING               CDB NAME   PDB NAME   PDB STATE   PDB SIZE   STATUS   MESSAGE
testpdb   130.61.106.99:1521/testpdb   DB19C      testpdb    MOUNTED     1.08G      Ready    Success
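For quick experiments, the three fields can also be changed directly on the existing resource with kubectl patch instead of editing the YAML file. The sketch below assumes that the OraOperator reacts to a patched spec in the same way as to a re-applied file, which we would expect since both change the same fields, but which should be verified in your environment. The function name is hypothetical.

```shell
NS="oracle-database-operator-system"

# Change the state of a PDB by merging three fields into its spec.
# Arguments: PDB resource name, target state (e.g. CLOSE),
#            modify option (e.g. IMMEDIATE)
set_pdb_state() {
  kubectl patch pdb "$1" -n "$NS" --type merge -p \
    "{\"spec\":{\"action\":\"Modify\",\"pdbState\":\"$2\",\"modifyOption\":\"$3\"}}"
}

# Usage:
#   set_pdb_state testpdb CLOSE IMMEDIATE
```

The drawback of patching is that the YAML file no longer describes what is in the cluster. As soon as the resource descriptions are kept in a Git repository for Continuous Deployment, changing the file and applying it is the more consistent route.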

Once the PDB is down, we might as well delete it for testing. In our file testpdb.yaml the action field would have to be changed to the value Delete. An additional field dropAction, whose value determines whether the data files should also be deleted, is also necessary. The parameter fields from the previous action may remain, because they are used only with the suitable contents of the action field. Otherwise they are ignored:

apiVersion: database.oracle.com/v1alpha1
kind: PDB
metadata:
  name: testpdb
  namespace: oracle-database-operator-system
  labels:
    cdb: cdb19c
spec:
  cdbResName: "cdb19c"
  cdbName: "DB19C"
  pdbName: "testpdb"
  adminName:
    secret:
      secretName: "testpdb-secret"
      key: "sysadmin_user"
  adminPwd:
    secret:
      secretName: "testpdb-secret"
      key: "sysadmin_pwd"
  fileNameConversions: "NONE"
  totalSize: "1G"
  tempSize: "100M"
  action: "Delete"
  dropAction: "INCLUDING"
  pdbTlsCat:
    secret:
      secretName: "db-ca"
      key: "ca.crt"
  pdbTlsCrt:
    secret:
      secretName: "db-tls"
      key: "tls.crt"
  pdbTlsKey:
    secret:
      secretName: "db-tls"
      key: "tls.key"

If you now run a kubectl apply followed by a kubectl get pdb, you can quickly see that the OraOperator has not only deleted the PDB. It has also removed the associated Kubernetes resource. In contrast, a kubectl delete pdb would remove the resource but keep the PDB in the database. This is an intended behavior of the OraOperator and makes sense in our opinion:

$ kubectl apply -f testpdb.yaml
pdb.database.oracle.com/testpdb configured
$ kubectl get pdb -n oracle-database-operator-system
No resources found in oracle-database-operator-system namespace.

So much for the mandatory part of our presentation. You and other developers in your company who are authorized via so-called ServiceAccounts should now be able to create, clone and delete PDBs at will. And all this via self-service and visible in Kubernetes. Congratulations!

What can you do with these capabilities, what should you perhaps add? Some thoughts about that in the next part:

7. Including both database and application into a tool for continuous deployment

Suppose you upload all the YAML files describing your application, together with the YAML files for the associated pluggable database, to a Git repository of your choice. Then a tool for Continuous Deployment could react as soon as there are changes in the application or database configuration and immediately apply and monitor them. Or even roll back to an older configuration, as you wish.

If you would like to control and version the contents of the database in a more fine-grained way, in addition to the database size or a different clone than before, the integration of a container with installed Liquibase software and a versioned database schema would be conceivable. In the simpler case, you can reference an SQL script to be executed at deployment when starting the application container. In any case, thanks to OraOperator, you have even more automation and documentation when rolling out the application and associated database, and also when rolling back to an older state of the application.

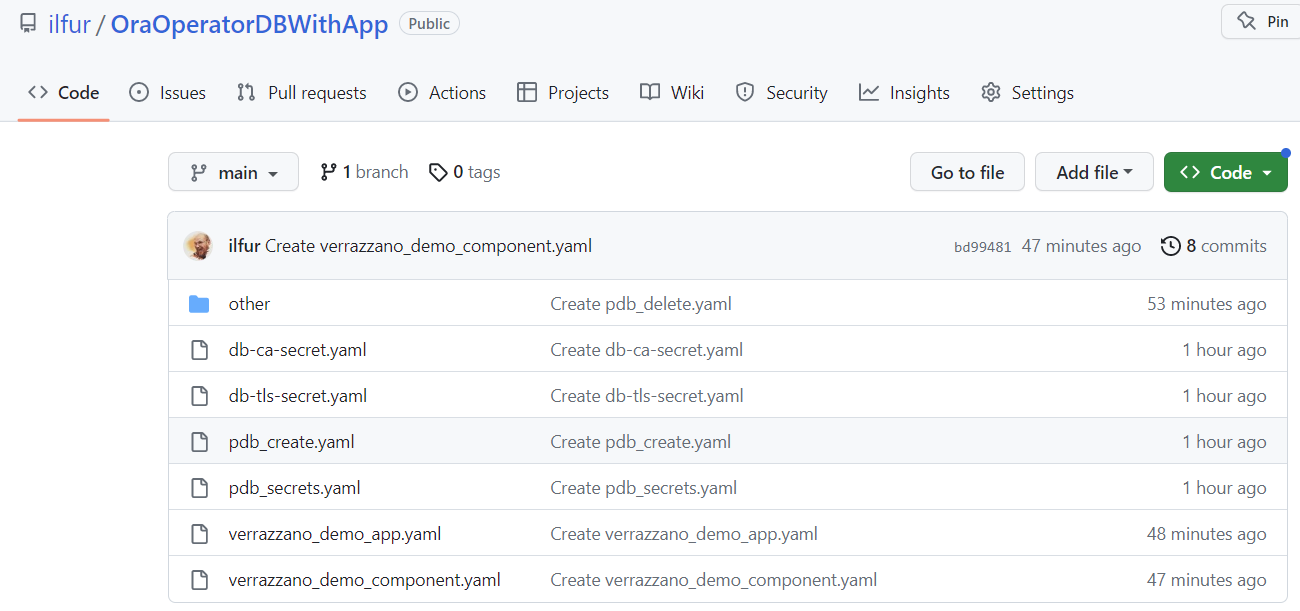

As an example, we uploaded a small demo application and the PDB description we just created to a GitHub repository and included it for viewing and documentation in ArgoCD, an open-source Continuous Deployment tool for Kubernetes that is also part of the open-source container management platform “Oracle Verrazzano”. The repository is publicly available for testing purposes at the URL https://github.com/ilfur/OraOperatorDBWithApp.

After adding the appropriate GitHub URL to ArgoCD and choosing a name (“oraoperatordbwithapp”) for the application to maintain, our example is listed as a tile:

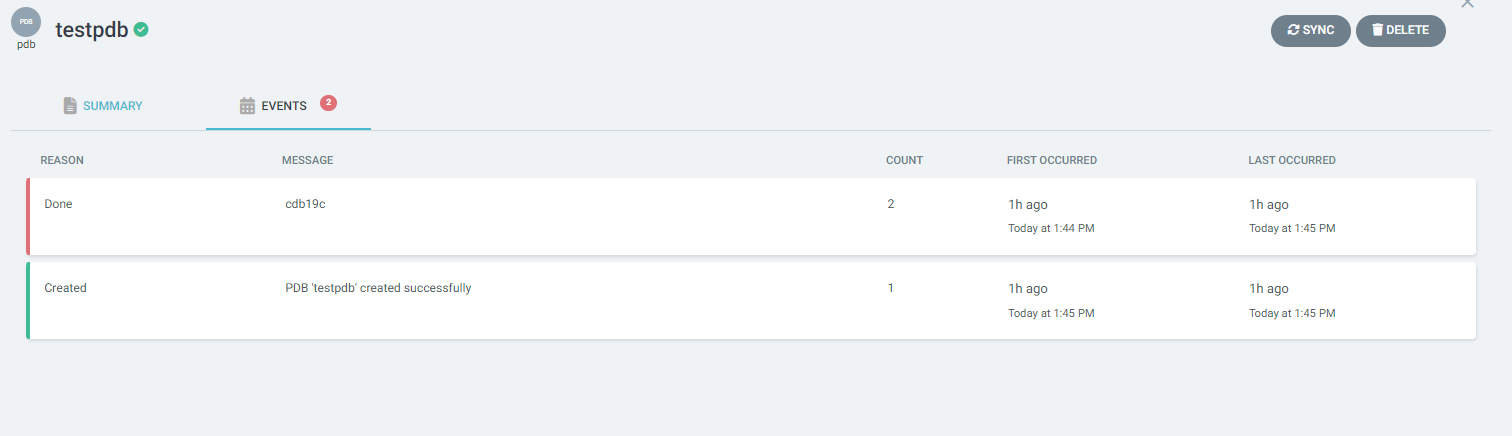

Once the Sync button has been clicked, the application is displayed in full with all its Kubernetes resources – now finally also with the associated, no longer anonymous Oracle database or pluggable database.

The Pluggable Database resource is viewable here like any other Kubernetes resource and shows, for example, the latest events around the resource, fed from the OraOperator information.
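The registration in ArgoCD that we did through the web interface can also be expressed declaratively as an ArgoCD “Application” resource. The following sketch prints such a resource. Several values are assumptions that need to be adapted: ArgoCD running in the namespace argocd, the project default, the manifests lying in the root directory of the repository, and oracle-database-operator-system as target namespace. The function name is hypothetical. No automatic sync policy is set, so the Sync button still has to be clicked as described above.

```shell
# Print an ArgoCD Application manifest.
# Arguments: application name, Git repository URL, [path inside the repository]
render_argocd_app() {
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: $1
  namespace: argocd
spec:
  project: default
  source:
    repoURL: $2
    targetRevision: HEAD
    path: ${3:-.}
  destination:
    server: https://kubernetes.default.svc
    namespace: oracle-database-operator-system
EOF
}

# Usage:
#   render_argocd_app oraoperatordbwithapp \
#     https://github.com/ilfur/OraOperatorDBWithApp | kubectl apply -f -
```

Keeping this resource in Git as well means that even the link between ArgoCD and the repository is documented and reproducible.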

We hope that we have been able to provide a little insight into the topic and an idea of why it makes sense to include an (external) Oracle database as a Kubernetes resource. But at this point we have not quite reached the end yet.

Conclusion – or: is there still more to come?

Treating and documenting Oracle databases as part of a microservices-based application, including them automatically, cloning them or creating them from scratch: this is certainly desirable for many developers and, thanks to OraOperator, also achievable. Kubernetes as a runtime environment for containers also offers a lot of convenience for the comprehensive operation of the software components it manages. Among other things, thought was given to scaling (horizontal and vertical), patching, failover, and persistence, also in the sense of a backup or a flashback area and archive logs. The OraOperator can use these possibilities as well, but then the Oracle Database to be managed must itself be operated in the Kubernetes cluster, not merely integrated from outside. Many operational automations work by defining a Kubernetes resource of type “SingleInstanceDatabase”, which also belongs to the OraOperator. Patches, i.e. release updates, can be applied by swapping database container images. Databases can be reconfigured, cloned and more. A combination of the locally managed “SingleInstanceDatabase” for operation and the “PluggableDatabase/ContainerDatabase” pair shown here for developers is quite possible and will probably be the subject of another blog entry.

Until then – have fun and success with testing and trying out!
