Standing up an AI workload shouldn’t take months. Yet a steep learning curve and a rapidly evolving stack often stretch production large language model (LLM) deployments far beyond plan. The results are stalled projects, delayed impact, and lost confidence in ever achieving measurable ROI.

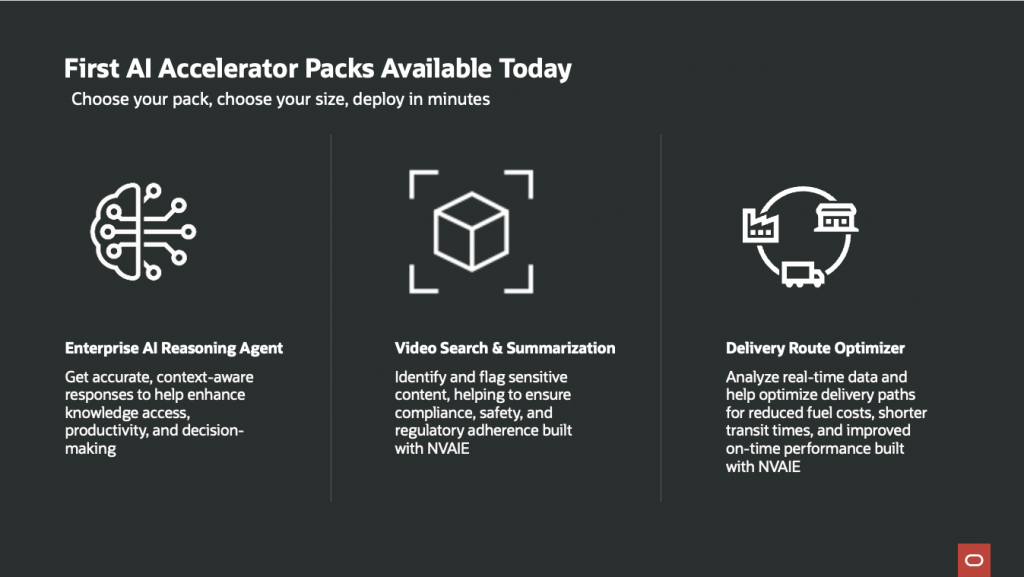

OCI AI Accelerator Packs address the most common blockers we see in enterprise AI. They shorten the time it takes to bring generative AI applications to life and solve real-world problems, while reducing the learning curve and stack complexity that typically slow deployment to production. They provide pragmatic sizing profiles that operate within regional GPU capacity constraints, and they give you commercial flexibility through OCI Universal Credits or extended services.

The process is simple: with one click, you can deploy a complete, OCI-native AI stack without advanced hardware or software configuration. Each pack is designed around a real business scenario, such as a RAG copilot, a multimodal content pipeline, real-time summarization, computer vision inspection, or search and recommendations, so you start from your desired outcome rather than from a list of possible components.

AI Accelerator Packs combine OCI AI services, storage, and compute with curated open-source and third-party software components designed for proven outcomes, so teams can launch impactful business AI applications in minutes rather than weeks or months.

These preconfigured, self-service solutions, built to solve common business problems, compress weeks of platform assembly into a simple process that users can run directly from the OCI Console. AI engineers, ML engineers, DevOps engineers, and developers can now deliver production-ready outcomes without mastering every layer of the stack.

Based on your expected usage, everything is provisioned automatically: the required OCI services, compute resources, networking components (including security policies), observability layers, and, most importantly, the AI models, all configured with tuned application parameters to deliver consistent, high-quality results.

We took a vendor-agnostic approach to this product by including the best and most popular ISV technologies, from NVIDIA AI Enterprise for acceleration, to WEKA for high-performance storage, to open-source components such as LangChain, vLLM, and Meta Llama models.

To get started, open the OCI Console to explore the AI Accelerator Packs catalog, and connect with your Oracle account team to select a small, medium, or large footprint. Build faster. Operate with confidence. Scale when you’re ready. OCI AI Accelerator Packs turn AI potential into tangible outcomes from day one.

To implement and learn more about OCI AI Accelerator Packs, visit the OCI AI Accelerator Packs page.