Introduction

This blog looks at the sort of performance that the free TimesTen XE In-Memory Database can achieve with a commodity 1U Linux server

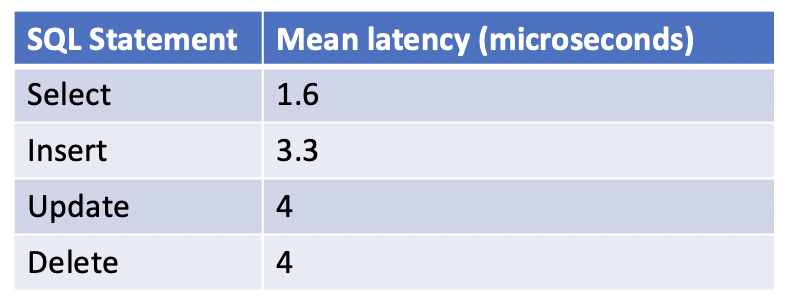

- A latency of 1.6 microseconds for primary key lookups

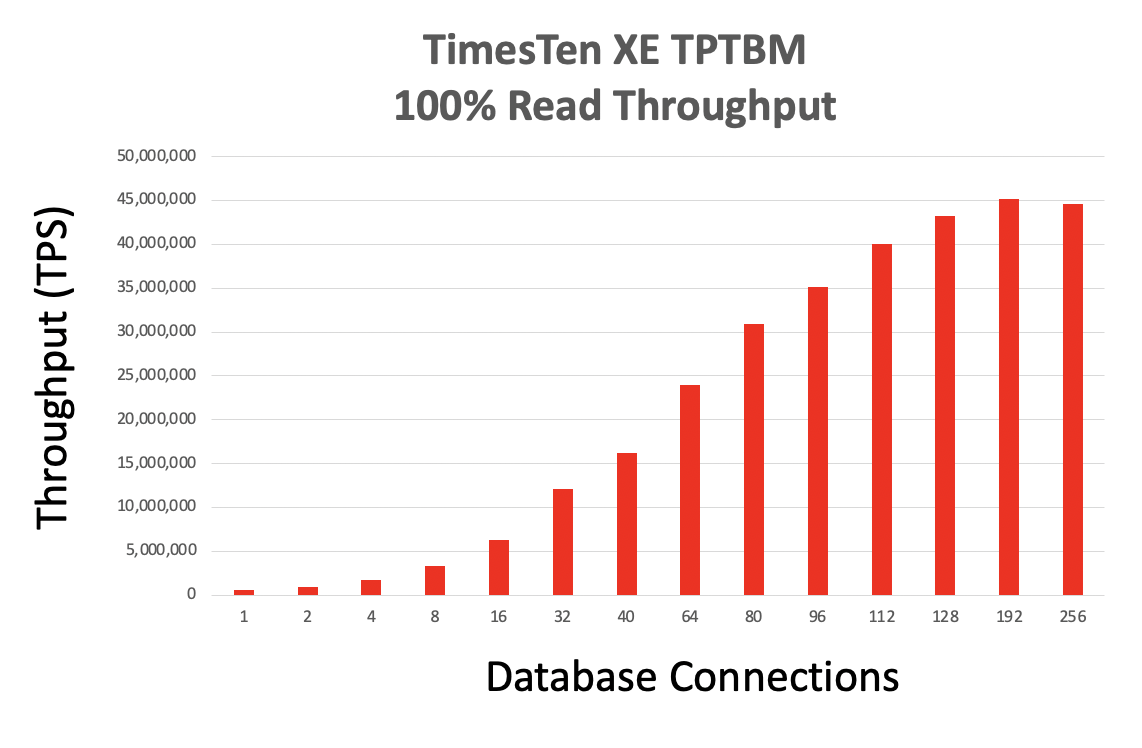

- A throughput of 45 million queries per second [100% read]

- A throughput of 7.8 million transactions per second [80% read, 20 % write]

- Scaling to 256 CPU cores

The devil is in the details, so read the rest of this blog to understand those performance numbers.

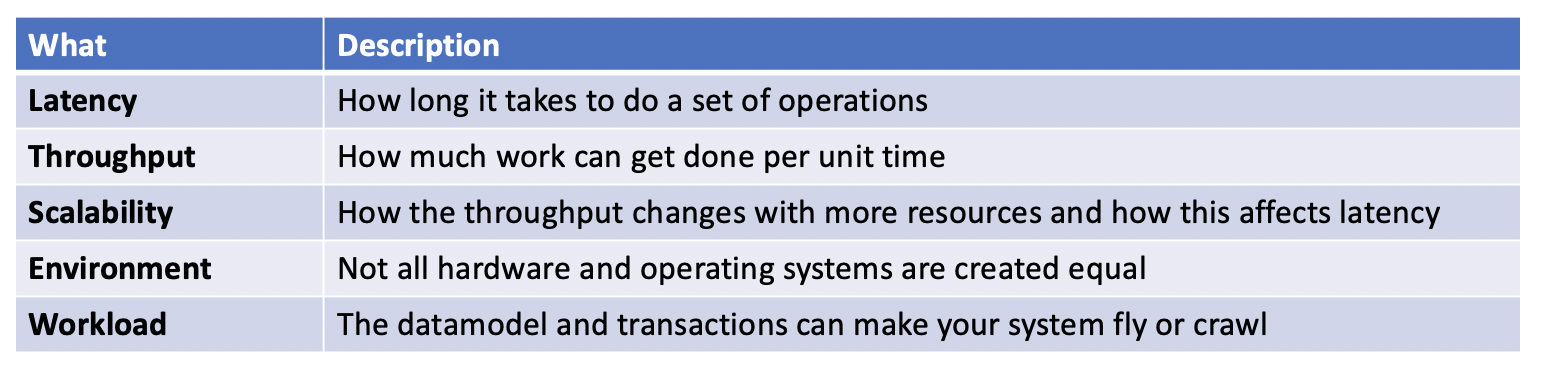

Database performance is really about five things:

Environment

The tests in this blog were run on the following environment:

- Oracle Cloud Infrastructure [London Region]

- Oracle Linux 8.5 with the Unbreakable Enterprise Kernel Release 6

- Huge pages were being used

- Bare metal compute

- BM.Standard3.64 [Intel Xeon Platinum 8358 @ 2.6 GHz]

- BM.Standard.E4.128 [AMD EPYC 7J13 @ 2 GHz]

- DDR4 DRAM

- Block Storage (iSCSI)

- 50 gbps Ethernet

- TimesTen XE 22.1.1.2.0

- The TPTBM benchmark used a C/ODBC program with prepared and bound SQL statements and ACID transactions

You should expect similar performance with similar hardware in your data center or using other clouds.

Workload

The workload was a simple telecommunications use case called TPTBM.

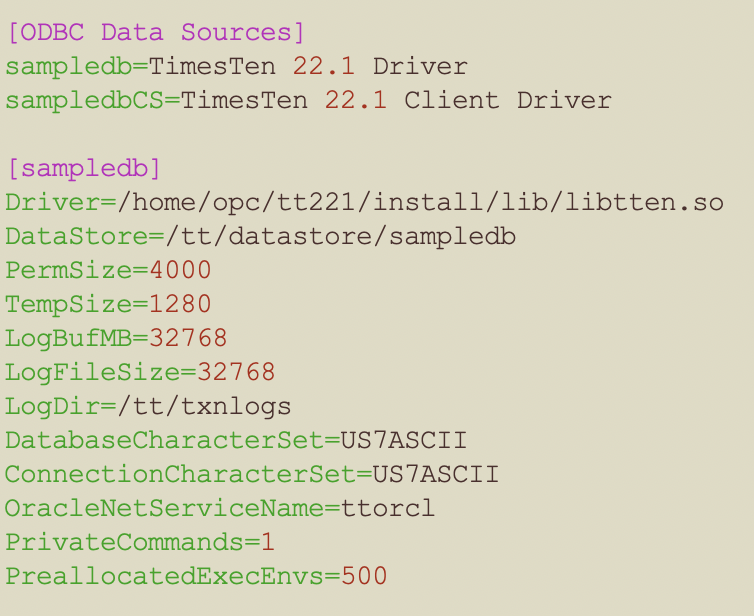

The TimesTen XE database configure file [sys.odbc.ini] was:

Both the database files [/tt/datastore/sampledb.*] and redo log files [/tt/txnlogs/*] were on a Linux ext4 file system using OCI block storage, ie NAS using iSCSI.

The TPTBM SQL transaction was:

select

directory_nb,

last_calling_party,descr

from vpn_users

where vpn_id = ? and vpn_nb= ?;

insert into vpn_users values (?,?,?,?,?);

update vpn_users

set last_calling_party = ?

where vpn_id = ?

and vpn_nb = ?;

delete

from vpn_users

where vpn_id = ?

and vpn_nb = ?;

commit;

The VPN_USERS tables was:

CREATE TABLE vpn_users(

vpn_id TT_INT NOT NULL,

vpn_nb TT_INT NOT NULL,

directory_nb CHAR(10) NOT NULL,

last_calling_party CHAR(10) NOT NULL,

descr CHAR(100) NOT NULL,

PRIMARY KEY (vpn_id,vpn_nb));

CPUs

- TimesTen XE is only available on Linux x8664

- This means that Intel Xeon and AMD EPYC CPUs will tend to give the best scalability

- A gaming CPU with few cores and extreme frequency will tend to give the best latency

- Gaming CPUs with extreme frequency will not tend to scale as well as server CPUs as they have less CPU cores

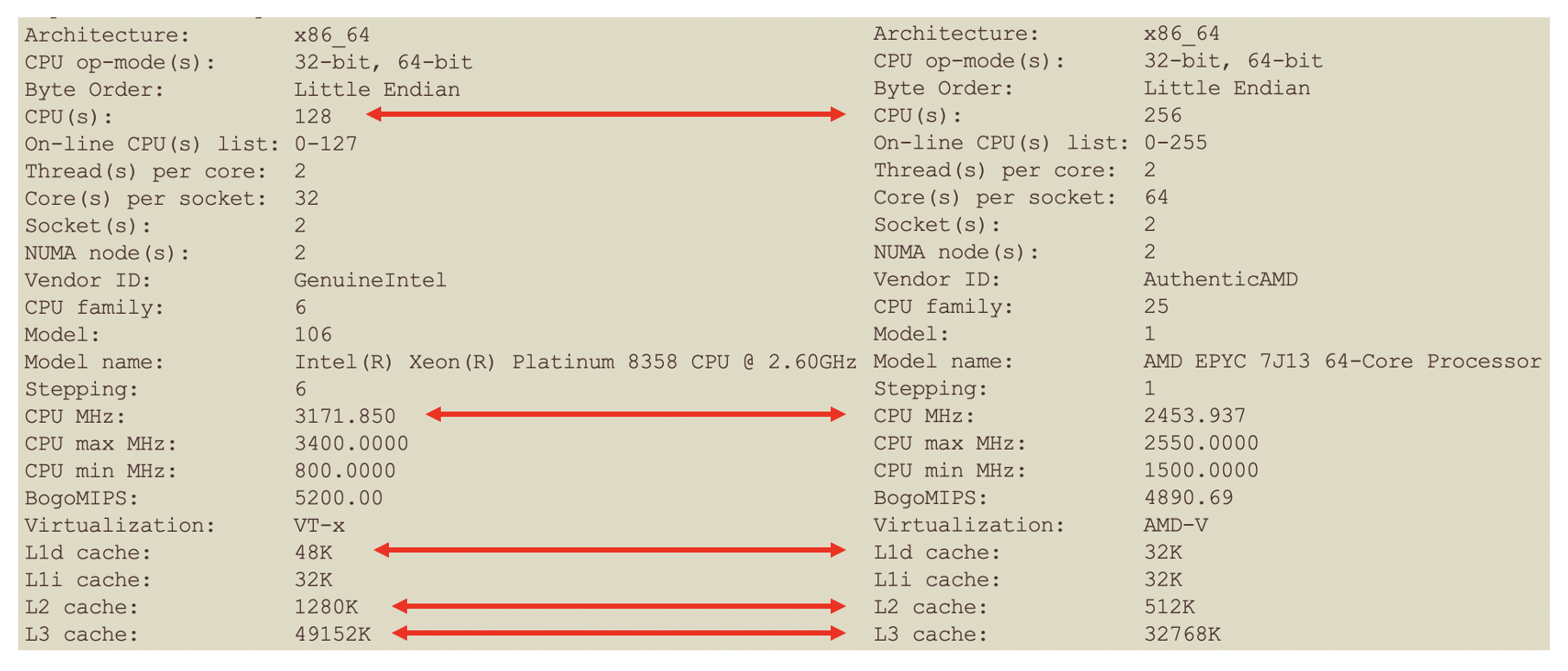

lscpu output

- The above image shows the output of lscpu for both the Intel Xeon and AMD EPYC CPUs that I used

- The red arrows compare the Intel Xeon Platinum 8358 and AMD EPYC 7J13 CPUs

- The Xeon has a higher base and max frequency than the EPYC

- The Xeon has significantly larger L1, L2 and L3 caches than the EPYC

- The Xeon has half the number of CPU cores of the EPYC

Latency

- The mean latency for 89 million executions of the TPTBM SQL select, insert, update and delete statements were used

- The benchmark warmed up for 30 seconds and ran for three minutes

- Running the benchmark for longer, [eg 30 minutes] gave the same results

- An even distribution was used to minimize L3 cache effects

- There were 10 million rows in the VPN_USERS table

- A random number generator was used to generate an even distribution of primary key values for the Select, Insert, Update and Delete statements

- This means that there was a 1/10,000,000 chance of each transaction using the same row

- This working set was large enough that L3 CPU cache hits were not a factor. ie a smaller working set could have skewed the results by using L3 cache rather than DRAM

- Inserts, updates and deletes take longer than selects as the database needs to deal with the undo/redo as these are ACID transactions

Throughput

Read only throughput

- Using the Oracle Cloud bare metal BM.Standard.E4.128 compute node which had two AMD EPYC CPUs, a 100% read workload could scale to 256 database connections

- At 192 database connections, TimesTen XE was executing 45 million primary key lookup per second on a 1U Linux server

- Doubling the number of database connections almost doubled the throughput

- As TimesTen XE is an in-memory database, if TimesTen XE is well tuned then it will tend to be neither be network nor disk IO bound

- This means that TimesTen XE will tend to be CPU bound for direct link benchmarks

- Direct linked applications run on the same machine as the TimesTen database and do not use a TCP socket to communicate with the database

- If client server connections over a TCP socket were used, you would need thousands of database connections to make the TimesTen XE server CPU bound

- TimesTen XE will tend to scale to the number of CPU cores for read workloads

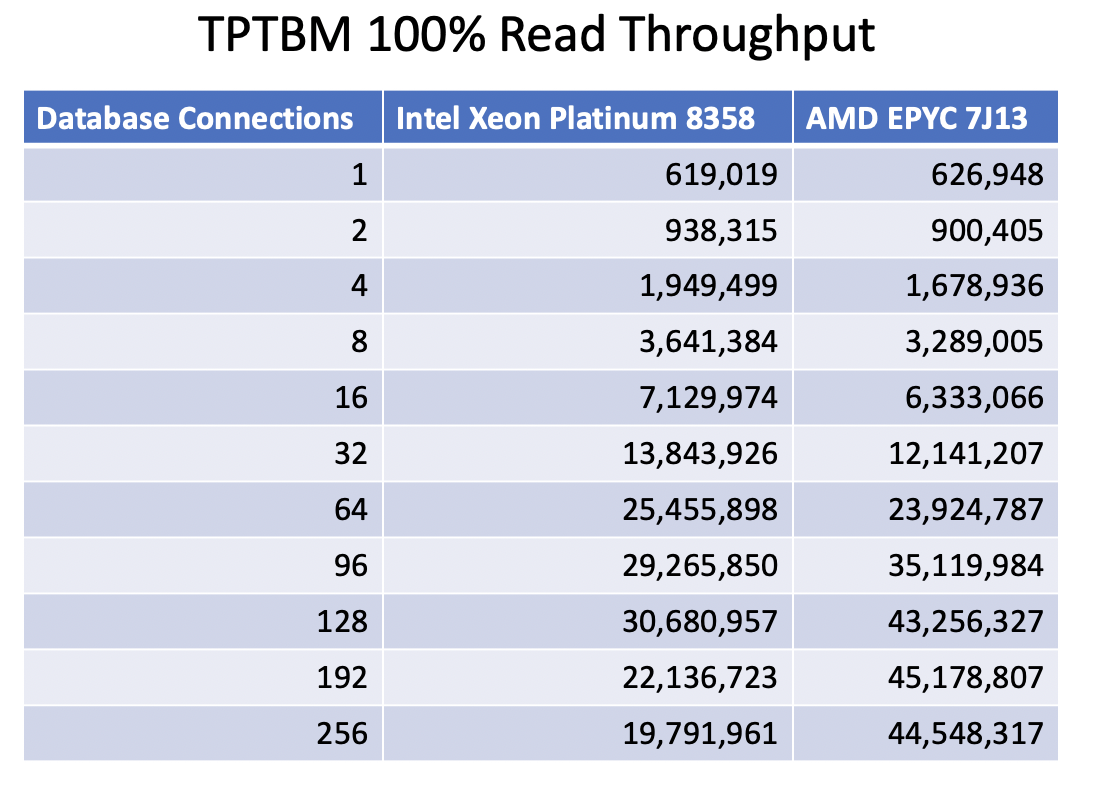

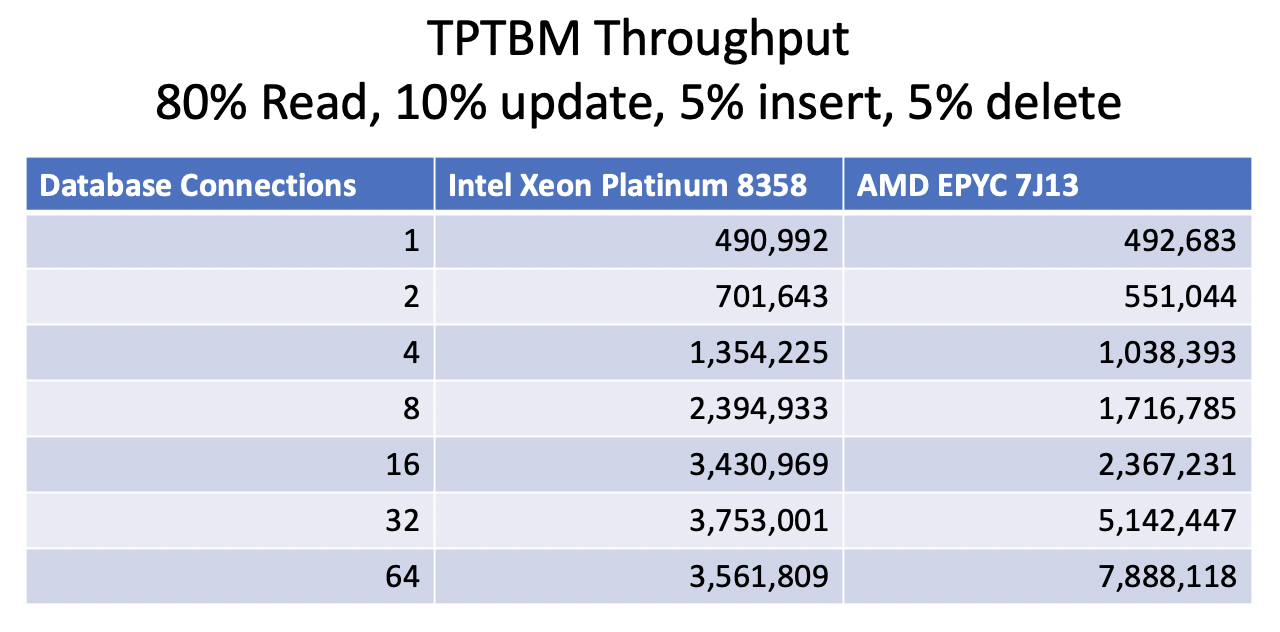

- The above table shows results for both Intel Xeon and AMD EPYC CPUs

- The number of CPU cores, the size of the L2 and L3 caches and CPU frequency will tend to determine the TimesTen XE performance

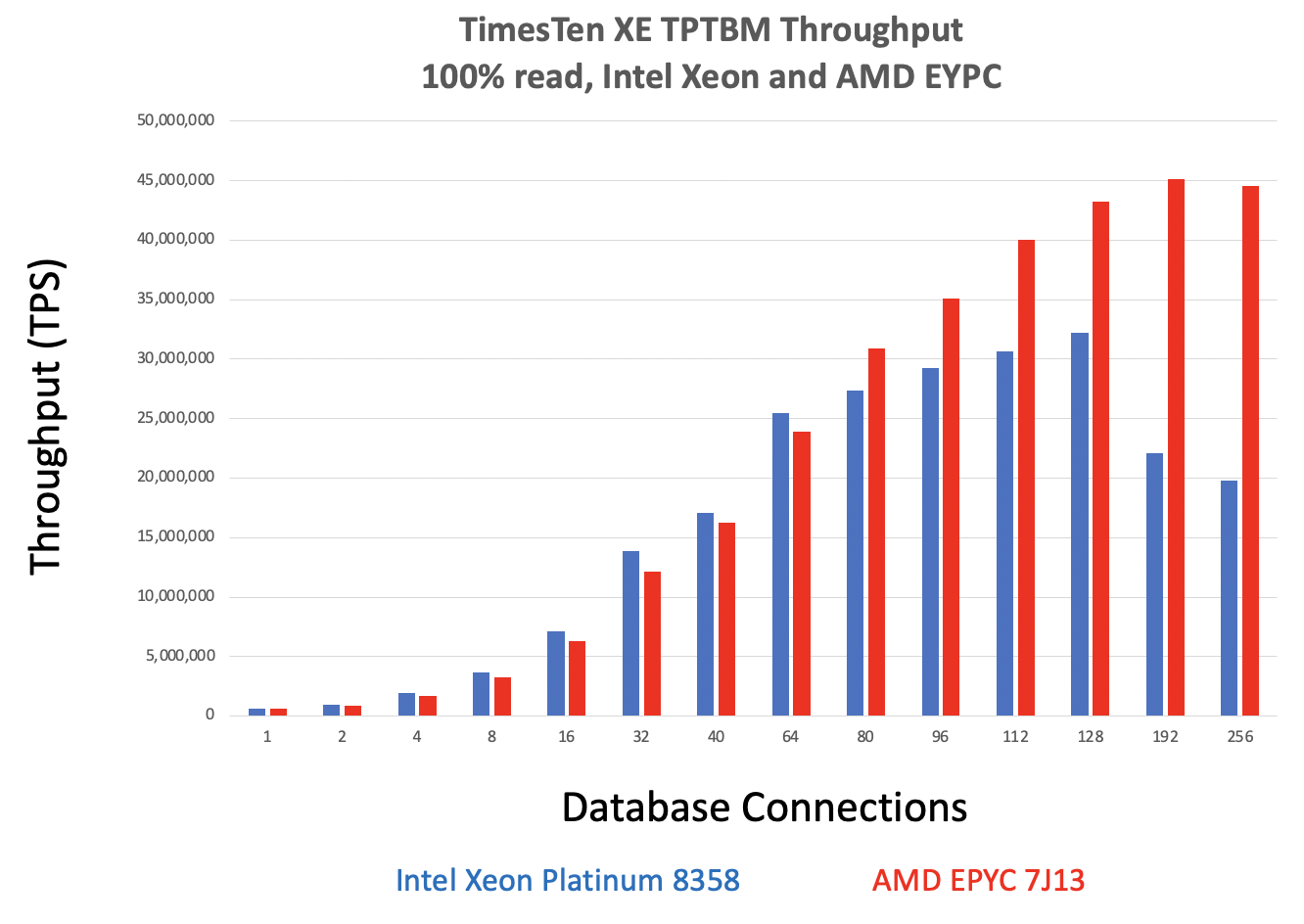

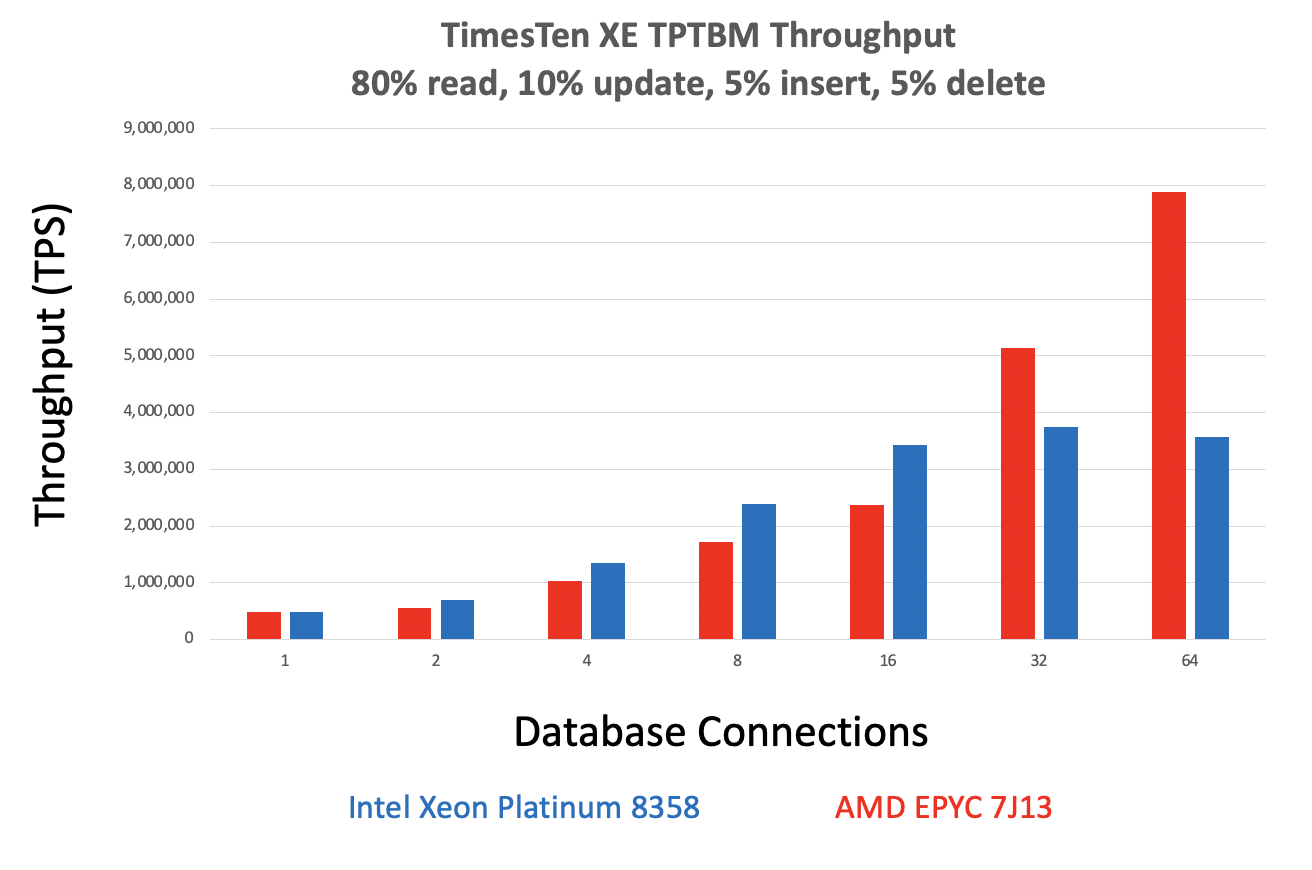

- This chart compares the throughput of TimesTen XE for both Intel Xeon and AMD EPYC CPUs

- The tables, data, workload and TimesTen XE versions are the same

- The variable is whether an Intel Xeon Platinum 8358 or an AMD EPYC 7J13 CPU was used

- For 1-64 database connections, the Intel Xeon CPU tends to give better read throughput than than the AMD EPYC CPU as it has a higher CPU frequency

- For more than 64 database connections, the AMD EPYC CPU has better read throughput than the Intel Xeon as it has more CPU cores

- Both the Intel Xeon and AMD EPYC CPUs show their server scalability curves for TimesTen XE

- The read throughput on the Intel Xeon CPU decreases when there are more database connections than CPU cores

- The AMD EPYC CPU was not CPU bound as it did not use more database connections than CPU cores

Read write throughput

- When a read/write workload is used:

- The AMD EPYC CPU scales to 7.8 million transaction per second using 64 database connections

- The Intel Xeon CPU scales to 3.7 million transactions per second using 32 database connections

- Eventually the read/write workload become IO bound for both the Intel and AMD CPUs

- The redo and database writes are all going over the network as the storage is NAS with iSCSI

- Using fast local NVMe storage would likely have given better write scalability

- This read/write chart shows that the Intel Xeon CPUs tends to give better throughput up to 16 database connections

- The AMD EPYC CPUs tend to give better read/write scalaiblity for 32 to 64 database connections

More complex workloads

The TimesTen XE In-Memory Database will tend to enable latency that is 2x – 40x lower than a traditional disk based database.

The TPTBM read and read/write workloads are simple transactions with a single table.

Customer workloads for complex joins with 50+ billion rows have been shown to be up to 33x faster when using TimesTen than optimized production clustered and/or single instance databases which are disk based.

How well that the free TimesTen XE In-Memory Database performs depends on many factors:

- The data model

- The workload

- The number and types of joins

- How large the resultsets are

- The hardware

- Whether the SQL was prepared and bound

- The SQL APIs [eg JDBC, OCI, ODBC, Python, Node.js, Go etc]

So, try TimesTen XE for your workload, as it is free 🙂

Summary

- TimesTen XE can run on Kubernetes, virtual machines or bare metal machines in clouds, or in your data center

- TimesTen XE is a really fast in-memory relational database with ACID transactions

- TimesTen XE can execute simple SQL Select, Insert, Update and Delete statements in a few microseconds

- TimesTen XE can scale simple read workloads to 45 million reads per second on commodity 1U Linux servers

- TimesTen XE can scale simple mixed workloads with 20% writes to 7.8 million transactions per second on commodity 1U Linux servers

- The scalability of TimesTen XE is dependent on the CPU

- TimesTen XE has excellent performance on both Intel Xeon and AMD EPYC CPUs

TimesTen XE Resources

- TimesTen XE Download

- TimesTen XE Overview

- How to install TimesTen XE

- How to create a Timesten XE database

- TimesTen XE SQL

- TimesTen XE on WSL

- TimesTen XE documentation

Disclaimer: these are my personal thoughts and do not represent Oracle’s official viewpoint in any way, shape, or form.