High-level steps are in two phase

- Dump Schema Utility

- Export the schema of Azure for MySQL Instance into Oracle Cloud Object Storage.

- Load Dump Utility

- Import the schema from Object Storage to local compute Instance.

More info:- https://dev.mysql.com/doc/mysql-shell/8.0/en/mysql-shell-utilities-dump-instance-schema.html

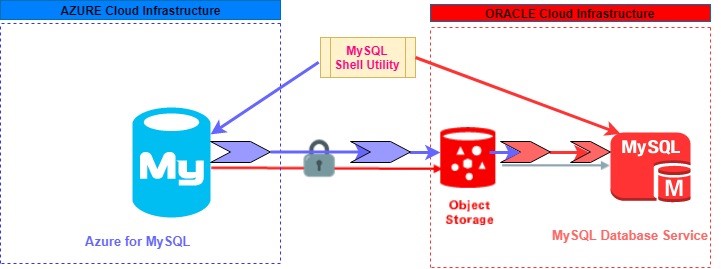

How does Migration Work?

Suppose you wanted to do lift-shift of database called “sakila” , so “Schema Dump” utility will export sakila database from Azure for MySQL to OCI(oracle gen2 cloud infrastructure) object storage.

then “Load Dump” utility will import directly to MySQL Database Service(MDS) .

Below diagram I have made for clear understanding…

What do we needed handy ?

- MySQL Shell 8.0.x version (recommendation always use latest version)

- Azure for MySQL Up and Running.

- MySQL database service(MDS) Up and Running.

- Install OCI CLI on Azure VM Machine.

- Install OCI CLI on OCI(Oracle Cloud Compute Instance)

- local_infile variables must be ON for destination machine.

Summary of Instance details

| Azure VM details |

Azure for MySQL Details |

Oracle Cloud Compute Instance Details |

MDS Details |

| Public IP address:- 20.198.96.xx SSH PPK file User: azureuser |

Database Name:- Sakila End point IP:- azuremysql-dbs.mysql.database.azure.com User:- admin123 Port: 3306 No of Tables: 23 |

Public IP Address: 140.238.227.xx SSH ppk file User:- opc

Object Storage Bucket Name |

MDS Private IP Address:- 10.0.0.xx MySQL Username:- admin MySQL Port: 3306 |

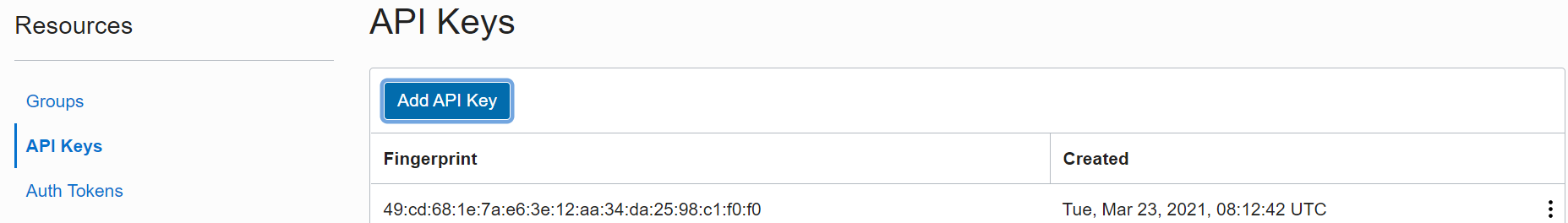

First Install OCI CLI in Azure compute instance

| bash -c “$(curl -L https://raw.githubusercontent.com/oracle/oci-cli/master/scripts/install/install.sh)” exec -l $SHELL cd /root/bin/ oci setup config >>follow the steps cat /root/.oci/oci_api_key_public.pem Paste the o/p of public key into OCI console->user settingsà paste

|

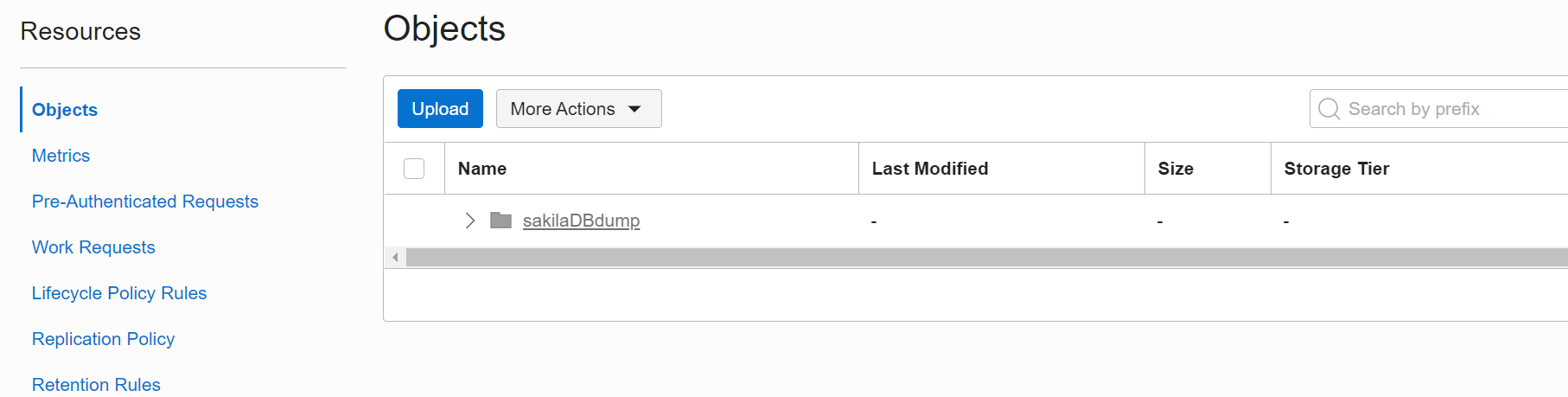

We do require bucket in the Oracle Cloud to temporary hold the exported data from Azure Cloud.

How to create Bucket and putting data into the Bucket , below link will assist further.

Putting Data into Object Storage

Command to Export the backup from Azure for MySQL to OCI Object Storage

#mysqlsh -hazuremysql-dbs.mysql.database.azure.com -uadmin123@azuremysql-dbs -pxxxxx

Check for Compatibility issues and gather the problems in advance before migration by using DRYRUN

util.dumpSchemas([“sakila”], “sakilaDBdump”, {dryRun: true, ocimds: true, “compatibility”: [“strip_definers”, “strip_restricted_grants”]})

Where:-

dryRun: true

Setting this option enables you to list out all of the compatibility issues before starting the dump.

Display information about what would be dumped with the specified set of options, and about the results of MySQL Database Service compatibility checks (if the ocimds option is specified), but do not proceed with the dump.

ocimds: true

Setting this option to true enables checks and modifications for compatibility with MySQL Database Service. the ocimds option checks and reports an error for any tables in the dump that are missing primary keys.

When this option is set to true, DATA DIRECTORY, INDEX DIRECTORY, and ENCRYPTION options in CREATE TABLE statements are commented out in the DDL files, to ensure that all tables are located in the MySQL data directory and use the default schema encryption.

compatibility”: [“strip_definers”, “strip_restricted_grants”]

Apply the specified requirements for compatibility with MySQL Database Service for all tables in the dump output, altering the dump files as necessary. From MySQL Shell 8.0.23, this option is available for all the utilities, and before that release, it is only available for the instance dump utility and schema dump utility.

strip_definers:- Remove the DEFINER clause from views, routines, events, and triggers, so these objects are created with the default definer (the user invoking the schema), and change the SQL SECURITY clause for views and routines to specify INVOKER instead of DEFINER.

strip_restricted_grants :- Remove specific privileges that are restricted by MySQL Database Service from GRANT statements, so users and their roles cannot be given these privileges (which would cause user creation to fail).

Now, Let’s execute the command and observe the output for any errors:-

| MySQL azuremysql-dbs.mysql.database.azure.com:3306 ssl JS > util.dumpSchemas([“sakila”], “sakilaDBdump”, {dryRun: true, ocimds: true, “compatibility”: [“strip_definers”, “strip_restricted_grants”]}) Acquiring global read lock Global read lock acquired Gathering information – done All transactions have been started Locking instance for backup NOTE: Backup lock is not supported in MySQL 5.7 and DDL changes will not be blocked. The dump may fail with an error or not be completely consistent if schema changes are made while dumping. Global read lock has been released Checking for compatibility with MySQL Database Service 8.0.23 NOTE: MySQL Server 5.7 detected, please consider upgrading to 8.0 first. You can check for potential upgrade issues using util.checkForServerUpgrade(). NOTE: Procedure sakila.film_not_in_stock had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: Procedure sakila.rewards_report had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: Procedure sakila.film_in_stock had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: View sakila.nicer_but_slower_film_list had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: View sakila.sales_by_film_category had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: View sakila.actor_info had definer clause removed NOTE: View sakila.film_list had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: View sakila.sales_by_store had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: View sakila.staff_list had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: View sakila.customer_list had definer clause removed and SQL SECURITY characteristic set to INVOKER Compatibility issues with MySQL Database Service 8.0.23 were found and repaired. Please review the changes made before loading them. Writing global DDL files Preparing data dump for table `sakila`.`actor` Data dump for table `sakila`.`actor` will be chunked using column `actor_id` Preparing data dump for table `sakila`.`store` Writing DDL for schema `sakila` Data dump for table `sakila`.`store` will be chunked using column `store_id` Preparing data dump for table `sakila`.`customer` Data dump for table `sakila`.`customer` will be chunked using column `customer_id` Preparing data dump for table `sakila`.`country` Data dump for table `sakila`.`country` will be chunked using column `country_id` Preparing data dump for table `sakila`.`language` Data dump for table `sakila`.`language` will be chunked using column `language_id` Preparing data dump for table `sakila`.`staff` Writing DDL for view `sakila`.`nicer_but_slower_film_list` Data dump for table `sakila`.`staff` will be chunked using column `staff_id` Writing DDL for view `sakila`.`sales_by_film_category` Preparing data dump for table `sakila`.`film` Data dump for table `sakila`.`film` will be chunked using column `film_id` Preparing data dump for table `sakila`.`film_text` Data dump for table `sakila`.`film_text` will be chunked using column `film_id` Writing DDL for view `sakila`.`actor_info` Preparing data dump for table `sakila`.`payment` Data dump for table `sakila`.`payment` will be chunked using column `payment_id` Preparing data dump for table `sakila`.`film_actor` Data dump for table `sakila`.`film_actor` will be chunked using column `actor_id` Preparing data dump for table `sakila`.`film_category` Data dump for table `sakila`.`film_category` will be chunked using column `film_id` Preparing data dump for table `sakila`.`inventory` Data dump for table `sakila`.`inventory` will be chunked using column `inventory_id` Preparing data dump for table `sakila`.`rental` Data dump for table `sakila`.`rental` will be chunked using column `rental_id` Preparing data dump for table `sakila`.`category` Data dump for table `sakila`.`category` will be chunked using column `category_id` Preparing data dump for table `sakila`.`address` Data dump for table `sakila`.`address` will be chunked using column `address_id` Preparing data dump for table `sakila`.`city` Data dump for table `sakila`.`city` will be chunked using column `city_id` Writing DDL for view `sakila`.`film_list` Writing DDL for view `sakila`.`sales_by_store` Writing DDL for view `sakila`.`staff_list` Writing DDL for view `sakila`.`customer_list` Writing DDL for table `sakila`.`actor` Writing DDL for table `sakila`.`store` Writing DDL for table `sakila`.`customer` Writing DDL for table `sakila`.`country` Writing DDL for table `sakila`.`language` Writing DDL for table `sakila`.`staff` Writing DDL for table `sakila`.`film` Writing DDL for table `sakila`.`film_text` Writing DDL for table `sakila`.`payment` Writing DDL for table `sakila`.`film_actor` Writing DDL for table `sakila`.`film_category` Writing DDL for table `sakila`.`inventory` Writing DDL for table `sakila`.`rental` Writing DDL for table `sakila`.`category` Writing DDL for table `sakila`.`address` Writing DDL for table `sakila`.`city` MySQL azuremysql-dbs.mysql.database.azure.com:3306 ssl JS > |

Above dry run report couldn’t find any errors which means we are good to export the instance/schema of Azure for MySQL into Oracle Cloud Object Storage.

| To export particular schema, below command to be executed on Azure VM util.dumpSchemas([“sakila”],”sakilaDBdump”,{threads:30,”osBucketName”: “chandanBucket”, “osNamespace”: “idazzjlcjqzj”, “ocimds”: “true”,”ociConfigFile”:”/root/.oci/config”, “compatibility”: [“strip_definers”, “strip_restricted_grants”]}) To export complete instance, below command to be executed on Azure VM util.dumpInstance (“AzureForMySQLDBdump”,{threads:30,”osBucketName”: “chandanBucket”, “osNamespace”: “idazzjlcjqzj”, “ocimds”: “true”,”ociConfigFile”:”/root/.oci/config”, “compatibility”: [“strip_definers”, “strip_restricted_grants”]}) To export particular table(let say city as table) below command to be executed on Azure VM util.dumpTables([“sakila”], [“city”],”sakilaDBdump”,{threads:30,”osBucketName”: “chandanBucket”, “osNamespace”: “idazzjlcjqzj”, “ocimds”: “true”,”ociConfigFile”:”/root/.oci/config”, “compatibility”: [“strip_definers”, “strip_restricted_grants”]}) |

| Where:- Threads: n The number of parallel threads to use to dump chunks of data from the MySQL instance. Each thread has its own connection to the MySQL instance. The default is 4. osBucketName:<bucket name> The name of the Oracle Cloud Infrastructure Object Storage bucket to which the dump is to be written. By default, the [DEFAULT] profile in the Oracle Cloud Infrastructure CLI configuration file located at ~/.oci/config is used to establish a connection to the bucket. You can substitute an alternative profile to be used for the connection with the ociConfigFile and ociProfile options. osNamespace:<string> The Oracle Cloud Infrastructure namespace where the Object Storage bucket named by osBucketName is located. The namespace for an Object Storage bucket is displayed in the Bucket Information tab of the bucket details page in the Oracle Cloud Infrastructure console, or can be obtained using the Oracle Cloud Infrastructure command line interface. ociConfigFile:<string> An Oracle Cloud Infrastructure CLI configuration file that contains the profile to use for the connection, instead of the one in the default location ~/.oci/config. |

In this blog , we will explore particular schema migration from azure for MySQL to MDS

| MySQL azuremysql-dbs.mysql.database.azure.com:3306 ssl JS > util.dumpSchemas([“sakila”],”sakilaDBdump”,{threads:30,”osBucketName”: “chandanBucket”, “osNamespace”: “idazzjlcjqzj”, “ocimds”: “true”,”ociConfigFile”:”/root/.oci/config”, “compatibility”: [“strip_definers”, “strip_restricted_grants”]}) Acquiring global read lock Global read lock acquired Gathering information – done All transactions have been started Locking instance for backup NOTE: Backup lock is not supported in MySQL 5.7 and DDL changes will not be blocked. The dump may fail with an error or not be completely consistent if schema changes are made while dumping. Global read lock has been released Checking for compatibility with MySQL Database Service 8.0.23 NOTE: MySQL Server 5.7 detected, please consider upgrading to 8.0 first. You can check for potential upgrade issues using util.checkForServerUpgrade(). NOTE: Procedure sakila.film_not_in_stock had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: Procedure sakila.rewards_report had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: Procedure sakila.film_in_stock had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: View sakila.nicer_but_slower_film_list had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: View sakila.sales_by_film_category had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: View sakila.actor_info had definer clause removed NOTE: View sakila.film_list had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: View sakila.sales_by_store had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: View sakila.staff_list had definer clause removed and SQL SECURITY characteristic set to INVOKER NOTE: View sakila.customer_list had definer clause removed and SQL SECURITY characteristic set to INVOKER Compatibility issues with MySQL Database Service 8.0.23 were found and repaired. Please review the changes made before loading them. Writing global DDL files Writing DDL for schema `sakila` Preparing data dump for table `sakila`.`actor` Data dump for table `sakila`.`actor` will be chunked using column `actor_id` Preparing data dump for table `sakila`.`store` Data dump for table `sakila`.`store` will be chunked using column `store_id` Preparing data dump for table `sakila`.`customer` Data dump for table `sakila`.`customer` will be chunked using column `customer_id` Preparing data dump for table `sakila`.`country` Writing DDL for view `sakila`.`nicer_but_slower_film_list` Data dump for table `sakila`.`country` will be chunked using column `country_id` Writing DDL for view `sakila`.`sales_by_film_category` Preparing data dump for table `sakila`.`language` Data dump for table `sakila`.`language` will be chunked using column `language_id` Preparing data dump for table `sakila`.`staff` Data dump for table `sakila`.`staff` will be chunked using column `staff_id` Preparing data dump for table `sakila`.`film` Writing DDL for view `sakila`.`actor_info` Data dump for table `sakila`.`film` will be chunked using column `film_id` Preparing data dump for table `sakila`.`film_text` Data dump for table `sakila`.`film_text` will be chunked using column `film_id` Preparing data dump for table `sakila`.`payment` Data dump for table `sakila`.`payment` will be chunked using column `payment_id` Preparing data dump for table `sakila`.`film_actor` Data dump for table `sakila`.`film_actor` will be chunked using column `actor_id` Preparing data dump for table `sakila`.`film_category` Data dump for table `sakila`.`film_category` will be chunked using column `film_id` Preparing data dump for table `sakila`.`inventory` Data dump for table `sakila`.`inventory` will be chunked using column `inventory_id` Preparing data dump for table `sakila`.`rental` Data dump for table `sakila`.`rental` will be chunked using column `rental_id` Preparing data dump for table `sakila`.`category` Data dump for table `sakila`.`category` will be chunked using column `category_id` Writing DDL for view `sakila`.`film_list` Preparing data dump for table `sakila`.`address` Data dump for table `sakila`.`address` will be chunked using column `address_id` Preparing data dump for table `sakila`.`city` Data dump for table `sakila`.`city` will be chunked using column `city_id` Running data dump using 30 threads. NOTE: Progress information uses estimated values and may not be accurate. Writing DDL for view `sakila`.`sales_by_store` Writing DDL for view `sakila`.`customer_list` Writing DDL for view `sakila`.`staff_list` Writing DDL for table `sakila`.`actor` Writing DDL for table `sakila`.`store` Writing DDL for table `sakila`.`customer` Writing DDL for table `sakila`.`country` Writing DDL for table `sakila`.`language` Writing DDL for table `sakila`.`staff` Writing DDL for table `sakila`.`film` Writing DDL for table `sakila`.`film_text` Writing DDL for table `sakila`.`payment` Writing DDL for table `sakila`.`film_actor` Writing DDL for table `sakila`.`film_category` Writing DDL for table `sakila`.`inventory` Writing DDL for table `sakila`.`rental` Writing DDL for table `sakila`.`category` Writing DDL for table `sakila`.`address` Writing DDL for table `sakila`.`city` Data dump for table `sakila`.`store` will be written to 1 file Data dump for table `sakila`.`actor` will be written to 1 file Data dump for table `sakila`.`customer` will be written to 1 file Data dump for table `sakila`.`country` will be written to 1 file Data dump for table `sakila`.`staff` will be written to 1 file Data dump for table `sakila`.`language` will be written to 1 file Data dump for table `sakila`.`film` will be written to 1 file Data dump for table `sakila`.`film_category` will be written to 1 file Data dump for table `sakila`.`payment` will be written to 1 file Data dump for table `sakila`.`film_text` will be written to 1 file Data dump for table `sakila`.`film_actor` will be written to 1 file Data dump for table `sakila`.`rental` will be written to 1 file Data dump for table `sakila`.`inventory` will be written to 1 file Data dump for table `sakila`.`category` will be written to 1 file Data dump for table `sakila`.`address` will be written to 1 file Data dump for table `sakila`.`city` will be written to 1 file 1 thds dumping – 100% (46.27K rows / ~46.27K rows), 1.61K rows/s, 96.26 KB/s uncompressed, 0.00 B/s compressed Duration: 00:00:01s Schemas dumped: 1 Tables dumped: 16 Uncompressed data size: 2.92 MB Compressed data size: 557.10 KB Compression ratio: 5.2 Rows written: 46273 Bytes written: 557.10 KB Average uncompressed throughput: 1.87 MB/s Average compressed throughput: 356.39 KB/s MySQL azuremysql-dbs.mysql.database.azure.com:3306 ssl JS > |

| You may cross check whether DUMP has been exported to Oracle Cloud Object Storage or not

|

Import Dump file into MySQL Database Service (MDS), from OCI Object Storage.

First is to install OCI CLI by following below web links

https://docs.oracle.com/en-us/iaas/Content/API/SDKDocs/cliinstall.htm

For import into a MySQL DB Service (MDS), the MySQL Shell instance where you run the dump loading utility must be installed on an Oracle Cloud Infrastructure Compute instance that has access to the MySQL DB System.

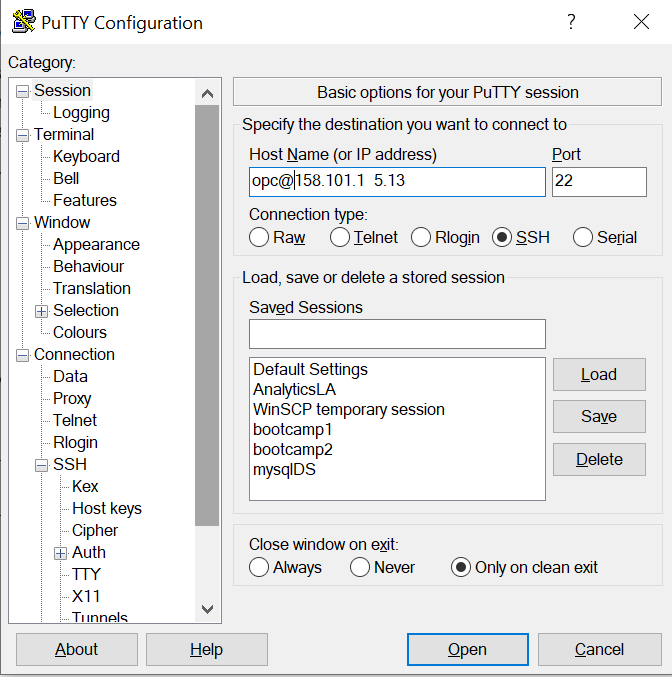

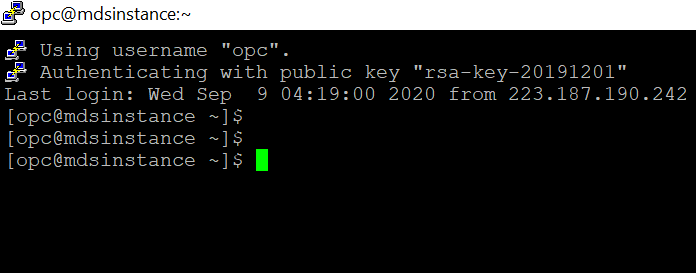

Let’s connect Oracle Cloud Compute Instance ,and check whether MDS Instance you are able to connect or not ?

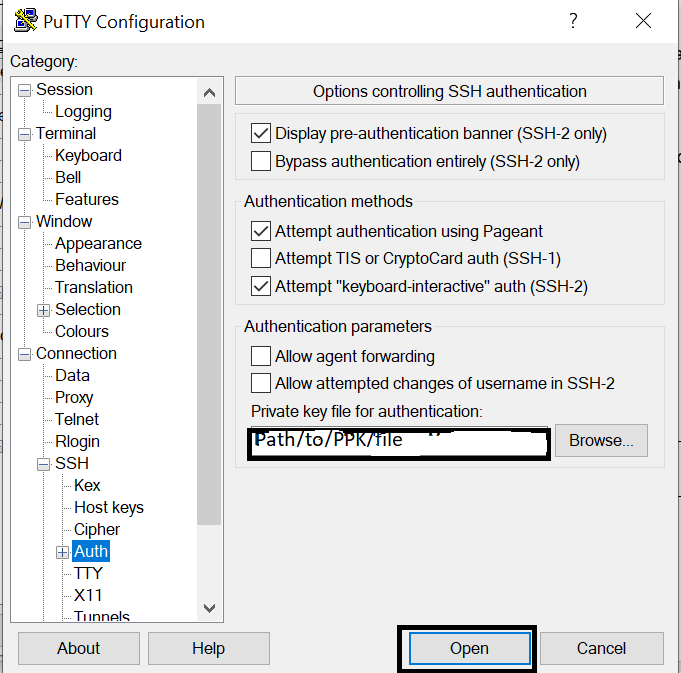

[Windows User] How to connect Compute Instance – open putty and keep public IP into it , see below screen shot.

In case putty is not installed in your local machine(laptop) , install by following the below link

https://www.putty.org/

In case you wanted to start from the begining,how to deploy MDS Instance ? Following blog will help:-

https://docs.oracle.com/en-us/iaas/mysql-database/doc/getting-started.html

Assuming that MDS instance is up and running let’s Connect MDS instance

Connect MySQL Shell to Import

# mysqlsh -h10.0.x.3 -uadmin -pxxxxxxx

MySQL 10.0.1.3:33060+ ssl JS > \sql

Switching to SQL mode… Commands end with ;

MySQL 10.0.1.3:33060+ ssl SQL > show databases;

+——————–+

| Database |

+——————–+

| information_schema |

| mysql |

| performance_schema |

| sys |

+——————–+

4 rows in set (0.00 sec)

Let’s run DRY RUN command to check that there will be no issues when the dump file is imported from a object storage into the connected MDS instance.

DRY RUN scripts:-

util.loadDump(“sakilaDBdump”,{dryRun: true, osBucketName: “chandanBucket”, osNamespace: “idazzjlcjqzj”,”ociConfigFile”:”/root/.oci/config”})

| MySQL 10.0.1.3:33060+ ssl JS >util.loadDump(“sakilaDBdump”,{dryRun: true, osBucketName: “chandanBucket”, osNamespace: “idazzjlcjqzj”,”ociConfigFile”:”/root/.oci/config”}) MySQL 10.0.1.3:33060+ ssl JS > util.loadDump(“sakilaDBdump”,{dryRun: true, osBucketName: “chandanBucket”, osNamespace: “idazzjlcjqzj”,”ociConfigFile”:”/root/.oci/config”}) Loading DDL and Data from OCI ObjectStorage bucket=chandanBucket, prefix=’sakilaDBdump’ using 4 threads. Opening dump… dryRun enabled, no changes will be made. Target is MySQL 8.0.23-u2-cloud. Dump was produced from MySQL 8.0.15 Fetching dump data from remote location… Fetching 16 table metadata files for schema `sakila`… Checking for pre-existing objects… Executing common preamble SQL Executing DDL script for schema `sakila` [Worker001] Executing DDL script for `sakila`.`city` [Worker003] Executing DDL script for `sakila`.`category` [Worker000] Executing DDL script for `sakila`.`inventory` [Worker002] Executing DDL script for `sakila`.`address` [Worker001] Executing DDL script for `sakila`.`film_category` [Worker003] Executing DDL script for `sakila`.`customer` [Worker000] Executing DDL script for `sakila`.`staff` [Worker001] Executing DDL script for `sakila`.`rental` [Worker002] Executing DDL script for `sakila`.`country` [Worker003] Executing DDL script for `sakila`.`store` [Worker001] Executing DDL script for `sakila`.`language` [Worker000] Executing DDL script for `sakila`.`actor` [Worker002] Executing DDL script for `sakila`.`film` [Worker003] Executing DDL script for `sakila`.`film_text` (indexes removed for deferred creation) [Worker000] Executing DDL script for `sakila`.`film_actor` [Worker001] Executing DDL script for `sakila`.`payment` [Worker002] Executing DDL script for `sakila`.`nicer_but_slower_film_list` (placeholder for view) [Worker003] Executing DDL script for `sakila`.`sales_by_film_category` (placeholder for view) [Worker001] Executing DDL script for `sakila`.`film_list` (placeholder for view) [Worker001] Executing DDL script for `sakila`.`customer_list` (placeholder for view) [Worker002] Executing DDL script for `sakila`.`sales_by_store` (placeholder for view) [Worker003] Executing DDL script for `sakila`.`staff_list` (placeholder for view) [Worker000] Executing DDL script for `sakila`.`actor_info` (placeholder for view) Executing common postamble SQL No data loaded. 0 warnings were reported during the load. MySQL 10.0.1.3:33060+ ssl JS > |

Since , above report shows NO ERROR , hence we are good to Import the data from object storage

Script to Import DUMP file from Object Storage to MySQL Database System

| util.loadDump(“sakilaDBdump”,{osBucketName: “chandanBucket”, osNamespace: “idazzjlcjqzj”,”ociConfigFile”:”/root/.oci/config”}) |

| MySQL 10.0.1.3:33060+ ssl JS > util.loadDump(“sakilaDBdump”,{osBucketName: “chandanBucket”, osNamespace: “idazzjlcjqzj”,”ociConfigFile”:”/root/.oci/config”}) Loading DDL and Data from OCI ObjectStorage bucket=chandanBucket, prefix=’sakilaDBdump’ using 4 threads. Opening dump… Target is MySQL 8.0.23-u2-cloud. Dump was produced from MySQL 8.0.15 Fetching dump data from remote location… Fetching 16 table metadata files for schema `sakila`… Checking for pre-existing objects… Executing common preamble SQL Executing DDL script for schema `sakila` [Worker003] Executing DDL script for `sakila`.`address` [Worker002] Executing DDL script for `sakila`.`category` [Worker001] Executing DDL script for `sakila`.`city` [Worker000] Executing DDL script for `sakila`.`inventory` [Worker003] Executing DDL script for `sakila`.`customer` [Worker002] Executing DDL script for `sakila`.`film_category` [Worker001] Executing DDL script for `sakila`.`country` [Worker000] Executing DDL script for `sakila`.`staff` [Worker003] Executing DDL script for `sakila`.`rental` [Worker002] Executing DDL script for `sakila`.`store` [Worker000] Executing DDL script for `sakila`.`language` [Worker001] Executing DDL script for `sakila`.`actor` [Worker003] Executing DDL script for `sakila`.`film` [Worker002] Executing DDL script for `sakila`.`film_text` (indexes removed for deferred creation) [Worker000] Executing DDL script for `sakila`.`payment` [Worker001] Executing DDL script for `sakila`.`film_actor` [Worker003] Executing DDL script for `sakila`.`nicer_but_slower_film_list` (placeholder for view) [Worker002] Executing DDL script for `sakila`.`sales_by_film_category` (placeholder for view) [Worker000] Executing DDL script for `sakila`.`actor_info` (placeholder for view) [Worker001] Executing DDL script for `sakila`.`film_list` (placeholder for view) [Worker003] Executing DDL script for `sakila`.`sales_by_store` (placeholder for view) [Worker000] Executing DDL script for `sakila`.`customer_list` (placeholder for view) [Worker002] Executing DDL script for `sakila`.`staff_list` (placeholder for view) Executing DDL script for view `sakila`.`actor_info` Executing DDL script for view `sakila`.`nicer_but_slower_film_list` Executing DDL script for view `sakila`.`sales_by_film_category` Executing DDL script for view `sakila`.`film_list` Executing DDL script for view `sakila`.`sales_by_store` Executing DDL script for view `sakila`.`customer_list` Executing DDL script for view `sakila`.`staff_list` [Worker001] sakila@staff@@0.tsv.zst: Records: 2 Deleted: 0 Skipped: 0 Warnings: 0 [Worker003] sakila@film@@0.tsv.zst: Records: 1000 Deleted: 0 Skipped: 0 Warnings: 0 [Worker001] sakila@address@@0.tsv.zst: Records: 603 Deleted: 0 Skipped: 0 Warnings: 0 [Worker003] sakila@film_actor@@0.tsv.zst: Records: 5462 Deleted: 0 Skipped: 0 Warnings: 0 [Worker003] sakila@customer@@0.tsv.zst: Records: 599 Deleted: 0 Skipped: 0 Warnings: 0 [Worker003] sakila@city@@0.tsv.zst: Records: 600 Deleted: 0 Skipped: 0 Warnings: 0 [Worker001] sakila@inventory@@0.tsv.zst: Records: 4581 Deleted: 0 Skipped: 0 Warnings: 0 [Worker003] sakila@film_category@@0.tsv.zst: Records: 1000 Deleted: 0 Skipped: 0 Warnings: 0 [Worker001] sakila@actor@@0.tsv.zst: Records: 200 Deleted: 0 Skipped: 0 Warnings: 0 [Worker003] sakila@country@@0.tsv.zst: Records: 109 Deleted: 0 Skipped: 0 Warnings: 0 [Worker001] sakila@category@@0.tsv.zst: Records: 16 Deleted: 0 Skipped: 0 Warnings: 0 [Worker003] sakila@language@@0.tsv.zst: Records: 6 Deleted: 0 Skipped: 0 Warnings: 0 [Worker001] sakila@store@@0.tsv.zst: Records: 2 Deleted: 0 Skipped: 0 Warnings: 0 [Worker002] sakila@rental@@0.tsv.zst: Records: 16044 Deleted: 0 Skipped: 0 Warnings: 0 [Worker000] sakila@payment@@0.tsv.zst: Records: 16049 Deleted: 0 Skipped: 0 Warnings: 0 Executing common postamble SQL 15 chunks (46.27K rows, 2.92 MB) for 15 tables in 1 schemas were loaded in 2 sec (avg throughput 1.46 MB/s) 0 warnings were reported during the load. MySQL 10.0.1.3:33060+ ssl JS > |

Finally Import Has been done successfully.

Let’s verify The results

| MySQL 10.0.1.3:33060+ ssl JS > \sql Switching to SQL mode… Commands end with ; MySQL 10.0.1.3:33060+ ssl SQL > show databases; +——————–+ | Database | +——————–+ | information_schema | | mysql | | performance_schema | | sakila | | sys | +——————–+ 5 rows in set (0.0020 sec) Alright, you can see “Sakila” database available now, which means that migration happened successfully!! MySQL 10.0.1.3:33060+ ssl SQL > use sakila; Default schema set to `sakila`. Fetching table and column names from `sakila` for auto-completion… Press ^C to stop. MySQL 10.0.1.3:33060+ ssl sakila SQL > select count(*) from city; +———-+ | count(*) | +———-+ | 600 | +———-+ 1 row in set (0.0025 sec) MySQL 10.0.1.3:33060+ ssl sakila SQL > show tables; +—————————-+ | Tables_in_sakila | +—————————-+ | actor | | actor_info | | address | | category | | city | | country | | customer | | customer_list | | film | | film_actor | | film_category | | film_list | | film_text | | inventory | | language | | nicer_but_slower_film_list | | payment | | rental | | sales_by_film_category | | sales_by_store | | staff | | staff_list | | store | +—————————-+ 23 rows in set (0.0019 sec) MySQL 10.0.1.3:33060+ ssl sakila SQL >

|

Conclusion:-

Migration happened successfully!!!

MySQL Shell Instance DUMP and LOAD utility makes migration and daily operational job seamless ,very very easy to migrate from different sources into MDS and easier to use, by providing an interactive MySQL client supporting SQL, Document Store, JavaScript & Python interface with support for writing custom extensions.

MySQL Database Service is a fully managed database service that enables organizations to deploy cloud-native applications using the world’s most popular open source database. It is 100% developed, managed and supported by the MySQL Team.