Introducing Bulk Ingest Functionality in MySQL HeatWave on AWS

Overview

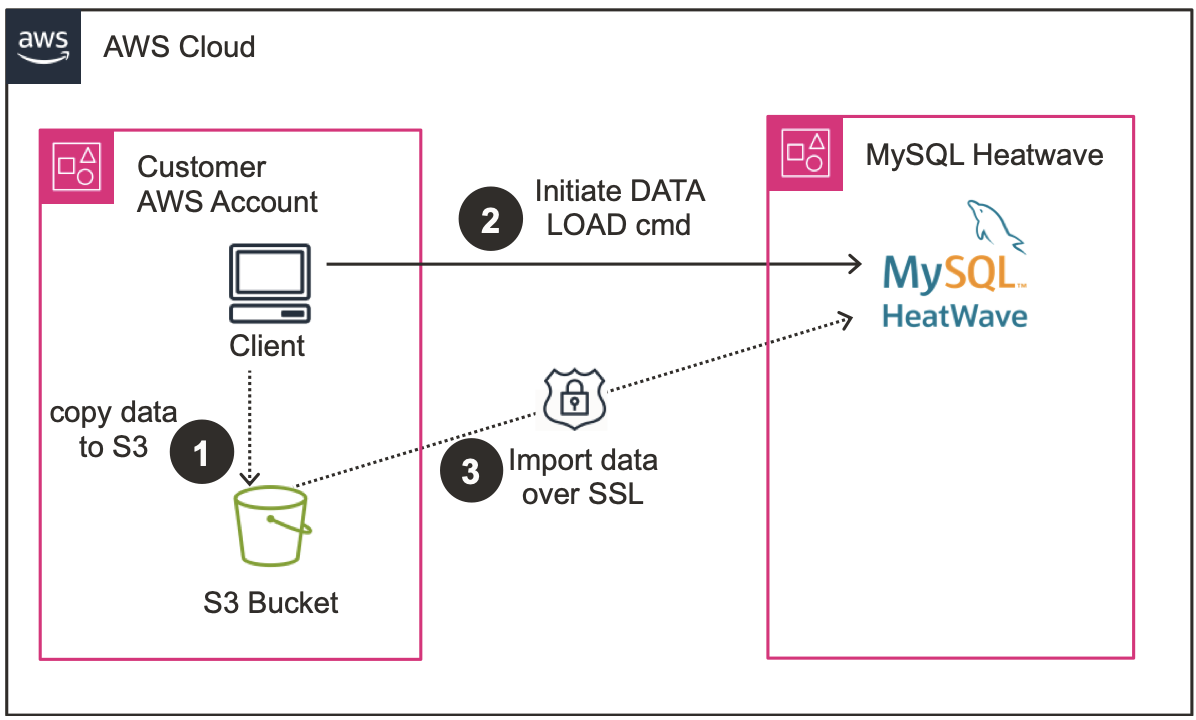

MySQL HeatWave on AWS provides a MySQL native data import functionality (LOAD DATA statement) to import data from a client machine into a table in MySQL. This is convenient if you only want to import a small data set into MySQL HeatWave. However, enterprise customers, who have their applications and workloads running on AWS, store their data on Amazon S3, and often times, the data sets are large.

To facilitate organizations to import large data set from Amazon S3 into MySQL HeatWave, we have enhanced the MySQL native data import functionality to support direct import of data files stored on Amazon S3 into MySQL without incurring data transfer cost and introduced the new bulk ingest algorithm to provide higher data import performance with low memory usage.

The existing LOAD DATA statement in MySQL is extended with

- FROM S3 s3-uri to allow MySQL server to read data files directly from S3

- ALGROITHM=BULK to indicate the use of the new Bulk Ingest algorithm for fast data import

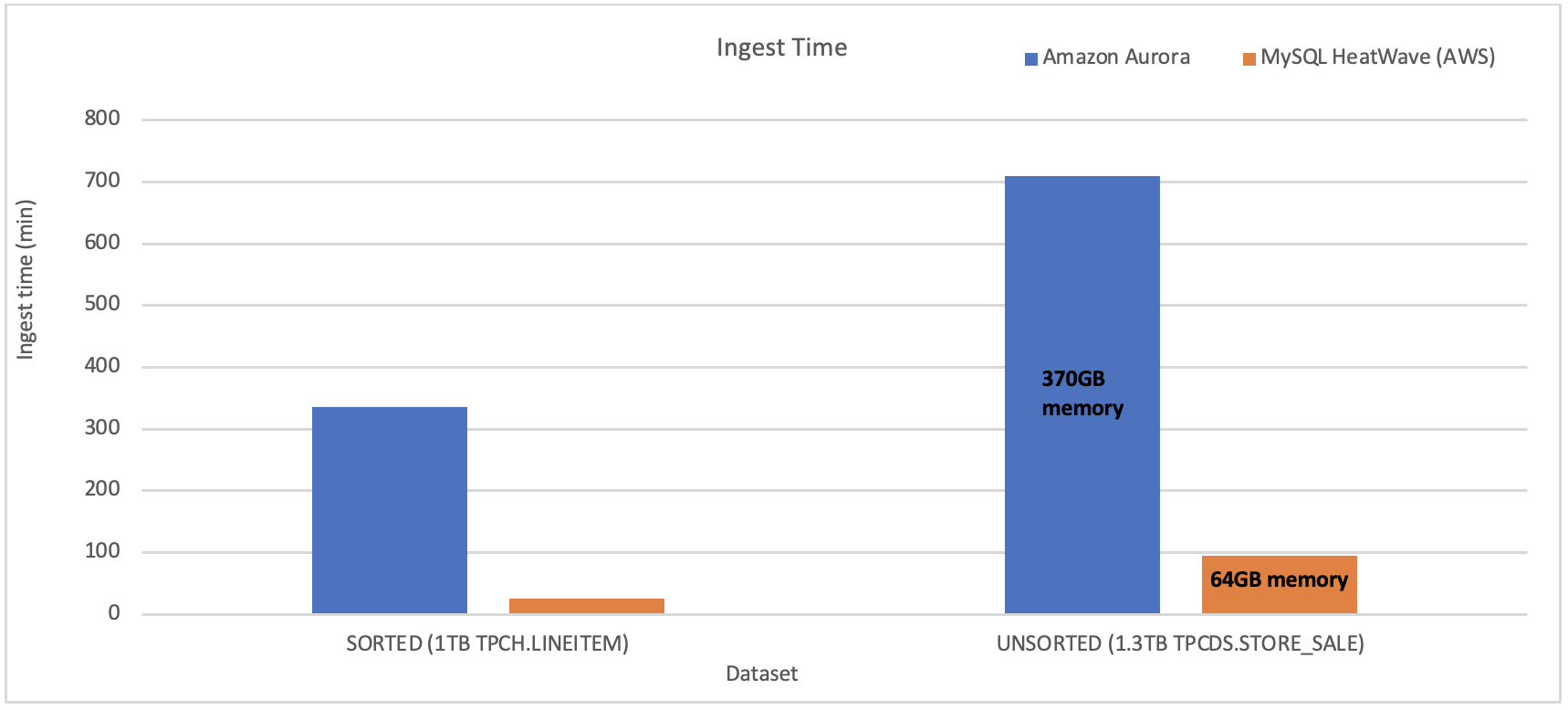

Compared to AWS Aurora MySQL, importing a 1TB data set with data already sorted in the data file in AWS S3, MySQL HeatWave on AWS is 12.4X faster. For data that is not sorted, MySQL HeatWave on AWS is 7.5X faster and uses 5.8X less memory than Aurora.

How it works

MySQL HeatWave has been enhanced in two aspects:

- Integration with AWS Identity and Access Management (IAM) to securely access data in customer’s AWS account:

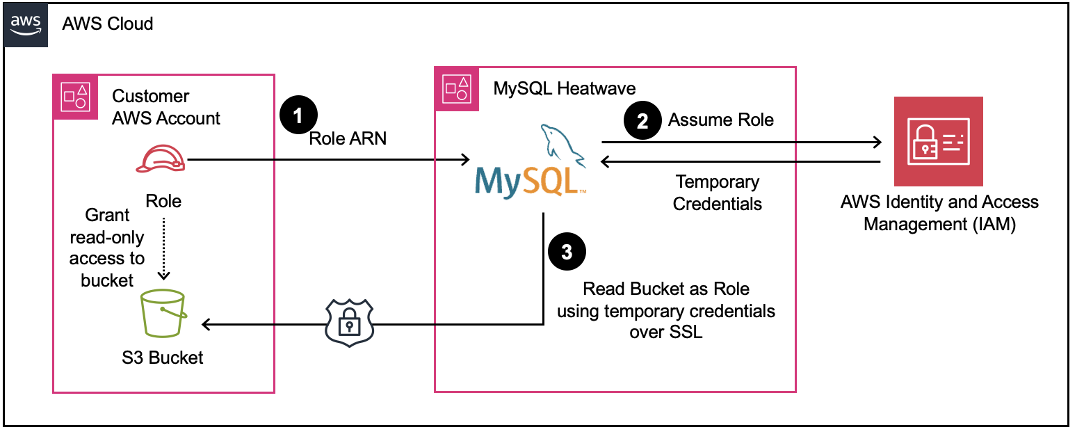

The diagram above depicts how a MySQL HeatWave database system accesses data files stored in AWS S3 in customer’s account.

MySQL HeatWave on AWS utilizes cross-account roles mechanism provided by AWS Identity and Access Management (IAM) service. With this mechanism, customers can delegate S3 access permission to a MySQL HeatWave database system.

To achieve this, first the customer needs to create an AWS IAM role in their AWS account with a trust policy and a permission policy. The trust policy specifies who can assume this IAM role (i.e., a MySQL HeatWave database system hosted in the MySQL HeatWave service’s AWS account), while the permission policy specifies what the role allows the corresponding MySQL HeatWave database system to do (i.e., read-only access to the objects in the S3 bucket).

Once the customer associates this role with a MySQL HeatWave database system (using the MySQL HeatWave on AWS service console), only during bulk ingest, the database system assumes this IAM role to retrieve the temporary credentials from AWS IAM to access customer’s data in S3.

- Bulk ingest performance improvements:

The default algorithm employed by LOAD DATA command performs in-place insertions into the B+ tree index. This results in frequent disk I/O operations to read and write index pages if consecutive insertions do not exhibit index page locality. Moreover, when multiple LOAD DATA commands are issued to load multiple files into the same table, insertions with overlapping paths in the B+ tree traversal contend for the B+ tree latches.

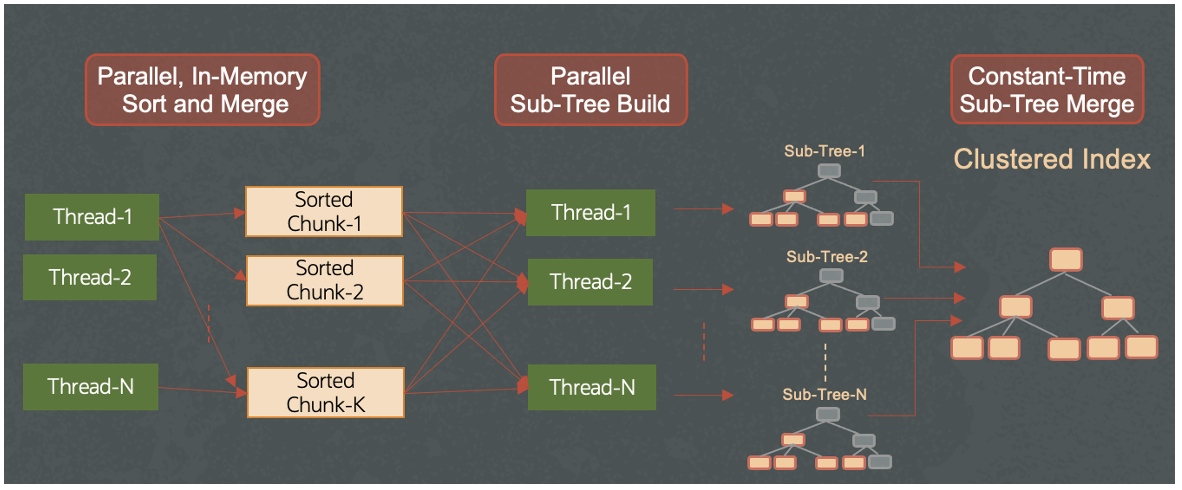

The new bulk ingest algorithm elimates these performance bottlenecks by avoiding in-place insertions into the index B+ tree. Instead, the new bulk ingest algorithm heavily relies on in-memory sorting and merging of smaller portions of the input data with multiple threads and constructs the final B+ tree as the very final step.

As shown in the diagram above, bulk ingest loads data in three phases:

Phase 1: If input is not already sorted, multiple threads in parallel sort portions of input data in memory, then each thread merges its dedicated partition of data in parallel to form sorted chunks.

Phase 2: Multiple threads build multiple disjoint sub-trees from these sorted chunks in parallel and write them on to disk.

Phase 3: The dis-joint sub-trees are merged into one single B+ tree representing the final clustered index.

The most important benefits of bulk ingest algorithm are:

- Compared to the default LOAD DATA algorithm, bulk ingest eliminates the contention on B+ tree latches, since in-memory sorting and merge are performed by multiple threads in parallel before the final B+ is formed.

- Bulk ingest eliminates frequent random disk IO, thanks to its in-memory sort and merge, which results in sequential writes of sorted data into disk.

- Bulk ingest algorithm is heavily pipelined, resulting in high overlap of compute and disk IO.

Example to import data on AWS S3 into MySQL HeatWave using Bulk Ingest

This example demonstrates how to import data hosted in an AWS S3 bucket into a MySQL HeatWave DB System using bulk ingest.

For this example, the sample data files corresponding to the booking table of AirportDB are already uploaded to AWS S3, and the AWS IAM role, which can be associated with a MySQL HeatWave DB System to grant access to the S3 bucket is already created.

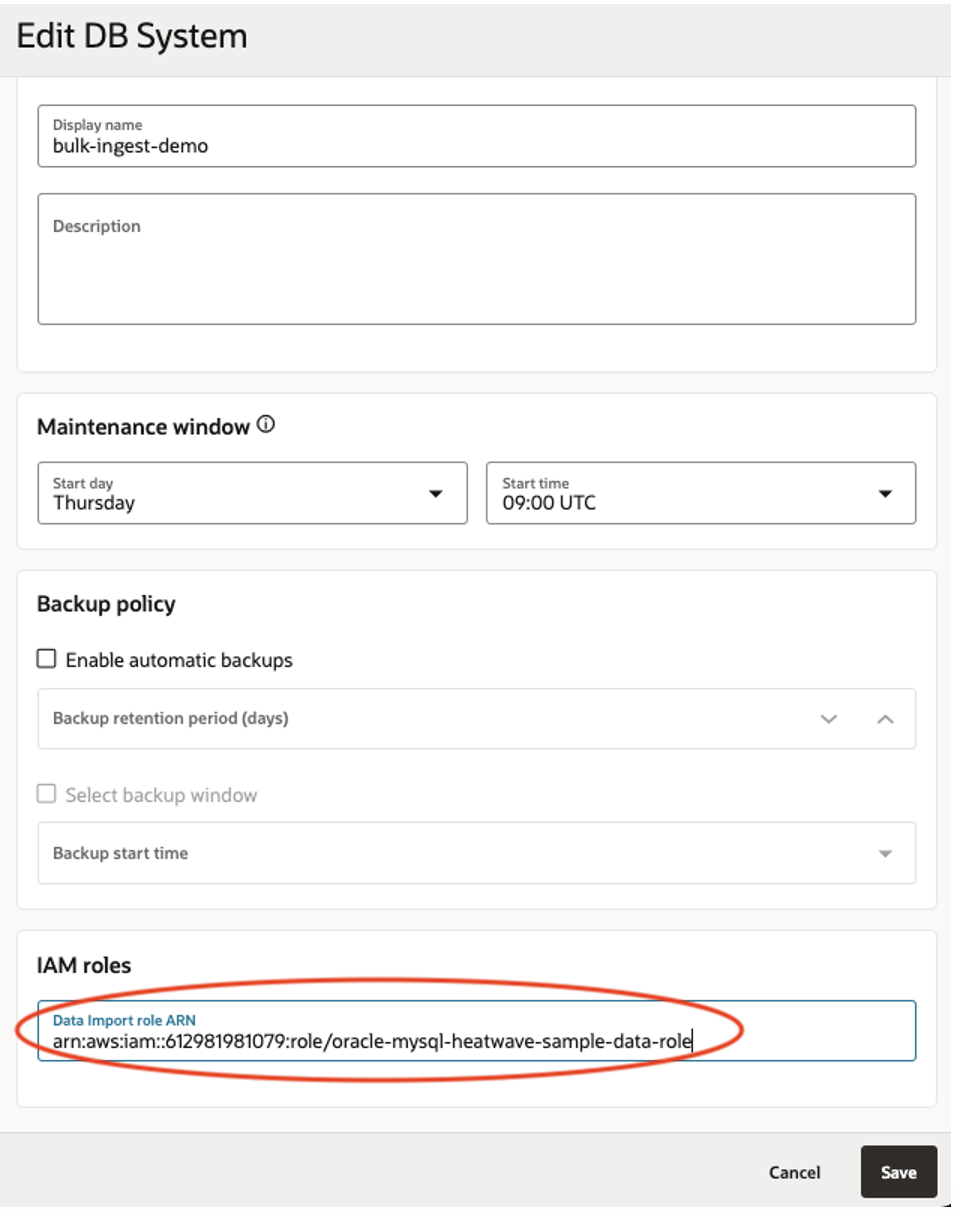

AWS IAM Role:

arn:aws:iam::612981981079:role/oracle-mysql-heatwave-sample-data-role

S3 URI of the booking table files (assuming will be imported by a DB System in us-east-1 region):

s3-us-east-1://oracle-mysql-heatwave-sample-data-us-east-1/published/airportdb/v1/tsv/booking.tsv

- Grant access to MySQL HeatWave instance

To grant an existing MySQL HeatWave database system access to the sample data in S3, goto MySQL HeatWave service console, edit the DB System to associate the AWS IAM role with the DB System.

- Import data using bulk ingest

Once the IAM role is associated with the DB System, you can import the data using bulk ingest.

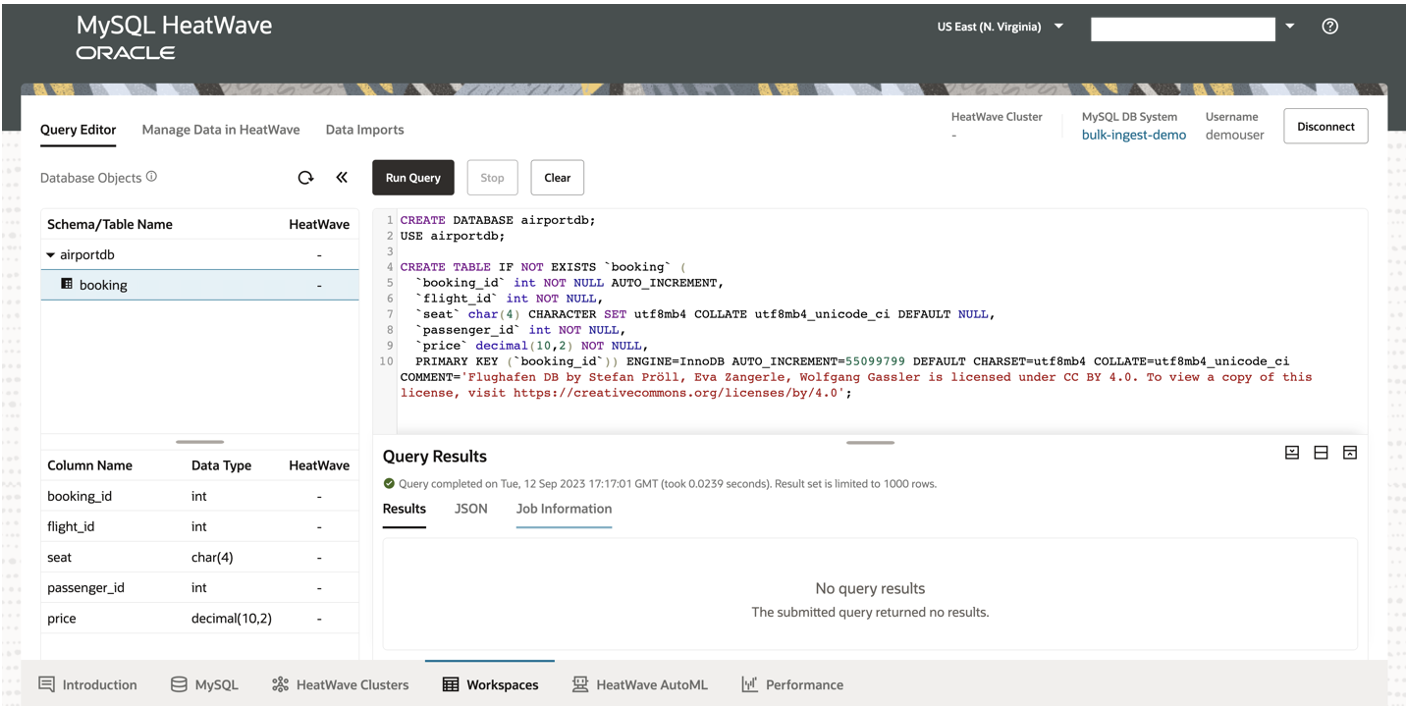

- Connect to your MySQL HeatWave DB System using the Query Editor in the console.

- Create the airportdb database and the booking table.

- CREATE DATABASE airportdb;

- USE airportdb;

- CREATE TABLE IF NOT EXISTS `booking` (

`booking_id` int NOT NULL AUTO_INCREMENT,

`flight_id` int NOT NULL,

`seat` char(4) CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci DEFAULT NULL,

`passenger_id` int NOT NULL,

`price` decimal(10,2) NOT NULL,

PRIMARY KEY (`booking_id`)) ENGINE=InnoDB AUTO_INCREMENT=55099799 DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_unicode_ci COMMENT=’Flughafen DB by Stefan Pröll, Eva Zangerle, Wolfgang Gassler is licensed under CC B Y 4.0. To view a copy of this license, visit https://creativecommons.org/licenses/by/4.0′;

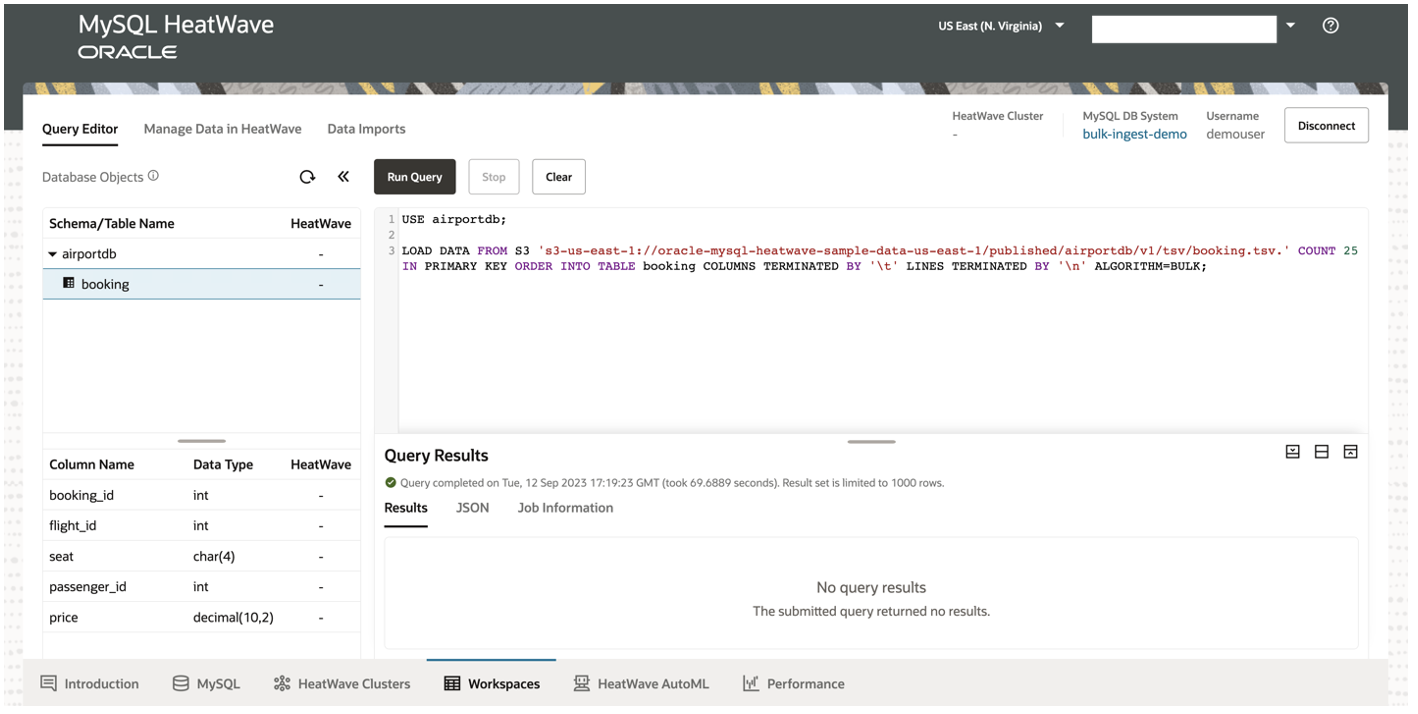

- Once the table is created, you can use bulk ingest to import data from S3 into the table:

In this example, sample data is split into 25 files, following the naming format booking.tsv.1, booking.tsv.2, etc. Splitting the input into multiple files is beneficial to improve the data ingest performance.

- LOAD DATA FROM S3 ‘s3-us-east-1://oracle-mysql-heatwave-sample-data-us-east-1/published/airportdb/v1/tsv/booking.tsv.’ COUNT 25 IN PRIMARY KEY ORDER INTO TABLE booking COLUMNS TERMINATED BY ‘\t’ LINES TERMINATED BY ‘\n’ ALGORITHM=BULK;

Summary

The enhanced bulk ingest provides a secured and fast MySQL native import functionality to directly import data on Amazon S3. It is 10X faster than AWS Aurora, enabling organizations to migrate their existing workload or import any customer or application data mush easier for further processing.