Large directories in the ext4 filesystem face a unique problem when the majority of the files in the directory are deleted. This situation can cause functions like sys_getdents to experiance a significant slowdown, which might even cause the system to hang. This blog post explains why this occurs and what you can do if you encounter this problem. It’s important to note that this isn’t a bug but rather how ext4 manages large directories.

To fully understand the issue and its workaround, the knowledge of the structure and operation of htree is essential. For those not familiar with htree, a brief overview is provided here. However, we recommend to read more about htree in the htree section of this blog.

A Brief Overview

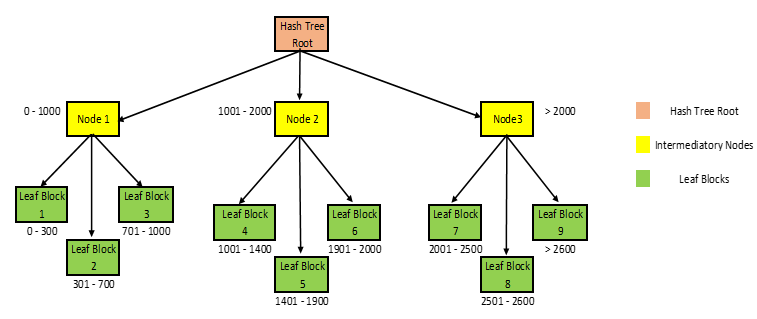

Below is a simple graphical representation of a hash tree (htree) with a depth of one.

The htree root and nodes direct you to the next level of nodes or to the leaf blocks. The leaf blocks store directory entries (dirents). Each leaf block is associated with a specific range of hash values, and it stores the dirents whose hash falls within this range.

For instance, if a file with a hash value of 1500 is added, it will be placed in leaf block 5 because its hash value is within the hash range of this block.

Inside a Leaf Block

In this section, let’s examine the structure of a leaf block in a directory with 1000 files named file1 to file1000 by looking at its hexdump.

The on-disk structure of a dirent includes the inode number, directory entry length (rec_len), name length, file type, and file name.

struct ext4_dir_entry_2 {

__le32 inode; /* Inode number of the file */

__le16 rec_len; /* Directory entry length */

__u8 name_len; /* Name length of the file*/

__u8 file_type;

char name[EXT4_NAME_LEN]; /* File name */

};

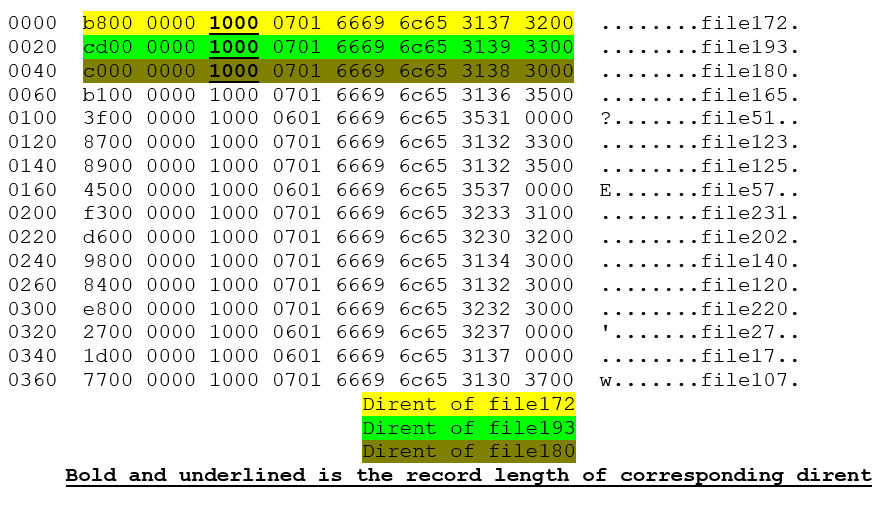

The hexdump:

In the image, you can see the file names file* in the ASCII section.

Three directory entries (dirents) corresponding to file172, file193, and file180 are highlighted, with their respective record lengths (rec_len) highlighted in bold and underlined text. All three have the same rec_len of 16 bytes (0x10).

The rec_len for any dirent is calculated using the formula:

rec_len = (name_len + 11) & (~3)

For the highlighted dirents, the name length (name_len) is 7, so rec_len = (7+11) & (~3) = 16. Thus, the rec_len for all there dirents is 16.

What Happens Upon Deletion?

Now, we shall examine the hexdump of the same leaf block after deleting some files from the directory.

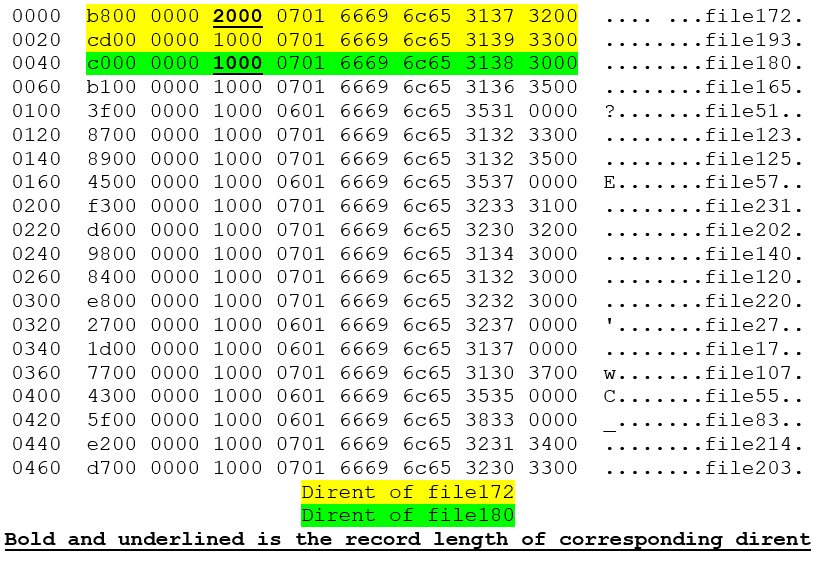

Below is the hexdump of the same leaf block after deleting the file named file193 from the directory.

Looking at the ASCII section, we still see the dirent of the deleted file file193. This raises the question, “How did the dirent of a deleted file remain in the directory block?”. If we observe carefully, the record length (rec_len) of file172 has changed to 32 bytes (0x20) from its previous 16 bytes (0x10). So, what’s happening here?

The explanation is that when a file is deleted from a directory, ext4 does not remove its directory entry (dirent) from the leaf block. Instead, it simply increases the rec_len of the preceding dirent so that it completely overlaps the deleted dirent.

You might wonder if this space is permanently assigned to the preceding dirent. The answer is no.

For example, in the future, suppose a new dirent is created, and its hash value falls within this leaf block’s range, therefore the new dirent has to be inserted into this block. During insertion, ext4 looks for the best possible location for this new dirent. If it finds any dirent whose on-disk rec_len is greater than its calculated rec_len (as per the formula mentioned earlier) and the difference between both rec_lens is at least as large as the new dirent’s calculated rec_len, then the new dirent will be placed after this particular dirent, and its rec_len will be adjusted accordingly. If no suitable location is found, the new dirent is simply added to the end. If there’s no space left for the new dirent, a block split occurs, this topic is beyond the scope of this article but is briefly discussed in this blog.

Let’s also consider what happens if all the dirents in a particular leaf are deleted.

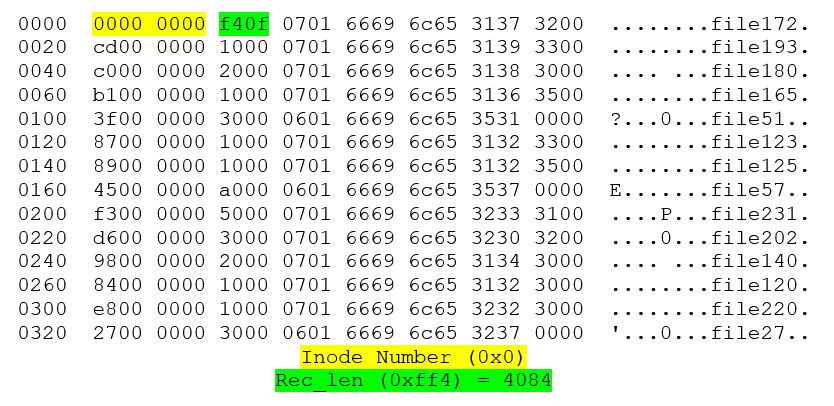

The hexdump below shows what a completely empty leaf block looks like:

The inode number of the first dirent is set to 0, and its rec_len is adjusted to 4084, which spans the entire block, leaving the last 12 bytes for the checksum.

The key takeaway is that even though the entire leaf block is empty, it is not freed but remains with the directory. This leaf block will not be reused until new dirents, whose hash values fall within this block’s hash range, are created.

If we have a large directory with 1 million dirents, it could potentially use almost 1GiB of disk space. Later, if most files are deleted for some reason, lets say 99% of the dirents are deleted. Even after this massive deletion, the directory will still use 1GiB of disk space. When functions like get_sysdents are called on such directories, they will take significantly longer to return, spending most of their time traversing empty leaf blocks.

Demonstrating the Issue

In this section, we will demonstrate the issue in detail:

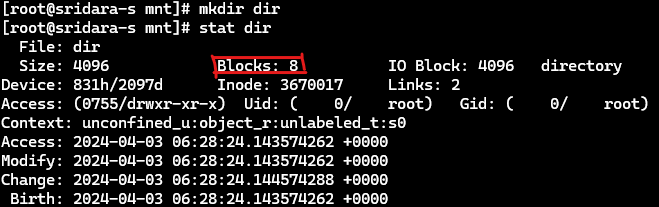

First, a new directory named dir is created:

This directory is initially empty and has 8 allocated blocks.

Next, almost 6 million new files are created in this directory (named file1, file2, file3 and so on), which increased its size to 330456 blocks.

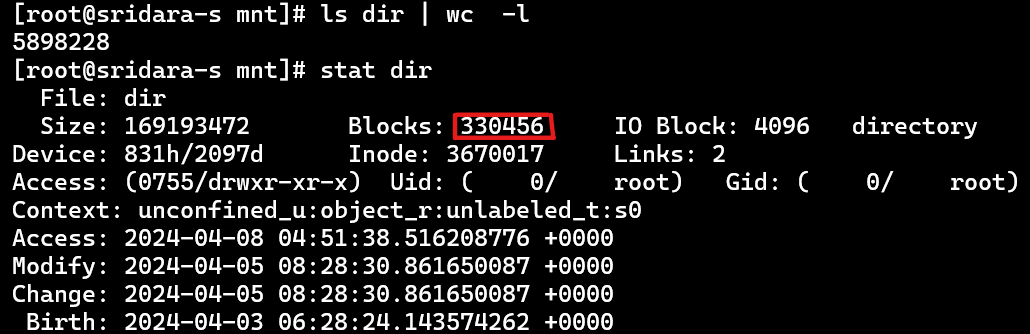

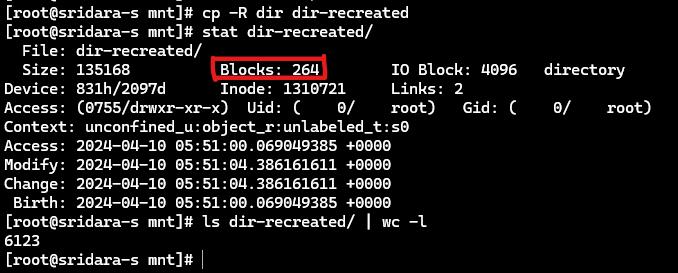

Then, almost all of the files are deleted leaving just 6k files. But we dont see its impact on the directory’s size.

![]()

Despite deletion, the size of the directory remained the same.

The reason behind this is that the ext4 file system does not release its empty blocks back to the kernel. Therefore, the size of the directory does not reduce even after the files have been deleted.

The issue here is that, ext4 doesn’t free the directory’s empty blocks. Therefore, once a directory’s size is grown, it cannot be shrunk.

System calls like sys_getdents hold the read/write semaphore of the inode of the directory. So when these functions handle this type of directory (the directories with a huge number of empty blocks with very few dirents), they spend most of their time iterating through empty blocks while holding the lock. Because of this, other processes waiting for the directory lock, either to add or delete or for something else, will be starved. When there are many such processes, the system will be at risk of hanging.

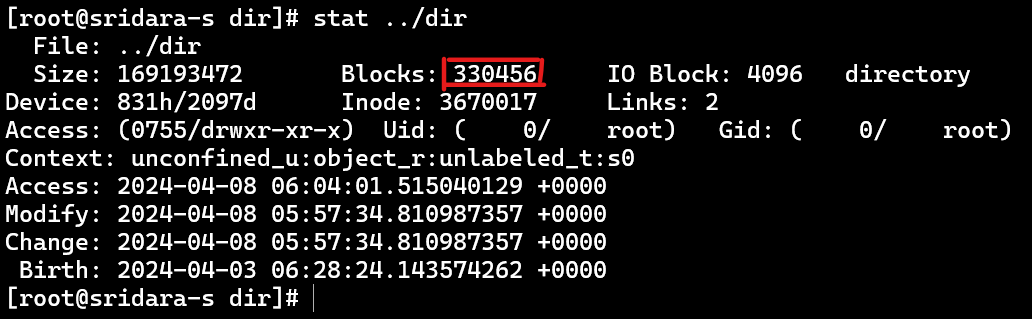

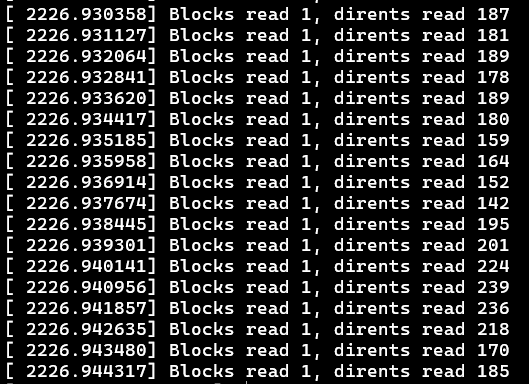

We have used a ksplice patch to print the number of blocks traversed and the number of dirents read by sys_getdents syscall for the directory dir:

We can see that sys_getdents traverses multiple blocks but is able to find only 1 or 2 dirents. In one of the cases, we see that it traversed 28 blocks but only found 1 dirent. We see how sys_getdents wastes its time by traversing through empty blocks.

The Workaround

To reclaim those unused blocks, the only solution is to create a new directory and copy the contents from the original directory to the new one.

By doing this, the newly created directory will utilize only the blocks necessary to store the non deleted directory entries. Any unused blocks will be left behind and will not be copied.

As we can see, the number of blocks utilized by the directory has decreased from 330456 to 264 blocks.

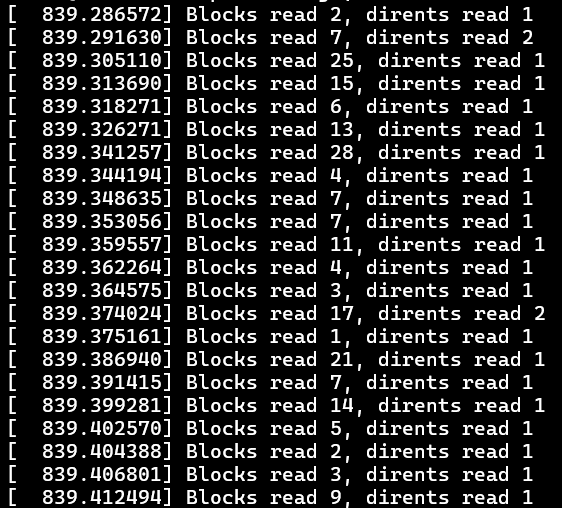

The traversal log:

From the log, we can see that sys_getdents is able to read around 200 dirents from a single block. The recreated directory is no longer hollow, and the dirents are efficiently packed.

Once the contents have been transferred to the new directory, the old directory can be deleted. If needed, the new directory can be renamed to the old one.

This workaround is useful in these type of situations where a directory occupies many blocks but contains very few files.

Conclusion

This article has explained the problem caused by the deletion of a large number of files in a directory and gave a workaround for reclaiming unused blocks. By creating a new directory and transferring the essential contents, users can effectively optimize their filesystem.