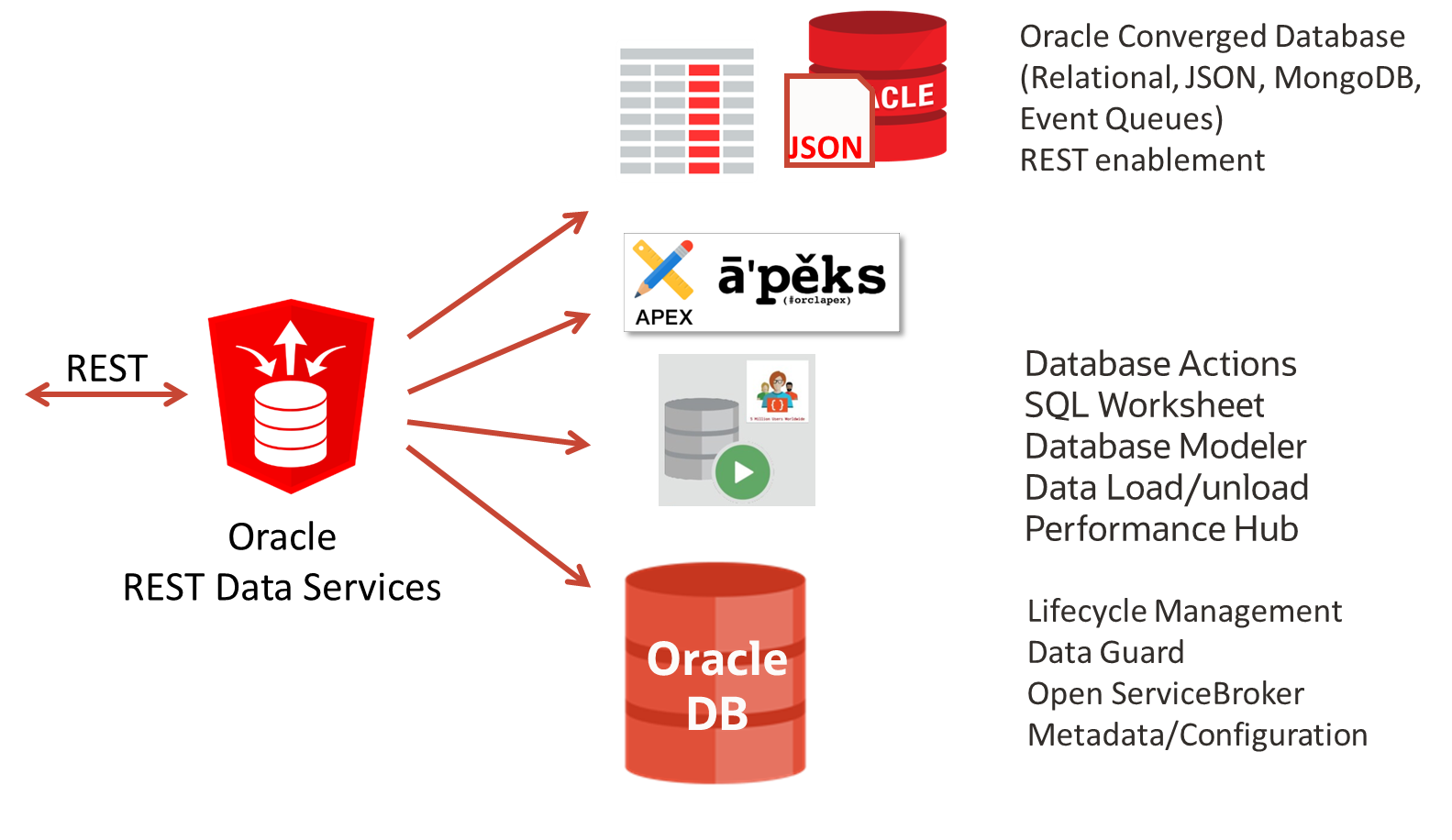

Oracle REST Data Services, or ORDS for short, has developed into a Swiss Army knife for REST support in Oracle databases and should not be missing from any database installation. ORDS has a lot to offer. Originally it was used almost exclusively as a listener, that is, as browser access for the low-code development environment APEX (Application Express). It now offers several tools and REST APIs for different purposes, which can also be activated individually if desired:

- Browser access for Application Express (APEX) for the creation and operation of fluid and colorful user interfaces

- Operation of the “Database Actions” toolbox, in principle a browser-based successor to SQL Developer, which once had to be installed locally. It includes a SQL command line, data modeling, definition of REST services from database structures, loading and unloading of data, and performance monitoring for the database

- A REST API according to the GraphQL standard and a GraphQL server to link generated REST services via database metadata

- REST APIs for managing, storing and searching JSON documents in SODA and MongoDB style

- A REST API for Transactional Event Queues for managing and handling events and messages

- REST APIs for the lifecycle management of databases, pluggable databases and schemas, once according to the Open Service Broker standard and once simplified with Oracle’s own implementation (PDB Lifecycle Management)

- REST APIs for reading metadata from database structures (data dictionary), runtime information, Data Guard configuration, Data Pump operations, and more

A list of the supported REST APIs and their parameters is part of the ORDS documentation, e.g. in the chapter “All REST Endpoints”.

Just like other database satellite tools such as GoldenGate, Connection Manager, Observability Exporter and many more, ORDS fits into a fairly small container provided by Oracle and can be conveniently operated under Kubernetes. It can also be made highly available and secured with Kubernetes on-board resources.

In our “will it blend?” series, I would like to use an example today to explain how ORDS can be integrated under Kubernetes.

In the last article in this series, about GoldenGate as a container, things were not quite so convenient. ORDS, in contrast, is already available for download as a ready-made container at container-registry.oracle.com, even in two variants: ords and ords-developer. A separate image only needs to be created in exceptional cases, e.g. with GraalVM instead of Oracle Java as the basis for running the optional GraphQL server, which is written in JavaScript but runs as part of the ORDS process. Information on setting up GraalVM can be found in the blog post on GraphQL versus SQL/PGQ.

A suitable but entirely optional Dockerfile to create a container with GraalVM and JavaScript support from a standard ORDS container could look like this:

    ARG BASE_IMAGE=container-registry.oracle.com/database/ords-developer:latest
    FROM ${BASE_IMAGE}
    USER root
    COPY graalvm-jdk-17.0.12+8.1 /usr/lib/jvm/graalvm-jdk-17.0.12+8.1
    RUN rm /etc/alternatives/java && \
        ln -s /usr/lib/jvm/graalvm-jdk-17.0.12+8.1/bin/java /etc/alternatives/java && \
        /usr/lib/jvm/graalvm-jdk-17.0.12+8.1/bin/gu install nodejs
    USER oracle
    EXPOSE 8080
    WORKDIR /opt/oracle/ords
    CMD bash /entrypoint.sh

However, since it will not be necessary to create an image yourself in the vast majority of cases, I will keep the explanation short and mention the steps only for the sake of completeness:

- The base image here is the ords-developer image on container-registry.oracle.com.

- A previously downloaded and unpacked GraalVM version 17 is copied into it.

- The GraalVM updater tool (gu) is then used to install JavaScript support.

- The container then runs again as the non-root user “oracle”, which has already been created in the base image.

- A command such as “podman build -t ords-developer-graal:latest .” followed by “podman push ords-developer-graal:latest” creates the image and uploads it to docker.io, the default host, unless you specify a different host name for the upload by prefixing it to the image name (“myhost.com/ords-developer-graal:latest”).

Why are there two standard images, ords and ords-developer?

The ords-developer image is easier to set up and start because it uses some default values over which you have no control in this image. For example, the password of the proxy user ORDS_PROXY_USER is set to “oracle” in the connected database at startup. In fact, the only parameters you can pass are the connect string and a user with SYSDBA rights plus its password, in the form of a file “ords_secrets/conn_string.txt”. At startup, APEX is immediately installed in the connected database, or updated if it already exists. I assume that this behavior is usually not desired, so I have chosen a more flexible approach with arbitrary configuration options based on the standard ords image, which I would now like to describe.

Deployment via the standard “ords” image

In order to avoid having to create our own new image and instead use what is available as elegantly as possible, we need to proceed in two steps. In the first step an ORDS configuration is created, in the second step the configuration is integrated and ORDS is started. We use an initContainer for this. These containers start before the actual container, e.g. to prepare file systems or to start programs for configuration that may not even be installed in the actual container.

The initContainer can make one or more “ords config” calls in a script, and the main container then starts ORDS with “ords serve”. Here is an excerpt from a corresponding deployment YAML file:

    spec:
      initContainers:
      - name: set-config
        # ... environment variables for the script ...
        command:
        - /bin/bash
        - /scripts/ords_setup.sh
      containers:
      - name: app
        command:
        - ords
        - serve

It is important that both containers write to or read from the same directory so that the created configuration is not lost when the initContainer finishes. To avoid having to use persistent volumes and to save space, there is the “emptyDir” construct, which creates an empty directory on the cluster node and keeps it mounted for as long as the pod exists:

    # in both containers, i.e. to be entered twice
    volumeMounts:
    - mountPath: /etc/ords/config
      name: config

    # one volume for both containers in the same pod
    volumes:
    - name: config
      emptyDir: {}

This is why Kubernetes has so-called pods: these are one or more containers, started either one after the other or simultaneously, that share resources or logically belong together. Examples are a metrics collection container alongside an application container or, as in this case, a configuration container with an application container.

The shell script that performs the configuration in the first container is also integrated as a volume. The volume originates from a Kubernetes “ConfigMap”, the contents of which can be integrated as files. The volume is integrated as in the following example excerpt:

    # only in the initContainer
    volumeMounts:
    - name: scripts
      mountPath: /scripts

    volumes:
    - name: scripts
      configMap:
        defaultMode: 420
        name: ords-init-config

The content of the ConfigMap ords-init-config is basically a file with a shell script. Any commands can be inserted into the shell script, provided that the container used knows these commands. An excerpt could look like this:

    kind: ConfigMap
    metadata:
      name: ords-init-config
    data:
      ords_setup.sh: |-
        #!/bin/bash
        ords install ...... <<EOF
        $ADMIN_PWD
        $PROXY_PWD
        EOF

That would be all the magic behind the installation. A complete deployment with specification of namespaces and which ORDS containers are to be used could look like this:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: ords-nondev
      namespace: ords-zoo
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/instance: ords-nondev
          app.kubernetes.io/name: ords-nondev
      template:
        metadata:
          labels:
            app.kubernetes.io/instance: ords-nondev
            app.kubernetes.io/name: ords-nondev
        spec:
          initContainers:
          - name: set-config
            env:
            - name: ADMIN_USER
              valueFrom:
                secretKeyRef:
                  key: admin_user
                  name: ords-secret
            - name: ADMIN_PWD
              valueFrom:
                secretKeyRef:
                  key: admin_pwd
                  name: ords-secret
            - name: PROXY_PWD
              valueFrom:
                secretKeyRef:
                  key: proxy_pwd
                  name: ords-secret
            - name: DB_HOST
              valueFrom:
                secretKeyRef:
                  key: db_host
                  name: ords-secret
            - name: DB_PORT
              valueFrom:
                secretKeyRef:
                  key: db_port
                  name: ords-secret
            - name: DB_SERVICENAME
              valueFrom:
                secretKeyRef:
                  key: db_servicename
                  name: ords-secret
            command:
            - /bin/bash
            - /scripts/ords_setup.sh
            image: container-registry.oracle.com/database/ords:latest
            imagePullPolicy: IfNotPresent
            volumeMounts:
            - mountPath: /scripts
              name: scripts
            - mountPath: /etc/ords/config
              name: config
          containers:
          - image: container-registry.oracle.com/database/ords:latest
            imagePullPolicy: IfNotPresent
            name: app
            command:
            - ords
            - serve
            ports:
            - containerPort: 8080
              name: http-ords
              protocol: TCP
            volumeMounts:
            - mountPath: /etc/ords/config
              name: config
          volumes:
          - name: scripts
            configMap:
              defaultMode: 420
              name: ords-init-config
          - name: config
            emptyDir: {}

Assuming this YAML document had the name ords-deploy.yaml, it could be transferred to your Kubernetes cluster with “kubectl apply -f ords-deploy.yaml”. However, this still results in error messages that the namespace ords-zoo does not exist and that a secret named ords-secret is missing, as well as a ConfigMap named ords-init-config.

The namespace ords-zoo can be created like so:

    $ kubectl create namespace ords-zoo

In this case, the passwords required for the database connection come from a Kubernetes secret called ords-secret. The container images come directly from container-registry.oracle.com; of course, you can transfer them to your own container registry beforehand and load them into Kubernetes from there. The secret could load its private data from a vault solution or, as in our example here, contain it in plain text, which is normally undesirable. This file, let’s call it ords-secret.yaml, can also be transferred to your cluster with “kubectl apply -f ords-secret.yaml”. Please adapt host names and passwords to your circumstances beforehand:

    apiVersion: v1
    kind: Secret
    metadata:
      name: ords-secret
      namespace: ords-zoo
    type: Opaque
    stringData:
      admin_pwd: MyComplexAdminPW123
      admin_user: sys
      db_host: myhost.oraclevcn.com
      db_port: "1521"
      db_servicename: FREEPDB1
      proxy_pwd: oracle

The ConfigMap ords-init-config, which performs all ORDS configurations via script, could look as follows:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: ords-init-config
      namespace: ords-zoo
    data:
      ords_setup.sh: |-
        #!/bin/bash
        ords install --admin-user $ADMIN_USER --proxy-user --db-hostname $DB_HOST --db-port $DB_PORT --db-servicename $DB_SERVICENAME --feature-sdw true --password-stdin <<EOF
        $ADMIN_PWD
        $PROXY_PWD
        EOF
        cd /etc/ords/config
        curl -o apex.zip https://download.oracle.com/otn_software/apex/apex-latest.zip
        jar xvf apex.zip
        rm apex.zip
        chmod -R 755 apex
        ords config set standalone.static.path /etc/ords/config/apex/images

Let’s assume that this file is called ords-init-config.yaml. It can then be transferred to your cluster using “kubectl apply -f ords-init-config.yaml”. The script contained in the ConfigMap not only enters the connect strings and passwords for the database connection, but also downloads various icons and images from the Internet and integrates them into the APEX directories. The “Database Actions” feature (feature-sdw) is also activated. Further calls are conceivable, e.g. downloading or copying an existing configuration from a git repository or another web server.

Calling “kubectl apply -f ords-deploy.yaml” again should now work without errors, and the container should be downloaded and started, provided you have adjusted your database passwords and host names correctly. This can be checked as follows:

    $ kubectl get deployment -n ords-zoo
    NAME          READY   UP-TO-DATE   AVAILABLE   AGE
    ords-nondev   1/1     1            1           1d

    $ kubectl get configmap -n ords-zoo
    NAME               DATA   AGE
    ords-init-config   1      1d

    $ kubectl get secret -n ords-zoo
    NAME          TYPE     DATA   AGE
    ords-secret   Opaque   6      1d

    $ kubectl get pod -n ords-zoo
    NAME                           READY   STATUS    RESTARTS   AGE
    ords-nondev-56d97d9594-lck4h   1/1     Running   0          1d

The log output of the two containers set-config and app in the pod with the system-generated name ords-nondev-56d97d9594-lck4h should look promising, albeit very extensive.

If the download of the APEX icons does not work in your environment, omit the entry in the script for the time being. Your firewall probably does not allow the download. The configuration steps and unzip operations should be visible in the set-config container, for example, similar to here:

    $ kubectl logs ords-nondev-56d97d9594-lck4h -n ords-zoo set-config
    2024-11-12T14:52:15Z INFO ORDS has not detected the option '--config' and this will be set up to the default directory.
    ORDS: Release 24.3 Production on Tue Nov 12 14:52:18 2024
    Copyright (c) 2010, 2024, Oracle.
    Configuration:
    /etc/ords/config
    Oracle REST Data Services - Non-Interactive Install
    Retrieving information.
    Connecting to database user: ORDS_PUBLIC_USER url: jdbc:oracle:thin:@//myhost.oraclevcn.com:1521/FREEPDB1
    The setting named: db.connectionType was set to: basic in configuration: default
    The setting named: db.hostname was set to: myhost.oraclevcn.com in configuration: default
    The setting named: db.port was set to: 1521 in configuration: default
    The setting named: db.servicename was set to: FREEPDB1 in configuration: default
    The setting named: plsql.gateway.mode was set to: proxied in configuration: default
    The setting named: db.username was set to: ORDS_PUBLIC_USER in configuration: default
    The setting named: db.password was set to: ****** in configuration: default
    The setting named: feature.sdw was set to: true in configuration: default
    The global setting named: database.api.enabled was set to: true
    The setting named: restEnabledSql.active was set to: true in configuration: default
    The setting named: security.requestValidationFunction was set to: ords_util.authorize_plsql_gateway in configuration: default
    2024-11-12T14:52:23.172Z INFO Oracle REST Data Services schema version 24.3.0.r2620924 is installed.
    2024-11-12T14:52:23.174Z INFO To run in standalone mode, use the ords serve command:
    2024-11-12T14:52:23.174Z INFO ords --config /etc/ords/config serve
    2024-11-12T14:52:23.174Z INFO Visit the ORDS Documentation to access tutorials, developer guides and more to help you get started with the new ORDS Command Line Interface (http://oracle.com/rest).
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100  276M  100  276M    0     0   104M      0  0:00:02  0:00:02 --:--:--  104M
     inflated: META-INF/MANIFEST.MF
     inflated: META-INF/ORACLE_C.SF
     inflated: META-INF/ORACLE_C.RSA
      created: apex/
     inflated: apex/LICENSE.txt
     inflated: apex/apex_rest_config.sql
     inflated: apex/apex_rest_config_cdb.sql
     inflated: apex/apex_rest_config_core.sql
     inflated: apex/apex_rest_config_nocdb.sql
     inflated: apex/apexins.sql
    .....

By the way, you can easily scale the pod and make it more available by changing the parameter “replicas: 1” in the ords-deploy.yaml file to the value 2; two pods are then started and the load is balanced between them. A pod autoscaler could also be considered, because automatic scaling under high load would work here as well.
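As a rough sketch of the autoscaling idea, a HorizontalPodAutoscaler for the ords-nondev Deployment might look like the following. The name ords-nondev-hpa, the replica bounds and the 70 percent CPU target are illustrative assumptions, not values from this setup. CPU-based scaling also presupposes a metrics server in the cluster and CPU resource requests on the app container, which the Deployment shown in this article does not define.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: ords-nondev-hpa          # hypothetical name
  namespace: ords-zoo
spec:
  scaleTargetRef:                # the Deployment to be scaled
    apiVersion: apps/v1
    kind: Deployment
    name: ords-nondev
  minReplicas: 1                 # assumed lower bound
  maxReplicas: 3                 # assumed upper bound
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 70   # assumed threshold, tune for your workload
```

With such a resource in place, the autoscaler rather than the “replicas” entry in the Deployment determines the number of running pods.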

In any case: congratulations if you have reached this point and the container is running for you!

The network connection

Now that the container is starting, the question is surely how to address the container and its many REST services and user interfaces with the browser? And is this possible with HTTPS or only with HTTP? The answer comes again in the form of kubectl commands and network resources in several variants.

A quick-and-dirty connection for testing is made using port forwarding between the Kubernetes cluster and the computer from which you send kubectl commands. Find out the name of the ORDS pod in your cluster and forward the ORDS port 8080 in the container to your computer:

    $ kubectl get pod -n ords-zoo
    NAME                           READY   STATUS    RESTARTS   AGE
    ords-nondev-56d97d9594-lck4h   1/1     Running   0          2m9s

    $ kubectl port-forward ords-nondev-56d97d9594-lck4h 8888:8080 -n ords-zoo &
    Forwarding from 127.0.0.1:8888 -> 8080
    Forwarding from [::1]:8888 -> 8080

    $ curl http://127.0.0.1:8888/ords/ -v
    Handling connection for 8888
    * Trying 127.0.0.1...
    * TCP_NODELAY set
    * Connected to 127.0.0.1 (127.0.0.1) port 8888 (#0)
    > GET /ords/ HTTP/1.1
    > Host: 127.0.0.1:8888
    > User-Agent: curl/7.61.1
    > Accept: */*
    >
    < HTTP/1.1 302 Found
    < Location: http://127.0.0.1:8888/ords/_/landing
    < Transfer-Encoding: chunked
    <
    * Connection #0 to host 127.0.0.1 left intact

Is there also a redirect (HTTP 302) to the ORDS landing page in your environment? Excellent! Then let’s move on to the next step and create a permanent network connection. In many Kubernetes environments, there is a central entry point, a gateway with an external IP address and port, which is addressed via an external load balancer. Such gateways can be implemented with istio, nginx or other tools. From there, ingresses can be defined, i.e. rules that forward requests to containers or pods based on criteria such as the URL or host name used. An ingress talks to a Kubernetes service, preferably of the “ClusterIP” type, which binds to the started containers via metadata such as the name of the application and in turn load-balances across them.

A suitable Kubernetes service could look like this:

    apiVersion: v1
    kind: Service
    metadata:
      name: ords-nondev
      namespace: ords-zoo
    spec:
      ports:
      - name: http-ords
        port: 8888
        protocol: TCP
        targetPort: 8080
      selector:
        app.kubernetes.io/instance: ords-nondev
        app.kubernetes.io/name: ords-nondev
      sessionAffinity: None
      type: ClusterIP

Name the file “ords-service.yaml” and send it to your cluster using “kubectl apply -f ords-service.yaml”.

If you would like to do without an Ingress for the time being, you can turn the Kubernetes service ords-nondev of type ClusterIP into a service of type LoadBalancer. Your ORDS service is then at the front of the Kubernetes cluster, is entered directly in your external LoadBalancer and receives its external IP address. However, this configuration is also normally intended for test purposes.
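A minimal sketch of this variant, assuming the same service definition as above with only the type changed. Whether and how quickly an external IP address is assigned depends on the load balancer integration of your Kubernetes platform, which this example cannot know.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ords-nondev
  namespace: ords-zoo
spec:
  type: LoadBalancer             # instead of ClusterIP
  ports:
  - name: http-ords
    port: 8888                   # port offered by the external load balancer
    protocol: TCP
    targetPort: 8080             # ORDS port inside the container
  selector:
    app.kubernetes.io/instance: ords-nondev
    app.kubernetes.io/name: ords-nondev
```

Once an address has been assigned, it appears in the EXTERNAL-IP column of “kubectl get service -n ords-zoo”.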

A suitable Ingress in your environment will be similar to the one below, but not identical. This is because you should always use a host name that can be resolved in your network and refers to the IP address of your gateway (often a CNAME entry, i.e. an alias). You may also use istio as the ingressClassName, or nginx or perhaps traefik. I like to use host names of the nip.io service for testing purposes: they are always resolvable because the corresponding IP addresses are contained in their names.

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: ords-ingress
      namespace: ords-zoo
    spec:
      ingressClassName: istio
      rules:
      - host: ords.130-61-137-234.nip.io
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: ords-nondev
                port:
                  number: 8888

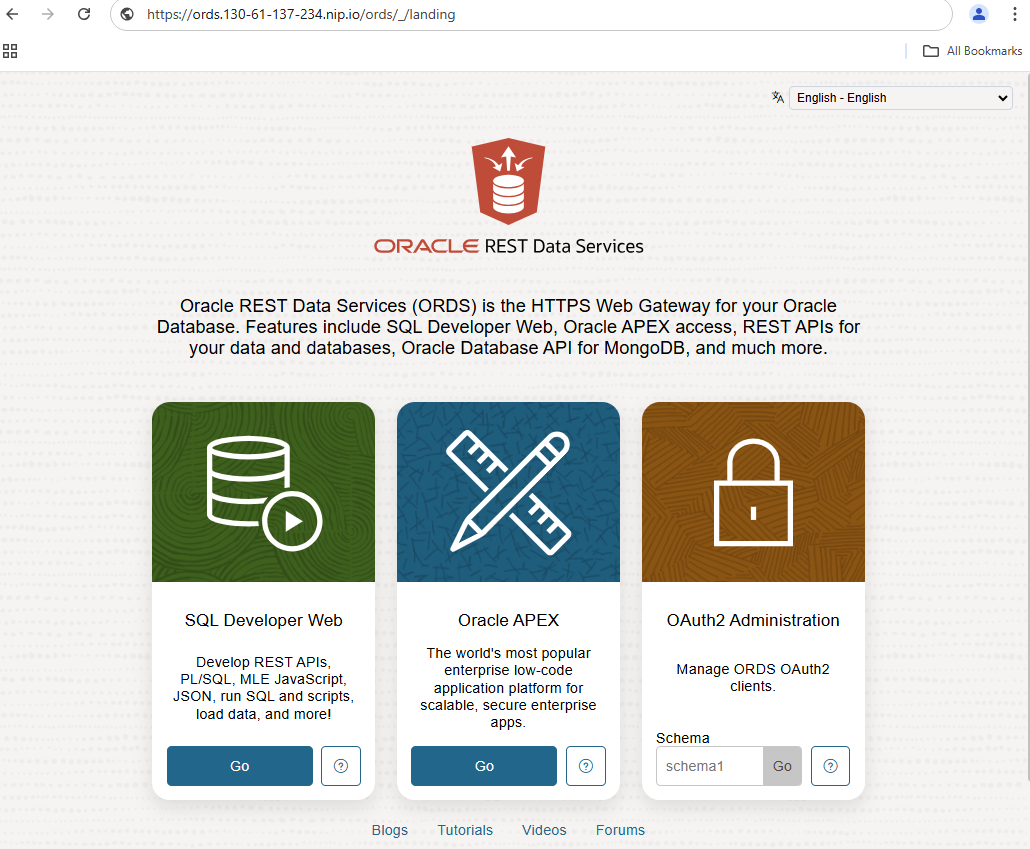

Another special feature in my environment: the gateway is accessible via HTTPS and forwards all requests to the unencrypted ORDS, i.e. the gateway terminates the SSL traffic. I therefore have to enter https instead of http as the address in the browser, in this example https://ords.130-61-137-234.nip.io/ords/_/landing. This may work differently in your environment.

If the ORDS landing page appears, this network access works as well. If the areas for SQL Developer Web and APEX are not grayed out, the connection from the ORDS container to the database also works.

If you also terminate SSL access just in front of ORDS while ORDS itself runs unencrypted, redirects may not work properly after a successful login. The “Database Actions” tool is then also unable to execute SQL commands and fails with a “not authorized” error hidden in JavaScript. If this is the case, you can force ORDS to “think” in SSL even though it does not perform SSL encryption itself. Setting the small additional parameter security.forceHTTPS to true (in the ConfigMap ords-init-config.yaml introduced earlier) changes the URLs and cookies generated internally by ORDS to HTTPS. Alternatively, you could use a reverse proxy such as nginx that redirects HTTP access to HTTPS. Or you can simply configure ORDS completely with certificates for SSL access, which is exactly what we will do in the next chapter.
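As a sketch of the forceHTTPS option, the setup script in the ConfigMap could receive one additional line. This assumes that the parameter is set with the same “ords config set” call that the script already uses for other settings; the preceding steps of the script are only hinted at by a comment here.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ords-init-config
  namespace: ords-zoo
data:
  ords_setup.sh: |-
    #!/bin/bash
    # ... "ords install" call and APEX image download as shown earlier ...
    # Assumption: ORDS then generates https:// URLs and secure cookies
    # even though SSL is terminated at the gateway in front of it
    ords config set security.forceHTTPS true
```

Because the configuration lives in an emptyDir volume and is rebuilt by the initContainer, the pod has to be restarted after the ConfigMap changes for the new setting to take effect.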

Congratulations, because you are actually already finished!

Network access part 2: SSL encryption

Would you also like to encrypt the internal traffic between the gateway and ORDS, so that ORDS itself is accessible via SSL or HTTPS? Then there is not much to do: you just have to give ORDS the necessary certificates and tell it where they are located. And don’t forget the SSL port to be used, for example 8443 in the container. Ports below 1024 are not permitted for a non-root user, and ORDS runs as the oracle user.

The previous YAML files must be adapted slightly for this purpose and sent back to the cluster with “kubectl apply -f ....”.

The configuration script in the ConfigMap ords-init-config.yaml receives further calls for the entries “standalone.https.cert”, “standalone.https.cert.key”, “standalone.https.host” and “standalone.https.port”. Please ensure that the specified host name matches the certificate used and that ORDS is only addressed via this host name. Any other HTTPS requests will then no longer be accepted.

The complete YAML would then look like this:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: ords-init-config
      namespace: ords-zoo
    data:
      ords_setup.sh: |-
        #!/bin/bash
        ords install --admin-user $ADMIN_USER --proxy-user --db-hostname $DB_HOST --db-port $DB_PORT --db-servicename $DB_SERVICENAME --feature-sdw true --password-stdin <<EOF
        $ADMIN_PWD
        $PROXY_PWD
        EOF
        cd /etc/ords/config
        curl -o apex.zip https://download.oracle.com/otn_software/apex/apex-latest.zip
        jar xvf apex.zip
        rm apex.zip
        chmod -R 755 apex
        ords config set standalone.static.path /etc/ords/config/apex/images
        ords config set standalone.https.host ords.130-61-137-234.nip.io
        ords config set standalone.https.port 8443
        ords config set standalone.https.cert /ssl/tls.crt
        ords config set standalone.https.cert.key /ssl/tls.key

The deployment in ords-deploy.yaml receives an additional volume at the directory “/ssl” just chosen, into which the certificates are injected from outside. The volume refers to a Kubernetes secret named ords-ssl, which still has to be created and will contain the certificates. In addition, the new port 8443 is declared in the deployment. Feel free to remove the old port entry 8080 if only SSL is to be spoken from now on. The complete deployment then looks as follows:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: ords-nondev
      namespace: ords-zoo
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/instance: ords-nondev
          app.kubernetes.io/name: ords-nondev
      template:
        metadata:
          labels:
            app.kubernetes.io/instance: ords-nondev
            app.kubernetes.io/name: ords-nondev
        spec:
          initContainers:
          - name: set-config
            env:
            - name: ADMIN_USER
              valueFrom:
                secretKeyRef:
                  key: admin_user
                  name: ords-secret
            - name: ADMIN_PWD
              valueFrom:
                secretKeyRef:
                  key: admin_pwd
                  name: ords-secret
            - name: PROXY_PWD
              valueFrom:
                secretKeyRef:
                  key: proxy_pwd
                  name: ords-secret
            - name: DB_HOST
              valueFrom:
                secretKeyRef:
                  key: db_host
                  name: ords-secret
            - name: DB_PORT
              valueFrom:
                secretKeyRef:
                  key: db_port
                  name: ords-secret
            - name: DB_SERVICENAME
              valueFrom:
                secretKeyRef:
                  key: db_servicename
                  name: ords-secret
            command:
            - /bin/bash
            - /scripts/ords_setup.sh
            image: container-registry.oracle.com/database/ords:latest
            imagePullPolicy: IfNotPresent
            volumeMounts:
            - mountPath: /scripts
              name: scripts
            - mountPath: /ssl
              name: ssl
            - mountPath: /etc/ords/config
              name: config
          containers:
          - image: container-registry.oracle.com/database/ords:latest
            imagePullPolicy: IfNotPresent
            name: app
            command:
            - ords
            - serve
            ports:
            - containerPort: 8080
              name: http-ords
              protocol: TCP
            - containerPort: 8443
              name: https-ords
              protocol: TCP
            volumeMounts:
            - mountPath: /etc/ords/config
              name: config
            - mountPath: /ssl
              name: ssl
          volumes:
          - name: scripts
            configMap:
              defaultMode: 420
              name: ords-init-config
          - name: config
            emptyDir: {}
          - name: ssl
            secret:
              defaultMode: 420
              secretName: ords-ssl

The deployment will initially fail, because the basis for the ssl volume, the Kubernetes secret ords-ssl, is still missing. Do you have the private key and certificate files to hand? Then use “kubectl create secret ...” to create an appropriately named secret and transfer your files (tls.key and tls.crt in this example) to it. Please also note that the key is expected in PKCS8 format and without a password! Your key may need to be converted first; openssl offers many options for this. My classic RSA PEM key first had to be converted with “openssl pkcs8 -in tls.key -topk8 -out tls.key.pk8 -nocrypt”, after which ORDS could be started with a secret created as follows:

    $ kubectl create secret generic ords-ssl -n ords-zoo --from-file=tls.key="tls.key.pk8" --from-file=tls.crt="tls.crt"

Now the deployment should also work (please try again with “kubectl apply -f ords-deploy.yaml”). At this point I would like to recommend the Kubernetes tool cert-manager. It can take care of generating certificates for you, create the ords-ssl secret automatically and even renew the certificates in good time before they expire. This works with self-signed certificates and with external CAs such as Let’s Encrypt, or cert-manager can act as its own CA if you give it “proper” root certificates rather than self-signed ones.
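To illustrate the cert-manager route, here is a minimal sketch with a self-signed issuer. It assumes that cert-manager is already installed in the cluster; the resource names ords-selfsigned and ords-cert are hypothetical. The secret name ords-ssl matches the secretName referenced by the ssl volume of the Deployment, and the PKCS8 encoding is requested because ORDS expects the key in that format.

```yaml
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  name: ords-selfsigned          # hypothetical name
  namespace: ords-zoo
spec:
  selfSigned: {}                 # for testing; use a CA or ACME issuer otherwise
---
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: ords-cert                # hypothetical name
  namespace: ords-zoo
spec:
  secretName: ords-ssl           # secret mounted under /ssl by the Deployment
  dnsNames:
  - ords.130-61-137-234.nip.io   # must match standalone.https.host
  privateKey:
    algorithm: RSA
    encoding: PKCS8              # ORDS does not accept the default PKCS1 here
    size: 2048
  issuerRef:
    name: ords-selfsigned
    kind: Issuer
```

cert-manager stores the result under the keys tls.crt and tls.key, which are the file names the ORDS configuration in this article points to. Note that a running ORDS pod would presumably need a restart to pick up a renewed certificate.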

To ensure that the new port 8443 is also used, the ords-nondev service must be adapted. Either add the port 8443 or replace the existing container port 8080 and the name http-ords. The naming has a special meaning for some network services such as istio, i.e. istio also reacts to the name of the ports to decide which protocol should be used between the gateway and the service. Please do not change port 8888, otherwise you would have to adapt the Ingress. The service with the exchanged port could look like this:

    apiVersion: v1
    kind: Service
    metadata:
      name: ords-nondev
      namespace: ords-zoo
    spec:
      ports:
      - name: https-ords
        port: 8888
        protocol: TCP
        targetPort: 8443
      selector:
        app.kubernetes.io/instance: ords-nondev
        app.kubernetes.io/name: ords-nondev
      sessionAffinity: None
      type: ClusterIP

If you use istio as an IngressClass, it may be necessary to specify that the internal network traffic to the ORDS container must be encrypted. A so-called DestinationRule can help here, otherwise HTTP error messages that the service was not found or the SSL connection was abruptly terminated may occur in the event of a problem.

    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: ords-https-destrule
      namespace: ords-zoo
    spec:
      host: ords-nondev
      trafficPolicy:
        tls:
          mode: SIMPLE

That should be it! You can see from the container logs whether the fully encrypted access now works: are the certificates present in the container, do they have the correct name, is the key in PKCS8 format, did the ORDS configuration work? In addition to the container logs, you can connect to the container via shell and take a look for yourself:

    $ kubectl get pods -n ords-zoo
    NAME                                  READY   STATUS    RESTARTS   AGE
    ords-nondev-sidb23c-754b9cd9f-tpdhj   1/1     Running   0          10m

    $ kubectl exec --stdin --tty -n ords-zoo ords-nondev-sidb23c-754b9cd9f-tpdhj -- /bin/bash
    Defaulted container "app" out of: app, set-config (init)
    [oracle@ords-nondev-sidb23c-754b9cd9f-tpdhj ords]$ ls /ssl
    tls.crt  tls.key

The container logs should not contain any Java error messages of the type “oracle.dbtools.standalone.StandaloneException: The provided key is not RSA or PKCS8 encoded”. In the event of such errors, the container terminates and quickly appears as “CrashLoopBackOff” in the list of pods shown by “kubectl get pods -n ords-zoo”.

This part should now also work. Thank you very much for reading along for so long and – as always – have fun testing!

Conclusion and outlook:

Running ORDS and other satellite containers around the Oracle database under Kubernetes makes a lot of sense. The advantages of Kubernetes, such as scaling, availability, and easy versioning and patching by simply exchanging the containers, can be readily exploited. Oracle often offers the containers for download and productive use with docker and podman. Automations for Kubernetes deployments, such as Helm charts and a central Helm chart repository, are in progress and already available for some modern components, such as the microTX transaction manager and the Oracle Backend for Microservices. Other components such as Oracle databases in containers, metrics exporters for Grafana and, incidentally, ORDS (albeit somewhat limited in terms of dynamic configuration and network configuration) can be installed automatically via the Oracle Operator for Kubernetes (OraOperator). A Helm chart is also currently being planned for the OraOperator, as well as its registration on operatorhub.io.

Some Links:

All YAML files to this blog on github

ORDS Container for download and description on container-registry.oracle.com

Notes about SSL configuration of ORDS on oracle-base

ORDS configuration with OraOperator for Kubernetes