Would you like to test how a generative AI can support you, and set it up as easily as possible in your data center or even at home in your hobby room? Can your CPU handle the tasks at hand, or do you need a gaming graphics card somewhere in a corner of your data center? Oracle offers you three environments for testing, development and runtime of GenAI-based applications: free of charge and container-based, for Python, Java and PL/SQL.

Of course, Oracle has not completely reinvented the wheel here. Three packages of common and useful APIs and components have been put together. They are provided as part of the support subscription for Oracle databases and are maintained and supported with technical assistance via github and Slack channels. A central service behind the packages is the linking and integration of the provided components, as well as the creation of Oracle's own plugins and drivers for the frameworks used. The most important benefit, however, is the simplicity with which you can quickly create a test environment, prototypes and even finished, secure and scalable applications. The best way to show what this means in concrete terms is a brief description of each of the three packages, which can be combined with each other and with other tools.

Package 1: Oracle AI Microservices Sandbox

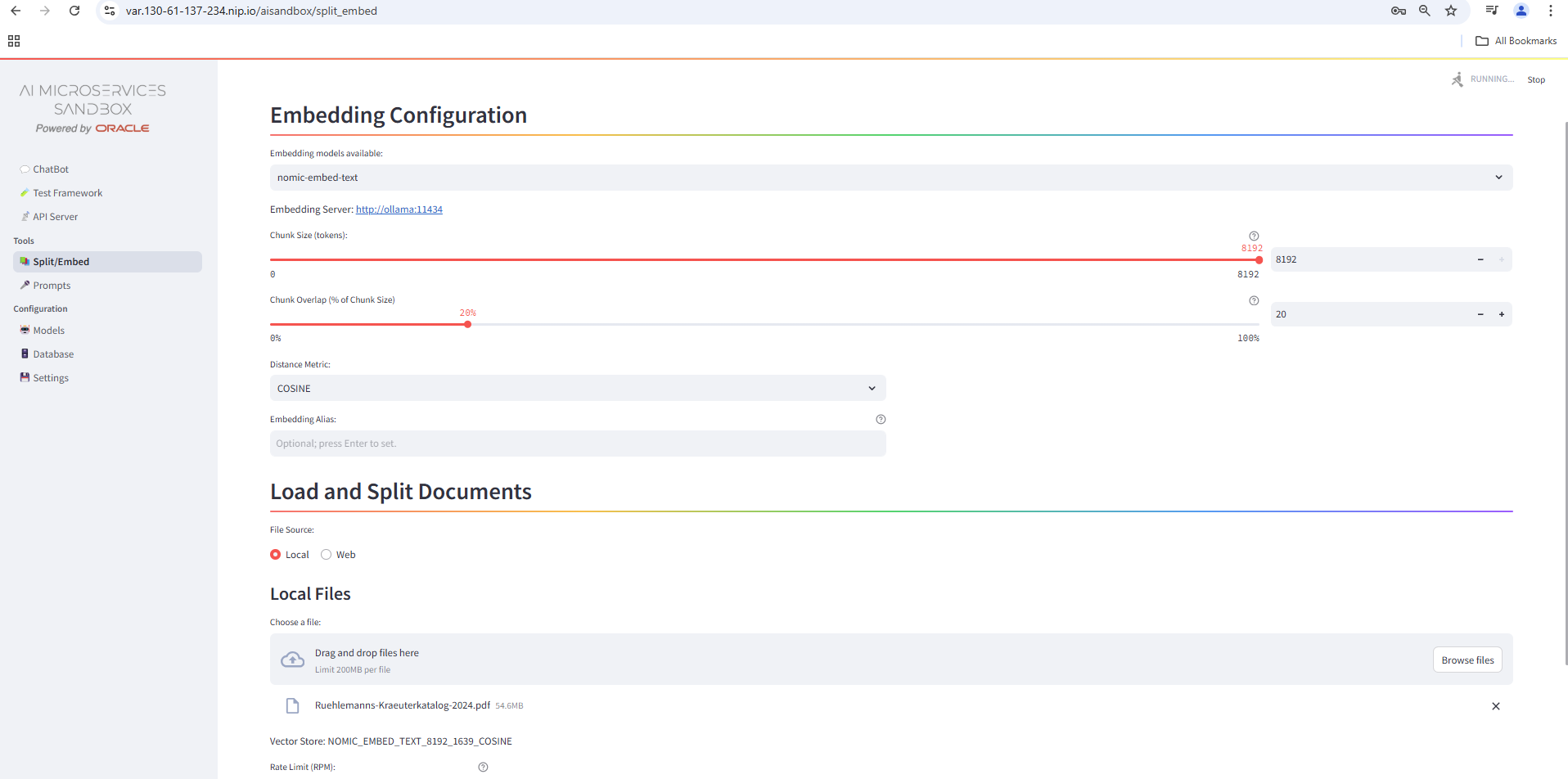

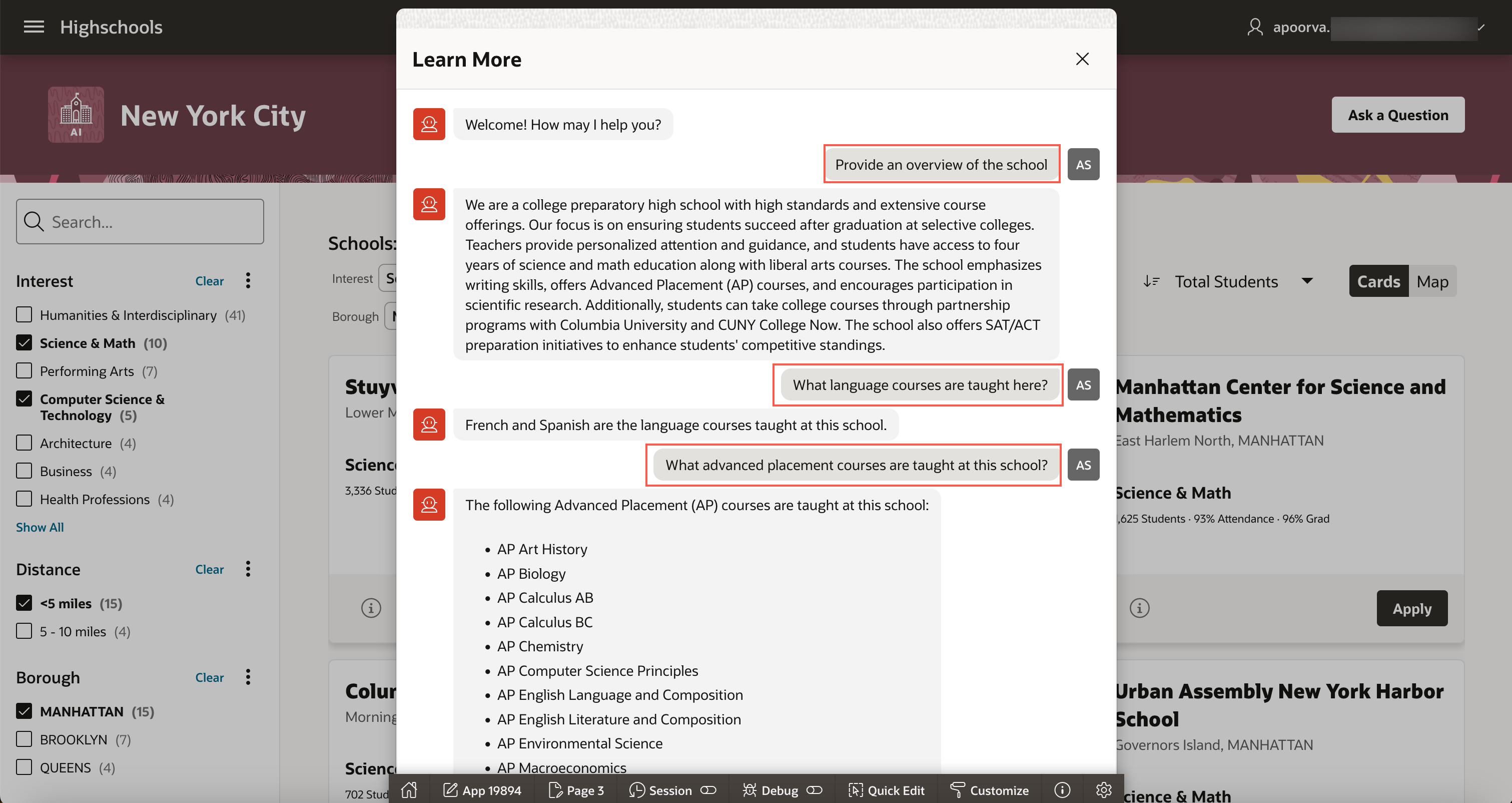

The package offers a clickable browser interface for building a fairly typical chatbot solution. Text documents can be uploaded and made searchable to build a knowledge base. A chat interface is used to test the selected large language models (LLMs) and the example prompts (system instructions in natural language that tell the AI how to proceed), and to formulate your own prompts. With a mouse click, the end user’s queries in the chat can be answered on the basis of the loaded documents (retrieval augmented generation, RAG), or the answers can come from the model’s public knowledge and the AI’s adjustable creative powers of combination.

The package consists of three linked and prepared containers that can be set up and operated locally using the docker or podman command, or in a small Kubernetes cluster (minikube, k3s or similar) using a helm chart. The helm chart offered via the github repository sets up the application container and a container with the local AI “ollama”. Setting up an Oracle Database 23ai as a container is described in the documentation provided. Instead of using Kubernetes and Helm, the application and ollama containers can also be started with podman, as described in the hands-on guide.

The first container is the application container, which provides the configurable user interface. It can be linked with the other two containers or with external database and GenAI services. The frameworks used in the container and its logic can be further developed and expanded by the user; the source code for this is available on github. The logic in the container is currently written in Python and uses the langchain framework, with official plugins for the Oracle database for storing and querying vector data. The chatbot user interface is built with streamlit.

The second container runs an Oracle Database 23ai FREE. It is used for storing, indexing and searching vector data, so-called embeddings. Under its license agreement, Oracle Database 23ai FREE may be used in production, but it is limited to a maximum of 12 GB of user data (table and index data), which is often plenty.

The third container runs ollama, an open source GenAI runtime for local use. Common large language models (LLMs) can be loaded and used. It provides REST APIs for the generation of vector data (embeddings), for the generation of free and structured texts (answers to queries, summaries, image descriptions, code generation, classifications, function calls…) and for a chatbot. A graphics card with an NVIDIA GPU in the host computer is desirable, but very small LLMs of approx. 2 GB maximum size, like the fairly new llama3.2:3b or deepseek-r1:1.5b, run tolerably well on a regular CPU in streaming mode, i.e. fast enough to read along.
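To make the REST interface a little more tangible, here is a minimal Python sketch of a text generation call. It assumes that the ollama container is reachable on its default port 11434 on localhost and that the model named in the call has already been pulled; the helper names `build_generate_request` and `generate` are purely illustrative and not part of any Oracle package.

```python
import json
import urllib.request

# Assumption: ollama listens on its default port on the local machine.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str, stream: bool = False) -> urllib.request.Request:
    """Build a POST request for ollama's /api/generate endpoint (nothing is sent yet)."""
    payload = {"model": model, "prompt": prompt, "stream": stream}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the request and return the generated text (needs a running ollama)."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]
```

With a running container, `generate("llama3.2:3b", "Explain RAG in one sentence.")` would return the complete answer in one piece. Setting `stream` to true instead makes ollama return the answer as a sequence of JSON lines, which is the mode suited to reading along on a CPU.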

The Oracle AI Microservices Sandbox currently has developer preview status. It is a by-product of the second package, Oracle Backend for Microservices and AI, and supplements that package's focus on Java APIs with a way to test particularly quickly and easily.

Package 2: Oracle Backend for Microservices and AI

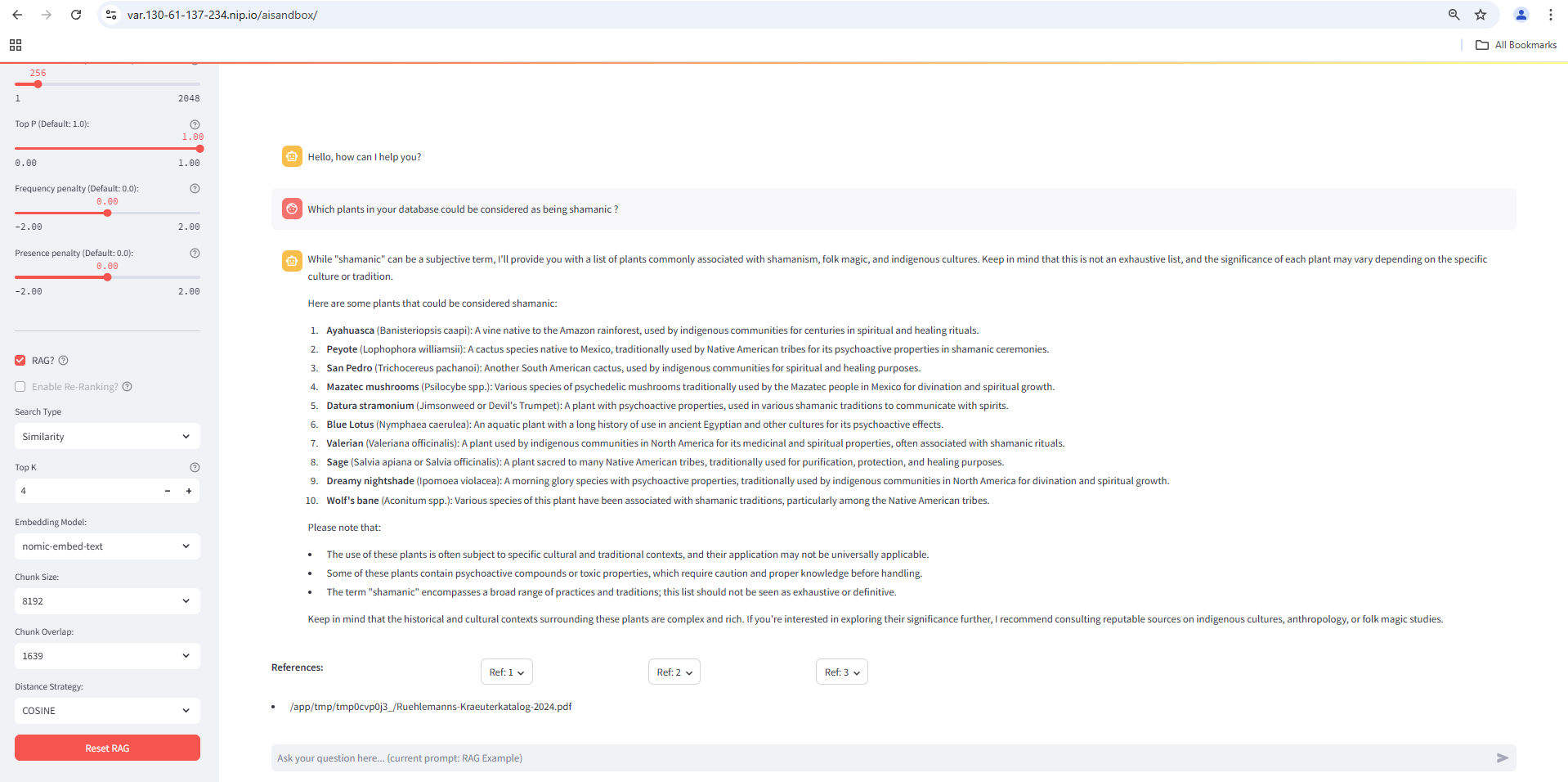

The package focuses primarily on the comprehensive development and full operation of backends, i.e. microservices of all kinds, in Java. Originally designed entirely for the Java Spring Framework, it now also supports components based on the Helidon Framework (Eclipse MicroProfile standard). The Oracle Backend for Microservices and AI is supported as part of every database license. Help is provided via Slack channel, mailing list and github issues. It also has its own chapter in the database documentation on developer support. The latest information and versions (e.g. 1.3.1 since the end of October 2024) are available on github alongside installation instructions.

Because topics such as monitoring, automated deployments, scaling, network connection and security are particularly important when operating containers, this package uses many additional containers that have been integrated with each other. The aim is to create a seamless, complete microservice runtime platform. Naturally, it is completely open source and its components are interchangeable, e.g. with commercial products or cloud services. In addition to the containers already known from package 1 (an Oracle Database 23ai FREE and a local ollama GenAI), the following components, among others, are used here:

Developer support:

- Multiple Visual Studio Code plugins (Oracle Backend, Spring, Java, Oracle Database, Kubernetes, Oracle Cloud, Helidon)

- Spring Framework, like Spring AI (as alternative to langchain4j), Spring Database etc., with numerous Oracle plugins and kickstarters for quicker usage, like Oracle Database, Oracle Transaction Manager for Microservices, Oracle Transactional Event Queues, Oracle Vector Search, …

Platform “Microservices”:

- Kafka Messaging (Strimzi Operator), Oracle Database (OraOperator), Netflix Conductor (Flow Engine), ollama GenAI, Oracle Coherence Operator

Deployment and Monitoring:

- Spring Cloud Config Server, Spring Cloud Admin Server, Service Operations Center, Spring Cloud Eureka Service Registry, Grafana, Loki, Prometheus, Jaeger, OpenTelemetry collector

Network, Security and Identity:

- cert-manager, nginx ingress controller, HashiCorp Vault, Spring Authorization Server, Apache APISIX (API Gateway)

All these services require at least one container each, and the operators for Kafka, Oracle Database etc. in particular create additional containers. It is therefore hardly surprising that installing and operating the full platform is only possible under Kubernetes, although minimalist installations with docker or podman are also supported. Some cloud Kubernetes distributions are directly supported, e.g. Azure and Oracle Cloud, with ansible playbooks or a terraform stack. A generic ansible playbook is provided for any other distribution such as minikube, Oracle OCNE, OpenShift and Tanzu. And for quick tests, VMs with a prepared environment based on minikube or k3s are available via the Oracle Cloud Marketplace.

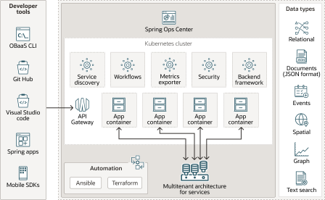

A sample application (CloudBank) is created with the help of a hands-on lab as part of the documentation, with several REST services, microservice transactions (SAGAs), and of course an AI chatbot. An explanatory video about this new microservices runtime platform from Oracle is also part of the documentation, and we can expect more innovations, apps and add-ons in the near future.

Package 3: Oracle Application Express Version 24+

Python and Java developers have been provided with packages 1 and 2, but PL/SQL developers will definitely not miss out either. In principle, what is possible with langchain for Python and with Spring AI or langchain4j for Java is also possible with PL/SQL, provided you have an Oracle Database 23ai to hand, e.g. as a FREE variant in the container.

Package 3 therefore consists of an Oracle Database 23ai container, an ORDS container for access to Application Express (APEX) and to REST services within the database, and again an ollama container. As in package 1, you can start all containers individually using docker or podman. Alternatively, you can use the Oracle Database Operator under Kubernetes to provision an Oracle database with an attached ORDS, and set up the ollama container separately under Kubernetes as in package 2, e.g. via helm chart.

For the sake of simplicity, you can find the instructions for the docker and podman variants here:

- Description of how to start a Database 23ai FREE container with docker or podman, on container-registry.oracle.com

- Description of how to start an ORDS/APEX container with docker or podman, on container-registry.oracle.com

- Description of how to start an ollama container, from the “hands on” instructions of the Oracle AI optimizer (Package 1)

About the database container:

New APIs (packages) provided in Oracle Database 23ai enable the handling of GenAIs and vector data. The new packages have names such as DBMS_VECTOR and DBMS_VECTOR_CHAIN, and they enable, among other things, very typical functions already known from the langchain framework:

- extracting texts from accessible documents (stored externally or as a BLOB),

- the usual “chunking” of these texts into smaller overlapping sections,

- the calculation of vector data (embeddings) on various data (texts, images, JSON documents, …), either within the database or outside via REST service calls,

- and of course various types of interaction with a GenAI outside the database, such as OpenAI, Cohere or a local ollama, via REST service calls.
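The “chunking” step in particular is easy to picture in code. The following Python sketch splits a text into overlapping sections by character count. It only illustrates the principle and is not the algorithm used by DBMS_VECTOR_CHAIN or langchain; the function name `chunk_text` and the default sizes are assumptions chosen for the example.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of at most chunk_size characters.

    Each chunk repeats the last `overlap` characters of its predecessor,
    so that sentences cut at a boundary still appear whole in one chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - overlap  # distance between two chunk starts
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the end of the text has been covered
    return chunks
```

For example, `chunk_text("abcdefghij", chunk_size=4, overlap=2)` yields `["abcd", "cdef", "efgh", "ghij"]`. Real chunkers usually split on words or sentences rather than raw characters, but the overlap idea is the same.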

A newly introduced VECTOR data type is supported by its own indices and queries. In addition to texts and images, JSON documents are now also given special consideration and can be queried in a hybrid fashion, i.e. both CONTEXT full-text indices and VECTOR indices can be used in one query. In other words, a combined semantic and keyword-based search takes place, which should further improve the search result.
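The idea behind such a combined search can be sketched in a few lines of Python. The example below blends a cosine similarity on embeddings with a naive keyword match into one score. This is a conceptual illustration only: the scoring inside the Oracle database works differently, and the function names, the equal weighting and the tiny two-dimensional vectors are all assumptions made for the example.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Semantic part: cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def keyword_score(query: str, text: str) -> float:
    """Keyword part: fraction of query terms that occur in the text."""
    terms = query.lower().split()
    words = set(text.lower().split())
    return sum(term in words for term in terms) / len(terms) if terms else 0.0


def hybrid_rank(query, query_vec, docs, weight=0.5):
    """Rank (text, vector) pairs by a weighted mix of both scores, best first."""
    scored = [
        (weight * cosine_similarity(query_vec, vec)
         + (1 - weight) * keyword_score(query, text), text)
        for text, vec in docs
    ]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)
```

A document that matches both by meaning and by wording ends up at the top, while a document that matches on only one of the two criteria still receives a partial score instead of being dropped.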

A separate manual in the database documentation is dedicated to the Vector Search functionality and the connection of external GenAIs.

About the Application Express container:

Application Express 24.1 has wizards like the Generative AI Workspace Utility to create an AI chatbot application quite comfortably. You only need to have access to a GenAI via its REST interface. An Oracle LiveLab environment will guide you through the necessary steps.

In addition to textual instructions, there are various explanatory YouTube videos on the same topic.

The use of VECTOR data types and queries on them have also found their way into APEX Wizards and are described in LiveLabs and demos.

Oracle APEX includes its own flow or workflow engine to graphically formulate and visualize sequences in a process or program. You could use it to build your own AI agents and also make them callable from outside via REST service calls. So far I have found an example for booking doctor’s appointments. However, a GenAI agent created with APEX workflows is probably worth a separate blog entry.

In general, APEX offers steadily improving GenAI support during application development as well. Already in the first LiveLab mentioned, you will notice that an AI-based helper can generate SQL queries and interface fragments for you. Further GenDev features and improvements in APEX version 24.2 are summarized on the APEX Homepage.

About the ollama container:

Various GenAI providers are supported within the Oracle Database 23ai, e.g. Oracle Cloud GenAI, Cohere, OpenAI, HuggingFace and now also ollama. You are therefore no longer forced to formulate REST service calls to an ollama container by hand and call them with the UTL_HTTP package. You can use the convenience functions in DBMS_VECTOR_CHAIN to generate embeddings or texts by specifying ollama as the provider. You can find an example of this in our database documentation. However, the documentation has not yet been fully adapted: for example, the list of supported providers and usable features still states that the UTL_HTTP package should be used for ollama. An example of calling the ollama container using UTL_HTTP can be found in another github repository (for a Vector Search Workshop in German, which you are welcome to request from me).
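To give an impression of what “specifying ollama as the provider” looks like, here is a small Python sketch that assembles the JSON parameters and the SQL statement for an embedding call. The parameter keys (`provider`, `host`, `url`, `model`), the endpoint path and the statement shape are my assumptions based on the documented style of DBMS_VECTOR_CHAIN.UTL_TO_EMBEDDING and should be checked against the current database documentation; the helper name `ollama_embedding_params` is hypothetical.

```python
import json

# Assumption: UTL_TO_EMBEDDING takes the input text and a JSON parameter document.
# Verify the exact signature in the Oracle Database 23ai documentation.
EMBEDDING_SQL = (
    "SELECT DBMS_VECTOR_CHAIN.UTL_TO_EMBEDDING(:text, JSON(:params)) FROM dual"
)


def ollama_embedding_params(
    model: str,
    url: str = "http://localhost:11434/api/embeddings",  # assumed local ollama endpoint
) -> str:
    """Return the JSON parameter string that names ollama as the provider."""
    params = {
        "provider": "ollama",  # assumed provider key and value
        "host": "local",       # assumed marker for a locally reachable service
        "url": url,
        "model": model,
    }
    return json.dumps(params)
```

The statement and the parameter string would then be passed to the database with bind variables through a driver of your choice, with the database (not the Python client) making the REST call to the ollama container.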

Summary and outlook

With the support and easy integration of a local GenAI such as ollama as a container, the number of options for testing, developing and going into operation grows enormously. Thanks to a container-based microservice architecture, individual containers can be replaced by particularly powerful cloud services at any time to meet special requirements, and moving the environment to a cloud or another data center becomes simpler in general. Try out the various available large language models to compare their CPU and GPU performance and their application areas. Formulate your own prompts so that the GenAI not only answers questions, but also decides which functions should be called and which kinds of data stores should be used (relational, json, graph, text, …). The programming language used is relatively irrelevant, because Oracle supports you with the most common environments for Python, Java or PL/SQL. And it’s free from the outset thanks to freely available components from Oracle and other embedded providers.