Can Oracle Object Storage be used with Oracle Database on-premises and if yes how can this be done? What is Oracle Object Storage? Oracle Object Storage is a persistent cloud storage service that is available in all cloud regions. Developers and IT administrators can use this service to store and access data at low cost. Further information on prices, documentation etc. can be found here.

Interesting use cases for database applications are external tables or hybrid partitioned tables which could store the data not only in the database but also in the object storage. The required functionality is provided via the PL/SQL interface DBMS_CLOUD. With DBMS_CLOUD, it is possible to use Oracle Cloud Object Storage as data storage for the database. Originally, this functionality was reserved for users of Oracle Autonomous Database (short ADB) and has always been part of the standard functionality of the Oracle Autonomous Database. With Oracle Database Release 19c (beginning with 19.9) DBMS_CLOUD is now also available for all other database installations – on-premises and in cloud.

With DBMS_CLOUD, you can create external tables or hybrid partitioned tables and thus store the data not only in the database but also in the object storage.

The following list shows some examples:

- COPY_DATA for loading data from Object Storage into tables

- CREATE_CREDENTIAL for saving Cloud Object Storage credentials

- CREATE_EXTERNAL_TABLE to create external tables

- CREATE_EXTERNAL_PART_TABLE for creating partitioned external tables

- CREATE_HYBRID_PART_TABLE to create hybrid partitioned tables

The complete list of functions and procedures can be found in the PL/SQL Packages and Types Reference Guide under DBMS_CLOUD. If you want to try it out: My colleague Witold Swierzy put together some SQL examples on github. You can find the examples here.

However, there is still a catch: the package is not pre-installed. It must be installed manually and users or roles for using this package must be configured manually. Information on the step-by-step installation can be found in the MOS Note How To Setup And Use DBMS_CLOUD Package (Doc ID 2748362.1). Please note, this package is supported in Oracle Database 19c beginning with 19.9 and in Oracle Database 21c beginning with 21.3.

The following sections are intended to illustrate the setup and configuration and encourage you to follow along. Finally, application examples are shown to demonstrate the use.

Two configuration steps are required – firstly the installation of the DBMS_CLOUD package and then the security configuration of roles and users in order to be able to use the package.

Installation of DBMS_CLOUD

Please note, this package is supported in Oracle Database 19c beginning with 19.9 and in Oracle Database 21c beginning with 21.3. Installation in non-CDB environments is currently not supported.

Following steps are required for the installations:

1) Create schema C##CLOUD$SERVICE as owner of DBMS_CLOUD

First, the common schema C##CLOUD$SERVICE is created in the CDB and the PDBs. In the multitenant architecture, the Perl script catcon.pl can be used to ensure that DBMS_CLOUD is installed in all PDBs. An SQL script with the name dbms_cloud_install.sql, which contains the necessary commands, is created and stored in the dbc directory. (The code can be found in the MOS Note mentioned above or here).

Note: The installation instructions assume that the Database Vault option is installed. If errors occur in this context, they can be ignored if the Database Vault option is not installed.

Then the call looks like this:

$ORACLE_HOME/perl/bin/perl $ORACLE_HOME/rdbms/admin/catcon.pl -u sys/<password> --force_pdb_mode 'READ WRITE' -b dbms_cloud_install -d /home/oracle/dbc -l /home/oracle/dbc dbms_cloud_install.sql

The following commands can be helpful for verification.

-- from within the ROOT Container

SQL> select con_id, owner, object_name, status, sharing, oracle_maintained

from cdb_objects where object_name = 'DBMS_CLOUD' order by con_id;

CON_ID OWNER OBJECT_NAME STATUS SHARING O

---------- -------------------- --------------- ------- ------------------ -

1 PUBLIC DBMS_CLOUD VALID METADATA LINK Y

1 C##CLOUD$SERVICE DBMS_CLOUD VALID METADATA LINK Y

1 C##CLOUD$SERVICE DBMS_CLOUD VALID METADATA LINK Y

3 PUBLIC DBMS_CLOUD VALID METADATA LINK Y

3 C##CLOUD$SERVICE DBMS_CLOUD VALID METADATA LINK Y

3 C##CLOUD$SERVICE DBMS_CLOUD VALID METADATA LINK Y

5 PUBLIC DBMS_CLOUD VALID METADATA LINK Y

5 C##CLOUD$SERVICE DBMS_CLOUD VALID METADATA LINK Y

5 C##CLOUD$SERVICE DBMS_CLOUD VALID METADATA LINK Y

-- from within PDB

SQL> select owner, object_name, status, sharing, oracle_maintained

from dba_objects where object_name = 'DBMS_CLOUD';

OWNER OBJECT_NAME STATUS SHARING O

-------------------- -------------------- ------- ------------------ -

PUBLIC DBMS_CLOUD VALID METADATA LINK Y

C##CLOUD$SERVICE DBMS_CLOUD VALID METADATA LINK Y

C##CLOUD$SERVICE DBMS_CLOUD VALID METADATA LINK Y

Create the SSL wallet with certificates for accessing HTTP URIs and the object storage

First, the required certificates must be downloaded from the following URL and unpacked in the /home/oracle/dbc directory, for example. Then an SSL wallet is created in the directory /opt/oracle/dcs/commonstore/wallets/ssl with the following orapki commands. (Incidentally, any other directory can also be selected).

cd /opt/oracle/dcs/commonstore/wallets/ssl orapki wallet create -wallet . -pwd <your_chosen_wallet_pw> -auto_login #! /bin/bash for i in 'ls <location of="" cert="" files"">/*cer' do orapki wallet add -wallet . -trusted_cert -cert $i -pwd <SSL Wallet password> done

To have your SSL wallet taken into effect you need to point to the newly created ssl wallet for your Oracle installation by adding it to your sqlnet.ora on the Server side.

WALLET_LOCATION= (SOURCE=(METHOD=FILE)(METHOD_DATA= (DIRECTORY=/opt/oracle/dcs/commonstore/wallets/ssl)))

Now the network ACLs (short for Access Control List) are set up via DBMS_NETWORK_ACL_ADMIN. As a reminder, ACLs are required by users to control network access from the database, for example via UTL_HTTP or UTL_TCP. The necessary commands can be copied from the MOS Note or downloaded here. They are stored in the dbc_aces.sql file for easy execution. The variable sslwalletdir must also be replaced correctly here. The script is executed in the root container.

# Connect to CDB$ROOT connect sys/<password> as sysdba @@dbc_aces.sql

The following check shows that the wallet has been created:

SQL> select * from database_properties

where property_name in ('SSL_WALLET','HTTP_PROXY');

PROPERTY_NAME PROPERTY_VALUE

-------------------- ---------------------------------------------

DESCRIPTION

------------------------------------------------------------

SSL_WALLET /opt/oracle/dcs/commonstore/wallets/ssl

Location of SSL Wallet

For final verification, you can use a special script that can be found in the MOS Note or downloaded here. Please do not forget to replace the variables with the correct entries. The result of the execution should be “valid response”. Otherwise, the configuration must be checked again and repeated if necessary.

...

old 2: &clouduser..GET_PAGE('https://objectstorage.eu-frankfurt-1.oraclecloud.com');

new 2: C##CLOUD$SERVICE.GET_PAGE('https://objectstorage.eu-frankfurt-1.oraclecloud.com');

valid response

...

Configuring users or roles to use DBMS_CLOUD

The required rights are now assigned either via a role or directly to the user. Both procedures are explained in the MOS Note. For test purposes, the direct assignment of rights is shown here using the example of the user SCOTT. In general, it is of course advisable to define a role instead of granting privileges directly and individually.

grant CREATE TABLE to SCOTT;

grant read, write on directory DATA_PUMP_DIR to SCOTT;

grant EXECUTE on dbms_cloud to SCOTT;

-- configure ACLs and ACEs

@$ORACLE_HOME/rdbms/admin/sqlsessstart.sql

-- Create New ACL / ACE s

begin

-- Allow all hosts for HTTP/HTTP_PROXY

dbms_network_acl_admin.append_host_ace(

host =>'*',

lower_port => 443,

upper_port => 443,

ace => xs$ace_type(

privilege_list => xs$name_list('http', 'http_proxy'),

principal_name => upper('SCOTT'),

principal_type => xs_acl.ptype_db));

-- Allow wallet access

dbms_network_acl_admin.append_wallet_ace(

wallet_path => 'file:/opt/oracle/dcs/commonstore/wallets/ssl',

ace => xs$ace_type(privilege_list =>

xs$name_list('use_client_certificates', 'use_passwords'),

principal_name => upper('SCOTT'),

principal_type => xs_acl.ptype_db));

end;

/

@$ORACLE_HOME/rdbms/admin/sqlsessend.sql

The configuration for the SCOTT user is now complete and the DBMS_CLOUD package can be used.

Some application scenarios

To access data in Object Store that is not public you need to authenticate with a OCI user in your tenancy that has appropriate privileges to the object storage bucket in the region in question. You need to create either an OCI API signing key or an auth token for a user in your tenancy. See https://docs.oracle.com/en-us/iaas/Content/Identity/Tasks/managingcredentials.htm for details.

Assuming you have created an auth token you now need to create a credential object in your database schema for authentication:

SQL> connect scott/<password>@US1

Connected.

SQL> BEGIN DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => '<credential name>',

username => '<your cloud service credentials>',

password => '<passwort'>);

END;

/

PL/SQL procedure successfully completed.

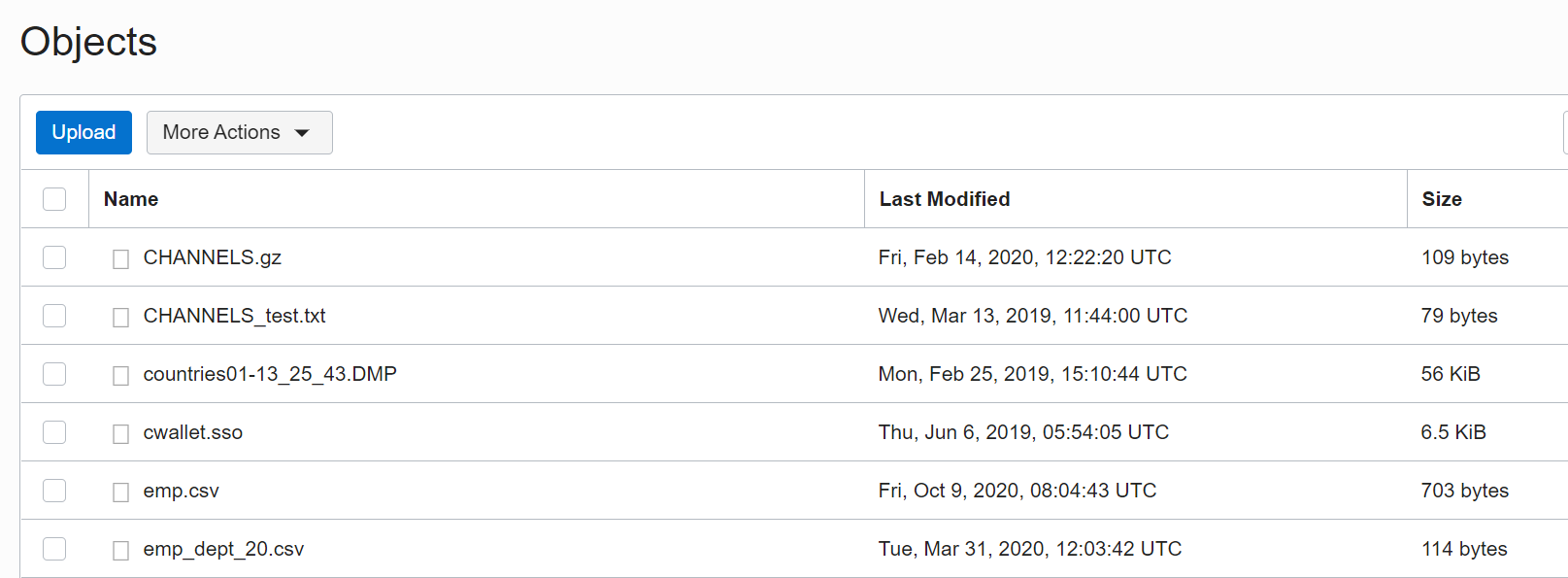

In the first example, the user SCOTT will read a bucket from Object Storage. As a reminder: Buckets in Object Storage are logical containers for storing objects. Users or systems create buckets as required within a region. A region is linked to a single compartment that has policies that define which actions a user can perform. The bucket in my example is called USBUCKET and looks like this:

The files from the bucket can now be listed. This requires not only the credential information but also the URL of the bucket (also location_uri), which can be derived from the “View Object Details” menu item in Object Storage.

SQL> select object_name, bytes

from DBMS_CLOUD.LIST_OBJECTS('<credential name>','https://objectstorage.<region>.oraclecloud.com/n/<ObjectStorageNameSpace>/b/<BucketName>/o/'),

OBJECT_NAME BYTES

-------------------------------------------------- ----------

CHANNELS.gz 109

CHANNELS_test.txt 79

countries01-13_25_43.DMP 57344

cwallet.sso 6661

emp.csv 703

emp_dept_20.csv 114

emp_dept_20.exp 12288

emp_dept_30.csv 121

emp_dept_30.exp 12288

exp_scott.dmp 0

exp_scott.dmp_aaaaaa 471040

In the next example, we want to use an external table. The files are stored in Object Storage. The CREATE_EXTERNAL_TABLE function is now used. For a successful application, it is necessary to specify at least the table name, the credential name, the FILE_URI_LIST, the FORMAT argument and the COLUMN_LIST argument, which specifies the list of column names and data types, separated by commas, for the external table. The saved files do not necessarily have to be in text format. As in on-premises installations, the CSV format is also possible with the TYPE format option. Pre-compressed files can also be read. The COMPRESSION format option for compressed files enables access to files of the compression type gzip, zlib and bzip2. Parquet files can also be used. The TYPE option and the value ‘parquet’ must be used for the format option.

SQL> drop table channels_ext;

SQL> begin DBMS_CLOUD.CREATE_EXTERNAL_TABLE(table_name=>'CHANNELS_EXT',

credential_name => '<credential name>',

file_uri_list => ''https://objectstorage.<region>.oraclecloud.com/n/<ObjectStorageNameSpace>/b/<BucketName>/o/'CHANNELS.gz',

format => json_object('compression' value 'auto','delimiter' value '|' ),

column_list =>'channel_short varchar2(1),

channel_long varchar2(20),

channel_class varchar2(20)');

end;

/

SQL> select * from channels_ext;

C CHANNEL_LONG CHANNEL_CLASS

- -------------------- --------------------

S Direct Sales Direct

T Tele Sales Direct

C Catalog Indirect

I Internet Indirect

P Partners Others

In the last example, we copy data from the file CHANNELS_test.txt into the database table CHANNELS.

SQL> drop table channels;

SQL> create table channels

(channel_id CHAR(1),

channel_desc VARCHAR2(20),

channel_class VARCHAR2(20));

SQL> begin DBMS_CLOUD.COPY_DATA(table_name => 'CHANNELS',

credential_name => '<credential name>',

file_uri_list => 'https://objectstorage.<region>.oraclecloud.com/n/<ObjectStorageNameSpace>/b/<BucketName>/o/'CHANNELS_test.txt',

schema_name => 'SCOTT',

format => json_object('delimiter' value '|'));

end;

/

SQL> select * from channels;

C CHANNEL_DESC CHANNEL_CLASS

- -------------------- --------------------

T Tele Sales Direct

C Catalog Indirect

I Internet Indirect

P Partners Others

Of course there is much more. One other interesting topics is certainly hybrid partitioned tables and much more.

Have fun testing and trying things out.

Links, documentation and github

- MOS Note How To Setup And Use DBMS_CLOUD Package (Doc ID 2748362.1)

- PL/SQL Packages and Types Reference Guide under DBMS_CLOUD

- SQL examples on github (Witold Swierzy)

- Hybrid Partitioned Tables – an introduction with examples