Generative AI applications are increasingly constrained not by compute, but by where enterprise data resides. As organizations adopt Retrieval Augmented Generation (RAG), they face a common challenge: how to run high-performance AI workloads while securely accessing distributed data across multiple clouds.

In this post, we walk through how to run NVIDIA RAG workloads on Oracle Cloud Infrastructure (OCI) with best-in-class GPU performance while securely accessing data stored in Azure and Google Cloud Platform (GCP). The approach limits data sprawl, avoids public internet exposure, and reduces architectural complexity.

Why NVIDIA RAG on OCI?

OCI provides a purpose-built platform for large-scale AI workloads, combining bare metal NVIDIA GPUs, RDMA networking, an AI-enabled database (Oracle Database 26ai), and predictable pricing. When paired with NVIDIA’s officially supported RAG Blueprints and NVIDIA Inference Microservices (NIM), OCI becomes an ideal environment for running production-grade generative AI systems.

Key advantages include:

- Best-in-class GPU performance with bare metal A100, H100, and H200 GPUs

- Massive GPU scaling with low-latency RDMA networking

- Private, isolated GPU clusters for secure enterprise AI

- No premium pricing for performance, enabling cost-efficient scaling

- Kubernetes-native AI deployment using Oracle Kubernetes Engine (OKE)

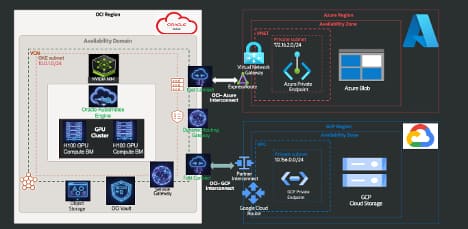

Bringing AI to Your Data with Multi-Cloud Interconnect

Enterprise data rarely resides in a single cloud. Rather than moving data into OCI, this architecture brings AI to the data over private, high-bandwidth interconnects, all within a unified RAG pipeline:

- OCI–Azure Interconnect with Azure Private Endpoints

- OCI–GCP Interconnect with Google Cloud Private Service Connect

These interconnects provide:

- Private, secure connectivity

- Consistent performance

- No exposure to the public internet

- Compliance-friendly data access

This allows OCI-hosted GPU workloads to securely access:

- Azure services such as Blob Storage, Virtual Machines, and database services

- GCP services such as Cloud Storage, virtual machines, and database services

- OCI Object Storage, accessed privately through an OCI Service Gateway
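One way to sanity-check the "no public internet" property from inside the cluster is to confirm that a storage hostname resolves only to private addresses. The following is a minimal sketch using the Python standard library. The function names are ours, and it assumes that private DNS for the Azure Private Endpoint or Private Service Connect endpoint is already configured so that the hostname resolves to an internal IP.

```python
import ipaddress
import socket


def resolve_addresses(hostname: str, port: int = 443) -> list[str]:
    """Return the distinct IP addresses that DNS reports for a hostname."""
    infos = socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
    return sorted({info[4][0] for info in infos})


def all_private(addresses: list[str]) -> bool:
    """True only if at least one address is given and every one is private."""
    return bool(addresses) and all(
        ipaddress.ip_address(addr).is_private for addr in addresses
    )


# Demonstration with literal addresses, so no network access is needed here.
print(all_private(["10.0.1.15"]))             # True
print(all_private(["10.0.1.15", "8.8.8.8"]))  # False
```

In a real check you would call `all_private(resolve_addresses(host))` from a pod in the cluster, where `host` is your own storage endpoint. A `False` result suggests traffic to that endpoint could leave over a public path.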

Architecture Overview

At a high level, the solution consists of:

- Oracle Kubernetes Engine (OKE) running on bare metal NVIDIA H100 GPUs

- NVIDIA NIM deployed as inference microservices

- NVIDIA RAG Blueprint for ingestion, retrieval, and generation

- OCI–Azure and OCI–GCP Interconnects for secure data access

- Object storage across OCI, Azure, and GCP

- Full observability and GPU monitoring

This architecture enables enterprises to deploy distributed RAG systems without compromising performance, security, or data locality.
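To make the ingestion, retrieval, and generation stages concrete, here is a toy sketch of the flow in plain Python. It is not the NVIDIA RAG Blueprint's implementation: the bag-of-words `embed` function stands in for an embedding model, the in-memory list stands in for a vector database, and `build_prompt` only assembles the text a generation service would receive. All names are illustrative.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a real model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def ingest(documents: list[str]) -> list[tuple[str, Counter]]:
    """Ingestion: store each document next to its vector."""
    return [(doc, embed(doc)) for doc in documents]


def retrieve(index: list[tuple[str, Counter]], query: str, k: int = 2) -> list[str]:
    """Retrieval: return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_prompt(query: str, context: list[str]) -> str:
    """Generation input: the question plus the retrieved context."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


docs = [
    "invoices are stored in azure blob storage",
    "gpu clusters run on oci bare metal",
    "logs are archived in gcp cloud storage",
]
index = ingest(docs)
question = "where are invoices stored"
print(build_prompt(question, retrieve(index, question, k=1)))
```

In the architecture described above, the documents would come from Azure, GCP, and OCI storage, and each stage would run as a GPU-accelerated service rather than a local function.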

Deploying NVIDIA RAG on OCI

Deployment follows NVIDIA’s officially supported RAG Blueprint and includes:

- OKE cluster deployment on OCI bare metal GPU shapes

- NVIDIA NIM installation for optimized inference

- RAG ingestion services to process data from Azure and GCP

- Query and retrieval pipelines using vector databases

- Generation services delivering contextual responses

OCI provides a GPU QuickStart to accelerate cluster setup: https://github.com/oracle-quickstart/oci-hpc-oke

Oracle also offers AI Accelerator Packs, which support one-click deployment of NVIDIA RAG on OCI. Read more: https://github.com/oracle-quickstart/oci-ai-blueprints/blob/main/docs/ai_accelerator_packs/about.md
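For readers new to GPU scheduling on Kubernetes, the sketch below shows how a workload asks for GPUs: it sets a limit on the `nvidia.com/gpu` resource, which is exposed once the NVIDIA device plugin is running on the GPU nodes. This is only an illustration and not the Blueprint's own deployment manifests. The pod name and image are hypothetical placeholders.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal Pod manifest that requests a number of NVIDIA GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPUs are requested through limits on the extended resource.
                    "resources": {"limits": {"nvidia.com/gpu": str(gpus)}},
                }
            ],
        },
    }


# Placeholder values (assumptions): replace with your own name and image.
manifest = gpu_pod_manifest("nim-demo", "example.invalid/your-nim-image:tag", gpus=1)
print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output can be saved to a file and applied to an OKE cluster as-is.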

Secure Data Access Across Clouds

Custom ingestion jobs securely read data from Azure and GCP over private interconnects and feed it directly into the RAG ingestion services running on OCI GPUs. This approach:

- Eliminates unnecessary data duplication

- Preserves data sovereignty

- Reduces egress costs

- Maintains consistent security boundaries

The result is real-time, context-aware AI operating across cloud boundaries.
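The shape of such a custom ingestion job can be sketched as follows. This is a minimal outline under stated assumptions, not the code used in this solution: `ObjectSource`, `run_ingestion`, and `submit` are hypothetical names, and the cloud SDK calls are left to the caller as plain callables so the example stays self-contained. Each object is read into memory and handed straight to the ingestion service, with no intermediate copy written to disk.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class ObjectSource:
    """One bucket or container, described by two caller-supplied callables."""
    name: str                               # label such as "azure" or "gcp"
    list_keys: Callable[[], Iterable[str]]  # lists the object names
    read: Callable[[str], bytes]            # downloads one object


def run_ingestion(
    sources: list[ObjectSource],
    submit: Callable[[str, bytes], None],
) -> dict[str, int]:
    """Stream every object from every source to the ingestion service."""
    counts: dict[str, int] = {}
    for source in sources:
        counts[source.name] = 0
        for key in source.list_keys():
            payload = source.read(key)  # held in memory only
            submit(f"{source.name}/{key}", payload)
            counts[source.name] += 1
    return counts


# In-memory stand-ins for the remote stores (illustrative data only).
azure = {"reports/q1.txt": b"first quarter", "reports/q2.txt": b"second quarter"}
gcp = {"logs/app.txt": b"application log"}
received: list[tuple[str, int]] = []

result = run_ingestion(
    [
        ObjectSource("azure", azure.keys, azure.__getitem__),
        ObjectSource("gcp", gcp.keys, gcp.__getitem__),
    ],
    submit=lambda doc_id, data: received.append((doc_id, len(data))),
)
print(result)
```

In practice, `list_keys` and `read` would wrap the Azure and Google Cloud storage clients pointed at the private endpoints, and `submit` would call the RAG ingestion service running on OCI.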

Observability and GPU Monitoring

To support enterprise-grade operations, the solution includes complete telemetry and monitoring. An in-house tool, OCI GPU Scanner (https://github.com/oracle-quickstart/oci-gpu-scanner), provides visibility into:

- GPU utilization

- Performance bottlenecks

- Resource efficiency

This ensures teams can optimize cost, performance, and scalability as workloads grow.
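To illustrate the kind of signal behind these metrics, the sketch below parses the CSV output of `nvidia-smi` and flags GPUs whose utilization falls under a threshold. It does not describe how OCI GPU Scanner works internally. The sample readings and the 30% threshold are made up for the example, and the helper names are ours.

```python
import csv
import io
from dataclasses import dataclass

# Command that produces the CSV parsed below, for example via
# subprocess.run(QUERY, capture_output=True, text=True).stdout
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,utilization.gpu,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


@dataclass
class GpuSample:
    index: int
    utilization_pct: float
    memory_used_mib: float
    memory_total_mib: float


def parse_samples(text: str) -> list[GpuSample]:
    """Parse nvidia-smi CSV rows into one GpuSample per GPU."""
    rows = csv.reader(io.StringIO(text), skipinitialspace=True)
    return [
        GpuSample(int(r[0]), float(r[1]), float(r[2]), float(r[3]))
        for r in rows
        if r  # skip blank lines
    ]


def underutilized(samples: list[GpuSample], threshold_pct: float = 30.0) -> list[int]:
    """Return the indexes of GPUs running below the utilization threshold."""
    return [s.index for s in samples if s.utilization_pct < threshold_pct]


# Made-up readings for two GPUs (illustrative only).
sample_output = "0, 92, 70123, 81559\n1, 4, 512, 81559\n"
samples = parse_samples(sample_output)
print(underutilized(samples))  # [1]
```

A persistently idle GPU on a bare metal node is a direct cost signal, which is why utilization is usually the first metric teams track.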

Conclusion

Running NVIDIA RAG on OCI with secure access to Azure and GCP data demonstrates what’s possible when cloud infrastructure is designed for AI from the ground up. By combining OCI’s GPU performance and private networking with NVIDIA’s AI software stack, organizations can unlock powerful generative AI capabilities without compromising on security, cost, or architectural simplicity.