Customers are moving their applications, data, file systems, and other resources to the public cloud to take advantage of its flexibility, scale, and enhanced security. With the advantage of cloud native services, they might also want to migrate or deploy applications that have strict performance requirements, such as storage, processing, and latency. Customers must ensure that the cloud provider they choose has the appropriate resources to meet these requirements. We also highly recommend that customers conduct thorough testing to ensure that their choice meets all performance, security, and availability criteria.

File systems: On the cloud?

You might choose to deploy your own clustered file system instead of using a cloud native file system, such as the Oracle File Storage service, for one or more of the following reasons:

- A clustered file system simplifies the management of mounted file systems by presenting them as a single logical system.

- Enabling simultaneous access to the file system increases its utilization and can provide higher performance.

- When storage servers are bundled in a cluster, data retrieval using a traditional file system can become complicated. Clustered file systems can organize data across different clusters. This organization enables simultaneous access to files and handles demanding workloads.

- Clustered file systems provide higher redundancy if a node in the cluster fails.

Let’s consider a specific example of machine learning (ML) and big data applications. These workloads usually require high-performance computing (HPC) nodes to achieve the necessary performance. For example, a machine learning application might require access to a large number of files for training the ML model. A big data application might also require access to a large set of data files, although the files themselves might be much larger than the ones used by an ML application. Both types of application require low latency and high I/O throughput for access to the files.

You can achieve this configuration in Oracle Cloud Infrastructure (OCI) by deploying HPC nodes with the multiattach block volume feature. Block Volumes in OCI are NVMe-based, and their performance is backed by SLAs. This feature provides redundancy with no extra effort, decreasing the solution cost.

HPC nodes use OCI’s RDMA over Converged Ethernet, also known as RoCE. RoCE is a key feature of our HPC cluster network offering and provides a fast, reliable 100-Gbps RDMA infrastructure, which significantly reduces latency and increases throughput.

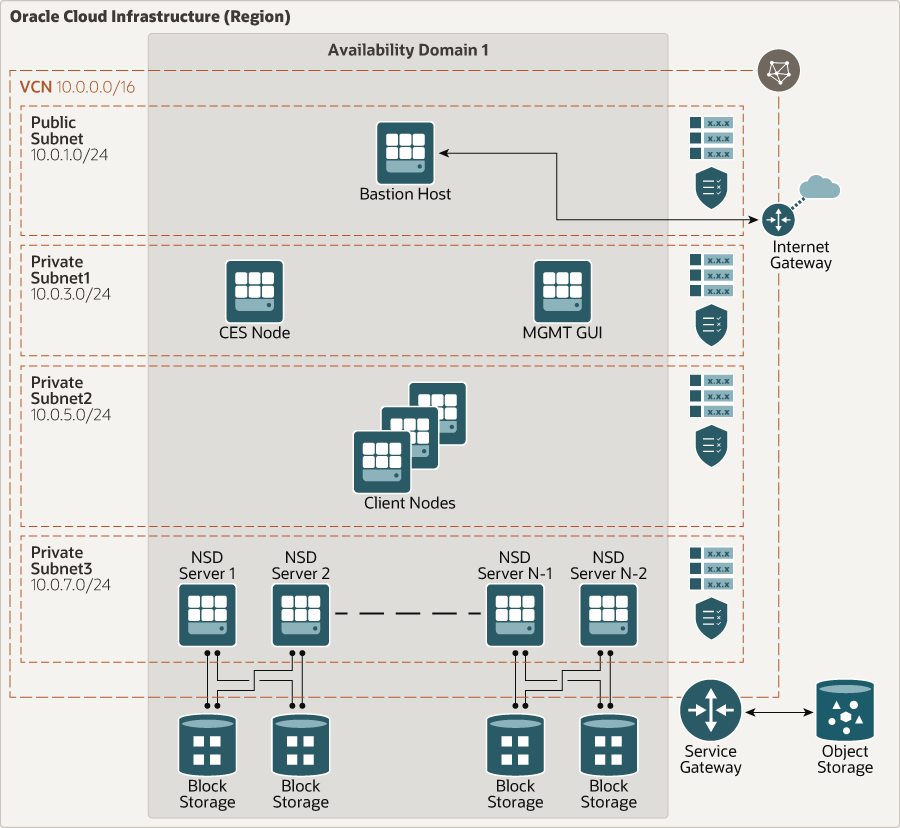

For clustered file systems on OCI, such as IBM Spectrum Scale, Oracle Cloud customers can use cluster networking to get over 140 GB/s throughput in a cloud-based file server. Network Shared Disk (NSD) servers provide access to storage that’s visible on the servers as local block devices. These servers use the Block Volume multiattach feature and HPC Compute shapes. The following graphic depicts the architecture for this deployment:

In testing conducted by Oracle for volumes of 1 TB and above, the balanced performance tier achieved a throughput of approximately 500 MB/s by default and at no extra charge.
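To put these figures in perspective, the following back-of-the-envelope sketch estimates lower bounds on the number of nodes and volumes for a given aggregate throughput target. It uses only the numbers quoted above (140 GB/s aggregate, 100 Gbps per node, roughly 500 MB/s per volume). The function names are hypothetical, and the sketch assumes that throughput adds up linearly and that every node can saturate its network link, which real deployments rarely achieve. The text also doesn't state that the 140 GB/s result used balanced-tier volumes, so treat the output as a rough floor, not a sizing guide.

```python
import math

# Figures quoted in the text above.
TARGET_GB_PER_S = 140.0     # aggregate file server throughput, in GB/s
NODE_GBITS_PER_S = 100.0    # RDMA cluster network bandwidth per node, in Gbps
VOLUME_MB_PER_S = 500.0     # approximate per-volume throughput, in MB/s


def min_nodes(target_gb_per_s: float, node_gbits_per_s: float) -> int:
    """Lower bound on node count, assuming each node saturates its link."""
    node_gb_per_s = node_gbits_per_s / 8  # 8 bits per byte
    return math.ceil(target_gb_per_s / node_gb_per_s)


def min_volumes(target_gb_per_s: float, volume_mb_per_s: float) -> int:
    """Lower bound on volume count, assuming throughput scales linearly."""
    target_mb_per_s = target_gb_per_s * 1000  # decimal units: 1 GB = 1000 MB
    return math.ceil(target_mb_per_s / volume_mb_per_s)


if __name__ == "__main__":
    print("Minimum nodes:", min_nodes(TARGET_GB_PER_S, NODE_GBITS_PER_S))
    print("Minimum volumes:", min_volumes(TARGET_GB_PER_S, VOLUME_MB_PER_S))
```

Under these assumptions, a 140 GB/s target needs at least 12 nodes at 100 Gbps each and at least 280 volumes at 500 MB/s each. Protocol overhead, replication, and uneven load all push the real numbers higher.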

Don’t need HPC?

Not all applications require HPC compute nodes or a clustered file system for high performance. In those cases, you can use standard VMs with multiattach block volumes.

Let’s look at four popular file systems that you can deploy on OCI. OCI provides the best price-performance for these deployments through the following capabilities:

- Virtual machine (VM) or bare metal nodes, including DenseIO shapes with locally attached NVMe storage and HPC shapes. Bare metal shapes provide 2×50 Gbps network interfaces for standard and dense I/O instances.

- Non-oversubscribed network infrastructure that provides maximum throughput and low latency

- High-performance NVMe block storage with multiattach capabilities and high input/output operations per second (IOPS) to meet the requirements of different types of application

- Security features, such as Cloud Guard and maximum-security zones, for a preconfigured best-practice security framework

IBM Spectrum Scale

IBM Spectrum Scale is a clustered file system that provides concurrent access to one or more file systems from multiple nodes. Many applications that have strict requirements for storage, bandwidth, and latency use IBM Spectrum Scale. The nodes can be SAN-attached, network-attached, a mixture of SAN-attached and network-attached, or in a shared-nothing cluster configuration. Spectrum Scale enables high-performance access to a common set of data to support a scale-out solution or to provide a high-availability platform.

GlusterFS

GlusterFS is a scalable, distributed network file system suitable for data-intensive tasks, such as image processing and media streaming. When used in HPC environments, GlusterFS delivers high-performance access to large data sets, especially immutable files.

Lustre

Lustre is an open source, parallel, distributed file system used for HPC clusters and environments. The name Lustre is a portmanteau of Linux and cluster. Using Lustre, you can build an HPC file server on OCI bare metal Compute with locally attached NVMe SSD or network-attached OCI Block Volumes. Lustre clusters scale for higher throughput, higher storage capacity, or both. The combined compute and storage cost is only a few cents per gigabyte per month.

BeeGFS

BeeGFS is a parallel cluster file system, developed with a strong focus on input-output performance and designed for easy installation and management. Using BeeGFS, you can build an HPC file server on OCI. BeeGFS transparently spreads user data across multiple servers. By increasing the number of servers and disks in the system, you can scale the performance and capacity of the file system from small clusters up to thousands of nodes.
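To illustrate what spreading user data across multiple servers means, the following sketch stripes a file across servers in fixed-size chunks, round-robin. This is a simplified illustration of striping in general, not BeeGFS’s actual placement algorithm. The function names, the chunk size, and the server count are all hypothetical.

```python
from typing import List, Tuple

# Each entry is (server_index, file_offset, length_in_bytes).
Chunk = Tuple[int, int, int]


def stripe_layout(file_size: int, chunk_size: int, num_servers: int) -> List[Chunk]:
    """Assign consecutive chunks of a file to servers in round-robin order."""
    if file_size < 0 or chunk_size <= 0 or num_servers <= 0:
        raise ValueError("file_size must be >= 0; chunk_size and num_servers must be > 0")
    layout: List[Chunk] = []
    offset = 0
    chunk_index = 0
    while offset < file_size:
        length = min(chunk_size, file_size - offset)  # last chunk may be short
        layout.append((chunk_index % num_servers, offset, length))
        offset += length
        chunk_index += 1
    return layout


def bytes_per_server(layout: List[Chunk], num_servers: int) -> List[int]:
    """Total bytes stored on each server for a given layout."""
    totals = [0] * num_servers
    for server, _offset, length in layout:
        totals[server] += length
    return totals


if __name__ == "__main__":
    # Hypothetical values: a 10-byte file, 4-byte chunks, 3 servers.
    layout = stripe_layout(file_size=10, chunk_size=4, num_servers=3)
    print(layout)
    print(bytes_per_server(layout, num_servers=3))
```

Because consecutive chunks land on different servers, a large sequential read can pull from several servers in parallel. That is why adding servers can raise both capacity and throughput.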

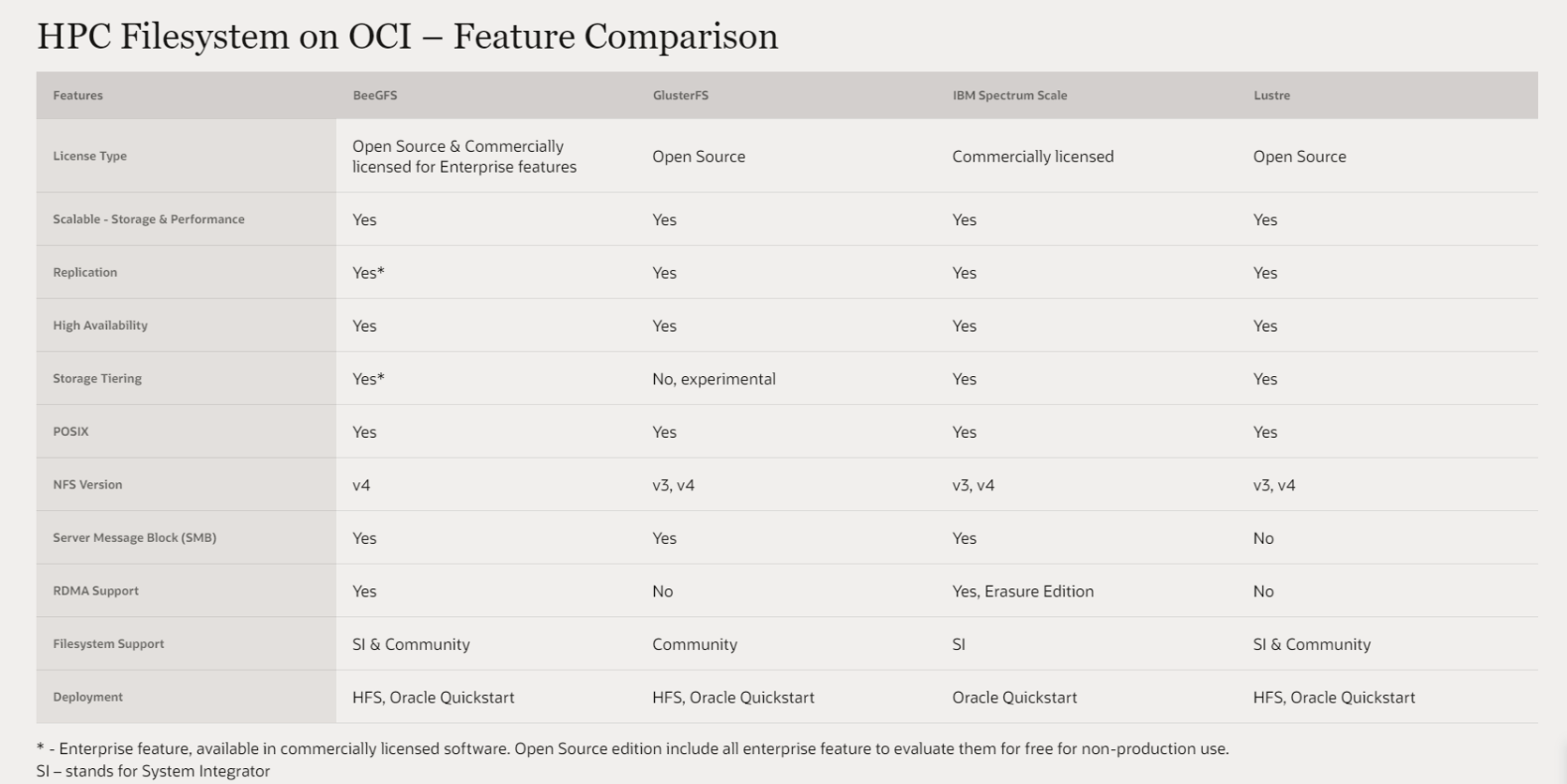

All these file systems have been deployed and thoroughly tested on OCI and have corresponding reference architectures. You can deploy them with Terraform at the click of a button. The following matrix compares features for these different file systems:

HPC file systems on OCI: Feature comparison

| Features | BeeGFS | GlusterFS | IBM Spectrum Scale | Lustre |
|---|---|---|---|---|
| License type | Open source and commercially licensed for enterprise features | Open source | Commercially licensed | Open source |
| Scalable storage and performance | Yes | Yes | Yes | Yes |
| Replication | Yes* | Yes | Yes | Yes |
| High availability | Yes | Yes | Yes | Yes |
| Storage tiering | Yes* | No, experimental | Yes | Yes |
| POSIX | Yes | Yes | Yes | Yes |
| NFS version | v4 | v3, v4 | v3, v4 | v3, v4 |
| Server message block (SMB) | Yes | Yes | Yes | No |
| RDMA support | Yes | No | Yes, Erasure edition | No |
| File system support | SI and community | Community | SI | SI and community |
| Deployment | HFS, Oracle Quickstart | HFS, Oracle Quickstart | Oracle Quickstart | HFS, Oracle Quickstart |
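As a small sketch of how you might use the matrix to shortlist a file system, the following code encodes three of its rows (NFS version, SMB, and RDMA support) and filters by the features that you need. The values are transcribed from the matrix as written. The dictionary layout and the `candidates` function are hypothetical helpers, not part of any Oracle tooling.

```python
from typing import Dict, List, Optional

# Transcribed from the feature comparison matrix above.
FEATURE_MATRIX: Dict[str, dict] = {
    "BeeGFS": {"nfs": {"v4"}, "smb": True, "rdma": True},
    "GlusterFS": {"nfs": {"v3", "v4"}, "smb": True, "rdma": False},
    # The matrix lists RDMA support for the Erasure edition only.
    "IBM Spectrum Scale": {"nfs": {"v3", "v4"}, "smb": True, "rdma": True},
    "Lustre": {"nfs": {"v3", "v4"}, "smb": False, "rdma": False},
}


def candidates(
    nfs_version: Optional[str] = None,
    smb: Optional[bool] = None,
    rdma: Optional[bool] = None,
) -> List[str]:
    """Return file systems matching every requirement that is not None."""
    matches: List[str] = []
    for name, features in FEATURE_MATRIX.items():
        if nfs_version is not None and nfs_version not in features["nfs"]:
            continue
        if smb is not None and features["smb"] != smb:
            continue
        if rdma is not None and features["rdma"] != rdma:
            continue
        matches.append(name)
    return matches


if __name__ == "__main__":
    print(candidates(smb=True, rdma=True))
    print(candidates(nfs_version="v3"))
```

A filter like this only narrows the list. Licensing, support model, and the qualifiers in the matrix, such as the Erasure edition note, still need a manual check.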

Reference

For more information on reference architectures, automation stacks, and more, see the following resources