Oracle Cloud Infrastructure’s Generation 2 cloud is moving the needle for high-performance file servers in the cloud yet again. Oracle Cloud customers can now use Cluster Networking to get nearly 140 GB per second of aggregate write throughput from a cloud-based file server.

Because BeeGFS on Oracle Cloud Infrastructure can feed the beast for high-performance computing (HPC), artificial intelligence, and machine learning workloads, we plan to include this file server in our HPC marketplace image.

HPC Bare Metal Compute Shape with 100-Gbps RDMA

We recently published the Deploy a BeeGFS File System on Oracle Cloud Infrastructure blog post to demonstrate the simplest way to deploy a BeeGFS file system on Oracle Cloud Infrastructure. That post covered deploying BeeGFS over the Transmission Control Protocol (TCP). Now, we’re sharing how much our HPC bare metal Compute shape (BM.HPC2.36), with its remote direct memory access (RDMA) cluster network, can improve the performance of your BeeGFS file system cluster.

BeeGFS running on Oracle HPC Compute shapes uses, and benefits greatly from, RDMA over Converged Ethernet (RoCE), a key feature of our HPC cluster network offering on Oracle Cloud Infrastructure. Moving BeeGFS server-client block communication off the traditional 25-GbE network and onto the fast, reliable 100-Gbps RDMA network significantly reduces latency and increases throughput. On Oracle Cloud Infrastructure, we provide Oracle Linux UEK operating system images with the RDMA OFED libraries preinstalled and configured for customers to use.
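Before enabling RDMA in BeeGFS, it can help to confirm that a node actually exposes RDMA devices. The following is a minimal sketch, assuming the standard Linux RDMA sysfs layout in which each RDMA-capable NIC (RoCE included) appears as an entry under /sys/class/infiniband. The function name list_rdma_devices is hypothetical. If the libibverbs-utils package is installed, ibv_devinfo reports more detail, such as port state.

```shell
#!/usr/bin/env bash
# Sketch: list RDMA-capable devices registered with the kernel.
# Assumption: RoCE NICs appear under /sys/class/infiniband (standard for the
# Linux RDMA subsystem). The directory is a parameter so it can be tested.
# Usage: list_rdma_devices        # prints one device name per line
list_rdma_devices() {
  local sysfs_dir="${1:-/sys/class/infiniband}"
  # No directory usually means no RDMA devices or the RDMA stack is not loaded.
  if [ ! -d "$sysfs_dir" ]; then
    echo "no RDMA devices found under $sysfs_dir" >&2
    return 1
  fi
  local found=0 dev
  for dev in "$sysfs_dir"/*; do
    [ -e "$dev" ] || continue   # glob matched nothing: directory is empty
    basename "$dev"
    found=1
  done
  # Succeed only if at least one device was listed.
  [ "$found" -eq 1 ]
}
```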

Simple RDMA Setup for BeeGFS

By turning on RDMA support in BeeGFS, which uses the ibverbs API for data transfer between the storage or metadata servers and the clients, customers can drive latency down and throughput up even further. To enable RDMA during the BeeGFS install process, use the following commands. You can find more details on the install process in the Install Commands Walkthrough.

1. Install the libbeegfs-ib package, which enables RDMA support based on the OFED ibverbs API.

Meta node:

yum install beegfs-meta libbeegfs-ib -y

Storage node:

yum install beegfs-storage libbeegfs-ib -y

2. Update beegfs-client-autobuild.conf so that the client module is built with RDMA (ibverbs) support and points to the OFED kernel include path.

Client node:

sed -i 's|^buildArgs=-j8|buildArgs=-j8 BEEGFS_OPENTK_IBVERBS=1 OFED_INCLUDE_PATH=/usr/src/ofa_kernel/default/include|g' /etc/beegfs/beegfs-client-autobuild.conf

On Oracle Linux with the UEK kernel, also run:

yum install -y elfutils-libelf-devel

sed -i -e '/ifeq.*compat-2.6.h/,+3 s/^/# /' /opt/beegfs/src/client/client_module_7/source/Makefile

3. Rebuild the BeeGFS client:

Client node:

/etc/init.d/beegfs-client rebuild
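Before rebuilding, it can be worth confirming that step 2 actually changed the client configuration. This is a minimal sketch, assuming the stock file contains a line that begins with buildArgs=-j8, as the sed command in step 2 expects. The hypothetical helper add_rdma_build_args applies the same substitution to whatever file you pass in (for example, a scratch copy) and succeeds only if the RDMA build arguments landed. It skips the edit when it has already been applied, because ^buildArgs=-j8 still matches after the first run and would otherwise append the arguments a second time.

```shell
#!/usr/bin/env bash
# Sketch: apply the step 2 substitution to a given autobuild config and
# verify it. Assumption: the file has a line starting with "buildArgs=-j8".
# Usage (preview on a scratch copy first):
#   cp /etc/beegfs/beegfs-client-autobuild.conf /tmp/autobuild.conf
#   add_rdma_build_args /tmp/autobuild.conf && grep '^buildArgs' /tmp/autobuild.conf
add_rdma_build_args() {
  local conf="$1"
  # Already applied: do nothing, so repeated runs do not duplicate arguments.
  if grep -q 'BEEGFS_OPENTK_IBVERBS=1' "$conf"; then
    return 0
  fi
  # Same substitution as step 2 (GNU sed -i edits the file in place).
  sed -i 's|^buildArgs=-j8|buildArgs=-j8 BEEGFS_OPENTK_IBVERBS=1 OFED_INCLUDE_PATH=/usr/src/ofa_kernel/default/include|' "$conf"
  # Succeed only if the substitution actually matched a line.
  grep -q '^buildArgs=-j8 BEEGFS_OPENTK_IBVERBS=1 ' "$conf"
}
```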

Benchmarking

Testbed

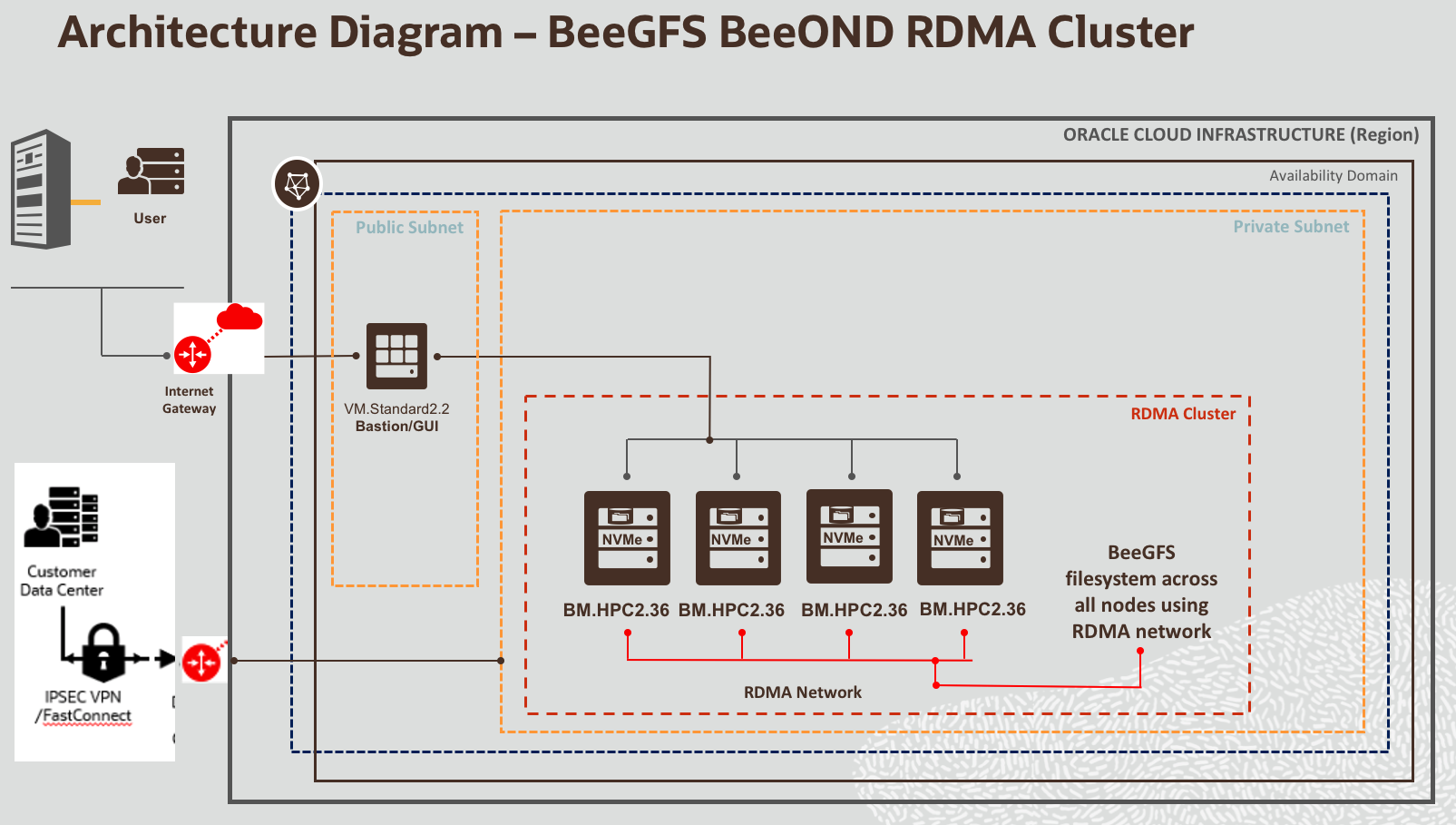

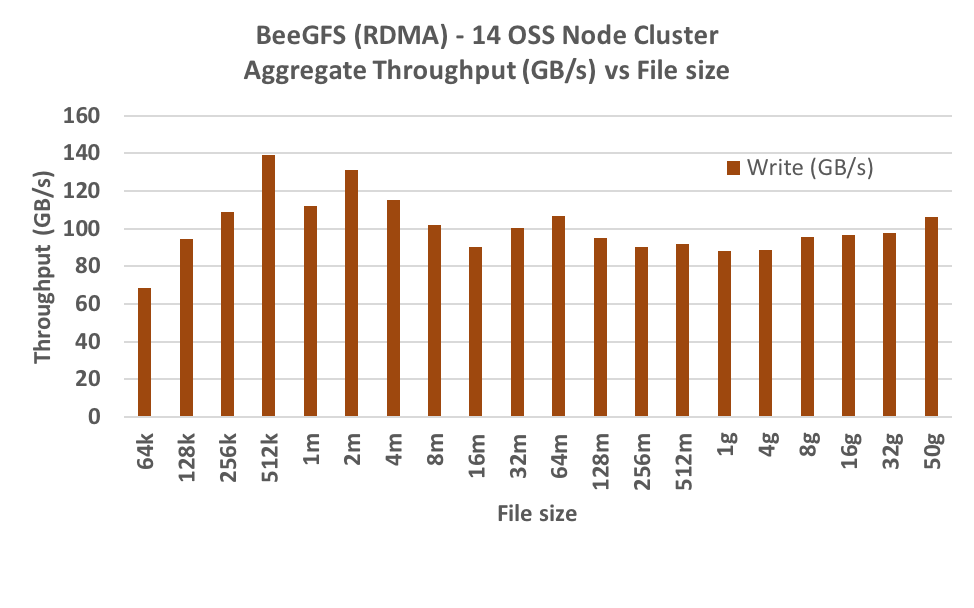

We created a converged BeeGFS cluster with 16 HPC bare metal nodes, each with 6.4 TB of local NVMe storage and 100-Gbps RDMA cluster networking enabled. In a converged BeeGFS cluster, the BeeGFS server and client services run on the same compute nodes. We use 14 of the nodes as file servers, which provides 89.6 TB (14 × 6.4 TB) of total storage.

BeeGFS Tuning

On client nodes, we set the chunk (stripe) size to 1 MB instead of the 512 KB default, and striped each file across four storage targets:

beegfs-ctl --setpattern --chunksize=1m --numtargets=4 /mnt/beegfs
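To illustrate what this pattern means for data placement: with BeeGFS RAID0 striping, a file is split into chunks of the configured size, and the chunks are written round-robin across the file's storage targets. The following is a minimal sketch of that mapping. The helper chunk_target_slot is hypothetical, and its result is a position in the file's target list, not a BeeGFS target ID. Running beegfs-ctl --getentryinfo on a file or directory reports the stripe pattern actually in effect.

```shell
#!/usr/bin/env bash
# Sketch: map a byte offset to the slot (0..numtargets-1) in a file's target
# list under round-robin RAID0 striping. Defaults mirror the setpattern call
# above: --chunksize=1m (1 MiB) and --numtargets=4.
# Usage: chunk_target_slot OFFSET [CHUNK_SIZE_BYTES] [NUM_TARGETS]
chunk_target_slot() {
  local offset="$1"
  local chunk_size="${2:-1048576}"   # 1m = 1 MiB
  local num_targets="${3:-4}"
  local chunk_index=$(( offset / chunk_size ))   # which chunk holds the byte
  echo $(( chunk_index % num_targets ))          # round-robin target slot
}
```

With the 2 MB IOzone record size used in the benchmark below, each aligned record spans two consecutive 1 MB chunks, and therefore two different targets.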

The following BeeGFS parameters require tuning for RDMA:

- tuneNumWorkers
- tuneBindToNumaZone
- connRDMABufSize
- connRDMABufNum
- connMaxInternodeNum

For more details, refer to How To Configure and Test BeeGFS with RDMA.
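Before and after changing these values, it helps to see what each service is currently configured with. This is a minimal sketch, assuming the BeeGFS configuration files in /etc/beegfs use the usual key = value format. The helper show_rdma_tuning is hypothetical, not every parameter is present in every service's file, and the sketch only reads the files; it does not suggest values.

```shell
#!/usr/bin/env bash
# Sketch: print the RDMA-related tuning parameters found in BeeGFS config
# files, prefixed with the file name. Commented-out lines are skipped.
# Usage:
#   show_rdma_tuning /etc/beegfs/beegfs-client.conf \
#     /etc/beegfs/beegfs-meta.conf /etc/beegfs/beegfs-storage.conf
show_rdma_tuning() {
  local params='tuneNumWorkers|tuneBindToNumaZone|connRDMABufSize|connRDMABufNum|connMaxInternodeNum'
  local conf
  for conf in "$@"; do
    [ -f "$conf" ] || continue   # skip files this node does not have
    # Match "key = value" with optional whitespace, then normalize the output
    # to "file: key = value".
    grep -E "^[[:space:]]*(${params})[[:space:]]*=" "$conf" |
      sed -E "s|^[[:space:]]*|$(basename "$conf"): |; s|[[:space:]]*=[[:space:]]*| = |"
  done
}
```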

IOzone Configuration

For I/O throughput benchmarking, we used IOzone, an open source file system benchmarking utility, and ran it concurrently on all 16 client nodes. We configured a write workload with a 2 MB record (transfer) size (-r2m), fsync included in the timing (-e), stonewalling disabled (-x), and 32 threads (processes) per client node (-t32). We tested I/O throughput across a range of file sizes, using a total of 512 threads (16 client nodes × 32 threads).
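The IOzone settings described above can be sketched as a per-client command line. This is a hedged reconstruction, not the exact command behind the published results: -r2m, -e, -x, and -t32 come from the text, while -i 0 (the write test), -s (the per-thread file size, since the sweep sizes are not listed), and -F (one file per thread under a hypothetical /mnt/beegfs/iozone/HOSTNAME directory) are assumptions added to make the command complete. The helpers build_iozone_cmd and total_threads are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: build the per-client IOzone write command.
# From the text: -r2m (2 MB records), -e (include fsync in timing),
#   -x (stonewalling off), -t32 (32 threads per client).
# Assumptions: -i 0 selects the write test, -s is the per-thread file size
#   (one point of the size sweep), -F names one file per thread.
# Usage (run on every client at the same time; 4g is only a placeholder):
#   mkdir -p "/mnt/beegfs/iozone/$(hostname -s)" && $(build_iozone_cmd 4g)
build_iozone_cmd() {
  local file_size="$1"
  local host="${2:-$(hostname -s)}"
  local threads="${3:-32}"
  local dir="/mnt/beegfs/iozone/${host}"   # hypothetical directory layout
  local cmd="iozone -i 0 -r2m -e -x -t${threads} -s ${file_size} -F"
  local i
  for (( i = 0; i < threads; i++ )); do
    cmd+=" ${dir}/f${i}"                   # -F needs one name per thread
  done
  echo "$cmd"
}

# Aggregate thread count: clients x threads per client (16 x 32 = 512).
total_threads() { echo $(( ${1:-16} * ${2:-32} )); }
```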

Results

As you can see in the following graph, we achieved up to 140 GB/s of aggregate write throughput from a file system with 14 storage server nodes.
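A rough reading of that result follows. It assumes the write load is spread evenly over the 14 storage nodes and treats GB as 10^9 bytes. Both are simplifications, and in the converged setup some writes land on a target on the writing node itself.

```latex
\frac{140\ \text{GB/s}}{14\ \text{storage nodes}} = 10\ \text{GB/s per node},
\qquad
\frac{100\ \text{Gb/s}}{8\ \text{bits/byte}} = 12.5\ \text{GB/s},
\qquad
\frac{10\ \text{GB/s}}{12.5\ \text{GB/s}} = 0.8
```

Under those assumptions, each storage node sustains roughly 80% of the nominal line rate of its 100-Gbps RDMA link.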

Deployment

We automated the deployment of a converged BeeGFS On Demand (BeeOND) cluster using RDMA on Oracle Cloud Infrastructure with a Terraform template. Soon, we will include provisioning of a BeeOND cluster in our HPC marketplace image at no additional charge.