Oracle Cloud Infrastructure (OCI) is a public cloud designed to support mission-critical applications that need secure, performant, and scalable infrastructure. OCI offers cluster networks, which deliver demonstrable performance gains through unique engineering approaches to remote direct memory access (RDMA) networking. Cluster networking is the foundation for core OCI services that rely on RDMA, such as Oracle Autonomous Database and Oracle Exadata Cloud Service, and it enables high-performance computing (HPC) and graphics processing unit (GPU)-based machine learning (ML) workloads.

RDMA

RDMA provides direct memory access between Compute hosts spread across a network. It eliminates the CPU overhead associated with traditional network communication and delivers very low network latency. Most applications that use RDMA are sensitive to network latency and jitter. RDMA communication is also ultra-sensitive to packet loss and typically requires a lossless network for good performance.

RDMA's requirements pose challenges for multitenant networks. The most common RDMA implementations use InfiniBand or proprietary protocols. OCI instead uses battle-tested, widely deployed Ethernet and Internet Protocol (IP) technologies in the form of RDMA over Converged Ethernet (RoCE), delivered through NVIDIA ConnectX SmartNICs. This approach required solving the scaling and performance issues that have traditionally been associated with RoCE.

Unique properties and key innovations

OCI cluster networks rely on several innovations and the following key properties of our underlying network:

-

A purpose-built dedicated RDMA network: Instead of sharing the RDMA network with non-RDMA traffic, OCI invested in a high-performance network dedicated for RDMA traffic. Having a common shared network involved performance compromises for the RDMA traffic, such as sharing queues, buffers, and bandwidth with non-RDMA traffic. Using a dedicated RDMA network has higher networking costs for OCI but delivers the best performance for RDMA-dependent workloads.

-

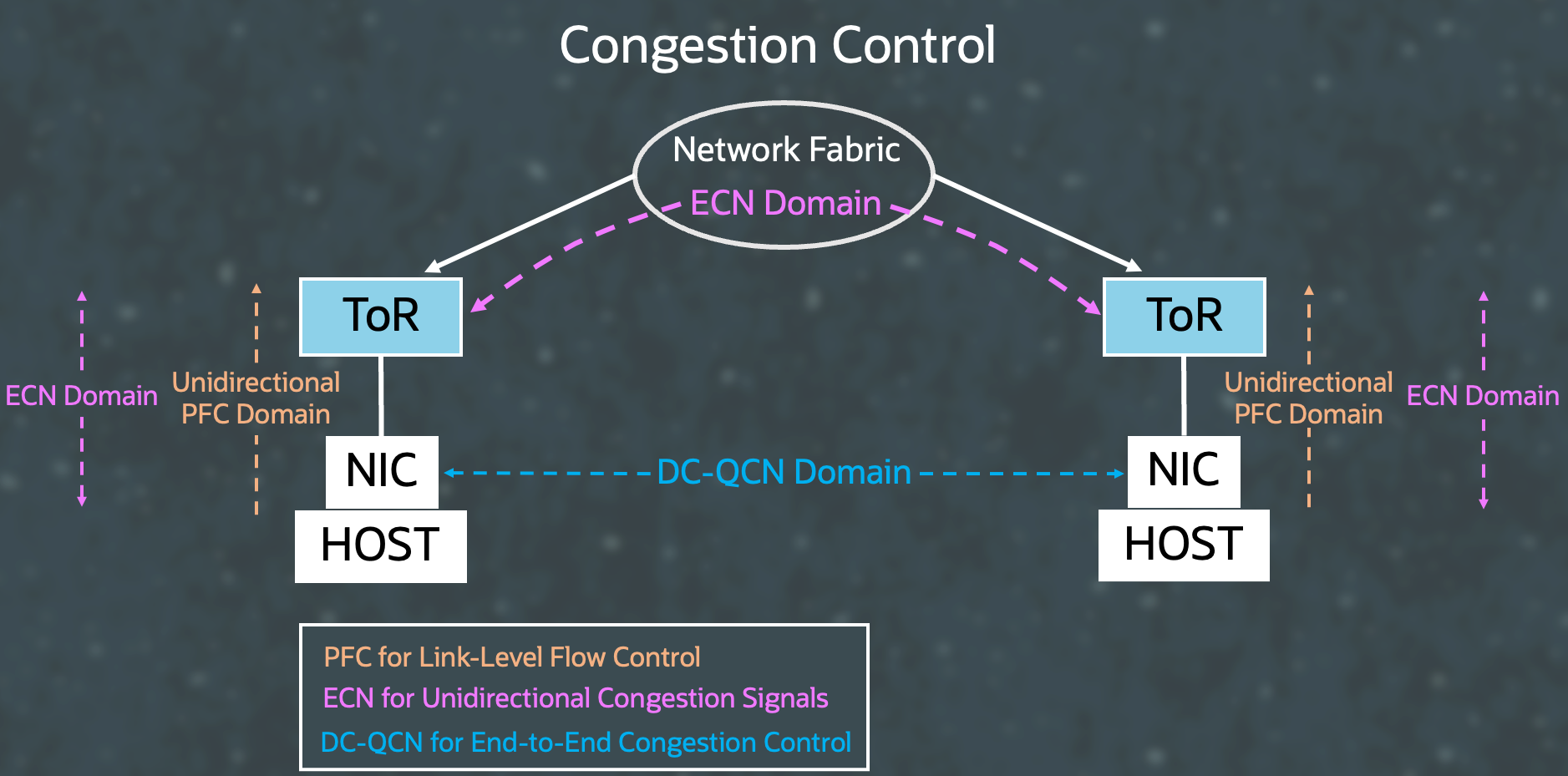

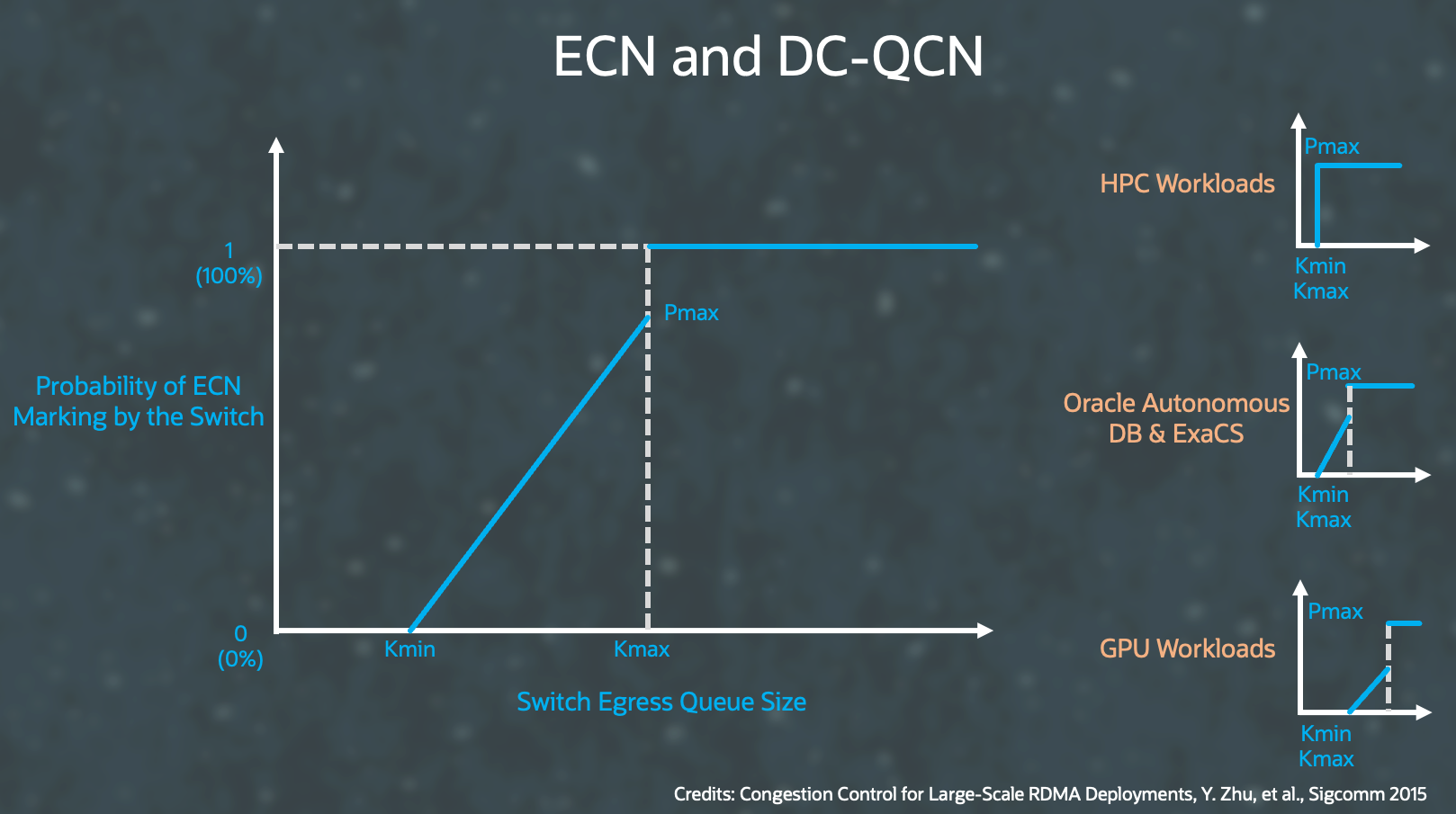

Eliminate network blocking issues: RoCE traditionally uses priority flow control (PFC) to create a lossless network, but using PFC can lead to network blocking issues in certain kinds of congestion. OCI cluster networking restricts PFC to the edge of the network and eliminates such network blocking issues. Instead, OCI relies on explicit congestion notification (ECN) and data center quantized congestion notification (DC-QCN) for RDMA congestion control.

-

Multiple queues and buffer tuning for tailored experience: OCI cluster networks support multiple different classes of RDMA workloads, each aiming for a different tradeoff of latency versus throughput. OCI implemented multiple RDMA queues, each tailored to provide a differentiated performance experience
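To illustrate how ECN-based congestion control replaces PFC for most congestion events, here is a minimal sketch of the two halves of DC-QCN as described in its published design: switches mark packets with a RED-style probability as a queue grows, and the sending NIC cuts and then recovers its rate when the receiver reflects those marks back as congestion notification packets (CNPs). This is not OCI's implementation. The parameter values (100 Gb/s line rate, gain g = 1/256, 40 Mb/s additive step, five fast-recovery steps) are illustrative assumptions, and the hyper-increase stage and timer details are omitted.

```python
from dataclasses import dataclass


def ecn_mark_probability(queue_bytes: float, k_min: float, k_max: float, p_max: float) -> float:
    """RED-style ECN marking curve at a switch queue (the DC-QCN congestion point).

    Below k_min nothing is marked; between k_min and k_max the marking
    probability rises linearly up to p_max; above k_max every packet is marked.
    """
    if queue_bytes <= k_min:
        return 0.0
    if queue_bytes > k_max:
        return 1.0
    return p_max * (queue_bytes - k_min) / (k_max - k_min)


@dataclass
class DcqcnSender:
    """Simplified DC-QCN reaction point (the sending NIC) for one flow.

    Illustrative parameters only; hyper-increase and timer details are omitted.
    """
    line_rate_gbps: float = 100.0   # assumed NIC line rate
    g: float = 1 / 256              # gain for the alpha moving average
    rate_ai_gbps: float = 0.04      # additive-increase step (40 Mb/s)
    fast_recovery_steps: int = 5    # increase events before additive increase
    alpha: float = 1.0              # running estimate of congestion severity
    increase_stage: int = 0

    def __post_init__(self) -> None:
        self.current_gbps = self.line_rate_gbps  # Rc: rate currently in use
        self.target_gbps = self.line_rate_gbps   # Rt: rate to recover toward

    def on_cnp(self) -> None:
        """A congestion notification packet (CNP) arrived from the receiver."""
        self.target_gbps = self.current_gbps
        self.current_gbps *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g
        self.increase_stage = 0

    def on_alpha_timer(self) -> None:
        """No CNP arrived during the last update window: let alpha decay."""
        self.alpha *= 1 - self.g

    def on_increase_event(self) -> None:
        """The rate-increase timer or byte counter fired."""
        if self.increase_stage >= self.fast_recovery_steps:
            # Additive increase: raise the target, capped at line rate.
            self.target_gbps = min(self.target_gbps + self.rate_ai_gbps, self.line_rate_gbps)
        # Fast recovery (and the second half of additive increase):
        # move halfway from the current rate toward the target.
        self.current_gbps = (self.target_gbps + self.current_gbps) / 2
        self.increase_stage += 1


sender = DcqcnSender()
sender.on_cnp()
print(sender.current_gbps)  # 50.0: the first CNP halves the rate because alpha starts at 1
for _ in range(5):
    sender.on_increase_event()
print(sender.current_gbps)  # 98.4375 after five fast-recovery steps
```

The design point the sketch shows is that the sender slows down in proportion to how persistent congestion has been (alpha), so the switch rarely needs to fall back on PFC pauses, which is what allows PFC to be confined to the network edge.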
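The latency-versus-throughput tradeoff behind per-queue buffer tuning can be made concrete with simple arithmetic. A queue that may build a deeper backlog before congestion control reacts absorbs larger bursts, which helps throughput, but every packet behind that backlog waits for it to drain. The sketch below converts hypothetical per-queue marking thresholds into drain time at an assumed 100 Gb/s port. The class names, thresholds, and port speed are illustrative assumptions, not OCI's configuration.

```python
# Hypothetical per-queue backlog thresholds in bytes. The class names and
# values are illustrative assumptions, not OCI's configuration.
QUEUE_THRESHOLDS_BYTES = {
    "latency_sensitive": 20_000,
    "balanced": 100_000,
    "throughput_oriented": 400_000,
}

LINK_GBPS = 100.0  # assumed port speed


def queueing_delay_us(queue_bytes: float, link_gbps: float) -> float:
    """Time for a port to drain queue_bytes at link_gbps, in microseconds."""
    bits = queue_bytes * 8
    return bits / (link_gbps * 1e3)  # link_gbps * 1e3 = bits per microsecond


for name, threshold in QUEUE_THRESHOLDS_BYTES.items():
    delay = queueing_delay_us(threshold, LINK_GBPS)
    print(f"{name:20s} {threshold:>8,d} B -> {delay:5.1f} us of queueing delay")
```

Under these assumptions, a 20,000-byte backlog alone adds 1.6 microseconds, close to the single-rack latency figure quoted in this post, while a 400,000-byte backlog adds 32 microseconds. This is why a latency-sensitive class benefits from shallow thresholds and a throughput-oriented class can tolerate deeper ones.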

Conclusion

OCI Cluster Network provides ultra-high performance¹ for the most demanding RDMA workloads. We designed our Cluster Network to support multiple types of RDMA workloads, with a performance experience tailored to the requirements of each. In the accompanying video blog, we discuss in detail how we did so.

The choices we made in developing Oracle Cloud Infrastructure are markedly different. The most demanding workloads and enterprise customers have pushed us to think differently about designing our cloud platform. More engineering deep dives like this one are available in the First Principles series, hosted by Pradeep Vincent and other experienced engineers at Oracle.

¹ OCI Cluster Network achieves latency of ≤2 microseconds for single-rack clusters and ≤6.5 microseconds for multi-rack clusters.

For more information, see the following resources: