NVIDIA GPUs on Oracle Cloud Infrastructure (OCI) provide faster AI training and inferencing for customers. As a result, OCI has seen explosive growth in infrastructure usage for training large-scale AI models.

At GTC today, NVIDIA announced the open beta of the NVIDIA NeMo Megatron large language model (LLM) framework, which customers can choose to run on accelerated cloud instances from OCI. Customers are using LLMs to build AI applications for content generation, text summarization, chatbots, code development and more. Next year, we’ll add new instances with NVIDIA H100 GPUs to further accelerate these massive models.

This week at GTC provides a unique opportunity for tech innovators and business professionals to learn more about graphics processing units (GPUs), natural language processing (NLP) and LLMs. OCI experts are presenting the following sessions at GTC, which runs online September 19–22, 2022:

- Building Large Language Models at Scale in the Cloud [A41328]
- Insights from Unstructured Information with AI Services (Presented by Oracle Cloud) [A41397]

Why natural language processing matters

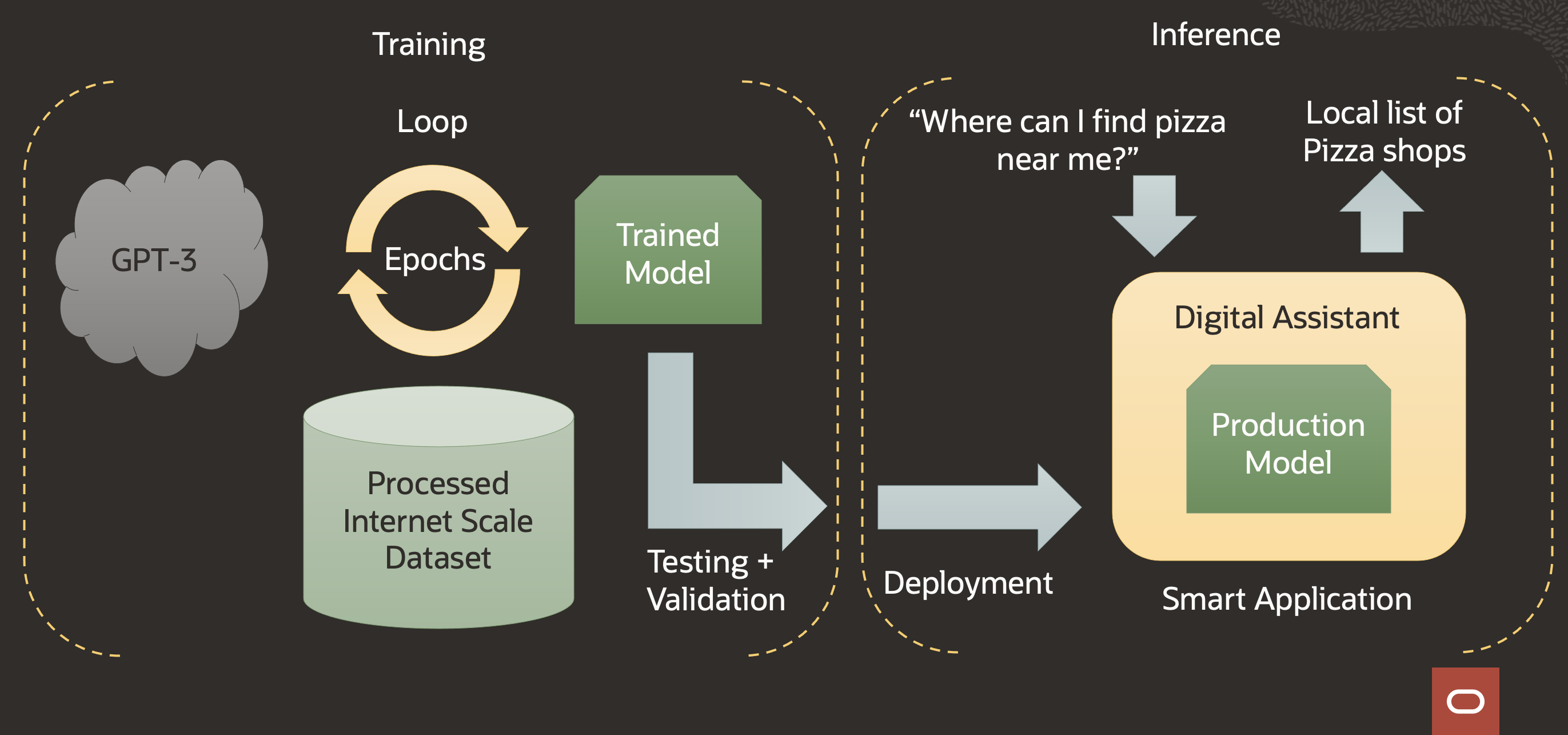

NLP, enabled by large language models, enhances how we interact with technology by giving computers a better understanding of text and audio. These models, such as BERT, GPT-3, and BLOOM, range from hundreds of millions to hundreds of billions of parameters, which lets them understand and generate human-like text. Use cases include translation tools, autocorrect, autocomplete, chatbots, speech recognition, and automatic summarization of text like papers, blogs, and reports.
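To make that scale concrete, the short Python sketch below estimates how much memory a model's weights alone occupy and how many GPUs are needed just to hold them. It assumes 16-bit weights (2 bytes per parameter), uses GPT-3's published 175 billion parameters and the 80 GB A100 variant as example inputs, and ignores gradients, optimizer state, and activations, so real training needs considerably more memory than this lower bound.

```python
# Rough lower bound on the memory needed just to hold a model's weights.
# Assumption: weights stored in 16-bit precision (2 bytes per parameter);
# gradients, optimizer state, and activations are ignored.
import math

BYTES_PER_PARAM_FP16 = 2
A100_MEMORY_GB = 80  # the larger A100 variant; a 40 GB variant also exists


def weight_memory_gb(num_params: float,
                     bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float,
                         gpu_memory_gb: float = A100_MEMORY_GB) -> int:
    """Smallest number of GPUs whose combined memory can hold the weights alone."""
    return math.ceil(weight_memory_gb(num_params) / gpu_memory_gb)


if __name__ == "__main__":
    gpt3_params = 175e9  # GPT-3's published parameter count
    print(f"{weight_memory_gb(gpt3_params):.0f} GB of weights")
    print(f"at least {min_gpus_for_weights(gpt3_params)} x 80 GB GPUs")
```

Under these assumptions, GPT-3's weights come to about 350 GB, more than four 80 GB GPUs can hold, which is one reason training is spread across multi-GPU nodes.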

Why OCI for model training?

Training one of these NLP models requires massive data sets that have been cleansed, labeled, tagged, and classified. Model training needs access to powerful processors, fast and dense local storage, high-throughput ultra-low-latency RDMA cluster networks, and tools to automate and run jobs seamlessly.
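The cleansing step can be illustrated with a minimal Python sketch that normalizes text, drops short fragments, and removes exact duplicates. The word-count cutoff, the hashing approach, and the `clean_corpus` helper are illustrative assumptions, not a description of any OCI or NVIDIA NeMo data pipeline; labeling, tagging, and classification would follow as separate stages.

```python
# Minimal text-cleansing sketch: normalize, filter, and deduplicate documents.
# The thresholds and rules here are illustrative assumptions, not a
# production recipe.
import hashlib
import re
import unicodedata
from typing import Iterable, List

MIN_WORDS = 5  # hypothetical cutoff for discarding fragments


def normalize(text: str) -> str:
    """Apply Unicode NFKC normalization and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def clean_corpus(docs: Iterable[str], min_words: int = MIN_WORDS) -> List[str]:
    """Return normalized documents with short fragments and exact duplicates removed."""
    seen = set()
    cleaned = []
    for doc in docs:
        doc = normalize(doc)
        if len(doc.split()) < min_words:
            continue  # too short to be useful training text
        digest = hashlib.sha256(doc.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # case-insensitive exact duplicate of an earlier document
        seen.add(digest)
        cleaned.append(doc)
    return cleaned


if __name__ == "__main__":
    raw = [
        "Large  language models\nlearn from text.  Lots of it.",
        "large language models learn from text. lots of it.",
        "Too short.",
        "Clean, deduplicated text is then labeled, tagged, and classified.",
    ]
    for doc in clean_corpus(raw):
        print(doc)
```

Exact hashing only catches identical documents; large-scale pipelines commonly add near-duplicate detection and quality filtering on top.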

OCI offers the best-performing cloud hardware and networking. To sustain the bandwidth that LLM training requires and to reduce operational overhead, OCI interconnects NVIDIA A100 Tensor Core GPU shapes with high-throughput, ultra-low-latency RDMA cluster networks. Each NVIDIA A100 node has eight NVIDIA ConnectX SmartNICs, each with two 100 Gbps ports, connected through our high-performance cluster network blocks, for a total of 1,600 Gbps (8 × 2 × 100 Gbps) of bandwidth between nodes.
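The 1,600 Gbps figure follows from simple arithmetic: eight NICs per node, two 100 Gbps ports per NIC. The Python sketch below reproduces it and converts it into an idealized transfer time for a hypothetical 350 GB payload, roughly the size of a 175-billion-parameter model's weights in 16-bit precision. The helper names are hypothetical, and real throughput will be lower once protocol overhead and the collective-communication pattern are taken into account.

```python
# Back-of-the-envelope check of per-node cluster network bandwidth.
# Assumption: 8 NICs per node, 2 ports per NIC, 100 Gbps per port.
NICS_PER_NODE = 8
PORTS_PER_NIC = 2
GBPS_PER_PORT = 100


def node_bandwidth_gbps(nics: int = NICS_PER_NODE,
                        ports: int = PORTS_PER_NIC,
                        gbps_per_port: int = GBPS_PER_PORT) -> int:
    """Aggregate line rate of all NIC ports on one node, in gigabits per second."""
    return nics * ports * gbps_per_port


def gbps_to_gbytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


def transfer_seconds(payload_gb: float, bandwidth_gbps: float) -> float:
    """Idealized time to move payload_gb gigabytes at full line rate, ignoring overhead."""
    return payload_gb * 8 / bandwidth_gbps


if __name__ == "__main__":
    bw = node_bandwidth_gbps()
    print(f"{bw} Gbps per node = {gbps_to_gbytes_per_s(bw):.0f} GB/s")
    print(f"350 GB payload: {transfer_seconds(350, bw):.2f} s at line rate")
```

At line rate, 1,600 Gbps equals 200 GB/s, so the idealized transfer of 350 GB takes 1.75 seconds.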

These innovations enable customers such as Adept and Aleph Alpha to train their models faster and more economically than before.

“AI continues to rapidly grow in scope but until now, AI models could only read and write text and images; they couldn’t actually execute actions such as designing 3D parts or fetching and analyzing data. With the scalability and computing power of OCI and NVIDIA technology, we are training a neural network to use every software application, website, and API in existence—building on the capabilities that software makers have already created. The universal AI teammate gives employees an ‘extra set of hands’ to create as fast as they think and reduce time spent on manual tasks. This in turn will help their organizations become more productive, and nimble in their decision making.” —David Luan, chief executive officer, Adept

“This is a new generation model, and in order to train those you need a new generation of hardware—the old GPU clusters aren’t sufficient anymore. On the industry side we have raised a lot of capital and partnered with Oracle. We’re building a way to translate an impressive playground task into an enterprise application that creates value.” —Jonas Andrulis, Founder & CEO, Aleph Alpha

AI Services

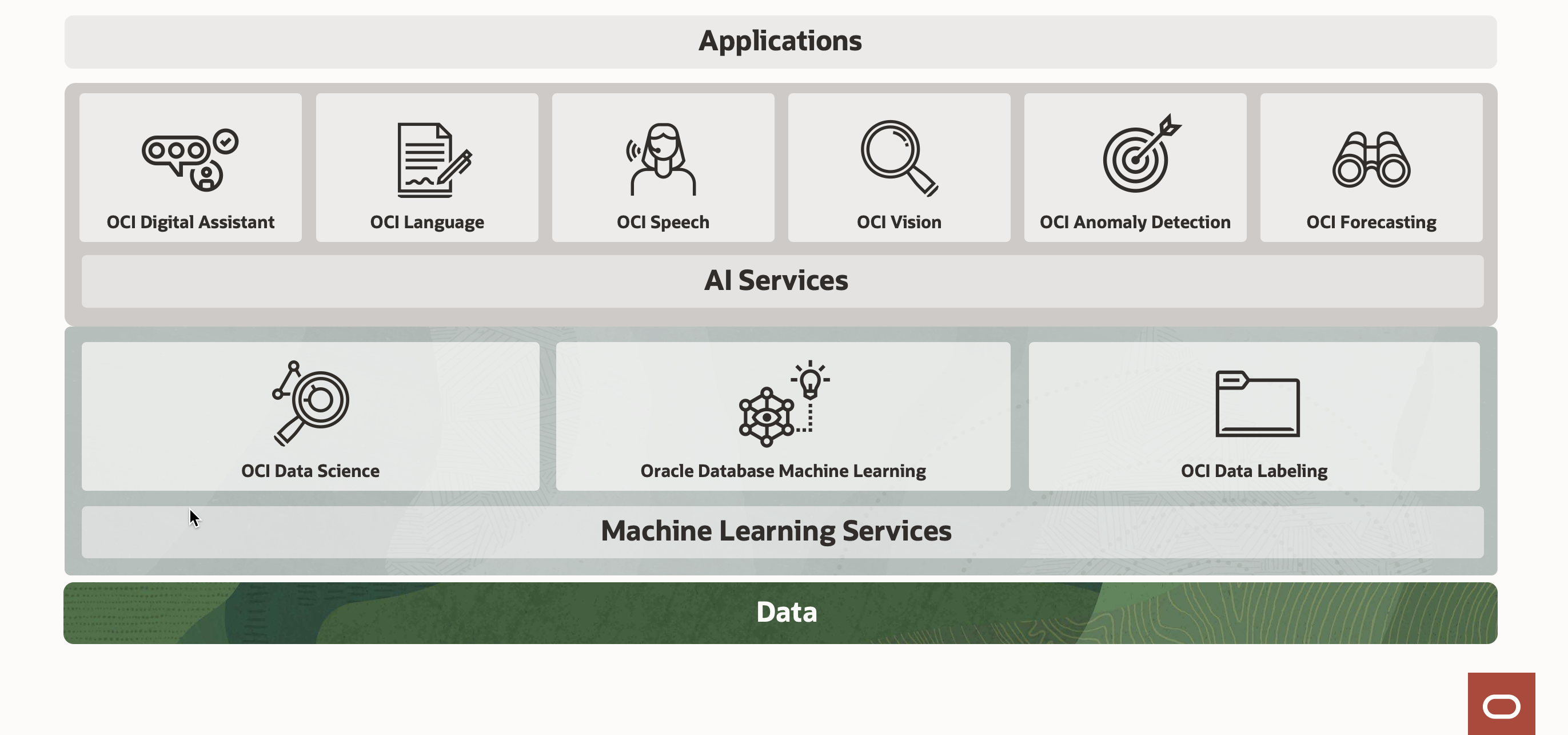

Oracle AI includes several products: OCI Speech, OCI Language, OCI Vision, OCI Anomaly Detection, and OCI Digital Assistant. The services most relevant to the second GTC session, Insights from Unstructured Information with AI Services, are OCI Speech and OCI Language. OCI Speech applies automatic speech recognition (ASR) technology to transform audio-based content into text. OCI Language performs sophisticated text analysis at scale.

The combination of OCI Speech, OCI Language, Oracle Integration services and Oracle Analytics provides a workflow to extract information from recorded calls and run analytics to discover insights at scale. This analysis can be particularly useful at call centers and other departments that handle a high volume of audio conversations.
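The workflow can be sketched in Python as three stages: transcribe each recording, analyze each transcript, then aggregate the results. Every function here is a hypothetical stand-in: `transcribe` plays the role of OCI Speech, `analyze_text` the role of OCI Language (using a toy keyword lexicon instead of a real model), `process_calls` the orchestration that Oracle Integration would handle, and `summarize` the reporting layer of Oracle Analytics. None of them are OCI SDK calls.

```python
# Hypothetical sketch of a call-analytics workflow: transcribe recorded
# calls, analyze each transcript, then aggregate results for reporting.
# transcribe() and analyze_text() are stand-ins for OCI Speech and OCI
# Language; they are NOT real OCI SDK calls.
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List

NEGATIVE_WORDS = {"cancel", "refund", "broken", "angry"}  # toy lexicon (assumption)


@dataclass
class CallInsight:
    call_id: str
    sentiment: str
    keywords: List[str]


def transcribe(audio: bytes) -> str:
    """Stand-in for speech-to-text; here the 'audio' is already UTF-8 text."""
    return audio.decode("utf-8")


def analyze_text(text: str) -> Dict[str, object]:
    """Stand-in for text analysis: flags negative keywords from a toy lexicon."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    hits = [w for w in words if w in NEGATIVE_WORDS]
    return {"sentiment": "negative" if hits else "neutral", "keywords": hits}


def process_calls(recordings: Dict[str, bytes]) -> List[CallInsight]:
    """Orchestration step: run every recording through both stages in order."""
    insights = []
    for call_id, audio in recordings.items():
        result = analyze_text(transcribe(audio))
        insights.append(CallInsight(call_id, result["sentiment"], result["keywords"]))
    return insights


def summarize(insights: List[CallInsight]) -> Counter:
    """Aggregation step: count calls per sentiment label."""
    return Counter(i.sentiment for i in insights)


if __name__ == "__main__":
    calls = {
        "call-001": b"I want to cancel my order, the device arrived broken.",
        "call-002": b"Thanks, the setup instructions were clear.",
    }
    insights = process_calls(calls)
    for insight in insights:
        print(insight)
    print(summarize(insights))
```

Keeping each stage behind its own function mirrors the service boundaries, so a stub could be swapped for a real service client without changing the rest of the pipeline.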

Join us at NVIDIA GTC

OCI offers Compute instances equipped with NVIDIA V100 and A100 Tensor Core GPUs. As an extension of our ongoing collaboration with NVIDIA, OCI will also support NVIDIA H100 Tensor Core GPUs, which can be used for trillion-parameter language models, and NVIDIA A10 Tensor Core GPUs, to enable end-to-end scaling of GPU workloads. When run on OCI bare metal instances, these GPUs enable large-scale training and inferencing of AI and ML models for enterprises, research, academia, and innovative independent software vendors.

Join us for free at NVIDIA GTC. Register for the conference to access these and other sessions, workshops, and demos. For more information about Oracle Cloud Infrastructure’s capabilities, explore the following resources: