Get The Best of Both Worlds Using OCI Logging Analytics and Open Source Collector Fluentd

Fluentd is an open-source data collector that provides a unified logging layer between data sources and backend systems. It is flexible and lightweight, has hundreds of plugins for collecting, manipulating, and uploading data, and has a large user base. Fluentd is often used to collect logs from custom environments that have strict resource constraints and infrequent configuration changes. In some use cases it is beneficial to ingest logs through Fluentd, and we used those use cases as design principles when building Fluentd support for OCI Logging Analytics. Our goal was to combine the flexibility of open-source software (OSS) with the scalability of a cloud service.

OCI Logging Analytics is a fully managed cloud service for ingesting, indexing, enriching, analyzing, and visualizing log data from many sources, including Fluentd, so that you can troubleshoot and monitor any application and infrastructure, whether on-premises or in the cloud. It lets you keep logs available for active analysis for as long as you want, or archive part of them for later analysis. Archived data can be recalled for active analysis within minutes. If you’re already an Oracle Cloud user, the Logging Analytics service is already integrated; you just enable it in your tenancy.

In this blog post, I’ll describe the steps to configure a Fluentd-based log pipeline that sends logs to the Logging Analytics service using the updated Fluentd output plugin. I’ll use /var/log/messages (syslog format) as an example.

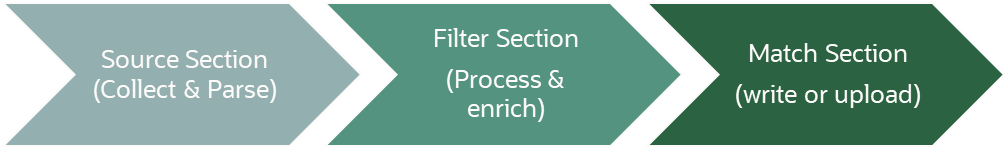

Fluentd Log Pipelines

Without diving too deep into the technical details of Fluentd log pipelines, I’ll cover a few key concepts to set the context for the next steps. If you are already familiar with Fluentd concepts you can skip this section.

Fluentd log pipelines consist of different types of plugins stitched together to perform specific, customizable data-processing operations. Some of these plugins are available in the base package, while others must be installed separately. Generally speaking, a Fluentd pipeline consists of three stages (input, filter, and output), each set up by configuring the corresponding Fluentd plugins.
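To make the three stages concrete, here is a minimal Python sketch (not Fluentd code) that models a pipeline as an input that emits tagged records, a filter that changes only the records whose tag matches, and an output that collects matching records. The helper names are hypothetical, and tag matching is simplified to exact equality, whereas Fluentd also accepts patterns such as `oci.*` in `<filter>` and `<match>`.

```python
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

Record = Dict[str, object]
Event = Tuple[str, Record]  # (tag, record)


def input_stage(lines: Iterable[str], tag: str) -> Iterator[Event]:
    """Input: wrap each raw line in a record and attach a tag (hypothetical)."""
    for line in lines:
        yield tag, {"message": line}


def filter_stage(events: Iterable[Event], tag: str,
                 transform: Callable[[Record], Record]) -> Iterator[Event]:
    """Filter: apply `transform` only to events whose tag matches exactly."""
    for event_tag, record in events:
        if event_tag == tag:
            record = transform(record)
        yield event_tag, record


def output_stage(events: Iterable[Event], tag: str) -> List[Record]:
    """Output: collect matching records; a real output would upload them."""
    return [record for event_tag, record in events if event_tag == tag]


if __name__ == "__main__":
    events = input_stage(["first line", "second line"], "oci.syslog")
    events = filter_stage(events, "oci.syslog",
                          lambda r: {**r, "logSourceName": "Linux Syslog Logs"})
    for rec in output_stage(events, "oci.syslog"):
        print(rec)
```

Because each stage only consumes the previous stage’s output, a filter can be added or swapped without touching the input or the output.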

The Oracle Logging Analytics output plugin is a Fluentd output plugin that converts log records into the format the service requires, establishes a secure connection to the cloud service, and uploads the encrypted logs to the service.

Let’s install and configure the output plugin.

Installing & Configuring Fluentd Logging Analytics Output Plugin

In this section, we’ll be installing the output plugin and configuring it to send logs from /var/log/messages to the Logging Analytics service. Before we begin, there are a few prerequisites.

Now, with the prerequisites out of the way, download the Logging Analytics plugin to the Linux server from here, unzip the file, and install it with the following command:

td-agent-gem install fluent-plugin-oci-logging-analytics-1.0.0.gem

Next, we’ll configure Fluentd to tail the /var/log/messages file continuously and upload any additions to Logging Analytics. The Fluentd Logging Analytics output plugin can work with any input source; in this configuration, I’ll use the tail input plugin, which is available by default. The Fluentd configuration file is located at /etc/td-agent/td-agent.conf. Refer to the Fluentd installation steps if you can’t find it.

Below is the full configuration:

# Source Section
<source>
  @type tail
  path /var/log/messages
  pos_file /var/log/messages.log.pos
  path_key tailed_path
  tag oci.syslog
  <parse>
    @type none
  </parse>
</source>

# Filter Section
<filter oci.syslog>
  @type record_transformer
  enable_ruby true
  <record>
    entityId "<ocid1.loganalyticsentity.oc1.....>"
    logGroupId "<ocid1.loganalyticsloggroup.....>"
    entityType "Host (Linux)"
    logSourceName "Linux Syslog Logs"
    logPath ${record['tailed_path']}
    # The none parser stores each raw line under the "message" key by default
    message ${record["message"]}
  </record>
</filter>

# Output plugin section
<match oci.syslog>
  @type oci-logging-analytics
  namespace <'abcdefgh'>
  config_file_location </.oci/config>
  profile_name DEFAULT
  <buffer>
    @type file
    path /var/log
  </buffer>
</match>

Let’s drill down into this configuration. In the source section, we configure Fluentd to use the tail input plugin to follow /var/log/messages and to tag each record with oci.syslog so that later sections of the configuration can identify it.
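The pos_file setting in the source section is what lets tail resume after a restart instead of re-reading or skipping data. The following minimal Python sketch illustrates the idea: remember a byte offset in a side file, read only complete lines appended since then, and save the new offset. It is a simplification: Fluentd’s in_tail also records the file’s inode in its pos file to detect log rotation, and `read_new_lines` is a hypothetical helper, not part of Fluentd.

```python
import os
import tempfile
from typing import List


def read_new_lines(path: str, pos_path: str) -> List[str]:
    """Return complete lines appended to `path` since the offset saved in
    `pos_path`, then persist the new offset (hypothetical helper)."""
    offset = 0
    if os.path.exists(pos_path):
        with open(pos_path) as f:
            text = f.read().strip()
            offset = int(text) if text else 0

    # If the file shrank (for example, it was truncated), start over.
    if os.path.getsize(path) < offset:
        offset = 0

    lines = []
    with open(path, "rb") as f:
        f.seek(offset)
        while True:
            line = f.readline()
            # Stop at EOF or at a partial line without a trailing newline;
            # the partial line is picked up again on the next call.
            if not line or not line.endswith(b"\n"):
                break
            lines.append(line.rstrip(b"\n").decode("utf-8", errors="replace"))
            offset += len(line)

    with open(pos_path, "w") as f:
        f.write(str(offset))
    return lines


if __name__ == "__main__":
    # Demo against a temporary file instead of /var/log/messages.
    workdir = tempfile.mkdtemp()
    log = os.path.join(workdir, "messages")
    pos = os.path.join(workdir, "messages.pos")
    with open(log, "w") as f:
        f.write("first\nsecond\n")
    print(read_new_lines(log, pos))  # ['first', 'second']
    with open(log, "a") as f:
        f.write("third\n")
    print(read_new_lines(log, pos))  # ['third']
```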

To Parse Or Not To Parse

Now, you might have noticed that I am not doing any parsing with Fluentd. That is because we don’t want to put a strain on the host resources. Instead, we use the Logging Analytics service to do the heavy processing required for parsing. This way we get the flexibility of ingesting a wide array of data with Fluentd and the scalability of Oracle Cloud for processing-intensive tasks like parsing and analytics. Parsing is also easier to set up this way, since Logging Analytics comes with more than 250 built-in parsers, and you can create new ones easily using the GUI.
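For a sense of the work being offloaded, here is a rough Python sketch contrasting what the host does with `@type none` (wrap the raw line, unparsed, under Fluentd’s default `message` key) with splitting a traditional syslog line into fields using a regular expression. The pattern and field names are illustrative assumptions, not the parser that Logging Analytics actually applies.

```python
import re
from typing import Dict, Optional

# Illustrative pattern for a traditional syslog line, for example:
# "Mar  1 10:15:02 myhost sshd[1234]: Accepted publickey for opc"
SYSLOG_RE = re.compile(
    r"^(?P<timestamp>[A-Z][a-z]{2}\s+\d{1,2} \d{2}:\d{2}:\d{2}) "
    r"(?P<host>\S+) "
    r"(?P<program>[^\s\[:]+)(?:\[(?P<pid>\d+)\])?: "
    r"(?P<msg>.*)$"
)


def parse_none(line: str) -> Dict[str, str]:
    """What the host does with `@type none`: keep the raw line as-is."""
    return {"message": line}


def parse_syslog(line: str) -> Optional[Dict[str, Optional[str]]]:
    """Roughly the kind of parsing deferred to the service (illustrative)."""
    match = SYSLOG_RE.match(line)
    return match.groupdict() if match else None


if __name__ == "__main__":
    sample = "Mar  1 10:15:02 myhost sshd[1234]: Accepted publickey for opc"
    print(parse_none(sample))
    print(parse_syslog(sample))
```

Even this simple pattern runs a regular expression against every line; multiplied across many sources and formats, that is the host CPU the design avoids spending.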

Next, in the filter section, we decorate the records tagged oci.syslog with contextual, Logging Analytics-specific fields (entity, log group, entity type, log source, and log path) that help you find the logs faster in Logging Analytics. Visit the service documentation for more information on the key concepts of the service.
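The following Python sketch shows roughly how a record changes as it passes through the filter section, assuming the none parser’s default `message` key and the `tailed_path` key that `path_key` adds. The OCID values are the same elided placeholders used in the configuration, and `enrich` is a hypothetical helper that mimics the effect of record_transformer, not its implementation.

```python
from typing import Dict

# Placeholders copied from the configuration; substitute your own values.
ENTITY_ID = "<ocid1.loganalyticsentity.oc1.....>"
LOG_GROUP_ID = "<ocid1.loganalyticsloggroup.....>"


def enrich(record: Dict[str, str]) -> Dict[str, str]:
    """Mimic the <filter oci.syslog> block: keep the existing keys (including
    the raw line in "message") and add Logging Analytics metadata fields."""
    enriched = dict(record)
    enriched.update({
        "entityId": ENTITY_ID,
        "logGroupId": LOG_GROUP_ID,
        "entityType": "Host (Linux)",
        "logSourceName": "Linux Syslog Logs",
        "logPath": record["tailed_path"],
    })
    return enriched


if __name__ == "__main__":
    # Assumed shape of a record emitted by tail with `@type none`
    # and `path_key tailed_path`.
    raw = {"message": "Mar  1 10:15:02 myhost sshd[1234]: Accepted publickey",
           "tailed_path": "/var/log/messages"}
    for key, value in enrich(raw).items():
        print(f"{key}: {value}")
```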

Create a log group and see more information here:

Create an Entity of type “Host (Linux)”:

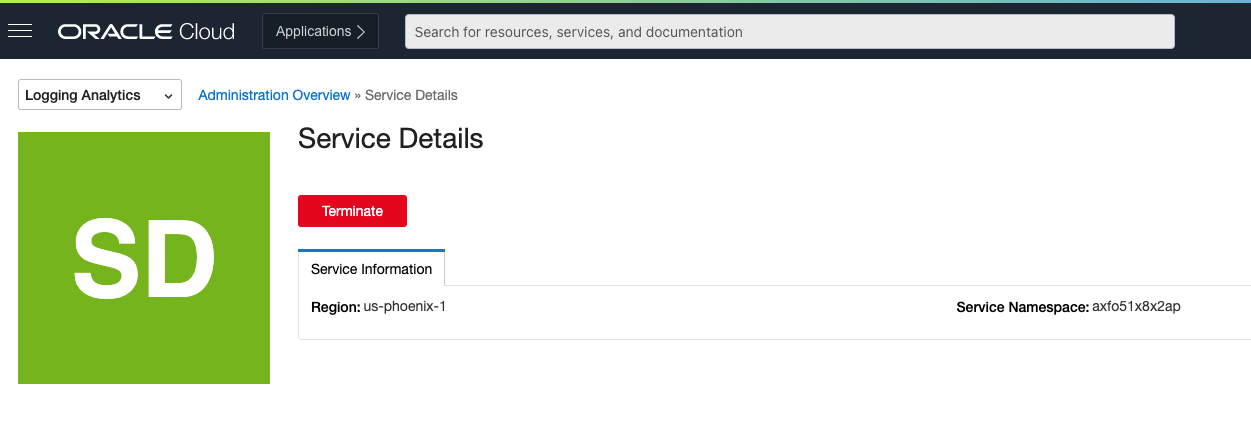

Find the namespace, also described here:

In the output plugin section, we tell Fluentd to send the logs to your OCI tenancy through the Logging Analytics output plugin we installed earlier, authenticating with the API key defined in the ~/.oci/config file. Note that the user must have permission to upload logs. You can verify that quickly by uploading a small file here: https://cloud.oracle.com/loganalytics/upload_create?context=UPLOADS. If the upload fails, refer to the documentation for any missing policies. The td-agent user also needs permission to read the /var/log/messages file, and write permission on the /var/log directory so that it can create the buffer and pos files:

chown td-agent /var/log/messages

setfacl -m u:td-agent:rwx /var/log
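Before starting Fluentd, you can confirm that the permissions took effect. This small Python sketch (`check_access` and the script name `check_access.py` are hypothetical) uses `os.access` to test read access to the log file and write plus search access to the directory. `os.access` checks the real user ID, so run it as the td-agent user, for example with `sudo -u td-agent python3 check_access.py`.

```python
import os
from typing import List


def check_access(log_file: str, work_dir: str) -> List[str]:
    """Return a list of problems for the user running this script;
    an empty list means both checks passed (hypothetical helper)."""
    problems = []
    if not os.access(log_file, os.R_OK):
        problems.append(f"cannot read {log_file}")
    # Creating files in a directory needs write and search (x) permission.
    if not os.access(work_dir, os.W_OK | os.X_OK):
        problems.append(f"cannot create files in {work_dir}")
    return problems


if __name__ == "__main__":
    issues = check_access("/var/log/messages", "/var/log")
    print("\n".join(issues) if issues else "permissions look OK")
```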

The final step is starting (or restarting if already running) Fluentd:

TZ=utc /etc/init.d/td-agent start

Within a few moments, you should see content from /var/log/messages in the Log Explorer, ready for analysis.

If you don’t see this in a few minutes, you can check for errors in:

- td-agent logs in /var/log/td-agent/td-agent.log
- Output plugin logs in /var/log/oci-…log
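When these log files are long, it helps to filter for warning and error lines first. Fluentd writes a level tag such as `[info]`, `[warn]`, or `[error]` into each line of its own log; the Python sketch below (`find_problems` is a hypothetical helper) prints only the warn, error, and fatal lines. It defaults to the td-agent log path given above; the output plugin log’s exact name is not spelled out here, so pass that path yourself, and note that the sketch assumes the plugin log uses the same level markers.

```python
import os
import sys
from typing import Iterable, List

# Fluentd tags each line of its own log with a level such as "[warn]:".
LEVEL_MARKERS = ("[warn]", "[error]", "[fatal]")


def find_problems(lines: Iterable[str]) -> List[str]:
    """Keep only lines that carry a warn, error, or fatal level marker."""
    return [line.rstrip("\n") for line in lines
            if any(marker in line for marker in LEVEL_MARKERS)]


if __name__ == "__main__":
    # Defaults to the td-agent log; pass another path as the first argument.
    path = sys.argv[1] if len(sys.argv) > 1 else "/var/log/td-agent/td-agent.log"
    if not os.path.exists(path):
        print(f"{path} not found")
    else:
        with open(path, errors="replace") as f:
            for line in find_problems(f):
                print(line)
```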

The pipeline leverages the syslog log source and parsers that come built in with Logging Analytics, which let you view the logs broken down by the services that generated them.

Get Started Now

In this blog post, we walked through the end-to-end process of installing and configuring Fluentd to ingest logs into the Logging Analytics service using the new output plugin. This method is well suited to resource-constrained environments: the lightweight Fluentd collector runs on the systems, while log parsing and processing are offloaded to the scalable Logging Analytics cloud service, giving you the best of both worlds. The output plugin has many options for tuning performance, handling multi-line logs, and configuring workers, and it has been tested uploading up to 1 GB per minute.

For more end-to-end examples of installing the OCI Logging Analytics output plugin and configuring Fluentd to ingest other types of logs, see this tutorial.