In our 3-part blog series, we’ve aimed to help you understand how customers succeed with HeatWave by considering three very common patterns. Part 1 focused on migrating MySQL-based databases to HeatWave MySQL, and part 2 on innovating with AI using HeatWave GenAI and HeatWave AutoML. In this final blog, we’ll consider how customers get insights from all their data using HeatWave Lakehouse.

Increasingly challenging to obtain fast insights from diverse data sets

Over 80% of the data we generate is unstructured. It comes from social media, web logs, Internet of Things (IoT) devices, and other sources, and it is often stored in object storage. 95% of organizations state that managing unstructured data is a significant problem. With this complexity, it’s not surprising that a large amount of collected data is never analyzed. Indeed, such analysis can involve complicated data movements and transformations across multiple data stores and services, which makes it difficult to obtain a complete view. Moreover, security and regulatory compliance risks increase as data moves between services, and so do costs. This is exacerbated by the fact that AI’s value is directly tied to the data it learns from.

HeatWave Lakehouse: Query all your data and get built-in AI

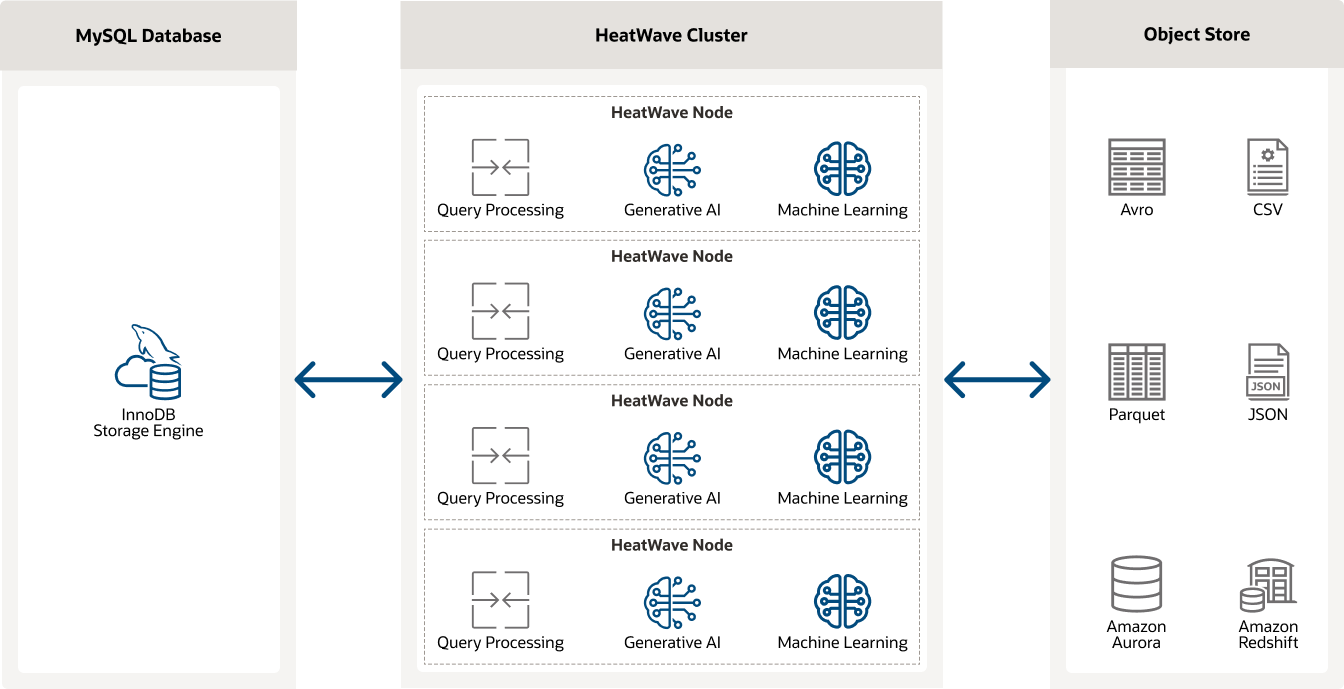

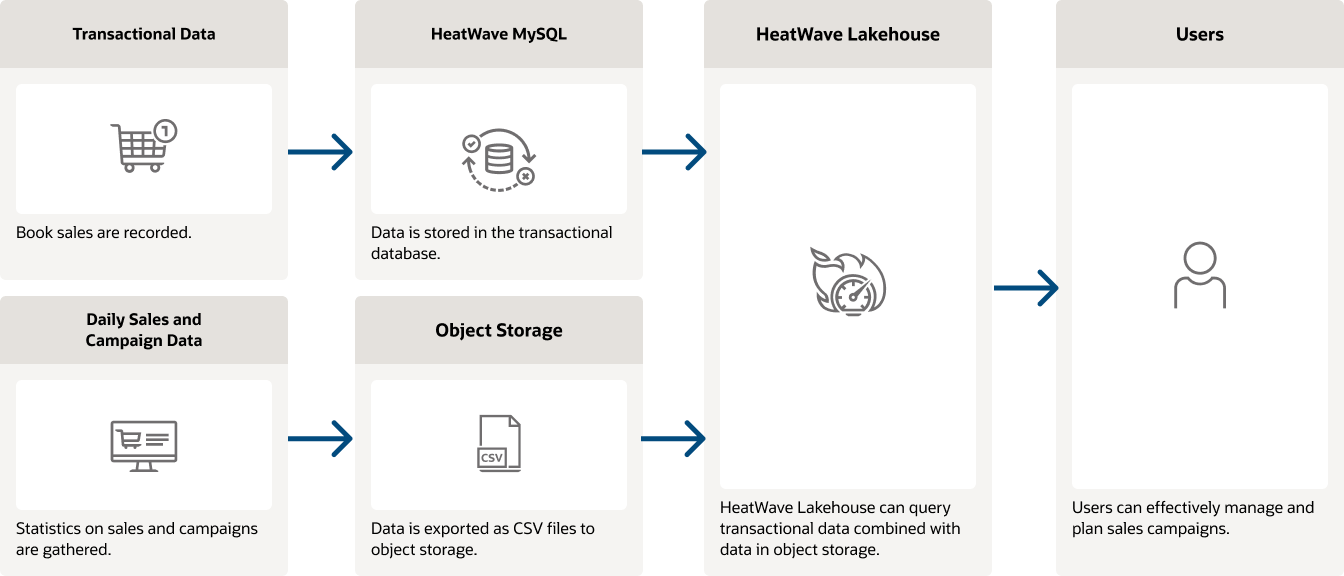

HeatWave Lakehouse allows you to use standard SQL syntax to query data in object storage in various file formats, including CSV, Parquet, Avro, JSON, and export files from other databases. You can optionally combine this data with transactional data in MySQL databases to get insights across your data lake and operational databases/data warehouses using a single solution. Data loaded into the HeatWave cluster for processing is automatically transformed into the HeatWave in-memory format, and object storage data isn’t copied to the MySQL database.
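To give a feel for what a combined query looks like, here is a minimal sketch in Python. It does not connect to HeatWave: SQLite in-memory tables stand in for both a MySQL transactional table and a table backed by files in object storage, and the table and column names (`orders_recent`, `orders_history`, `region`, `amount`) are hypothetical. The point is only the shape of the SQL, which treats both sources as ordinary tables in one statement.

```python
import sqlite3

# Stand-in only: SQLite in-memory tables play the roles of a MySQL table
# (orders_recent) and a table backed by object storage files (orders_history).
# All table and column names here are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders_recent  (order_id INTEGER, region TEXT, amount REAL);
    CREATE TABLE orders_history (order_id INTEGER, region TEXT, amount REAL);
    INSERT INTO orders_recent  VALUES (101, 'EMEA', 40.0), (102, 'APAC', 25.0);
    INSERT INTO orders_history VALUES (1, 'EMEA', 10.0), (2, 'EMEA', 30.0),
                                      (3, 'APAC', 5.0);
""")

# One standard SQL statement spans recent and historical data.
COMBINED_QUERY = """
    SELECT region, SUM(amount) AS total_amount
    FROM (
        SELECT region, amount FROM orders_recent
        UNION ALL
        SELECT region, amount FROM orders_history
    ) AS all_orders
    GROUP BY region
    ORDER BY region
"""

totals = conn.execute(COMBINED_QUERY).fetchall()
for region, total_amount in totals:
    print(region, total_amount)
```

In a real deployment the historical table would be defined over files in object storage rather than populated with `INSERT` statements; the query itself would not need to know the difference.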

The built-in HeatWave Autopilot provides workload-aware, machine learning-powered automation for HeatWave Lakehouse at no additional cost. It improves performance and scalability without requiring database tuning expertise, increases the productivity of developers and DBAs, and helps eliminate human errors. For example:

- Auto provisioning predicts the number of HeatWave nodes required to run a workload by adaptively sampling the table data to be analyzed. This means you no longer need to manually estimate the optimal size of your cluster.

- Auto schema inference automatically infers the mapping of file data to the corresponding schema definition for all supported file types, including CSV. As a result, you don’t need to manually define and update the schema mapping of files, saving time and effort.

- Auto query plan improvement learns various statistics from the execution of queries and improves the execution plan of future queries. This improves the performance of the system as more queries are run.
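As a rough illustration of what schema inference involves, the toy sketch below samples rows from a CSV file and picks the narrowest type that fits every sampled value in each column. This is not HeatWave Autopilot's algorithm, which is not described here; the function names, the three type labels, and the sample data are all assumptions made for the example.

```python
import csv
import io


def infer_type(values):
    """Return the narrowest of INT, DOUBLE, or VARCHAR that fits every value."""
    def fits(cast):
        try:
            for value in values:
                cast(value)
            return True
        except ValueError:
            return False

    if fits(int):
        return "INT"
    if fits(float):
        return "DOUBLE"
    return "VARCHAR"


def infer_schema(csv_text, sample_rows=100):
    """Map each header name to a type inferred from up to sample_rows rows."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    rows = [row for _, row in zip(range(sample_rows), reader)]
    columns = list(zip(*rows))  # transpose sampled rows into columns
    return {name: infer_type(col) for name, col in zip(header, columns)}


# Hypothetical sensor data, used only to exercise the sketch.
sample = "sensor_id,temperature,location\n1,21.5,lab\n2,19.0,roof\n"
schema = infer_schema(sample)
print(schema)
```

A production implementation would also have to handle dates, nulls, delimiters, and values that only appear outside the sample, which is part of why automating this step saves effort.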

Use cases for HeatWave Lakehouse

Let’s review four use cases implemented by customers in different industries:

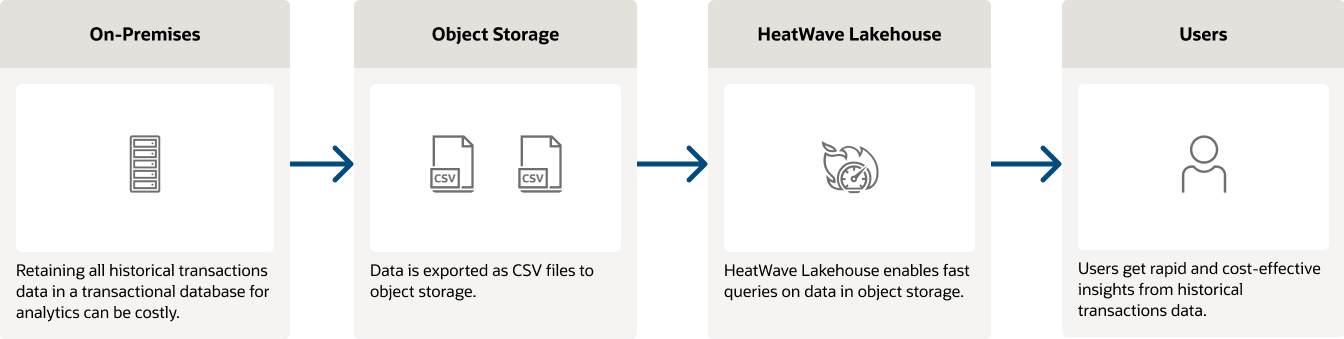

Banking

Users can obtain rapid and cost-effective insights from historical banking transactions offloaded to object storage for analysis using HeatWave Lakehouse.

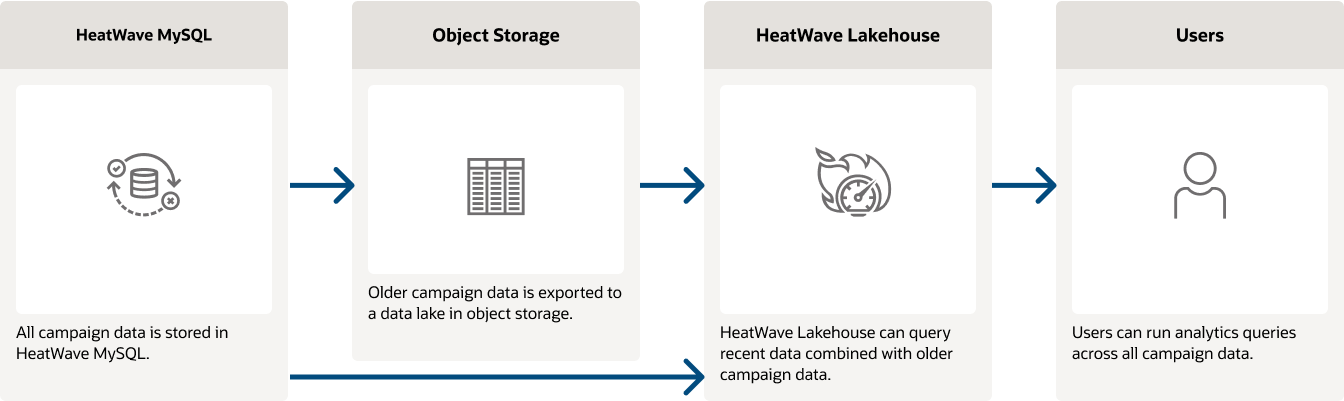

Digital marketing

With HeatWave Lakehouse, users can obtain insights across recent campaign data in their transactional database and older campaign data cost-effectively stored in their data lake.

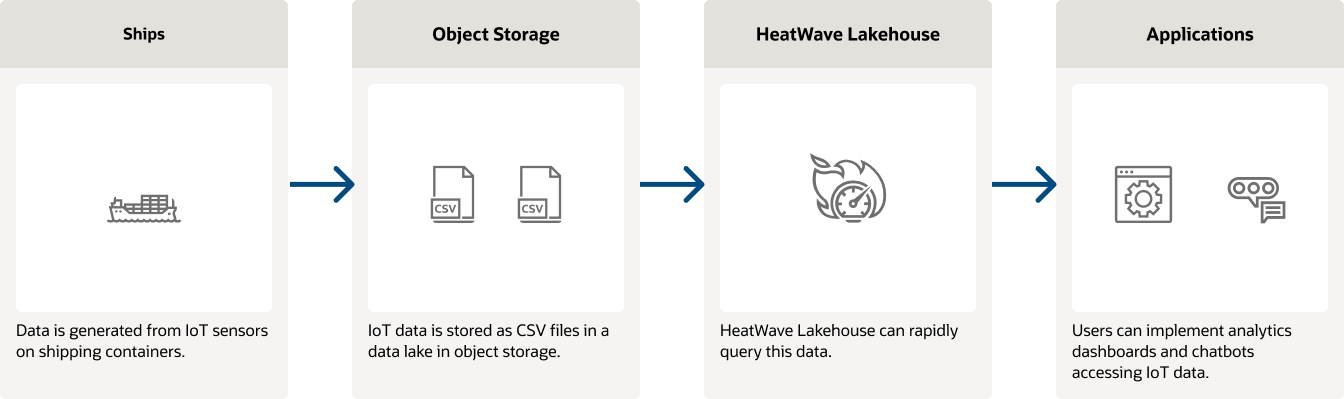

IoT

Data generated by IoT sensors can rapidly be accessed by applications via HeatWave Lakehouse.

Media

Users can manage and plan media sales campaigns using HeatWave Lakehouse to simultaneously query sales data in their transactional database as well as sales statistics and campaign data in object storage.

Faster and less expensive than Snowflake, Amazon Redshift, Databricks, and Google BigQuery

HeatWave Lakehouse allows you to get significantly faster insights at lower cost. As demonstrated by a 500 TB TPC-H benchmark, the query performance of HeatWave Lakehouse is:

- 15X faster than Amazon Redshift, delivering 11X better price-performance.

- 18X faster than Databricks, delivering 15X better price-performance.

- 18X faster than Snowflake, delivering 19X better price-performance.

- 35X faster than Google BigQuery, delivering 22X better price-performance.
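Speed and price-performance are separate measures, which is why the two multipliers in each line differ. The sketch below shows how they relate under one common definition, price-performance = cost rate × runtime (lower is better). That definition is an assumption made for this example, not a statement of the benchmark's methodology, and the derived ratios are arithmetic consequences of that assumption rather than published figures.

```python
# (speedup, price-performance gain) as quoted in the list above.
BENCHMARK_CLAIMS = {
    "Amazon Redshift": (15, 11),
    "Databricks": (18, 15),
    "Snowflake": (18, 19),
    "Google BigQuery": (35, 22),
}


def implied_cost_rate_ratio(speedup, price_perf_gain):
    """Competitor cost rate divided by HeatWave cost rate.

    Assumes price-performance = cost rate * runtime (lower is better), so
    price_perf_gain = speedup * (competitor_rate / heatwave_rate).
    """
    return price_perf_gain / speedup


for name, (speedup, gain) in BENCHMARK_CLAIMS.items():
    ratio = implied_cost_rate_ratio(speedup, gain)
    print(f"{name}: implied cost-rate ratio {ratio:.2f}")
```

Under this assumed definition, a price-performance gain larger than the speedup means the compared configuration also cost more per unit of time, and a smaller gain means it cost less.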

Built-in AI

As noted in part 1 of our blog series, HeatWave provides the simplicity of integrated, automated, and secure AI, transactions, and lakehouse-scale analytics in one cloud service. You can use a single HeatWave capability, such as HeatWave Lakehouse, and you can also take advantage of all the other built-in HeatWave capabilities at no additional cost—with great synergies when doing so. For instance:

- With HeatWave GenAI, you can upload and query unstructured documents in HeatWave Vector Store and use generative AI with your business data without AI expertise, data movement, or additional cost.

- With HeatWave AutoML, you can use data in object storage, MySQL Database, or both to build, train, deploy, and explain ML models. You don’t need to move the data to a separate ML cloud service or be an ML expert, because HeatWave AutoML automates the machine learning pipeline.

Conclusion

Most organizations struggle to obtain fast insights from an ever-growing set of more diverse data, and this complexity delays business decisions. This is compounded by the fact that data is foundational for AI. By using HeatWave Lakehouse, you can:

- Use standard SQL to query data in object storage—with unmatched performance and price-performance.

- Optionally combine data in object storage, including offloaded historical data, with data in MySQL databases to obtain deeper insights.

- Easily use generative AI and ML with your business data housed in databases and object storage.

HeatWave Lakehouse is available on OCI, AWS, and Microsoft Azure.

Don’t hesitate to contact us. We’ll be happy to discuss how we can help.

Resources

- Learn more about HeatWave Lakehouse

- Read the technical brief

- Request a free workshop to evaluate or get started with HeatWave Lakehouse