We recently announced the general availability of HeatWave GenAI, which delivers the industry’s first in-database LLMs, an automated in-database vector store, scale-out vector processing, and the ability to have contextual conversations in natural language. To make it easier for customers to adopt generative AI, we have added new features that focus on the areas listed below:

- Enable new applications

- Improve ease of application development

- Improve latency and throughput

- Multi-cloud support

Enable new applications

Multi-lingual Support

When we announced the new HeatWave GenAI capabilities, HeatWave supported only English-language documents for vector store creation. For customers who wanted to interact in a language other than English, we provided a translation service within HeatWave GenAI that let them query their English documents in their native language.

HeatWave GenAI now adds multi-lingual support for more than two dozen languages, allowing all HeatWave GenAI APIs to be used in non-English languages. This includes ingesting documents written in languages other than English, running similarity search over them, and querying them with prompts in the same language.

Let’s explore the new capabilities with the following example. We will create two vector store tables, one by loading a document in English, and the other by loading a document in German.

call sys.vector_store_load('uri-to-german-doc', '{"language": "de"}');

The language parameter is specified using a valid two-letter ISO 639-1 code. A few example codes are shown below; for a complete list, see Supported Languages, Embedding Models, and LLMs.

| Language | Code |
| --- | --- |
| English | en |
| French | fr |
| German | de |
| Spanish | es |
| Portuguese | pt |
| Hindi | hi |
| Chinese | zh |
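
An application could check the language code before it issues the load call shown earlier. The following is a minimal sketch under stated assumptions: the helper `build_vector_store_load` and the `EXAMPLE_CODES` set are hypothetical, the set holds only the seven example codes from the table rather than the full supported list, and the `%s` placeholders assume a MySQL driver that uses that parameter style.

```python
import json

# Only the example codes from the table above; the full supported list is longer.
EXAMPLE_CODES = {"en", "fr", "de", "es", "pt", "hi", "zh"}


def build_vector_store_load(uri: str, language: str) -> tuple[str, tuple[str, str]]:
    """Return a parameterized CALL statement and its arguments (hypothetical helper)."""
    code = language.strip().lower()
    if code not in EXAMPLE_CODES:
        raise ValueError(f"unknown or unlisted language code: {language!r}")
    options = json.dumps({"language": code})
    # Placeholders keep the URI and the options JSON out of the SQL text itself.
    return "CALL sys.vector_store_load(%s, %s)", (uri, options)


statement, params = build_vector_store_load("uri-to-german-doc", "de")
print(statement, params)
```

Rejecting an unknown code in the application means a typo such as "ge" is caught before any document is ingested.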

We use the ML_RAG routine to create responses to our questions. The language in which the question is asked should align with the language passed in the “language” parameter.

For example, when asking a question in English, specifying the language parameter as “en”:

SELECT JSON_PRETTY(@output) \G

*************************** 1. row ***************************
JSON_PRETTY(@output): {
  "text": " Response in English…",
  "citations": [
    {
      "segment": "Segment in English…",
      "distance": 0.36409956216812134,
      "document_name": "https://objectstorage.uk-london-1.oraclecloud.com/pdf_files/doc_en.pdf"
    },
    …
  ]
}

When asking a question in German, specifying the language parameter as “de”:

SELECT JSON_PRETTY(@output) \G

*************************** 1. row ***************************
JSON_PRETTY(@output): {
  "text": " Antwort auf Deutsch…",
  "citations": [
    {
      "segment": "Segment auf Deutsch…",
      "distance": 0.23449526313815189,
      "document_name": "https://objectstorage.uk-london-1.oraclecloud.com/pdf_files/doc_de.pdf"
    },
    …
  ]
}

In each case, ML_RAG looks up vector store documents in the specified language. The best-matching segments are then passed to the LLM as context, alongside an instruction to respond in the specified language.
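
The flow just described can be sketched conceptually in a few lines. This is an illustration only, not HeatWave's internal implementation: the `Segment` type, the `build_rag_prompt` helper, the prompt wording, and the sample segments are all hypothetical. It assumes, as with the distances in the citations above, that a smaller distance means a closer match.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    text: str
    distance: float  # smaller distance = closer match to the question


def build_rag_prompt(question: str, segments: list[Segment], language: str, top_k: int = 2) -> str:
    """Pick the closest segments and ask for an answer in the given language."""
    best = sorted(segments, key=lambda s: s.distance)[:top_k]
    context = "\n".join(f"- {s.text}" for s in best)
    return (
        f"Answer in the language with ISO 639-1 code '{language}'.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical segments with made-up distances.
segments = [
    Segment("Segment A", 0.52),
    Segment("Segment B", 0.23),
    Segment("Segment C", 0.36),
]
prompt = build_rag_prompt("Beispielfrage?", segments, "de")
print(prompt)
```

With `top_k` set to 2, only the two closest segments (B, then C) reach the prompt, and the segment with the largest distance is dropped.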

Multi-lingual support also applies to other HeatWave GenAI routines:

ML_GENERATE:

HEATWAVE_CHAT:

CALL sys.HEATWAVE_CHAT(@query);

By default, for languages other than English, HeatWave GenAI uses the in-database LLM “llama3-8b-instruct-v1”. Users can also choose other LLMs for non-English languages, such as the OCI Generative AI model “cohere.command-r-plus”.

Optical Character Recognition (OCR) support

Many organizations receive information from print media such as printed contracts and documents. Often, they store these documents by scanning them into a digital image format. With OCR support, HeatWave GenAI can now read the text in image files, including these scanned documents, and ingest it as vector embeddings. This lets enterprises query the information in these documents through similarity search and RAG.

Improve ease of application development

Generative AI is a new technology, and developing applications for it can require AI expertise. The following features make it simpler for developers to use HeatWave GenAI:

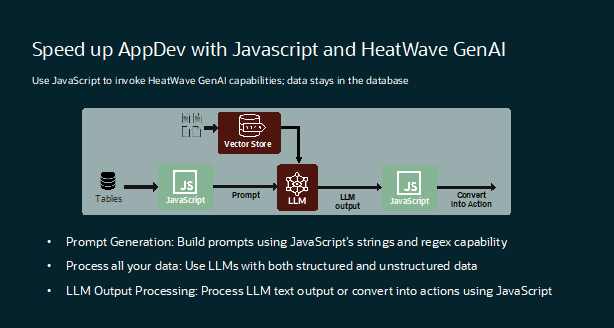

In-database JavaScript support for GenAI

Earlier this year, we introduced support for JavaScript in HeatWave MySQL stored procedures and functions. Because generative AI and LLMs primarily handle textual and JSON data, JavaScript is a natural choice for manipulating their inputs and outputs.

We have added native support for the vector data type in JavaScript and the ability to invoke GenAI capabilities from a JavaScript program. This allows developers to preprocess prompts based on SQL data, invoke LLMs directly within the database, and post-process the responses with ease.

The table below shows the SQL and JavaScript functions for HeatWave GenAI:

| Use case | SQL function | JavaScript function |
| --- | --- | --- |
| Text generation/summarization | ML_GENERATE(prompt, options) | ml.generate(prompt, options) |
| RAG | ML_RAG(query, options) | ml.rag(query, options) |
| Embedding generation | ML_EMBED(query, options) | ml.embed(query, options) |

For details on how to use HeatWave GenAI in JavaScript programs, see the HeatWave GenAI documentation.

Support for OCI Generative AI models

HeatWave GenAI in-database LLMs use quantized models such as Mistral-7B-Instruct and Llama-3-8B-Instruct. These models have a smaller set of parameters, which allows HeatWave GenAI to run them on the same CPU compute resources as HeatWave. This lets us provide generative AI capabilities at a lower cost, offer a more secure environment, and deliver predictable performance across all OCI regions and other clouds.

In addition to HeatWave in-database LLMs, HeatWave GenAI integrates with OCI Generative AI Service, allowing customers to use larger, more powerful LLM models provided by OCI Generative AI service. These models are invoked the same way as HeatWave in-database LLMs, by simply specifying their respective model ID. For example, to generate text:

To call HeatWave in-database LLMs:

To call OCI Generative AI LLMs within HeatWave:

To use the OCI Generative AI service, users need to sign up for it explicitly, and usage of its LLMs is charged separately by the service. The table below shows the LLMs currently supported by the OCI Generative AI service:

| LLM model_id |
| --- |
| cohere.command-r-plus |
| cohere.command-r-16k |
| meta.llama-3-70b-instruct |
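
As a rough sketch of the idea that only the model ID changes between an in-database call and an OCI Generative AI call, the helper below builds the same statement for both. The helper name `build_generate_call` is hypothetical, and the `SELECT sys.ML_GENERATE(...)` invocation form and the `model_id` option key are assumptions to be checked against the HeatWave GenAI documentation. The in-database ID is the one quoted earlier in this post.

```python
import json

IN_DATABASE_MODELS = {"llama3-8b-instruct-v1"}  # the ID quoted earlier in this post
OCI_MODELS = {
    "cohere.command-r-plus",
    "cohere.command-r-16k",
    "meta.llama-3-70b-instruct",
}


def build_generate_call(prompt: str, model_id: str) -> tuple[str, tuple[str, str]]:
    """Build the same statement for either kind of model; only model_id differs."""
    if model_id not in IN_DATABASE_MODELS | OCI_MODELS:
        raise ValueError(f"model not listed in this post: {model_id!r}")
    # Assumption: the options JSON selects the model through a "model_id" key.
    options = json.dumps({"model_id": model_id})
    return "SELECT sys.ML_GENERATE(%s, %s)", (prompt, options)


for model in ("llama3-8b-instruct-v1", "cohere.command-r-plus"):
    print(build_generate_call("What is HeatWave?", model))
```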

The OCI Generative AI service also supports embedding models that can be used with HeatWave GenAI. The table below shows the embedding models it currently supports. The first two models are for English inputs, while the last two are for non-English languages.

| Embedding model_id |
| --- |
| cohere.embed-english-light-v3.0 |
| cohere.embed-english-v3.0 |
| cohere.embed-multilingual-light-v3.0 |
| cohere.embed-multilingual-v3.0 |
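
The English versus non-English split in the table can be captured in a small selection helper. This is a sketch: the function `pick_embedding_model` and its `light` flag are hypothetical, and the model IDs are copied from the table.

```python
ENGLISH_MODELS = ["cohere.embed-english-light-v3.0", "cohere.embed-english-v3.0"]
MULTILINGUAL_MODELS = [
    "cohere.embed-multilingual-light-v3.0",
    "cohere.embed-multilingual-v3.0",
]


def pick_embedding_model(language: str, light: bool = False) -> str:
    """Choose an embedding model ID from the table based on the input language."""
    models = ENGLISH_MODELS if language.strip().lower() == "en" else MULTILINGUAL_MODELS
    # Index 0 is the "light" variant, index 1 the full-size one.
    return models[0] if light else models[1]


print(pick_embedding_model("en"))
print(pick_embedding_model("de", light=True))
```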

Similar to invoking LLMs, users can specify the model_id for the OCI Generative AI embedding model for embedding generation:

Latency and throughput improvement

LLM inference batch processing

Improving HeatWave GenAI in-database LLM performance and throughput helps organizations handle larger workloads at lower cost. HeatWave GenAI now supports LLM inference batch processing. This allows customers to run GenAI routines such as generate or RAG on multiple queries in parallel across different nodes in the HeatWave cluster, improving LLM inference throughput while keeping the inference latency of each request unchanged.
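
A back-of-the-envelope model shows why throughput can rise while per-request latency stays the same. The sketch below is idealized and its numbers are hypothetical: it assumes each node serves one request at a time, requests are spread evenly across nodes, and there is no scheduling overhead.

```python
import math


def batch_wall_time(requests: int, nodes: int, latency_s: float) -> float:
    """Idealized wall-clock time: requests run in rounds of `nodes` at a time."""
    rounds = math.ceil(requests / nodes)
    return rounds * latency_s


latency = 2.0  # hypothetical per-request latency in seconds, the same in both cases
sequential = batch_wall_time(100, 1, latency)  # 100 rounds -> 200.0 seconds
parallel = batch_wall_time(100, 8, latency)    # 13 rounds  -> 26.0 seconds

print(f"one node:    {sequential} s, {100 / sequential:.2f} requests/s")
print(f"eight nodes: {parallel} s, {100 / parallel:.2f} requests/s")
```

Each request still takes the same 2 seconds; only the number of requests completed per second changes.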

Users now have the flexibility to submit a single inference request or multiple inference requests in a single query. The table below lists the HeatWave GenAI routines for each mode:

| Use case | Single query | Batch processing |
| --- | --- | --- |
| Text generation or summarization | ML_GENERATE | ML_GENERATE_TABLE |
| RAG | ML_RAG | ML_RAG_TABLE |
| Embedding generation | ML_EMBED_ROW | ML_EMBED_TABLE |

To facilitate batch processing, requests are stored in a table. The table can be a HeatWave Lakehouse table, where the requests are loaded from a file such as a CSV file in object storage, or a MySQL InnoDB table.
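
For the CSV route, the request file can be produced with a few lines of code. This is a minimal sketch: the helper `prompts_to_csv` is hypothetical, the header name `query_column` simply mirrors the column name used in the batch examples in this post, and uploading the file and defining the Lakehouse table are out of scope. Whether a header row is wanted depends on how the table is defined.

```python
import csv
import io


def prompts_to_csv(prompts: list[str]) -> str:
    """Serialize one prompt per row, letting the csv module handle quoting."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["query_column"])  # header row; drop it if the table expects none
    for prompt in prompts:
        writer.writerow([prompt])
    return buffer.getvalue()


csv_text = prompts_to_csv(["What is HeatWave?", 'Summarize "GenAI", briefly.'])
print(csv_text)
```

Using the `csv` module rather than string concatenation keeps prompts that contain commas or quotes intact when they are read back.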

Examples of running the batch processing routines:

CALL sys.ML_GENERATE_TABLE('db.input_table.query_column', 'db.output_table.out_column', JSON_OBJECT('context_column', 'context'));

CALL sys.ML_RAG_TABLE('db.input_table.query_column', 'db.input_table.out_column', JSON_OBJECT());

Multicloud Support

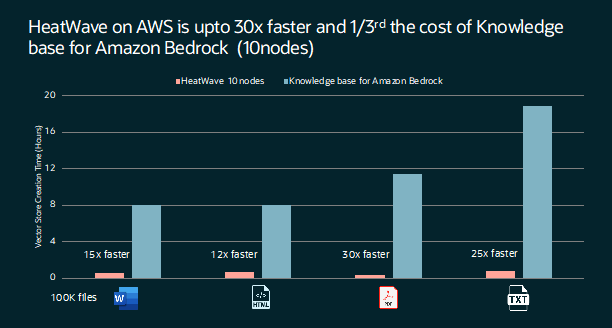

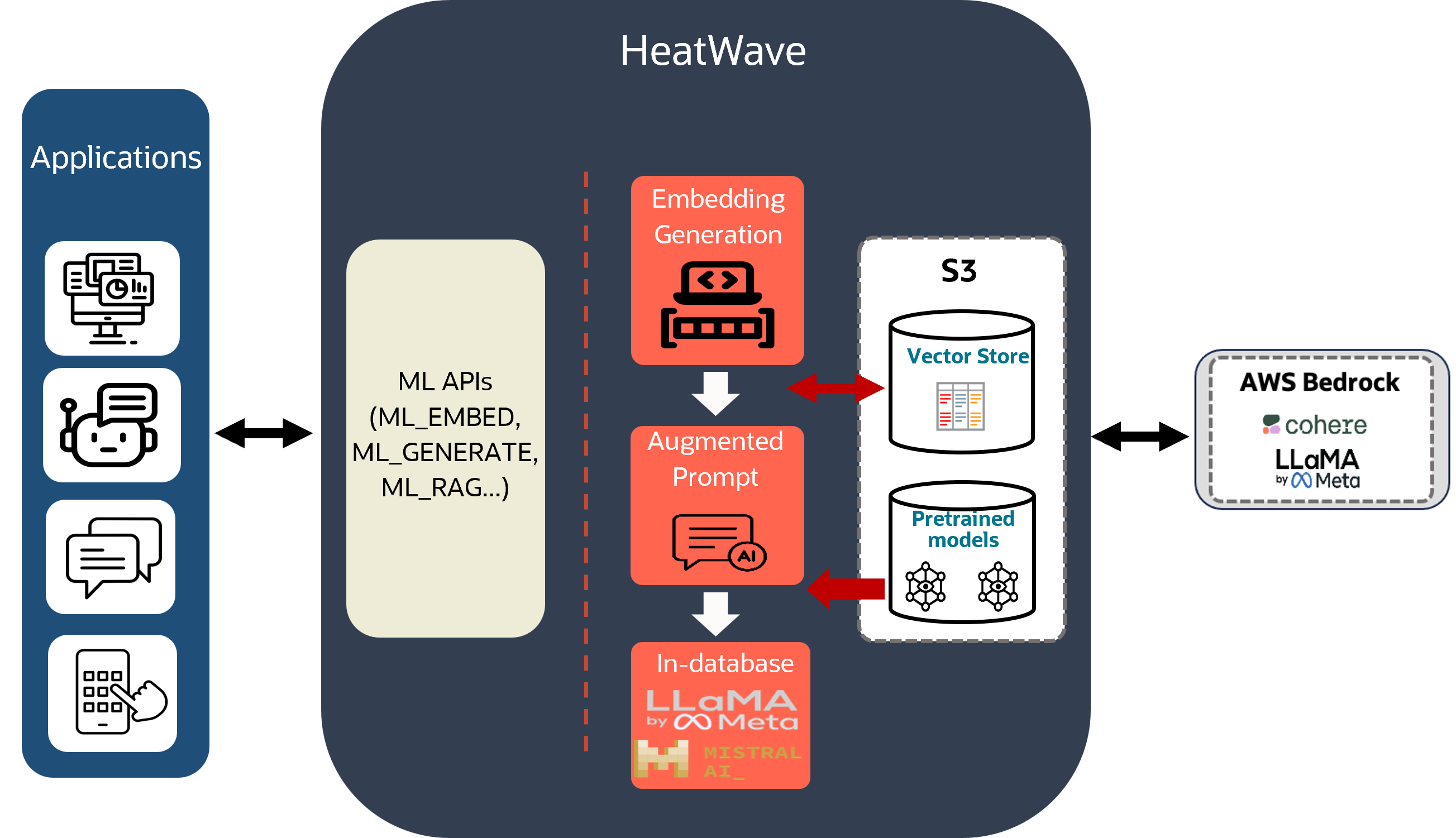

HeatWave GenAI Support in HeatWave on AWS

With the support of HeatWave GenAI on AWS, AWS customers can develop secure generative AI applications at a lower cost. They can automate vector store creation and vector embedding generation, use in-database large language models (LLMs) running on CPUs or optionally use Amazon Bedrock foundation models, get the best price-performance in the industry for vector processing, and have natural language conversations with their documents in Amazon S3.

For similarity search, HeatWave GenAI on AWS delivers 39x better price-performance than Snowflake, 96x better than Databricks, and 48x better than Google BigQuery.

For invoking LLMs, users can choose between HeatWave in-database LLMs and Amazon Bedrock foundation models such as the Cohere and Llama models. AWS charges separately for access to Amazon Bedrock foundation models.

Below is a list of supported Amazon Bedrock models. Follow these instructions to enable access to them.

| Model_id |
| --- |
| cohere.command-text-v14 |
| cohere.command-light-text-v14 |
| meta.llama2-70b-chat-v1 |
| meta.llama3-70b-instruct-v1:0 |

HeatWave GenAI on AWS provides the same functionality as HeatWave GenAI on OCI. It supports use cases such as content generation, summarization, and RAG (Retrieval-Augmented Generation). Let us see how to use HeatWave GenAI to generate content related to the Paris 2024 Olympic Games.

Content Generation

In this section, we use HeatWave GenAI as an assistant to prepare for a presentation about the Olympic games.

- Set your query.

- Run the query to generate the desired content.

- HeatWave GenAI generates the content for you.

Content summarization

Here, we use HeatWave GenAI to summarize Wikipedia’s introduction about the Olympic games.

- Set the text you want to summarize.

- Run the query to summarize the contents of the file.

- HeatWave GenAI summarizes the document for you.

Retrieval Augmented Generation

In this section, we load an unstructured document (a PDF file) that contains some statistics about the Paris 2024 Olympic Games from an S3 bucket into HeatWave and use HeatWave GenAI to answer some questions about the Games.

- Load the files that contain proprietary enterprise data into the HeatWave cluster.

[{"prefix": "s3://YOUR_BUCKET_NAME/paris_2024.pdf"}]}]}]';

MySQL> SET @options = JSON_OBJECT('policy', 'disable_unsupported_columns', 'external_tables', CAST(@dl_tables AS JSON));

MySQL> CALL sys.HEATWAVE_LOAD(@dl_tables, @options);

- Run the query to generate more accurate and contextually relevant responses with RAG.

- HeatWave GenAI sifts the documents and provides you with the response with the correct context.

"text": " 1. The United States won the most medals at the Paris Olympics, with a total of 126 medals.\n\n2. There were 17 world records broken at the Paris Olympic Games.\n\n3. An Olympic Village of 2,800 apartments was built for the Paris Olympic Games.", "citations": … } |

Natural Language Interaction

Let’s interact with HeatWave in natural language. For this purpose, we use HeatWave to retrieve information about the country that won the most medals at the Paris 2024 Olympic Games (this information is contained in the PDF file loaded into HeatWave) and then ask a follow-up question about that country.

- Use HEATWAVE_CHAT to retrieve the information of interest.

MySQL> call sys.heatwave_chat("What is the capital of the country that won the most medals at the Paris Olympics?");

- HeatWave GenAI sifts the documents and provides you with the response with the correct context.

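+-----------------------------------------------------+
| response                                            |
+-----------------------------------------------------+
| The capital of the United States is Washington D.C. |
+-----------------------------------------------------+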

- Ask a follow-up question about the previous answer.

+--------------------------------------------------------+
| response                                               |
+--------------------------------------------------------+
| I can’t make a list of cities in the USA since this would generate a large number of results, but here are some other American cities:
1. New York
2. Los Angeles
3. Chicago
4. Miami
5. Atlanta
6. Boston
+--------------------------------------------------------+

Additional resources:

- Oracle CloudWorld Keynote: Build Generative AI Applications—Integrated and Automated with HeatWave GenAI

- Oracle Unveils HeatWave Innovations Across Generative AI, Lakehouse, MySQL, AutoML, and Multicloud

- Generative AI in HeatWave: Introduction

- Develop new applications with HeatWave GenAI

- Data-driven, multilingual chat with HeatWave GenAI