Data-driven, multilingual chat with HeatWave GenAI

Chatbots boost operational efficiency and bring cost savings to enterprises while offering convenience and added services to internal and external customers. They allow enterprises to resolve customer issues effectively while reducing the need for human interaction, and they let enterprises proactively personalize content and deliver it at scale to a large number of users.

Traditional chatbots are task-oriented: they return automated stock responses that are rigidly structured and often miss the mark. With the promise of Generative AI, enterprises are looking for ways to revolutionize the chatbot experience and make it more sophisticated, conversational, and personalized. Such chatbots need to understand the intent behind users’ questions to provide relevant information.

While Generative AI will improve the chatbot experience, incorporating a Generative AI solution into a chatbot can be complex and time-consuming, and it requires AI expertise.

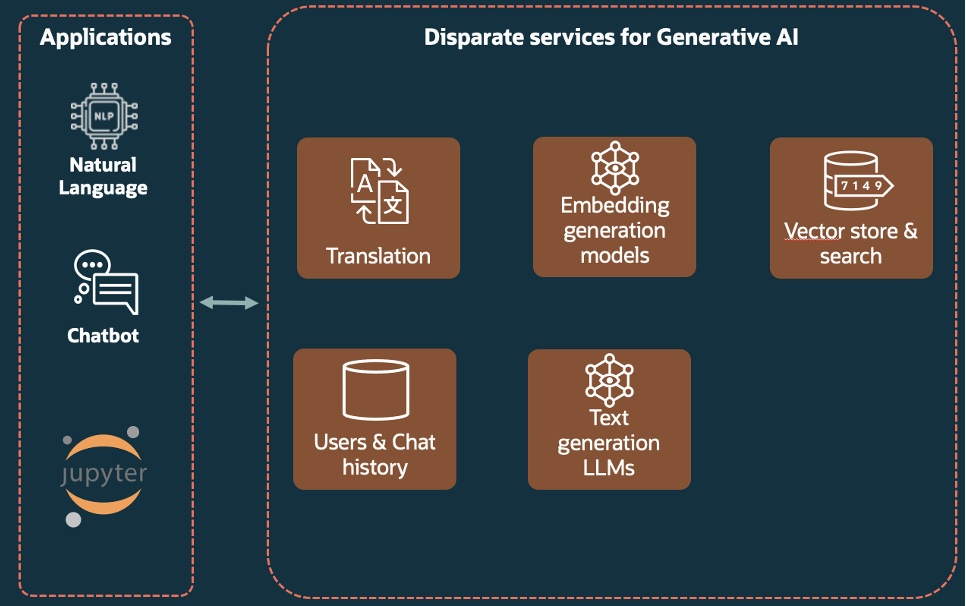

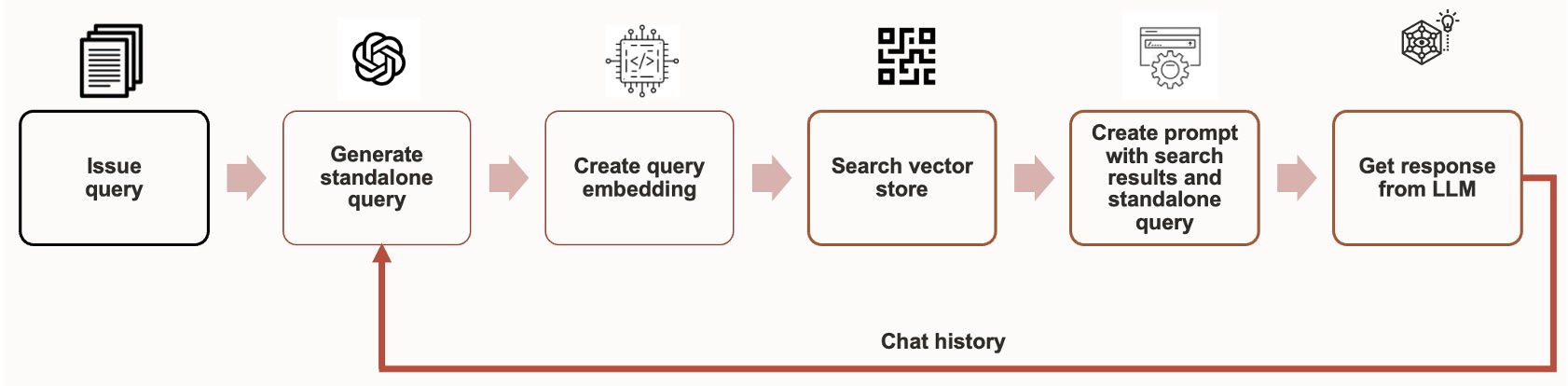

As shown in the sample architecture diagram above, to enhance a traditional chatbot with Generative AI, enterprises need to integrate at least five disparate services. These services include:

- A translation service to convert user requests in other languages into the native language

- A database service to store user metadata and chat history, which are used to disambiguate follow-up questions based on previous questions and context

- A vector embedding generation service to convert user requests and enterprise proprietary data into vector embeddings

- A vector store for storing vectors and performing semantic search

- A text-generation LLM service to generate relevant answers to user queries

All these services need to be orchestrated, and data must be moved between them, sometimes repeatedly. This increases overall complexity and cost.

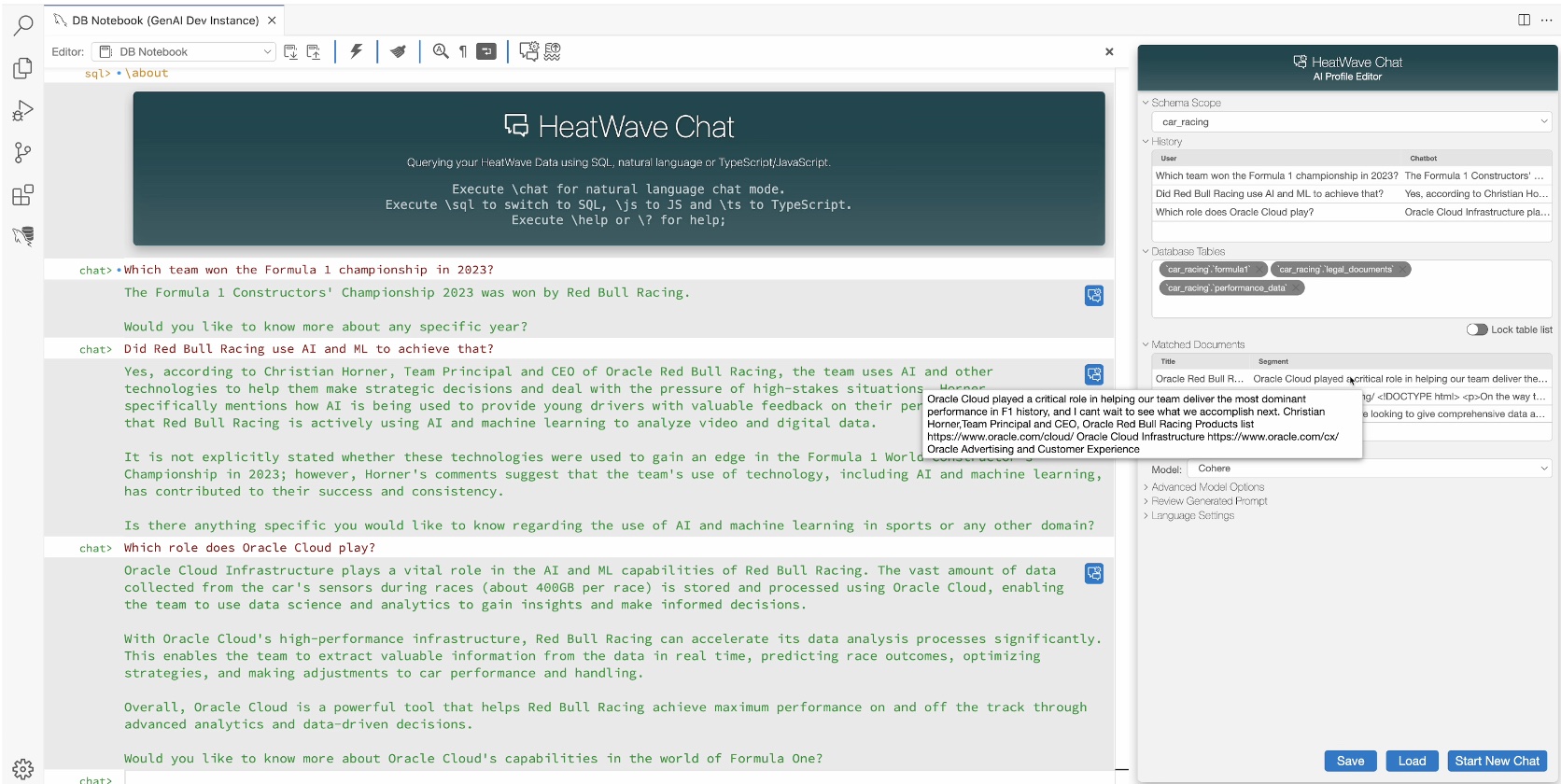

HeatWave Chat

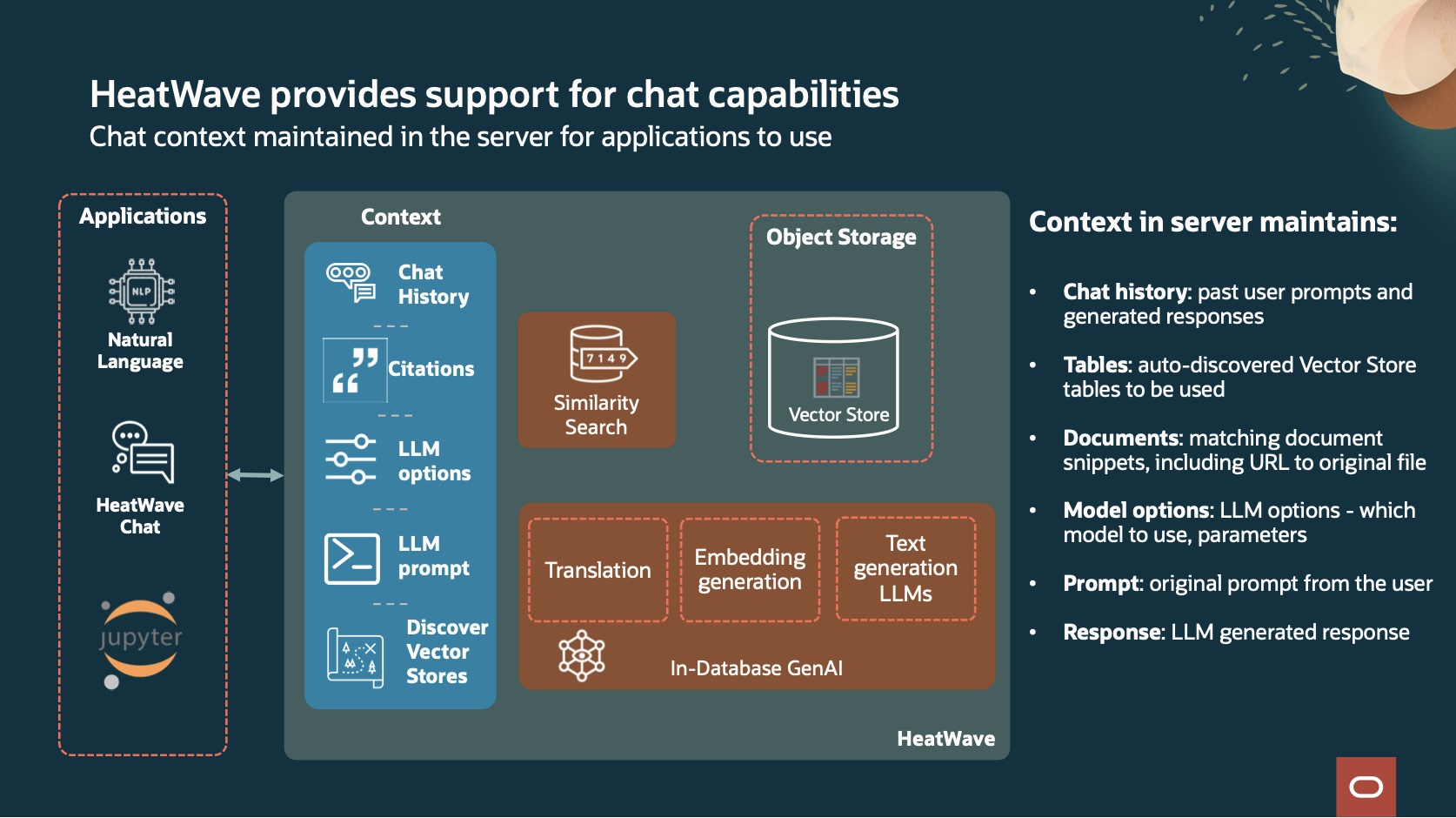

HeatWave GenAI offers an integrated environment for Generative AI, bringing every stage of the Generative AI pipeline together within HeatWave. It includes in-database embedding generation, a native vector store with similarity search, in-database LLMs, and support for OCI Generative AI Service LLMs. It also works seamlessly with other HeatWave capabilities such as machine learning, analytics, transactional processing, and Lakehouse.

To help enterprises easily harness the power of HeatWave GenAI, we provide a set of intuitive chat functions that let them quickly build their own AI-powered chatbot. We have combined these chat functions into an out-of-the-box chatbot called HeatWave Chat. All chat processing and history are maintained in the database. HeatWave Chat also supports automatic vector store discovery and creation, so users can easily load their documents and start asking questions right away.
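Automatic vector store discovery amounts to scanning database metadata for tables that can serve as vector stores. The following Python sketch illustrates the idea under stated assumptions: the `catalog` dictionary is a hypothetical stand-in for the database's metadata, and a table is assumed to qualify as a vector store when it has a column of type `VECTOR`. It is not HeatWave's actual discovery logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Column:
    name: str
    data_type: str  # e.g. "INT", "TEXT", "VECTOR"


def discover_vector_stores(catalog: dict) -> list:
    """Return sorted (schema, table) pairs that have at least one VECTOR column.

    `catalog` maps (schema, table) to a list of Column objects. It is a
    hypothetical stand-in for the database's metadata.
    """
    found = []
    for (schema, table), columns in sorted(catalog.items()):
        # Assumption: any table with a VECTOR-typed column counts as a vector store.
        if any(col.data_type.upper() == "VECTOR" for col in columns):
            found.append((schema, table))
    return found


catalog = {
    ("docs", "hr_policies"): [Column("segment", "TEXT"), Column("segment_embedding", "VECTOR")],
    ("docs", "employees"): [Column("id", "INT"), Column("name", "VARCHAR")],
    ("kb", "faq"): [Column("segment", "TEXT"), Column("segment_embedding", "VECTOR")],
}
print(discover_vector_stores(catalog))  # [('docs', 'hr_policies'), ('kb', 'faq')]
```

Only the tables with a vector column are returned; the plain `employees` table is skipped, which is what lets users start chatting without naming their vector stores up front.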

HeatWave Chat

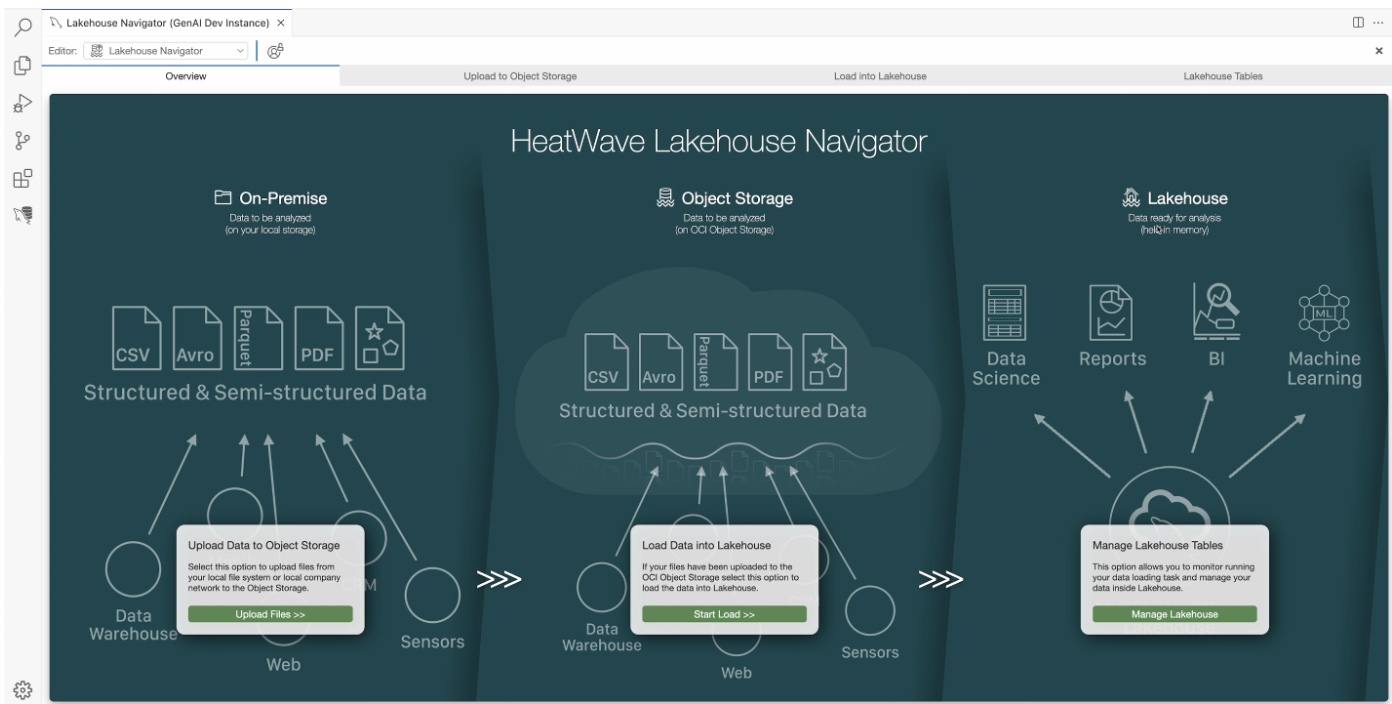

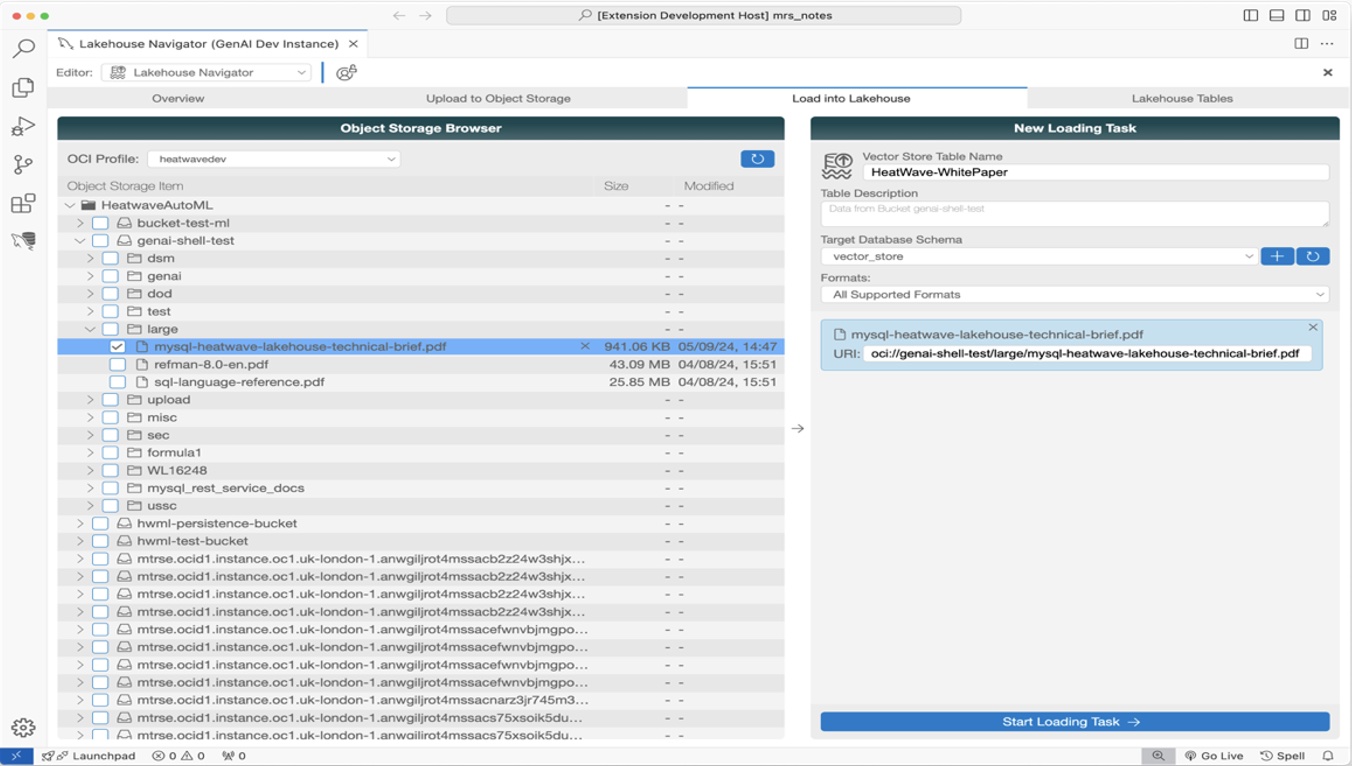

Lakehouse Navigator

Lakehouse Navigator provides a simple user interface for locating files to upload to HeatWave for vector embedding creation. Vector embeddings are essential for supplying the enterprise-specific context and data that guide LLMs, letting them retrieve information from the database and from multiple sources within the vector store, which helps increase speed and accuracy.

Chat prompt

Users can interact with HeatWave GenAI through the chat interface using natural language. Context such as chat history is preserved so that users can ask follow-up questions.
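One common way to let an LLM resolve a follow-up question such as "Which LLMs can it use?" is to include the most recent turns of the conversation in the prompt. The sketch below is a minimal illustration under that assumption; the `ChatHistory` class, its `max_turns` limit, and the `User:`/`Assistant:` prompt layout are hypothetical and do not reflect how HeatWave Chat formats its prompts.

```python
from dataclasses import dataclass, field


@dataclass
class ChatHistory:
    """Keeps the most recent (question, answer) turns of one conversation."""

    max_turns: int = 3
    turns: list = field(default_factory=list)

    def add(self, question: str, answer: str) -> None:
        self.turns.append((question, answer))
        # Drop the oldest turns so the prompt stays bounded in length.
        if len(self.turns) > self.max_turns:
            del self.turns[: len(self.turns) - self.max_turns]

    def build_prompt(self, question: str) -> str:
        """Prefix the new question with recent turns so follow-ups have context."""
        lines = []
        for past_question, past_answer in self.turns:
            lines.append(f"User: {past_question}")
            lines.append(f"Assistant: {past_answer}")
        lines.append(f"User: {question}")
        return "\n".join(lines)


history = ChatHistory(max_turns=2)
history.add("Does HeatWave GenAI have a vector store?", "Yes, a native one.")
history.add("Which LLMs can it use?", "In-database LLMs and OCI Generative AI LLMs.")
history.add("Where is chat history kept?", "In the database.")
print(history.build_prompt("Can it translate my question?"))
```

With `max_turns=2`, the first turn is dropped, so the prompt contains the last two exchanges followed by the new question. Bounding the history keeps prompts short while still giving the model enough context to interpret pronouns like "it".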

When a user asks a question, the prompt is automatically converted into a vector embedding using HeatWave in-database embedding generation. This embedding is then used for semantic search within the scope of the specified vector stores. The search results from the proprietary content in the vector store augment the user’s question, and the augmented prompt is passed to the HeatWave in-database LLMs (or OCI Generative AI LLMs). The LLM’s response is returned to the user together with the sources of the information (citations). Citations are immensely useful for understanding the LLM’s answers and for adjusting the scope of the similarity search to improve the context and obtain more accurate answers.
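The flow above is retrieval-augmented generation. The Python sketch below traces the same steps outside the database, purely for illustration: a bag-of-words `embed` function stands in for the in-database embedding model, a plain list of `Segment` objects stands in for the vector store, and `llm` is any callable you supply. All of these names are hypothetical; in HeatWave every step runs inside the database.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Segment:
    source: str  # document the segment came from; returned as the citation
    text: str


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norms = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norms if norms else 0.0


def answer(question, store, llm, top_k=2):
    """Embed the question, retrieve the top-k segments, augment the prompt, call the LLM."""
    query_vec = embed(question)
    ranked = sorted(store, key=lambda seg: cosine(query_vec, embed(seg.text)), reverse=True)
    hits = ranked[:top_k]
    context = "\n".join(f"[{seg.source}] {seg.text}" for seg in hits)
    prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
    return llm(prompt), [seg.source for seg in hits]  # (response, citations)


store = [
    Segment("hr_policy.pdf", "Employees receive 20 vacation days per year."),
    Segment("it_guide.pdf", "Reset your password from the self-service portal."),
    Segment("travel.pdf", "Book travel through the approved agency."),
]


def echo_llm(prompt: str) -> str:
    # Fake LLM for the demo: returns the first retrieved context line.
    return prompt.splitlines()[1]


reply, citations = answer("How many vacation days do employees get?", store, echo_llm, top_k=1)
print(reply)      # [hr_policy.pdf] Employees receive 20 vacation days per year.
print(citations)  # ['hr_policy.pdf']
```

Returning the source names alongside the response is what makes citations possible: the caller can see exactly which segments shaped the answer.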

Global and refined semantic search option

HeatWave vector stores are stored natively as SQL schemas and tables. For semantic search, users can either query all vector stores or limit the scope of the search to a particular schema.
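To show how the search scope changes the results, this sketch ranks segments either across every vector store or only within one schema. The `STORES` dictionary, its three-dimensional embeddings, and the `search` function are hypothetical examples, and cosine similarity is assumed as the similarity measure.

```python
import math

# Hypothetical vector stores keyed by (schema, table); each row is
# (segment_text, embedding). Real embeddings have hundreds of dimensions.
STORES = {
    ("hr", "policies"): [("Vacation policy", [0.9, 0.1, 0.0]),
                         ("Parental leave", [0.7, 0.3, 0.0])],
    ("it", "handbook"): [("Password reset", [0.0, 0.2, 0.9])],
    ("sales", "faq"): [("Discount rules", [0.8, 0.0, 0.2])],
}


def cosine(a, b):
    """Cosine similarity; assumes neither vector is all zeros."""
    return sum(x * y for x, y in zip(a, b)) / (math.hypot(*a) * math.hypot(*b))


def search(query_vec, top_k=2, only_schema=None):
    """Rank segments across all stores, or only those in `only_schema` if given."""
    candidates = [
        (cosine(query_vec, vec), f"{schema}.{table}", text)
        for (schema, table), rows in STORES.items()
        if only_schema is None or schema == only_schema
        for text, vec in rows
    ]
    candidates.sort(reverse=True)  # highest similarity first
    return [(table, text) for _, table, text in candidates[:top_k]]


print(search([1.0, 0.0, 0.0]))
# [('hr.policies', 'Vacation policy'), ('sales.faq', 'Discount rules')]
print(search([1.0, 0.0, 0.0], only_schema="hr"))
# [('hr.policies', 'Vacation policy'), ('hr.policies', 'Parental leave')]
```

The global search pulls in a loosely related sales segment, while the scoped search keeps the context to HR documents, which is the kind of refinement citations can prompt a user to make.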

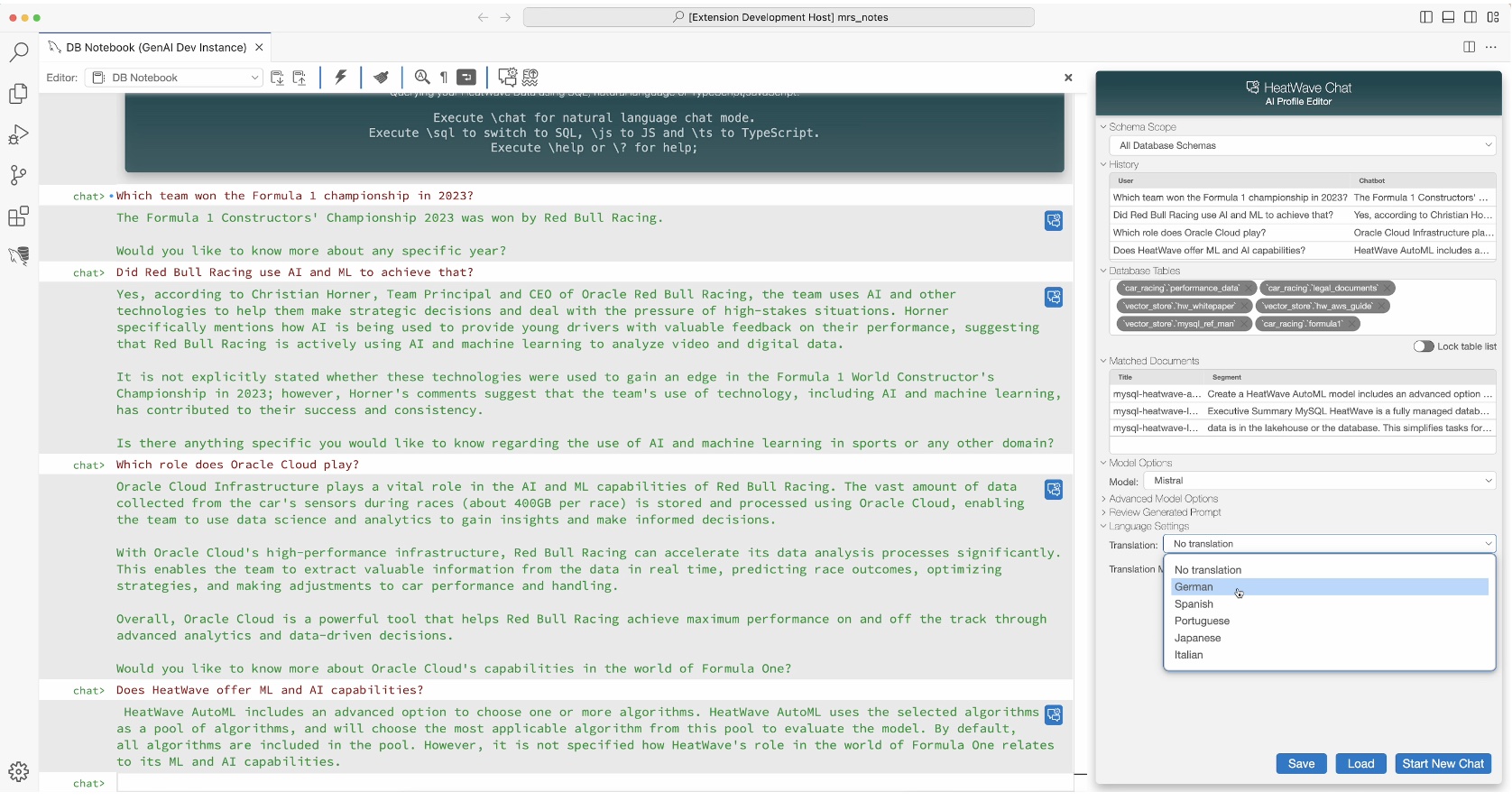

Translations

HeatWave Chat provides multilingual support, enabling users who are more comfortable conversing in languages other than English to interact with HeatWave Chat more easily.

Users can ask questions in any of the supported languages. HeatWave Chat automatically translates the question to English, runs the chat workflow described above, and translates the LLM response back to the selected language before returning the answer to the user.
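The translation round trip can be expressed as a thin wrapper around an English-only chat function. In this minimal sketch, `chat` and `translate` are hypothetical callables supplied by the caller, the phrase-book translator is a toy, and the ISO 639-1 language codes (`"en"`, `"es"`) are an assumption; HeatWave Chat performs these steps internally.

```python
from typing import Callable

# (text, source_language, target_language) -> translated text
Translator = Callable[[str, str, str], str]


def multilingual_chat(question: str, language: str,
                      chat: Callable[[str], str], translate: Translator) -> str:
    """Translate the question to English, run the English chat, translate the answer back."""
    if language == "en":
        return chat(question)  # no translation needed
    english_question = translate(question, language, "en")
    english_answer = chat(english_question)
    return translate(english_answer, "en", language)


# Toy phrase-book translator and chat function for the demo (hypothetical).
PHRASES = {
    ("es", "en"): {"¿Qué es HeatWave Chat?": "What is HeatWave Chat?"},
    ("en", "es"): {"An AI-powered chatbot.": "Un chatbot basado en IA."},
}


def toy_translate(text: str, source: str, target: str) -> str:
    return PHRASES[(source, target)][text]


def toy_chat(question: str) -> str:
    return "An AI-powered chatbot." if question == "What is HeatWave Chat?" else "I don't know."


print(multilingual_chat("¿Qué es HeatWave Chat?", "es", toy_chat, toy_translate))
# Un chatbot basado en IA.
```

Keeping retrieval and generation in English means the vector stores and prompts need only one language; translation is confined to the two edges of the workflow.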

One-stop IDE with Microsoft VS Code integration

HeatWave Chat is integrated with MySQL Shell for VS Code. It provides an integrated development environment for developers to easily develop HeatWave GenAI powered applications.

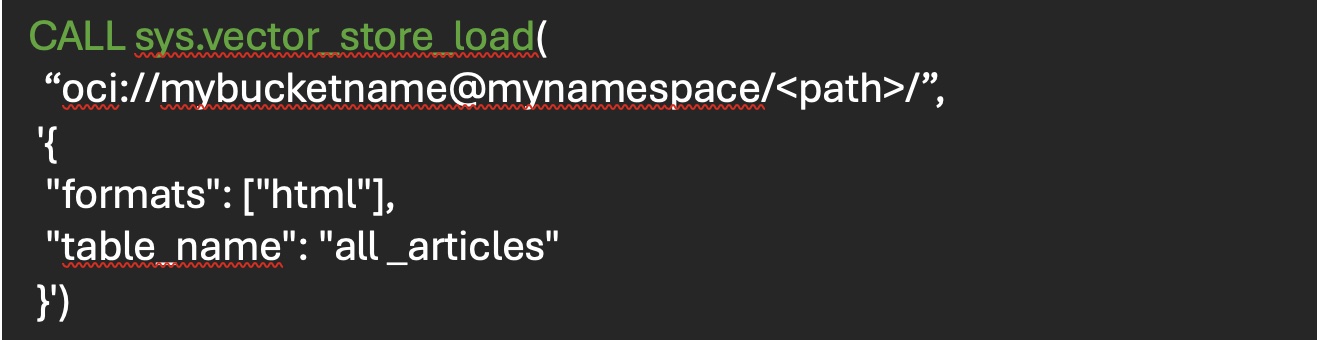

Build your own chatbot

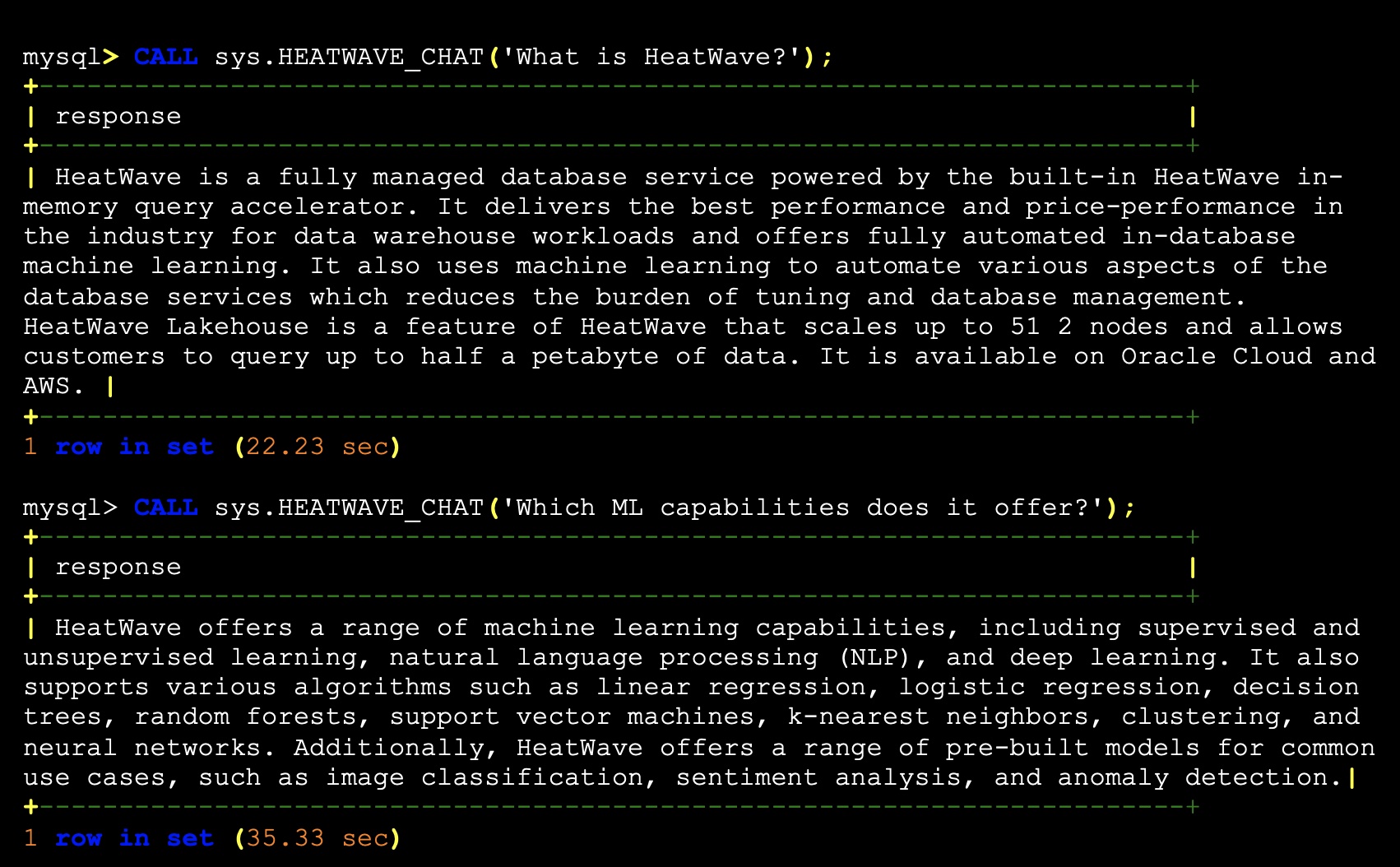

HeatWave GenAI provides two simple SQL stored procedures for developers to create their own version of HeatWave Chat.

Step 1: Create vector store
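Creating a vector store typically means splitting each document into segments, embedding each segment, and storing the results as table rows. The following Python sketch models that output under stated assumptions: the fixed-size word windows with overlap, the `Row` fields, and the letter-frequency `toy_embed` function are hypothetical stand-ins for the segmentation and embedding that HeatWave performs in-database when the stored procedure is called.

```python
import math
from dataclasses import dataclass


@dataclass
class Row:
    document_name: str
    segment_number: int
    segment: str
    embedding: list


def chunk_words(text: str, size: int, overlap: int) -> list:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("require 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def toy_embed(text: str) -> list:
    """Toy embedding: unit-length letter-frequency vector (26 dimensions)."""
    counts = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1
    norm = math.sqrt(sum(c * c for c in counts)) or 1.0
    return [c / norm for c in counts]


def create_vector_store(documents: dict, size: int = 50, overlap: int = 10) -> list:
    """Segment and embed every document, producing one Row per segment."""
    rows = []
    for name, text in documents.items():
        for number, segment in enumerate(chunk_words(text, size, overlap)):
            rows.append(Row(name, number, segment, toy_embed(segment)))
    return rows


rows = create_vector_store({"handbook.txt": "one two three four five six seven"}, size=3, overlap=1)
for row in rows:
    print(row.document_name, row.segment_number, row.segment)
# handbook.txt 0 one two three
# handbook.txt 1 three four five
# handbook.txt 2 five six seven
```

The overlap keeps a sentence that straddles a segment boundary retrievable from either side, and storing the document name with each row is what later allows answers to cite their source.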

Step 2: Start conversation

HeatWave Chat stores all conversation context in the database. This information includes discovered vector store tables, the top-K retrieved document segments, chat history and model options.
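The kind of per-conversation state described above can be modeled as a small record that is serialized between turns. This sketch is hypothetical: the `ChatContext` field names, the `question`/`answer` keys, the `temperature` option, and the JSON encoding are assumptions chosen for illustration, not HeatWave Chat's actual storage format. It only shows that the state can be saved after one turn and restored unchanged for the next.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ChatContext:
    """Hypothetical per-conversation state, mirroring the items listed above."""

    vector_store_tables: list = field(default_factory=list)  # discovered vector stores
    retrieved_segments: list = field(default_factory=list)   # top-K segments from the last turn
    chat_history: list = field(default_factory=list)         # one dict per turn
    model_options: dict = field(default_factory=dict)        # hypothetical generation settings

    def record_turn(self, question: str, answer: str, segments: list) -> None:
        self.retrieved_segments = list(segments)
        self.chat_history.append({"question": question, "answer": answer})

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, payload: str) -> "ChatContext":
        return cls(**json.loads(payload))


ctx = ChatContext(vector_store_tables=["docs.hr_policies"], model_options={"temperature": 0.2})
ctx.record_turn("How many vacation days do I get?", "20 days per year.",
                ["Employees receive 20 vacation days per year."])
saved = ctx.to_json()                    # persist between turns
restored = ChatContext.from_json(saved)  # reload for the next question
print(restored == ctx)  # True
```

Because the whole context round-trips through a single serialized value, a stateless client can resume a conversation simply by reloading it, which mirrors the benefit of keeping this state in the database.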

Summary

The future of chatbots relies on Generative AI techniques that enhance their ability to provide more personalized and relevant information. This frees people to be more creative and innovative, spending more of their time on strategic rather than tactical activities. HeatWave GenAI provides a simple way to create a Generative AI-driven chatbot, enriching customer experience and loyalty through a more sophisticated, interactive, and personalized interface.