Apache Airflow is a powerful tool for managing and scheduling data pipelines.

It allows you to define your data processing workflows as directed acyclic graphs (DAGs) and manage their execution in a scalable and reliable manner.
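To make the DAG idea concrete, here is a minimal sketch in plain Python. It does not use the Airflow API: the task names are hypothetical and the ordering comes from the standard library's `graphlib` module (Python 3.9+). It only illustrates how dependencies between tasks determine a valid execution order.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}


def execution_order(dag):
    """Return one valid run order for the tasks.

    graphlib raises CycleError if the graph contains a cycle,
    which is exactly what "acyclic" rules out.
    """
    return list(TopologicalSorter(dag).static_order())


print(execution_order(pipeline))
```

A scheduler like Airflow does much more (retries, scheduling, parallelism), but the dependency ordering above is the core idea behind a DAG.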

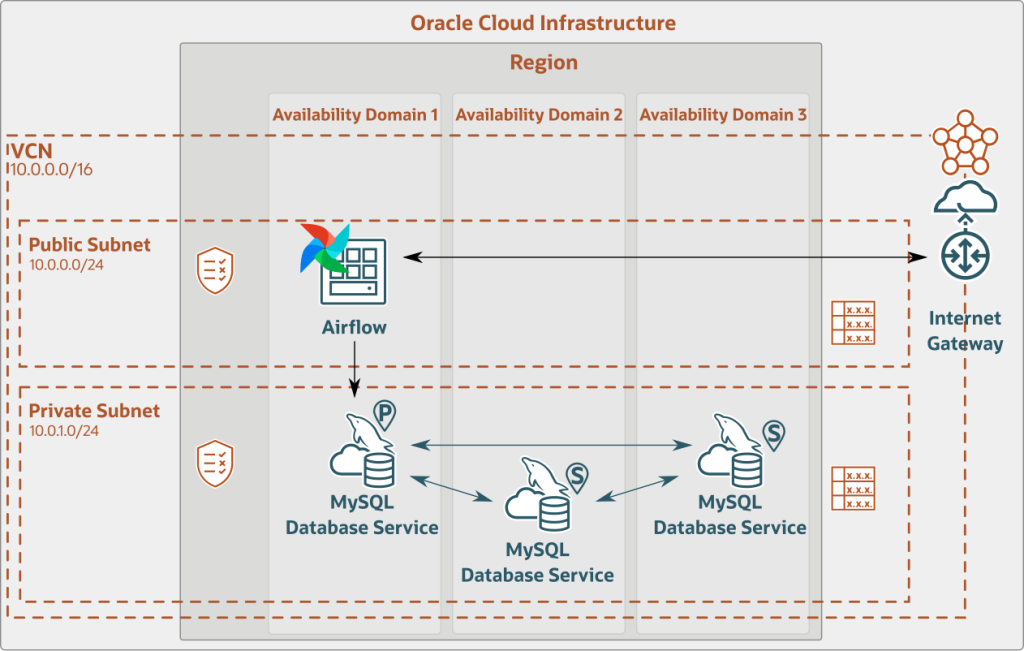

In this blog, I will explain how to deploy Apache Airflow on Oracle Cloud Infrastructure (OCI) with MySQL HeatWave Database Service as the backend store.

By using this setup, you can take advantage of the scalability and performance of OCI to run your data pipelines at scale. In the following sections, we will walk through the steps to set up and configure Apache Airflow on OCI, as well as how to connect it to the MySQL HeatWave database service.

Apache Airflow will be installed on a Compute instance in OCI. The deployment is very easy using the OCI Resource Manager Stack shared in this blog post. You can also use only the Terraform modules to deploy Apache Airflow in your existing architecture.

Architectures

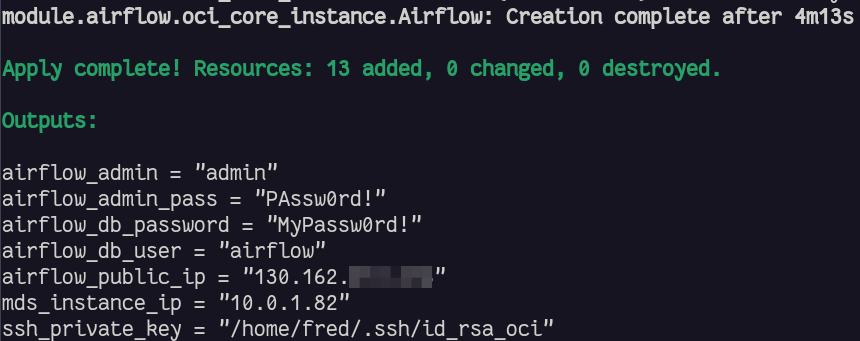

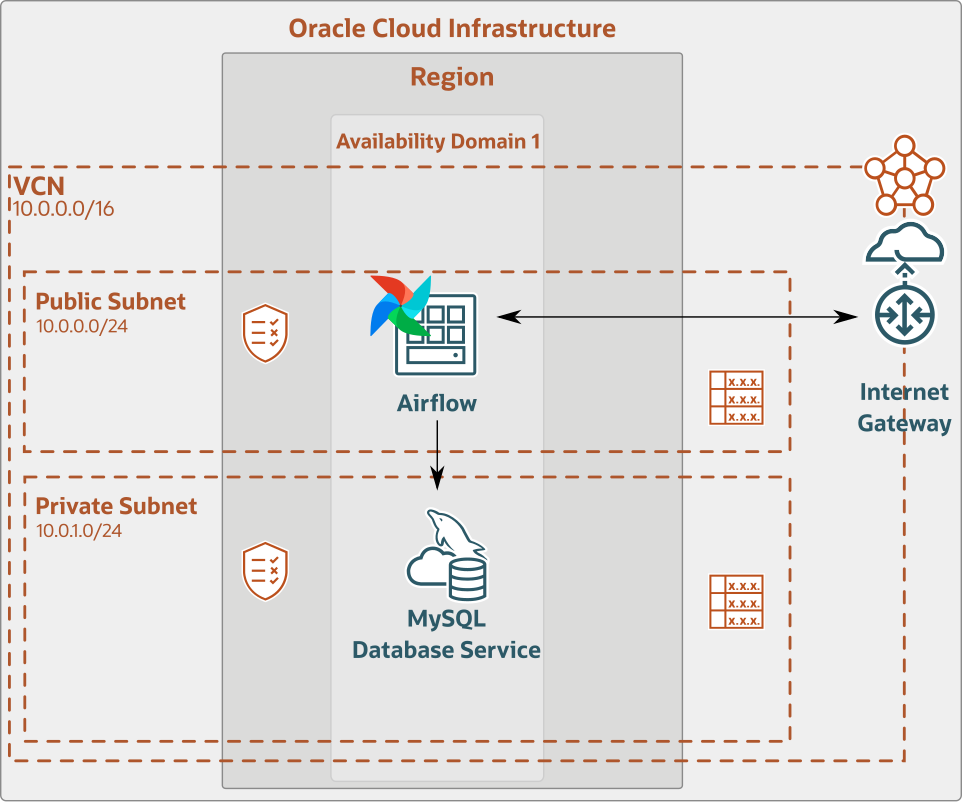

With the modules you can deploy architectures like these:

We will use the exact same modules built into a stack for OCI’s Resource Manager. This will allow us to deploy the architecture in just a few clicks.

Deployment

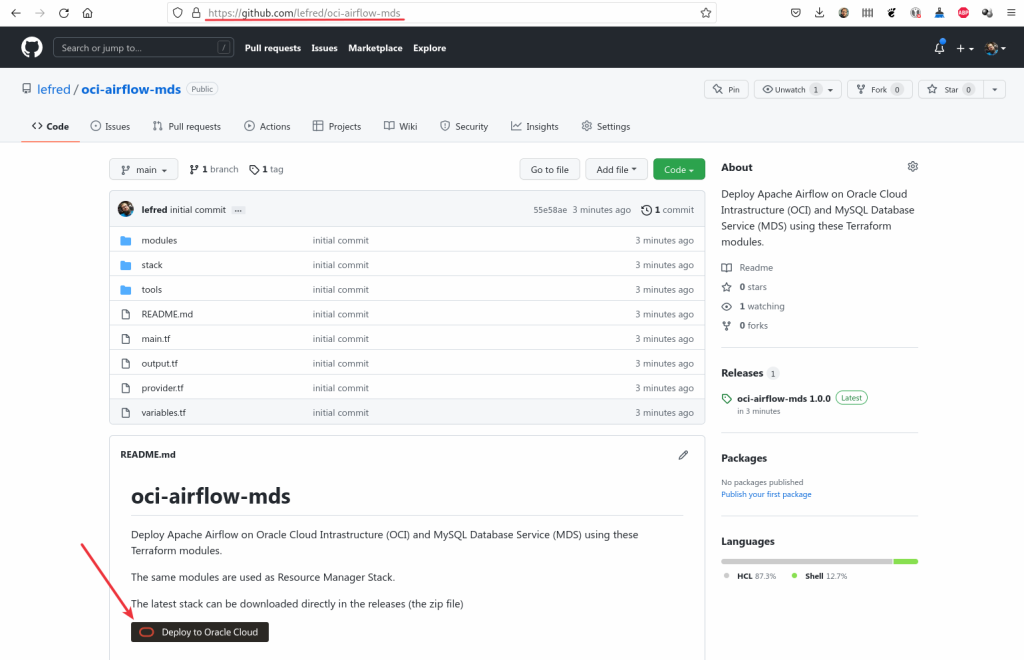

We open the following GitHub repository in a browser: https://github.com/lefred/oci-airflow-mds and click on the Deploy to Oracle Cloud button:

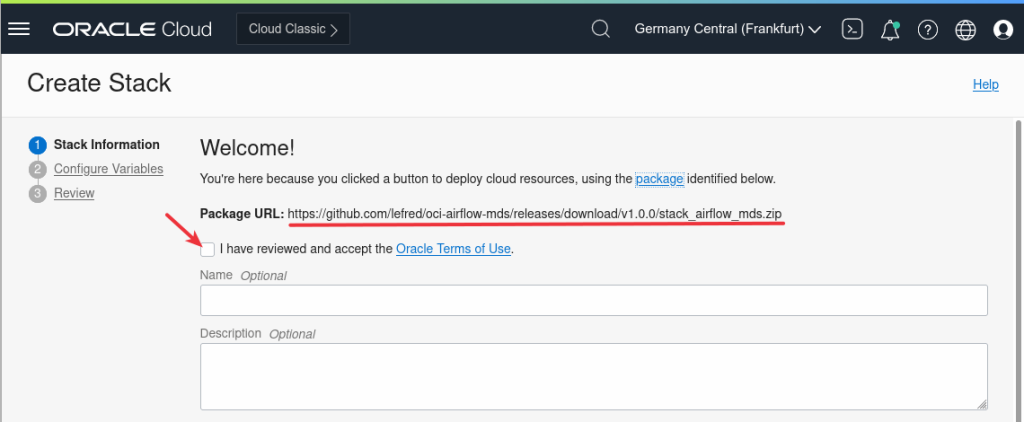

If you already have an OCI account and you are logged in to the Oracle Cloud console, you will see the following screen, where you need to accept the Oracle Terms of Use:

If you don’t have an account yet, I encourage you to sign up for a free account with $300 in credits to test HeatWave.

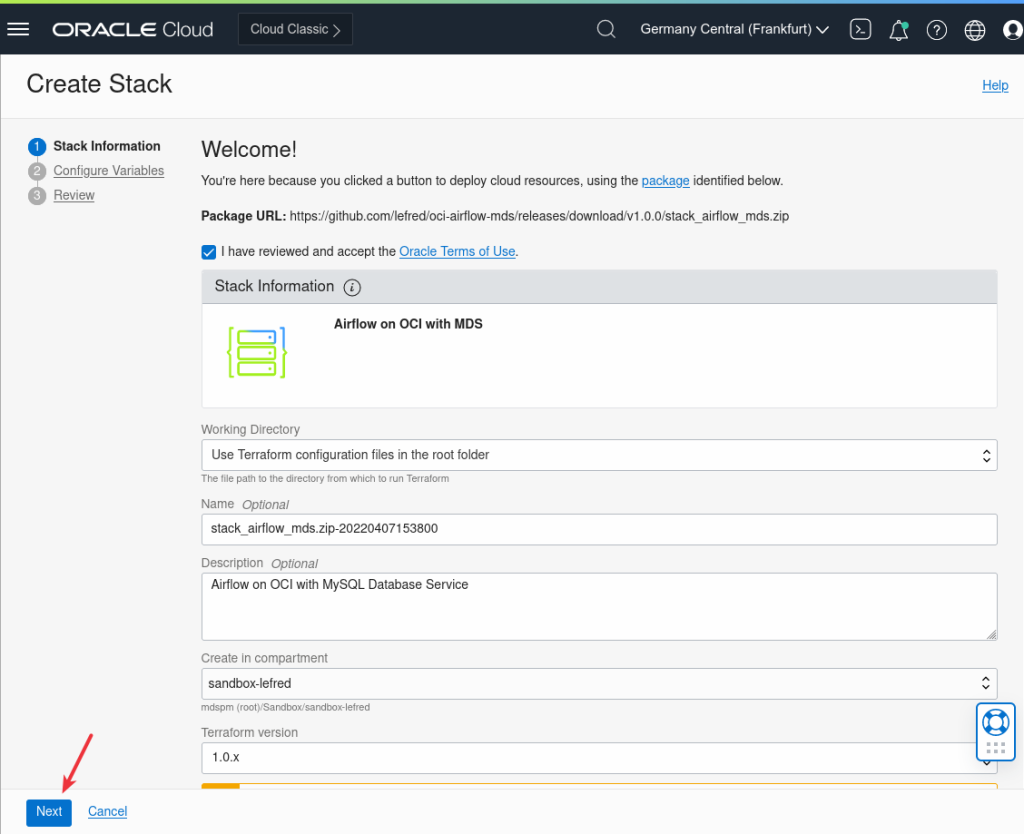

Once the Terms of Use are accepted, the form is filled in automatically. You can modify some entries and click Next when ready:

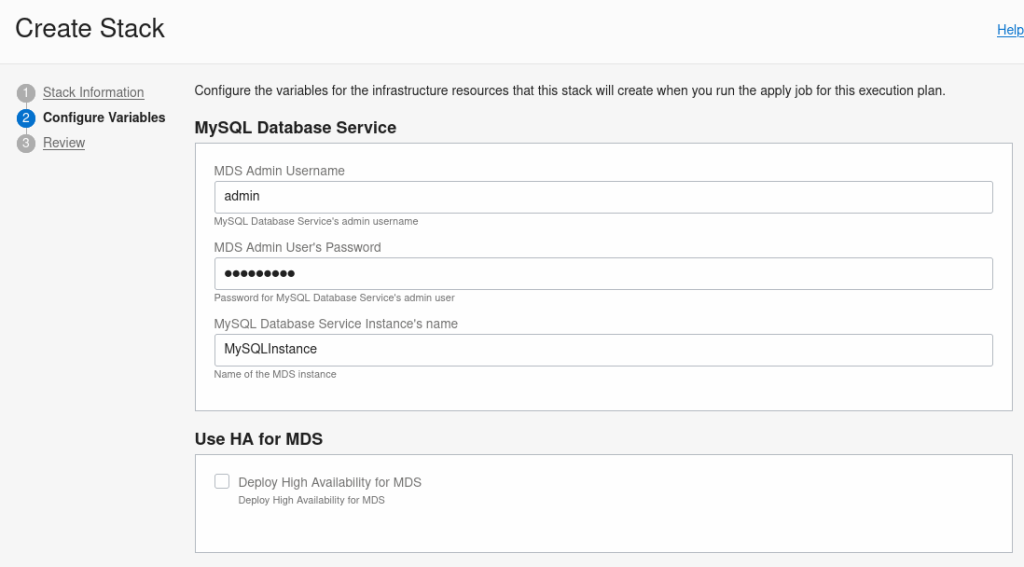

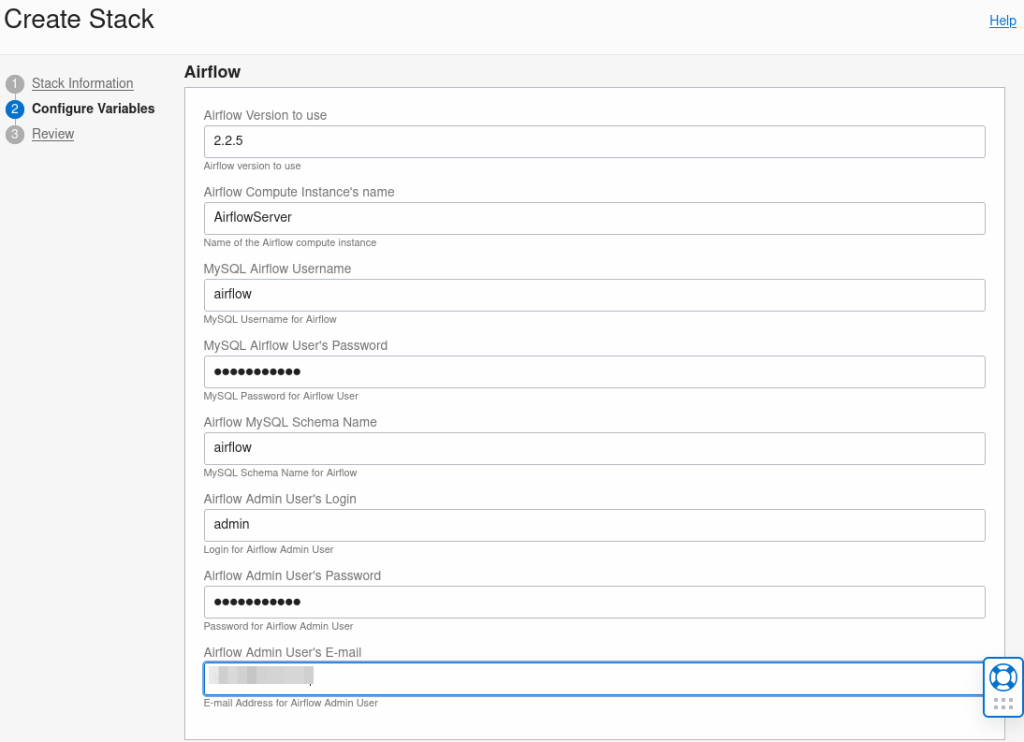

The second screen of the wizard is dedicated to the variables. Several of them already have default values, which you can of course change. It is also in this section that you decide which architecture you want to deploy (single MDS instance, HA, HeatWave):

Some mandatory fields are not filled in yet, such as the email address of the Airflow admin user:

If you don’t want to deploy a HeatWave Cluster immediately, you can still add one later, but you must select a HeatWave-compatible shape from the start:

After reviewing the information, you can create the stack and apply the job to deploy the resources:

Deployment

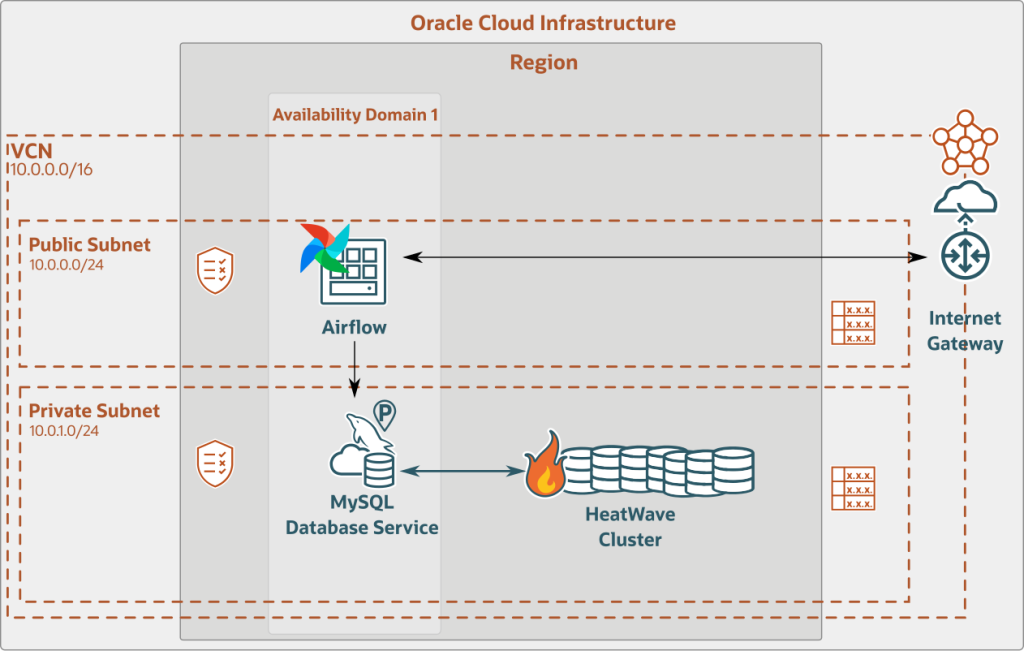

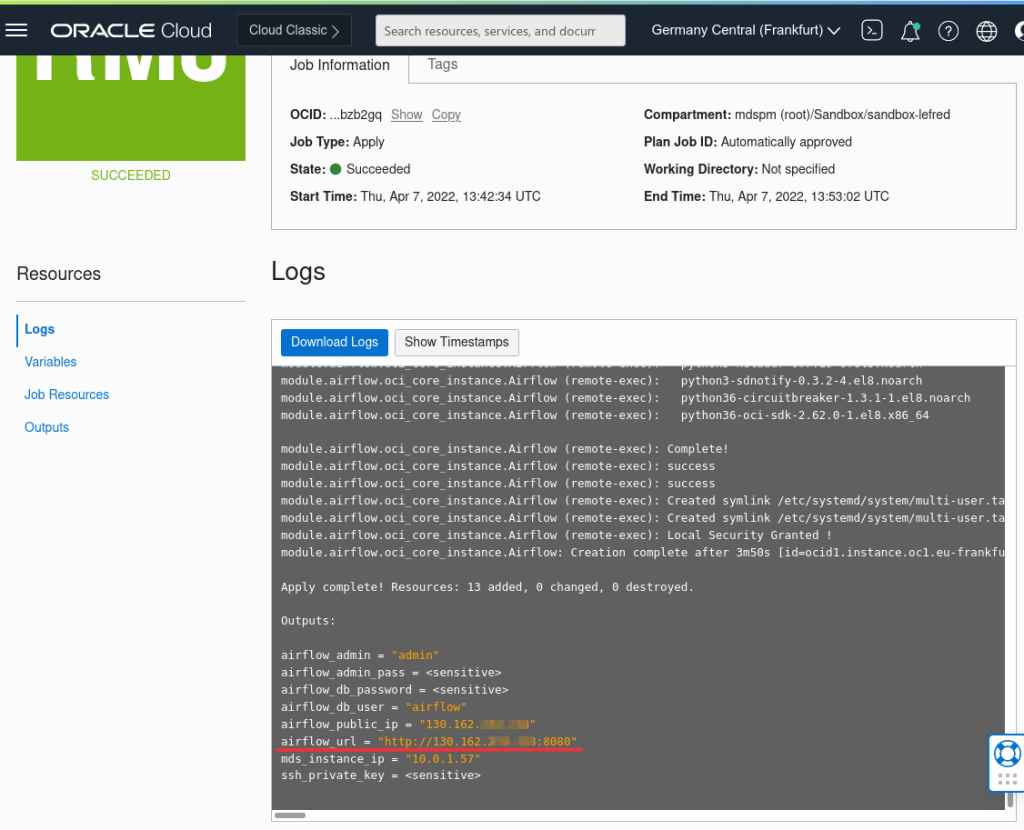

OCI’s Resource Manager starts deploying all the resources. You can follow the logs and, when the job finishes, see the output information you need to connect to Airflow:

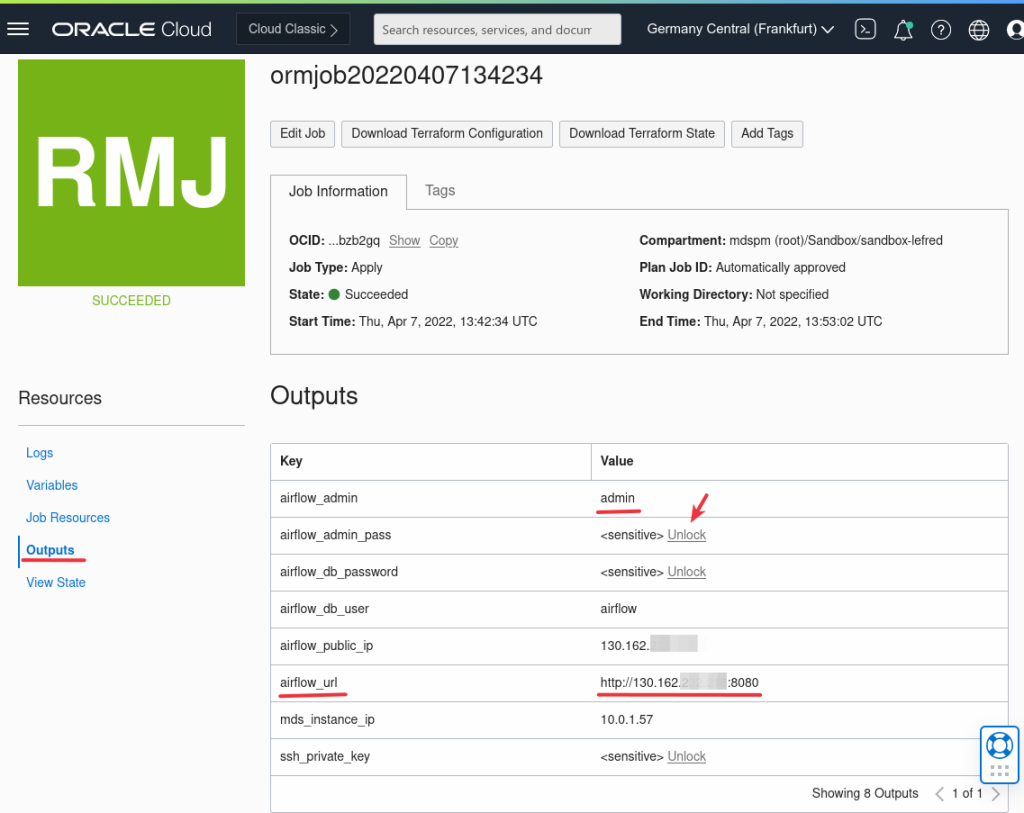

You can always retrieve the useful output values for the Job from the Outputs section:

Airflow

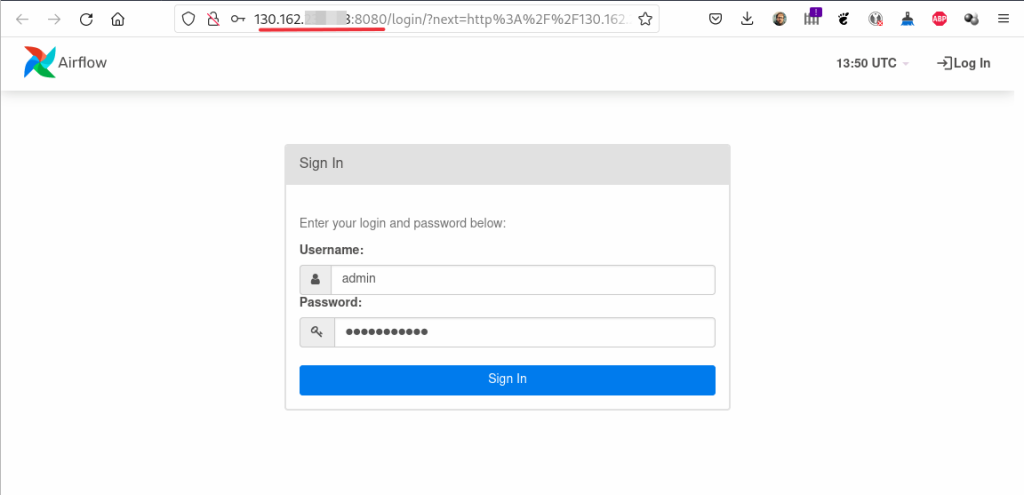

Now you can connect to Airflow using the credentials you entered in the stack wizard:

Airflow uses mysql-connector-python to connect to MDS.
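Airflow reads the location of its metadata database from the `sql_alchemy_conn` setting, and with SQLAlchemy the `mysql+mysqlconnector://` scheme selects the mysql-connector-python driver. The sketch below shows how such a URI could be assembled. The helper name, user, password, host and schema are all hypothetical, and this is not necessarily how the stack configures Airflow internally.

```python
from urllib.parse import quote_plus


def build_sql_alchemy_conn(user, password, host, database, port=3306):
    """Build a SQLAlchemy URI that selects the mysql-connector-python driver.

    User and password are URL-encoded so that special characters
    (for example '$' or '/') do not break the URI.
    """
    return (
        f"mysql+mysqlconnector://{quote_plus(user)}:{quote_plus(password)}"
        f"@{host}:{port}/{database}"
    )


# Hypothetical values; replace them with the ones from your own deployment.
uri = build_sql_alchemy_conn("airflow", "My$ecret/Pass", "10.0.1.10", "airflow")
print(uri)
```

In recent Airflow versions, the resulting string can be placed in the `[database]` section of `airflow.cfg` or exported as `AIRFLOW__DATABASE__SQL_ALCHEMY_CONN`.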

Please note that, for the moment, deploying Airflow on Arm does not work because some pip dependencies fail to build. Take care not to use an Ampere shape for the Airflow compute instance.
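If you install Airflow by hand instead of through the stack, a quick architecture check before running pip can save some time. This is a minimal sketch, assuming the usual machine names reported by Linux and macOS on Arm (`aarch64`, `arm64`); the function name is hypothetical.

```python
import platform

# Machine names commonly reported on 64-bit Arm systems.
ARM_MACHINES = {"aarch64", "arm64"}


def is_arm(machine=None):
    """Return True if the given (or current) machine name looks like Arm."""
    name = (machine or platform.machine()).lower()
    return name in ARM_MACHINES or name.startswith("arm")


if is_arm():
    print("Arm host detected: some Airflow pip dependencies may not build.")
else:
    print("Non-Arm host detected.")
```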

Yet another nice open source program that can be deployed very quickly on OCI with MySQL Database Service!

Enjoy MySQL, MySQL HeatWave Database Service and Apache Airflow!