Enterprises see tremendous opportunities with generative AI. Use cases range from asking simple questions about industry marketing trends or how to use a particular machine learning algorithm, to more sophisticated questions—such as generating queries specific to your database, generating personalized customer marketing emails, or determining aggregate sentiment for each question on your customer feedback survey.

When you ask a generative AI-powered application natural language questions, whether about your private data or general information, how do you know that the answers you’re getting are correct or comply with enterprise style and content policies? Beyond correctness, it’s important to understand and control the cost of your organization’s use of generative AI. You also want to be confident that your private data is secure and not being used for purposes or in ways you didn’t authorize.

With Autonomous AI Database Select AI, you will soon be able to address these types of challenges. Select AI will introduce new capabilities to verify, observe, and secure your generative AI results and usage—extending its integrated access to AI providers and AI models, directly from your database.

Customer Challenges using Generative AI

Let’s explore in more detail the challenges you may face when using generative AI across the three areas: correctness (verify), AI usage (observe), and data access (secure):

- Verify: AI tools often include a disclaimer along the lines of “LLMs can make mistakes, verify results.” In the case of AI-generated SQL, a query that runs without error and returns a result is not necessarily correct, nor does it necessarily address the question you asked. LLMs can also exhibit bias, due in part to the data they were trained on.

- Observe: While each AI model call is generally low-cost, usage and spending can quickly add up across teams, especially with inefficient code, larger-than-necessary prompt and response sizes, or high call volumes. In addition, pricing varies by model size, context window, and SLA requirements, so choosing the most appropriate model for a given task, typically the least expensive one that still meets quality requirements, is important. Organizations therefore need visibility and controls to tie AI usage to ROI and make informed investment decisions.

- Secure: Enterprises are concerned about data access, particularly the risk of confidential or personally identifiable information (PII) being exposed to external AI providers. Regulatory and compliance requirements may further restrict data access and location, while requiring transparency and auditability. Additional controls are also necessary to mitigate risks from prompt injection, jailbreaks, and non-compliant or unsafe model outputs.

These are just some of the challenges enterprise users of generative AI face today. Select AI has been taking major steps to help enterprises address these challenges.

How Select AI addresses the challenges

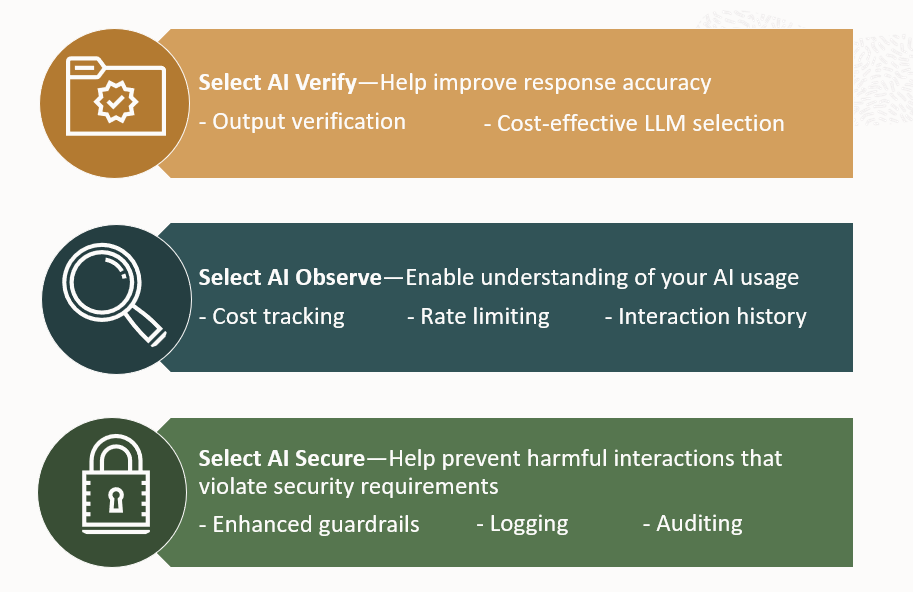

Select AI has expanded its capabilities to support enterprise use of generative AI to tackle the three challenge areas mentioned above:

- Verify – helps you improve response accuracy and reduce hallucinations

- Observe – enables your organization to gain insight into AI usage in your enterprise through usage tracking, budgeting, and rate limiting

- Secure – provides additional guardrails and auditing capabilities, along with logging

Select AI Verify

Assessing the quality of AI model results applies in multiple areas: natural language to SQL (NL2SQL) query generation, retrieval-augmented generation (RAG) responses, and direct responses from the LLM. While the goal is often to reduce hallucinations, there are also concerns about bias and reasoning. Another measure of quality is rule enforcement: depending on the prompt, you may need to assess a response not only for correctness but also for completeness and alignment with enterprise policies, among other criteria.

In the case of NL2SQL, let’s say you ask, “What are my total sales by region for the last quarter?” You get back a table with your regions and a number for each. How do you know it is correct? This is the question nagging most users of SQL-generation technology. You can inspect the query, but this requires that you know the schema definition and enough SQL to judge whether the query is “correct.” A SQL expert may need to examine not only the query but also the underlying tables to “certify” it. That defeats the immediacy NL2SQL is supposed to provide. So it’s important to introduce mechanisms that improve accuracy and increase confidence in the responses.

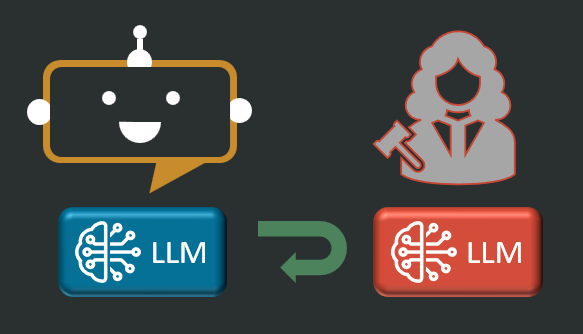

With Select AI Verify, we’re introducing the ability to use one LLM to check the results of another, which can help reduce hallucinations and provide a level of validation that the results match the prompt. For example, given the prompt above, “What are my total sales by region for the last quarter?”, a second LLM could check whether the generated query, and even the values returned, reasonably match the prompt and context. For NL2SQL, this context includes schema metadata, but it could also be selected text chunks in a RAG workflow, or even chat interactions that rely only on the LLM’s general knowledge. Using a second model to provide feedback on the first model’s result also enables a feedback loop, whereby the first LLM uses that feedback to improve its previous response. Such verification, while not foolproof, helps with result quality assurance without requiring a human in the loop for every prompt. This approach is sometimes referred to as LLM-as-a-judge.
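
To make the LLM-as-a-judge feedback loop concrete, here is a minimal Python sketch of the general technique; it is not Select AI’s implementation or API. It assumes two hypothetical callables: a generator that turns a prompt (plus any earlier critique) into a candidate answer such as SQL, and a judge that checks the candidate against the prompt and context. The toy functions at the bottom stand in for real model calls, and the `sales` schema is invented for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Verdict:
    acceptable: bool
    feedback: str


# Hypothetical interfaces; in practice each would wrap a call to a different LLM.
Generator = Callable[[str, str], str]        # (prompt, feedback) -> candidate answer
Judge = Callable[[str, str, str], Verdict]   # (prompt, context, candidate) -> verdict


def generate_with_verification(prompt: str, context: str, generate: Generator,
                               judge: Judge, max_rounds: int = 3) -> tuple[str, Verdict]:
    """Generate an answer with one model, have a second model judge it, and feed
    the judge's critique back to the generator until it passes or rounds run out."""
    feedback = ""
    candidate, verdict = "", Verdict(False, "not evaluated")
    for _ in range(max_rounds):
        candidate = generate(prompt, feedback)
        verdict = judge(prompt, context, candidate)
        if verdict.acceptable:
            break
        feedback = verdict.feedback  # the critique steers the next attempt
    return candidate, verdict


# Toy stand-ins for the two models, for illustration only.
def toy_generator(prompt: str, feedback: str) -> str:
    if "last quarter" in feedback:
        return ("SELECT region, SUM(amount) FROM sales "
                "WHERE sale_date >= ADD_MONTHS(TRUNC(SYSDATE, 'Q'), -3) "
                "AND sale_date < TRUNC(SYSDATE, 'Q') GROUP BY region")
    return "SELECT region, SUM(amount) FROM sales GROUP BY region"  # misses the date filter


def toy_judge(prompt: str, context: str, sql: str) -> Verdict:
    if "last quarter" in prompt and "sale_date" not in sql:
        return Verdict(False, "Query ignores 'last quarter'; filter on sale_date.")
    return Verdict(True, "Query matches the prompt and schema.")


sql, verdict = generate_with_verification(
    "What are my total sales by region for the last quarter?",
    "sales(region, amount, sale_date)", toy_generator, toy_judge)
print(verdict.acceptable, sql)
```

Capping the number of rounds matters: each extra round costs another pair of model calls, and the judge can be wrong too, so a passing verdict raises confidence rather than proving correctness.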

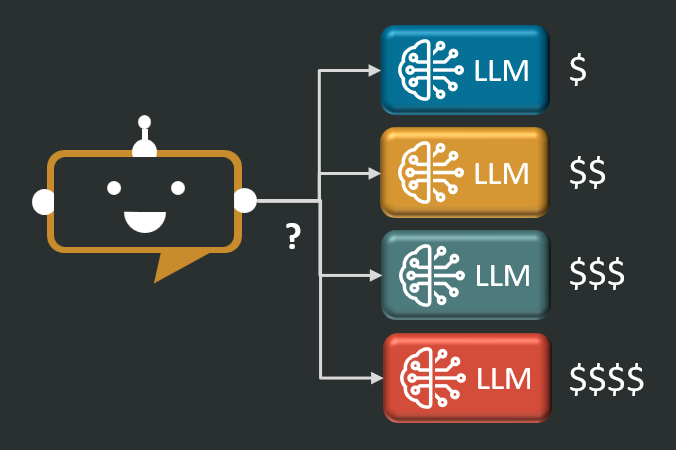

In addition to verifying the output, accuracy (and cost) can be addressed by using the “most appropriate” AI model for a given prompt. As noted earlier, some AI models are more expensive than others to use. However, not all prompts require the most powerful model available. As such, Select AI can be instructed to gather data from which it can automatically select a cost-efficient model to address the prompt, while maintaining result quality. In this case, during what we might call a tuning phase, Select AI can be configured to run a set of prompts against multiple LLMs to get timings and assess result quality from each LLM. Select AI then uses such information to select the “most appropriate” LLM for future prompts.
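
The tuning phase can be sketched in the same hypothetical style. The code below assumes each candidate model is a callable with a known, made-up price, and that answers can be scored from 0 to 1, for example against reference answers or by a judge model. The selection rule shown, picking the cheapest model whose average score clears a threshold, is one plausible heuristic and not necessarily the one Select AI uses.

```python
import statistics
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class ModelStats:
    name: str
    cost_per_1k_tokens: float  # hypothetical price
    mean_quality: float        # 0.0-1.0 average score over the tuning prompts
    mean_latency_s: float      # average wall-clock seconds per call


def tune(models: dict[str, tuple[float, Callable[[str], str]]],
         prompts: list[str],
         score: Callable[[str, str], float]) -> list[ModelStats]:
    """Run every tuning prompt against every model, timing each call and scoring its answer."""
    results = []
    for name, (price, call) in models.items():
        qualities, latencies = [], []
        for prompt in prompts:
            start = time.perf_counter()
            answer = call(prompt)
            latencies.append(time.perf_counter() - start)
            qualities.append(score(prompt, answer))
        results.append(ModelStats(name, price, statistics.mean(qualities),
                                  statistics.mean(latencies)))
    return results


def pick_model(stats: list[ModelStats], min_quality: float) -> str:
    """Cheapest model (ties broken by latency) whose mean quality meets the bar;
    if none qualifies, fall back to the highest-quality model."""
    eligible = [s for s in stats if s.mean_quality >= min_quality]
    if eligible:
        return min(eligible, key=lambda s: (s.cost_per_1k_tokens, s.mean_latency_s)).name
    return max(stats, key=lambda s: s.mean_quality).name


# Toy example: a cheap model that gets half the reference answers right,
# and an expensive one that gets all of them right.
reference = {"q1": "a1", "q2": "a2"}
stats = tune(
    {"small": (0.2, lambda p: reference[p] if p == "q1" else "wrong"),
     "large": (2.0, lambda p: reference[p])},
    ["q1", "q2"],
    lambda p, answer: 1.0 if answer == reference[p] else 0.0,
)
print(pick_model(stats, min_quality=0.5))  # small
print(pick_model(stats, min_quality=0.9))  # large
```

The quality threshold is the knob that trades cost against accuracy: lowering it lets more prompts go to cheaper models, raising it pushes them toward more capable ones.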

Select AI Observe

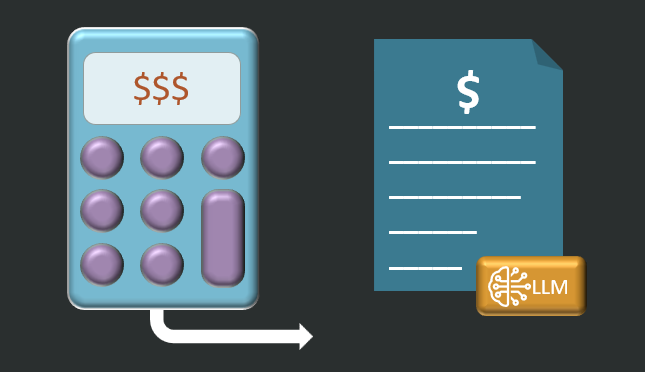

You need to understand where you’re spending money, put limits on spending, and know what value you’re getting for the investment so that you can more effectively control your AI costs. Select AI Observe introduces several observability capabilities to meet this need:

First is cost tracking. Enterprises need the ability to track AI usage at different levels of granularity, such as by user, team, AI profile, database, agent, and application. Metrics include the number of tokens used, the cost per token, and the number of AI model calls. These metrics also need to be broken out by AI provider and AI model.
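
As an illustration of rolling usage up along several dimensions, the sketch below aggregates hypothetical usage records by any combination of fields. The record layout, provider names, model names, and prices are placeholders, not real rates or a Select AI data structure.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    user: str
    team: str
    provider: str
    model: str
    tokens: int  # prompt + response tokens for one model call


# Placeholder prices per 1K tokens; real prices vary by provider, model, and contract.
PRICE_PER_1K_TOKENS = {
    ("provider-a", "model-small"): 0.10,
    ("provider-b", "model-large"): 2.00,
}


def usage_report(records, key_fields):
    """Roll usage up by any combination of dimensions, e.g. ("team",) or ("provider", "model")."""
    report = defaultdict(lambda: {"calls": 0, "tokens": 0, "cost": 0.0})
    for r in records:
        key = tuple(getattr(r, field) for field in key_fields)
        row = report[key]
        row["calls"] += 1
        row["tokens"] += r.tokens
        row["cost"] += r.tokens / 1000 * PRICE_PER_1K_TOKENS[(r.provider, r.model)]
    return dict(report)


records = [
    UsageRecord("alice", "sales", "provider-a", "model-small", 2000),
    UsageRecord("bob", "sales", "provider-b", "model-large", 500),
    UsageRecord("alice", "sales", "provider-a", "model-small", 1000),
]
print(usage_report(records, ("user",)))
print(usage_report(records, ("team", "provider", "model")))
```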

To prevent runaway costs caused by overuse or flawed code, Select AI also lets you specify budgets at the same granularities and for the same metrics mentioned above. For example, an administrator may want to cap each user at a maximum number of tokens per unit of time, such as 50K tokens per day.
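
A per-user daily token budget like the 50K-tokens-per-day example can be sketched as a counter keyed by user and day. This is a hypothetical in-memory illustration; a real implementation would persist the counters and reconcile estimated token counts with what the AI provider actually reports.

```python
from collections import defaultdict
from datetime import date
from typing import Optional


class DailyTokenBudget:
    """Hypothetical per-user daily token cap, checked before each model call."""

    def __init__(self, limit: int = 50_000):
        self.limit = limit
        self.used = defaultdict(int)  # (user, day) -> tokens already consumed

    def try_consume(self, user: str, tokens: int, day: Optional[date] = None) -> bool:
        """Record `tokens` against the user's budget for `day` if it fits; otherwise refuse."""
        key = (user, day or date.today())
        if self.used[key] + tokens > self.limit:
            return False  # caller can reject, queue, or route to a cheaper model
        self.used[key] += tokens
        return True

    def remaining(self, user: str, day: Optional[date] = None) -> int:
        return self.limit - self.used[(user, day or date.today())]


budget = DailyTokenBudget(limit=50_000)
today = date(2025, 1, 15)
print(budget.try_consume("alice", 30_000, today))  # True
print(budget.try_consume("alice", 25_000, today))  # False: would exceed 50K
print(budget.remaining("alice", today))            # 20000
```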

To prevent denial of service caused by too many requests to an AI model, Select AI supports rate limiting, where an administrator can limit AI model usage at the same granularities and for the same metrics mentioned above. For example, an administrator could limit the number of requests made to a model per unit of time or per AI agent, such as 20 prompts per AI agent per hour.
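
A limit such as 20 prompts per AI agent per hour is commonly enforced with a sliding window. The sketch below shows that general technique with an injectable clock so it can be tested; it is an assumption about how such a limit could work, not a description of Select AI’s mechanism.

```python
import time
from collections import defaultdict, deque
from typing import Callable


class SlidingWindowRateLimiter:
    """Allow at most `max_requests` per key (e.g. per AI agent) within any `window_s` seconds."""

    def __init__(self, max_requests: int = 20, window_s: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_requests = max_requests
        self.window_s = window_s
        self.clock = clock                # injectable so the limiter can be tested
        self.calls = defaultdict(deque)   # key -> timestamps of admitted requests

    def allow(self, key: str) -> bool:
        now = self.clock()
        window = self.calls[key]
        while window and now - window[0] >= self.window_s:
            window.popleft()              # forget requests older than the window
        if len(window) >= self.max_requests:
            return False                  # over the limit: caller should back off
        window.append(now)
        return True


fake_now = [0.0]
limiter = SlidingWindowRateLimiter(max_requests=20, window_s=3600.0, clock=lambda: fake_now[0])
admitted = sum(limiter.allow("agent-1") for _ in range(25))
print(admitted)                    # 20
fake_now[0] = 3600.0               # one hour later the window has emptied
print(limiter.allow("agent-1"))    # True
```

Unlike a fixed hourly counter, a sliding window avoids letting an agent burst up to twice the limit around the boundary between two hours.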

Such observability offers greater transparency and makes clear which uses offer the most value for their respective costs. By setting budgets, enterprises can avoid overspending and forecast spending more accurately. Rate limiting also helps ensure fair resource allocation, so that no user or application starves others, while balancing priorities across the enterprise. With cost and performance data available, line-of-business (LOB) managers can make more informed decisions, such as whether to switch AI providers, host models privately, or invest in AI model fine-tuning.

Observability also involves being able to view the interaction history with AI providers and AI models. In the case of NL2SQL, users already have access to the history of queries run using the V$CLOUD_AI_SQL database view. Conversations also capture NL2SQL, RAG, and chat history. In the case of AI agents, database views provide traceability for agent and task behavior.

Select AI Secure

Data security remains a top concern for most enterprises, especially as integrating AI—with third-party providers and autonomous agents—can introduce new complexities. To mitigate risks, establishing strong guardrails is essential to prevent harmful interactions that violate security requirements. Configurable guardrails allow administrators to help set and enforce security and safety policies, such as blocking unsafe requests, aligning outputs with organizational values, and proactively filtering results that could violate established policies.

Select AI already provides multiple guardrails by leveraging Oracle AI Database security for user-level access control, Virtual Private Database (VPD), Real Application Security (RAS), as well as audit trails and read-only sessions. Select AI further supports security through the AI profile, which lets you specify which data to access and which metadata to use.

Select AI Secure focuses on adding enhanced guardrails, such as a translation profile that enforces user-defined rules on SQL queries produced by an LLM. This enables custom validation through a callback function that takes the generated SQL as input and can return an adjusted SQL statement, trigger an automatic retry, or reject the query outright. Similar functions can be defined for RAG and chat interactions.
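
To illustrate the adjust, retry, or reject contract of such a callback, here is a hypothetical Python validator. The table lists, row cap, and `Action` names are invented for the example, and the regular-expression checks are deliberately naive; a production validator should use a real SQL parser. Select AI’s actual callback interface may differ.

```python
import re
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    ACCEPT = "accept"   # run the (possibly adjusted) SQL
    RETRY = "retry"     # ask the LLM to generate the query again
    REJECT = "reject"   # refuse the query outright


@dataclass
class Decision:
    action: Action
    sql: Optional[str] = None
    reason: str = ""


# Hypothetical policy inputs, for illustration only.
ALLOWED_TABLES = {"SALES", "REGIONS"}
BLOCKED_TABLES = {"EMPLOYEE_SALARIES"}
MAX_ROWS = 1000


def validate_sql(sql: str) -> Decision:
    """Naive regex-based checks; a real validator should parse the SQL properly."""
    stmt = sql.strip().rstrip(";")
    if not re.match(r"(?is)select\b", stmt):
        return Decision(Action.REJECT, reason="only SELECT statements are allowed")
    # Table names following FROM/JOIN, keeping the last part of schema-qualified names.
    tables = {name.split(".")[-1].upper()
              for name in re.findall(r"(?i)\b(?:from|join)\s+([a-z_][\w$#.]*)", stmt)}
    blocked = tables & BLOCKED_TABLES
    if blocked:
        return Decision(Action.REJECT, reason=f"blocked tables: {sorted(blocked)}")
    unknown = tables - ALLOWED_TABLES
    if unknown:
        return Decision(Action.RETRY, reason=f"unknown tables: {sorted(unknown)}")
    if not re.search(r"(?i)\bfetch\s+first\b", stmt):
        stmt += f" FETCH FIRST {MAX_ROWS} ROWS ONLY"  # adjust: cap the result size
    return Decision(Action.ACCEPT, sql=stmt)


for q in ["DELETE FROM sales",
          "SELECT * FROM hr.employee_salaries",
          "SELECT * FROM customers",
          "SELECT region, SUM(amount) FROM sales GROUP BY region;"]:
    d = validate_sql(q)
    print(d.action.value, d.sql or d.reason)
```

Sending unknown tables back for a retry, rather than rejecting them, reflects the idea that the LLM may simply have hallucinated a table name and can often correct itself when regenerating.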


Auditing plays a major role in security: recording and persisting LLM interactions provides an evidence trail for root-cause analysis of results and helps demonstrate regulatory compliance. Select AI supports auditing through the conversation API and through audit trails of SQL query generation and responses. By creating an auditable history of user and system actions, enterprises can more readily satisfy compliance and governance requirements.

In addition, enterprise users and auditors may require augmented audit trails with more verbose, customizable details, such as application context, the objects used (tables, views, documents), and user IP addresses. You can push such data to object storage logs to enable offline processing by external tools. These tools can process the logs to detect usage anomalies and support continuous improvement initiatives for prompt tuning, guardrail adjustments, or even AI model fine-tuning. As such, logging is an important part of an organization’s continuous process improvement efforts.
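
The following sketch shows what an augmented log entry might contain and how an offline tool could scan such logs for anomalies, here by flagging calls that use far more tokens than the user’s median. The field names, the JSON Lines format, and the 5x threshold are assumptions for illustration, not a Select AI log schema.

```python
import json
import statistics
from collections import defaultdict
from datetime import datetime, timezone


def audit_record(user, client_ip, app_module, prompt, generated_sql, objects, tokens):
    """One augmented log entry; the field names are illustrative, not a Select AI schema."""
    return {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "client_ip": client_ip,
        "app_module": app_module,      # application context
        "prompt": prompt,
        "generated_sql": generated_sql,
        "objects": sorted(objects),    # tables, views, or documents used
        "tokens": tokens,
    }


def to_jsonl(records) -> str:
    """Serialize entries as JSON Lines, one object per line, ready to upload as a log file."""
    return "".join(json.dumps(r, sort_keys=True) + "\n" for r in records)


def flag_token_spikes(jsonl: str, factor: float = 5.0) -> list:
    """Offline check: flag entries that use more than `factor` x the user's median tokens."""
    entries = [json.loads(line) for line in jsonl.splitlines() if line.strip()]
    per_user = defaultdict(list)
    for e in entries:
        per_user[e["user"]].append(e["tokens"])
    medians = {user: statistics.median(t) for user, t in per_user.items()}
    return [e for e in entries if e["tokens"] > factor * medians[e["user"]]]


log = to_jsonl(
    [audit_record("alice", "10.0.0.5", "sales_app", "Total sales by region?",
                  "SELECT region, SUM(amount) FROM sales GROUP BY region", ["SALES"], t)
     for t in (100, 120, 110, 2000)]
    + [audit_record("bob", "10.0.0.7", "hr_app", "Regions list?",
                    "SELECT name FROM regions", ["REGIONS"], 300)])
for e in flag_token_spikes(log):
    print(e["user"], e["client_ip"], e["tokens"])  # alice 10.0.0.5 2000
```

Using the median rather than the mean keeps a single large spike from inflating the baseline it is compared against.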

Why use Select AI?

Oracle Autonomous AI Database Select AI simplifies and automates the use of generative AI, enabling you to generate, run, and explain SQL from natural language prompts, as well as chat with your LLM. Recent enhancements, such as the feedback capability, let users provide feedback on the results and queries the LLM produces through Select AI. Others, such as customizable conversations, support creating and using multiple named conversations for long-term memory, so you can work on different topics without mixing context, improving both conversation flexibility and efficiency.

Select AI also automates vector index creation, simplifies and automates the retrieval-augmented generation (RAG) workflow, and enables synthetic data generation. Select AI enables you to take your applications and development to the next level with generative AI, conveniently through SQL. We’ve also introduced a Python API so that Python developers can easily take advantage of this powerful generative AI functionality.

Another recent capability is Select AI Agent, an autonomous agent framework for building and running agentic workflows. Instead of requiring you to configure third-party frameworks and maintain a runtime environment, Select AI Agent is fully integrated with your Autonomous AI Database environment and supports the ReAct agentic pattern for a broad range of agentic use cases.

Resources

With Select AI Verify, Observe, and Secure, Oracle provides you with more tools for greater confidence in your use of generative AI.

For more information…

- Try Select AI for free on OCI: Autonomous AI Database Free Trial

- Video: Getting Started with Oracle Select AI

- Documentation

- LiveLabs