Zoned block device support was added to the mainline Linux kernel starting in v4.10. Unbreakable Enterprise Kernel release 7 (UEK7) is based on a 5.15.0 kernel release, which supports extended zoned commands along with the newer ZoneFS file system, which was implemented to support zoned hard drives. ZoneFS works with hard disks that use Shingled Magnetic Recording technology to create zones.

What is Shingled Magnetic Recording?

Shingled Magnetic Recording (SMR) technology is used in hard disk drives to provide increased density, thus achieving a higher storage capacity when compared to drives using Conventional Magnetic Recording (CMR).

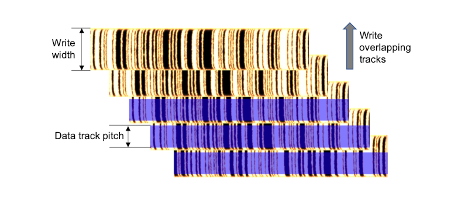

Conventional Magnetic Recording places gaps between recording tracks to account for the Track Misregistration (TMR) budget. These gaps reduce areal density, resulting in lower capacity. Shingled Magnetic Recording uses a pattern that resembles “shingles on a roof”. Data is written sequentially to a track, which is then partially overlapped by subsequent tracks of data. This pattern of overlapping writes removes the gaps between tracks, resulting in a much higher capacity on the same platter surface. The figure below represents an example of an SMR disk with overlapping tracks.

SMR Interface Implementation

Now that we have looked at what SMR technology is, this section describes the supported implementation models.

Host Managed

This model is used for sequential write workloads to deliver predictable performance and is controlled at the host level. Currently, host software modification is required to manage host-managed SMR drives.

Drive Managed

This model provides backward compatibility with older interfaces by managing sequential write constraints internally. The drive-managed interface supports both sequential and random writes to the disks.

Host Aware

This model offers the flexibility of the drive-managed model, including compatibility with regular disks, while also providing the same control as the host-managed model. More details are available in the Zoned Storage community documentation [1].

Advantages of using ZoneFS

The ZoneFS file system, when used with a zoned block device, helps with the zone reclamation process more efficiently than other file systems. It has a better view of metadata and of how files use storage blocks than the legacy zoned-block-based approach. The interface that ZoneFS exposes for the zoned block device is closer to raw block device access than to a full-featured POSIX file system.

The superblock for a ZoneFS file system is always written on disk at sector 0. If the zone that holds the superblock is a sequential zone, the user space mkzonefs format tool [2] makes that zone read-only when formatting, thus preventing any data from being written to that zone.

The directory structure for ZoneFS

When the ZoneFS file system is mounted on an SMR hard drive, it creates a directory structure that groups the same types of zones.

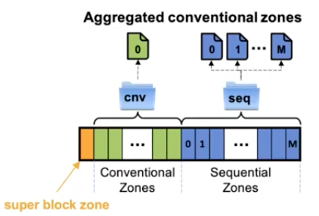

Conventional Zones files: The size of the conventional zone files is the fixed size of the zone that they represent. These files will be placed in the cnv sub-directory when the ZoneFS file system is mounted. These files can be randomly read and written using any type of I/O operation. They represent the same properties as data on a conventional hard disk drive.

Sequential Zones files: The size of the sequential zone files that are grouped in a seq sub-directory represents the zone-write-pointer position relative to the file’s zone start sector. ZoneFS does not allow random writes to sequential zone files; it only accepts append writes to the end of the file.
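The relationship between the write pointer and the size of a sequential zone file can be sketched with shell arithmetic. This is only an illustration: the helper name seq_file_size and the sample sector values are hypothetical, and the sketch assumes both positions are given in 512-byte sectors.

```shell
# Hypothetical helper: size in bytes of a sequential zone file, given the
# zone start sector ($1) and the write pointer sector ($2), both expressed
# in 512-byte sectors.
seq_file_size() {
    echo $(( ($2 - $1) * 512 ))
}

# Illustrative values: a zone starting at sector 0x10600000 with 8 sectors
# written so far, so the write pointer sits 8 sectors past the zone start.
seq_file_size $((0x10600000)) $((0x10600008))
```

An empty zone, where the write pointer equals the zone start, gives a file size of 0 under the same arithmetic.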

Here is a pictorial view of the directory structure of the Zones on an SMR drive.

Setting up ZoneFS

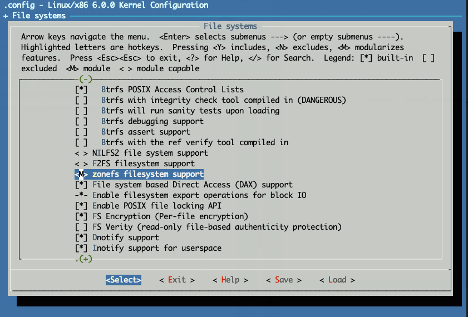

UEK7 was released with ZoneFS support enabled by default. To try ZoneFS with the mainline upstream kernel, you will need to build the kernel with the following configuration parameter:

CONFIG_ZONEFS_FS=y

Use of the mq-deadline block I/O scheduler with zoned block devices is mandatory. This is to ensure write command order guarantees.

Verify if the correct scheduler is set up:

    # cat /sys/block/sda/queue/scheduler
    [mq-deadline] none
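The active scheduler is the name shown in square brackets. For scripted checks, it can be extracted as in the minimal sketch below. The function name active_scheduler is hypothetical, and the device name sda is simply the one used in this article.

```shell
# Hypothetical helper: print the active scheduler, that is, the name that
# the sysfs scheduler file shows in square brackets. Reads from stdin.
active_scheduler() {
    sed -n 's/.*\[\([^]]*\)].*/\1/p'
}

# Sample contents of the scheduler file, copied from the output above.
echo '[mq-deadline] none' | active_scheduler

# On a real system (as root, with the device name adjusted as needed):
#   active_scheduler < /sys/block/sda/queue/scheduler
#   echo mq-deadline > /sys/block/sda/queue/scheduler   # switch if required
```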

Ensure that the kernel module is loaded (for UEK7 this module is built into the kernel, so this step does not apply):

    # modprobe zonefs
    # lsmod | grep zonefs
    zonefs 49056 0

Verify that the disk type is a Zoned Block device:

    # lsscsi -g | grep zbc
    [2:0:0:0] zbc ATA HGST HSH721414AL T104 /dev/sda /dev/sg0

Confirm that the disk is host managed by issuing an inquiry command:

    # sg_inq /dev/sda
    standard INQUIRY:
    PQual=0 Device_type=20 RMB=0 LU_CONG=0 version=0x07 [SPC-5]
    [AERC=0] [TrmTsk=0] NormACA=0 HiSUP=0 Resp_data_format=2
    SCCS=0 ACC=0 TPGS=0 3PC=0 Protect=0 [BQue=0]
    EncServ=0 MultiP=0 [MChngr=0] [ACKREQQ=0] Addr16=0
    [RelAdr=0] WBus16=0 Sync=0 [Linked=0] [TranDis=0] CmdQue=1
    [SPI: Clocking=0x0 QAS=0 IUS=0]
    length=96 (0x60) Peripheral device type: host managed zoned block
    Vendor identification: ATA
    Product identification: HGST HSH721414AL
    Product revision level: T104
    Unit serial number: VEG1VBDS

Verify the number and types of the zones:

    # cat /sys/block/sda/queue/nr_zones
    52156
    # cat /sys/block/sda/queue/zoned
    host-managed
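The two sysfs checks above can be combined into a single pre-flight test before formatting. The sketch below is an assumption-laden illustration: the function name is_host_managed is hypothetical, and the demo runs against a temporary directory that mimics the two sysfs files rather than against a real device.

```shell
# Hypothetical helper: succeed only if the given queue directory describes
# a host-managed zoned device that reports at least one zone.
is_host_managed() {
    model=$(cat "$1/zoned" 2>/dev/null)
    zones=$(cat "$1/nr_zones" 2>/dev/null)
    [ "$model" = "host-managed" ] && [ "${zones:-0}" -gt 0 ]
}

# Demo against a throwaway directory holding the values shown above.
demo=$(mktemp -d)
echo host-managed > "$demo/zoned"
echo 52156 > "$demo/nr_zones"
if is_host_managed "$demo"; then
    echo "host-managed zoned device"
fi
rm -rf "$demo"
```

On a real system the argument would be the queue directory of the disk, for example /sys/block/sda/queue for the device used in this article.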

Download and compile zonefs-tools and format the disk using the mkzonefs command:

    # mkzonefs -o aggr_cnv /dev/sda
    /dev/sda: 27344764928 512-byte sectors (13039 GiB)
    Host-managed device
    52156 zones of 524288 512-byte sectors (256 MiB)
    524 conventional zones, 51632 sequential zones
    0 read-only zones, 0 offline zones
    Format:
    52155 usable zones
    Aggregate conventional zones: enabled
    File UID: 0
    File GID: 0
    File access permissions: 640
    FS UUID: 5ae13117-57df-4437-a2cf-d2efae582657
    Resetting sequential zones
    Writing super block
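The geometry that mkzonefs reports can be cross-checked with plain shell arithmetic. The numbers below are copied from the output above; the variable names are only illustrative.

```shell
total_sectors=27344764928   # 512-byte sectors reported for /dev/sda
zone_sectors=524288         # 512-byte sectors per zone

# Zone size in MiB, number of zones, and total capacity in GiB.
echo "zone size (MiB): $(( zone_sectors * 512 / 1024 / 1024 ))"
echo "zone count:      $(( total_sectors / zone_sectors ))"
echo "capacity (GiB):  $(( total_sectors * 512 / 1024 / 1024 / 1024 ))"

# Conventional plus sequential zones should add up to the zone count.
echo "cnv + seq zones: $(( 524 + 51632 ))"
```

The results (256 MiB, 52156 zones, 13039 GiB) agree with the mkzonefs report. The 52155 usable zones are one fewer than the total, which is consistent with one zone being set aside for the superblock.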

Mount ZoneFS at the /mnt/sda-mnt directory:

    # mount -t zonefs /dev/sda /mnt/sda-mnt

Verify that the ZoneFS file system is ready:

    # dmesg | grep zonefs
    [344937.022307] zonefs (sda): Mounting 52156 zones
    [344937.022703] zonefs (sda): Zone group "cnv" has 1 file
    [344937.062223] zonefs (sda): Zone group "seq" has 51632 files
    # ls -l /mnt/sda-mnt
    total 0
    dr-xr-xr-x 2 root root 1 Oct 7 11:37 cnv
    dr-xr-xr-x 2 root root 51632 Oct 7 11:37 seq

The conventional zone can be formatted as a traditional disk as shown below:

    # mkfs.ext4 /mnt/sda-mnt/cnv/0
    mke2fs 1.46.2 (28-Feb-2021)
    Creating filesystem with 34275328 4k blocks and 8568832 inodes
    Filesystem UUID: 1ababdcf-8d24-4cfd-b5e8-1537f29cdae4
    Superblock backups stored on blocks:
    32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,
    4096000, 7962624, 11239424, 20480000, 23887872
    Allocating group tables: done
    Writing inode tables: done
    Creating journal (262144 blocks): done
    Writing superblocks and filesystem accounting information: done
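The block count reported by mke2fs can be reconciled with the zone layout. The sketch below assumes that, with aggregated conventional zones, the single cnv file spans every conventional zone except the one that holds the superblock. That assumption is consistent with the 52155 usable zones reported by mkzonefs, but it is stated here as an assumption rather than read from the tool.

```shell
zone_bytes=$(( 524288 * 512 ))    # 256 MiB per zone, from the mkzonefs output
cnv_zones=$(( 524 - 1 ))          # assumption: one conventional zone holds the superblock
cnv_bytes=$(( cnv_zones * zone_bytes ))

echo "cnv/0 size in bytes:     $cnv_bytes"
echo "cnv/0 size in 4k blocks: $(( cnv_bytes / 4096 ))"
```

The second value works out to 34275328, the same number of 4k blocks that mke2fs reports for the new file system.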

Use the blkzone command to report the zones on the disk. The output shows the start offset of each zone, the length of the zone, the capacity of the zone, as well as the write pointer location in the zone. Note that the zone condition for the conventional zones is reported as “nw” (not write pointer), because conventional zones have no write pointer:

    # blkzone report /dev/sda
    start: 0x000000000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
    start: 0x000080000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
    start: 0x000100000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
    start: 0x000180000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
    start: 0x000200000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
    start: 0x000280000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
    start: 0x000300000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
    start: 0x000380000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
    start: 0x000400000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
    start: 0x000480000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
    start: 0x000500000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
    start: 0x000580000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
    start: 0x000600000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
    start: 0x000680000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
    ….
    ….
    ….
    start: 0x65d700000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 1(em) [type: 2(SEQ_WRITE_REQUIRED)]
    start: 0x65d780000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 1(em) [type: 2(SEQ_WRITE_REQUIRED)]
    start: 0x65d800000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 1(em) [type: 2(SEQ_WRITE_REQUIRED)]
    start: 0x65d880000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 1(em) [type: 2(SEQ_WRITE_REQUIRED)]
    start: 0x65d900000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 1(em) [type: 2(SEQ_WRITE_REQUIRED)]
    start: 0x65d980000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 1(em) [type: 2(SEQ_WRITE_REQUIRED)]
    start: 0x65da00000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 1(em) [type: 2(SEQ_WRITE_REQUIRED)]
    start: 0x65da80000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 1(em) [type: 2(SEQ_WRITE_REQUIRED)]
    start: 0x65db00000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 1(em) [type: 2(SEQ_WRITE_REQUIRED)]
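With tens of thousands of zones, it is often easier to summarize the report than to read it. The sketch below counts zones of a given type by matching the type label at the end of each report line. The function name count_zones is hypothetical, and the three sample lines are copied from the report above.

```shell
# Hypothetical helper: count zones of one type in `blkzone report` output
# read from stdin, by matching the "(TYPE)]" label that ends each line.
count_zones() {
    grep -c -F "($1)]"
}

# Three sample lines taken from the report above.
sample='start: 0x000000000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
start: 0x000080000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 0(nw) [type: 1(CONVENTIONAL)]
start: 0x65d700000, len 0x080000, cap 0x080000, wptr 0x000000 reset:0 non-seq:0, zcond: 1(em) [type: 2(SEQ_WRITE_REQUIRED)]'

printf '%s\n' "$sample" | count_zones CONVENTIONAL
printf '%s\n' "$sample" | count_zones SEQ_WRITE_REQUIRED
```

On the disk used in this article, piping the real report into the helper (for example `blkzone report /dev/sda | count_zones SEQ_WRITE_REQUIRED`) would be expected to match the zone counts printed by mkzonefs.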

List out all the sequential zones on a disk:

    # ls -lv /mnt/sda-mnt/seq/ | more
    total 13535019008
    -rw-r----- 1 root root 0 Oct 12 11:52 0
    -rw-r----- 1 root root 0 Oct 12 11:52 1
    -rw-r----- 1 root root 0 Oct 12 11:52 2
    -rw-r----- 1 root root 0 Oct 12 11:52 3
    -rw-r----- 1 root root 0 Oct 12 11:52 4
    -rw-r----- 1 root root 0 Oct 12 11:52 5
    -rw-r----- 1 root root 0 Oct 12 11:52 6
    -rw-r----- 1 root root 0 Oct 12 11:52 7
    -rw-r----- 1 root root 0 Oct 12 11:52 8
    -rw-r----- 1 root root 0 Oct 12 11:52 9
    -rw-r----- 1 root root 0 Oct 12 11:52 10
    ……
    ……
    -rw-r----- 1 root root 0 Oct 12 11:52 51621
    -rw-r----- 1 root root 0 Oct 12 11:52 51622
    -rw-r----- 1 root root 0 Oct 12 11:52 51623
    -rw-r----- 1 root root 0 Oct 12 11:52 51624
    -rw-r----- 1 root root 0 Oct 12 11:52 51625
    -rw-r----- 1 root root 0 Oct 12 11:52 51626
    -rw-r----- 1 root root 0 Oct 12 11:52 51627
    -rw-r----- 1 root root 0 Oct 12 11:52 51628
    -rw-r----- 1 root root 0 Oct 12 11:52 51629
    -rw-r----- 1 root root 0 Oct 12 11:52 51630
    -rw-r----- 1 root root 0 Oct 12 11:52 51631

Now list the first sequential zone:

    # ls -l /mnt/sda-mnt/seq/0
    -rw-r----- 1 root root 0 Oct 12 11:52 /mnt/sda-mnt/seq/0

Write 4 KiB to the sequential zone:

    # dd if=/dev/zero of=/mnt/sda-mnt/seq/0 bs=4096 count=1 conv=notrunc oflag=direct
    1+0 records in
    1+0 records out
    4096 bytes (4.1 kB, 4.0 KiB) copied, 1.03659 s, 4.0 kB/s
    # ls -l /mnt/sda-mnt/seq/0
    -rw-r----- 1 root root 4096 Oct 12 11:52 /mnt/sda-mnt/seq/0

Now truncate the sequential zone file up to the zone size (256 MiB). This marks the zone as full and prevents further writing to that zone:

    # truncate -s 268435456 /mnt/sda-mnt/seq/0
    # ls -l /mnt/sda-mnt/seq/0
    -rw-r----- 1 root root 268435456 Oct 12 12:13 /mnt/sda-mnt/seq/0
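Because the size of a sequential zone file tracks the write pointer, the space left in a zone is simply the zone size minus the file size. The sketch below illustrates this with an ordinary temporary file standing in for a zone file. The helper name zone_remaining is hypothetical.

```shell
# Hypothetical helper: bytes that can still be appended to a zone file,
# given the file ($1) and the zone size in bytes ($2).
zone_remaining() {
    used=$(wc -c < "$1")
    echo $(( $2 - used ))
}

# Stand-in for a zone file: an ordinary temporary file holding 4096 bytes,
# the same amount written with dd above.
demo=$(mktemp)
dd if=/dev/zero of="$demo" bs=4096 count=1 2>/dev/null
zone_remaining "$demo" 268435456
rm -f "$demo"
```

After the truncate to 268435456 bytes shown above, the same calculation on /mnt/sda-mnt/seq/0 gives 0. The kernel zonefs documentation also describes truncating a sequential zone file to 0, which resets the zone so that it can be written again from the start.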

Verify I/O

I/O latency can be checked by running fio, the flexible I/O tester:

    # fio --name=zbc --filename=/dev/sda --direct=1 --zonemode=zbd --offset=274726912 --size=1G --bs=256k --ioengine=libaio
    zbc: (g=0): rw=read, bs=(R) 256KiB-256KiB, (W) 256KiB-256KiB, (T) 256KiB-256KiB, ioengine=libaio, iodepth=1
    fio-3.32-38-gd146
    Starting 1 process
    Jobs: 1 (f=1): [R(1)][87.5%][r=147MiB/s][r=587 IOPS][eta 00m:01s]
    zbc: (groupid=0, jobs=1): err= 0: pid=8546: Fri Oct 7 12:06:27 2022
    read: IOPS=591, BW=148MiB/s (155MB/s)(1024MiB/6923msec)
    slat (usec): min=10, max=208, avg=42.16, stdev= 6.28
    clat (usec): min=1268, max=52369, avg=1643.33, stdev=1469.50
    lat (usec): min=1316, max=52405, avg=1685.49, stdev=1469.75
    clat percentiles (usec):
    | 1.00th=[ 1336], 5.00th=[ 1352], 10.00th=[ 1369], 20.00th=[ 1385],
    | 30.00th=[ 1418], 40.00th=[ 1418], 50.00th=[ 1434], 60.00th=[ 1450],
    | 70.00th=[ 1467], 80.00th=[ 1500], 90.00th=[ 2376], 95.00th=[ 2409],
    | 99.00th=[ 2442], 99.50th=[ 2442], 99.90th=[10683], 99.95th=[43779],
    | 99.99th=[52167]
    bw ( KiB/s): min=138752, max=162816, per=100.00%, avg=151805.23, stdev=7686.67, samples=13
    iops : min= 542, max= 636, avg=592.92, stdev=30.08, samples=13
    lat (msec) : 2=82.10%, 4=17.68%, 10=0.05%, 20=0.07%, 50=0.07%
    lat (msec) : 100=0.02%
    cpu : usr=1.14%, sys=4.93%, ctx=4096, majf=0, minf=76
    IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
    submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
    complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
    issued rwts: total=4096,0,0,0 short=0,0,0,0 dropped=0,0,0,0
    latency : target=0, window=0, percentile=100.00%, depth=1
    Run status group 0 (all jobs):
    READ: bw=148MiB/s (155MB/s), 148MiB/s-148MiB/s (155MB/s-155MB/s), io=1024MiB (1074MB), run=6923-6923msec
    Disk stats (read/write):
    sda: ios=4052/0, merge=0/0, ticks=6515/0, in_queue=6515, util=98.64%
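The headline numbers in the fio summary can be re-derived from the totals it prints, which is a quick sanity check when comparing runs. The sketch below uses awk for the floating-point division. The function name rate is hypothetical, and the inputs are the 1024 MiB transferred, the 4096 reads issued, and the 6923 msec run time from the output above.

```shell
# Hypothetical helper: rate TOTAL MSEC prints TOTAL per second, to two
# decimal places, for a run that lasted MSEC milliseconds.
rate() {
    LC_ALL=C awk -v total="$1" -v msec="$2" \
        'BEGIN { printf "%.2f\n", total * 1000 / msec }'
}

rate 1024 6923   # MiB transferred over the run time, in MiB/s
rate 4096 6923   # reads issued over the run time, in IOPS
```

The results, 147.91 MiB/s and 591.65 IOPS, line up with the BW=148MiB/s and IOPS=591 that fio reports. Since the job ran at iodepth=1, the mean total latency of about 1685 usec also implies roughly 593 I/Os per second, which is close to the reported average.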