Introduction

In the ever-evolving world of data storage, the NVMe (Non-Volatile Memory Express) protocol has emerged as a game-changer. Designed to maximize the performance of modern NAND flash storage, NVMe provides faster data access, reduced latency, and efficient handling of parallel workloads. In this blog, we’ll explore the architecture of NVMe and understand how it delivers such high performance.

NVMe vs. Legacy Storage Protocols

Traditional storage protocols like SATA and SAS, built for spinning disks, are a bottleneck for modern flash storage. NVMe, designed specifically for flash, eliminates these limitations:

SATA Protocol (AHCI): Uses a single command queue that holds up to 32 commands, which limits the number of operations that can be in flight at once. This is sufficient for many traditional applications, but it becomes a bottleneck in environments requiring high parallelism and multi-threaded I/O.

NVMe Protocol: Supports up to 64K submission and completion queues, each holding up to 64K commands, which greatly increases parallelism. NVMe runs directly over the PCIe interface, so it benefits from PCIe’s higher bandwidth and moves significantly more data per second.
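To put the two queue models side by side, here is a small worked calculation of the theoretical ceiling on commands in flight. It is only a sketch: the function name is made up for illustration, and the figures are the round numbers quoted above (1 queue of 32 for AHCI, 64K queues of 64K for NVMe), not what any particular device exposes.

```c
#include <assert.h>
#include <stdint.h>

/* Theoretical ceiling on outstanding commands: queues x entries per queue.
 * max_outstanding() is a hypothetical helper for this illustration. */
uint64_t max_outstanding(uint64_t queues, uint64_t depth)
{
    /* Both protocol limits discussed here are at most 64K (65536). */
    assert(queues <= 65536 && depth <= 65536);
    return queues * depth;
}
```

With these inputs, AHCI tops out at 1 x 32 = 32 commands, while the NVMe limit is 65536 x 65536, a little over four billion.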

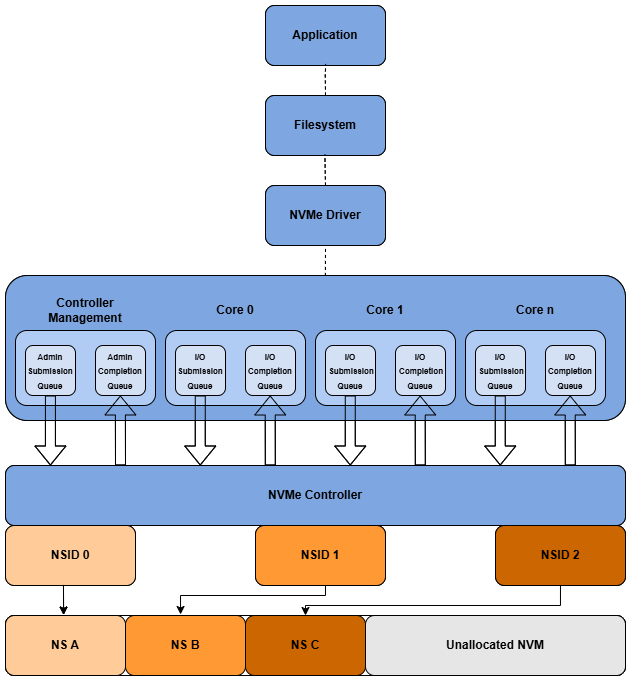

Linux natively supports NVMe through the nvme kernel module, which exposes devices as /dev/nvmeX and /dev/nvmeXnY. The nvmeX entries represent the physical NVMe hardware controllers, while nvmeXnY entries represent namespaces, which are logical storage volumes carved out within a device. In most systems there’s just one namespace (nvme0n1), but enterprise setups may define multiple namespaces on the same controller to isolate workloads.
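The naming scheme is regular enough to parse mechanically. The following is a minimal sketch, assuming a bare node name without the /dev/ prefix and without a partition suffix; parse_nvme_ns_name is a hypothetical helper, not part of any kernel or nvme-cli API.

```c
#include <assert.h>
#include <stdio.h>

/* Split a namespace node name such as "nvme0n1" into its controller (X)
 * and namespace (Y) indices. Returns 0 on success, -1 on mismatch. */
int parse_nvme_ns_name(const char *name, unsigned *ctrl, unsigned *ns)
{
    int consumed = 0;

    assert(name && ctrl && ns);
    /* %n records how many characters were consumed so far. */
    if (sscanf(name, "nvme%un%u%n", ctrl, ns, &consumed) != 2)
        return -1;
    /* Reject trailing characters, e.g. a partition suffix like "p2". */
    return name[consumed] == '\0' ? 0 : -1;
}
```

Passing "nvme0n1" yields controller 0 and namespace 1, while a controller-only name such as "nvme0" is rejected.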

Key Components of NVMe

NVMe Driver

The NVMe driver in Linux interacts directly with the PCIe subsystem when the NVMe device is locally attached. In this case, the NVMe controller is exposed as a PCIe endpoint, and the driver communicates with it over PCIe registers and queues, enabling high throughput and low latency.

For NVMe over Fabrics (NVMe-oF), the picture is different. The NVMe driver doesn’t talk to PCIe directly. Instead, it communicates through a transport driver (such as RDMA, TCP, or Fibre Channel) that carries NVMe commands over the network. From the block layer’s perspective, both local NVMe and NVMe-oF devices look similar, but the underlying transport path differs.

The NVMe driver supports:

Admin Commands: Admin commands are handled via a dedicated admin queue. These include commands for identifying devices, managing namespaces, and fetching health information.

/* Admin commands */

enum nvme_admin_opcode {

nvme_admin_delete_sq = 0x00,

nvme_admin_create_sq = 0x01,

nvme_admin_get_log_page = 0x02,

nvme_admin_delete_cq = 0x04,

nvme_admin_create_cq = 0x05,

nvme_admin_identify = 0x06,

nvme_admin_abort_cmd = 0x08,

nvme_admin_set_features = 0x09,

nvme_admin_get_features = 0x0a,

nvme_admin_async_event = 0x0c,

nvme_admin_ns_mgmt = 0x0d,

nvme_admin_activate_fw = 0x10,

nvme_admin_download_fw = 0x11,

nvme_admin_dev_self_test = 0x14,

nvme_admin_ns_attach = 0x15,

nvme_admin_keep_alive = 0x18,

nvme_admin_directive_send = 0x19,

nvme_admin_directive_recv = 0x1a,

nvme_admin_virtual_mgmt = 0x1c,

nvme_admin_nvme_mi_send = 0x1d,

nvme_admin_nvme_mi_recv = 0x1e,

nvme_admin_dbbuf = 0x7C,

nvme_admin_format_nvm = 0x80,

nvme_admin_security_send = 0x81,

nvme_admin_security_recv = 0x82,

nvme_admin_sanitize_nvm = 0x84,

nvme_admin_get_lba_status = 0x86,

nvme_admin_vendor_start = 0xC0,

};

I/O Commands: I/O commands perform the data operations on the device, such as reads and writes. They are submitted through the I/O queues.
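Admin and I/O commands share the same 64-byte submission entry format; only the opcode and the command-specific dwords differ. The sketch below shows how such an entry might be filled in for an Identify Controller command and for a Read. The struct and helper names are this example’s own (the kernel uses different types), the data pointer is left unset, and the opcodes are the 0x06 and 0x02 values from the driver’s enums.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Illustrative 64-byte submission queue entry (names are this sketch's own). */
struct sqe {
    uint8_t  opcode;      /* e.g. 0x06 Identify (admin), 0x02 Read (I/O) */
    uint8_t  flags;
    uint16_t command_id;  /* echoed back in the completion entry */
    uint32_t nsid;        /* namespace identifier */
    uint32_t cdw2[2];
    uint64_t metadata;
    uint64_t prp1;        /* data pointer; left zero in this sketch */
    uint64_t prp2;
    uint32_t cdw10, cdw11, cdw12, cdw13, cdw14, cdw15;
};

_Static_assert(sizeof(struct sqe) == 64, "a submission entry is 64 bytes");

/* Admin command: Identify with CNS = 1 (Identify Controller). */
struct sqe make_identify_ctrl(uint16_t cid)
{
    struct sqe c;

    memset(&c, 0, sizeof(c));
    c.opcode = 0x06;
    c.command_id = cid;
    c.cdw10 = 1;
    return c;
}

/* I/O command: Read nblocks logical blocks starting at slba. */
struct sqe make_read(uint16_t cid, uint32_t nsid, uint64_t slba, uint32_t nblocks)
{
    struct sqe c;

    assert(nblocks >= 1 && nblocks <= 65536);
    memset(&c, 0, sizeof(c));
    c.opcode = 0x02;
    c.command_id = cid;
    c.nsid = nsid;
    c.cdw10 = (uint32_t)(slba & 0xffffffffu); /* starting LBA, low 32 bits */
    c.cdw11 = (uint32_t)(slba >> 32);         /* starting LBA, high 32 bits */
    c.cdw12 = nblocks - 1;                    /* block count is zero-based */
    return c;
}
```

A real driver would also point prp1 at a DMA-able buffer before placing the entry in a queue; that part depends on the host environment and is omitted here.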

/* I/O commands */

enum nvme_opcode {

nvme_cmd_flush = 0x00,

nvme_cmd_write = 0x01,

nvme_cmd_read = 0x02,

nvme_cmd_write_uncor = 0x04,

nvme_cmd_compare = 0x05,

nvme_cmd_write_zeroes = 0x08,

nvme_cmd_dsm = 0x09,

nvme_cmd_verify = 0x0c,

nvme_cmd_resv_register = 0x0d,

nvme_cmd_resv_report = 0x0e,

nvme_cmd_resv_acquire = 0x11,

nvme_cmd_resv_release = 0x15,

nvme_cmd_zone_mgmt_send = 0x79,

nvme_cmd_zone_mgmt_recv = 0x7a,

nvme_cmd_zone_append = 0x7d,

nvme_cmd_vendor_start = 0x80,

};

Queue Management

NVMe’s performance benefits stem from its queue-based architecture. NVMe supports up to 64K queues, each capable of holding 64K commands.

Submission Queues (SQ):

- The submission queue is used to submit commands by the driver for execution by the controller.

- It is a circular buffer in host memory with fixed size.

- Each queue has a unique queue identifier (QID).

- Once a command is placed in the queue, the host updates the submission queue tail doorbell register to notify the controller.
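The submission side can be sketched as a small ring buffer. This is a simplified model, not driver code: the depth of 8 is arbitrary, each command is reduced to a single integer, and the doorbell is an ordinary struct field standing in for the memory-mapped register.

```c
#include <assert.h>
#include <stdint.h>

#define SQ_DEPTH 8u  /* hypothetical queue depth for this sketch */

struct sub_queue {
    uint64_t slots[SQ_DEPTH]; /* stand-in for 64-byte command entries */
    uint16_t head;            /* advanced as the controller consumes entries */
    uint16_t tail;            /* next free slot, owned by the host */
    uint32_t doorbell;        /* stand-in for the SQ tail doorbell register */
};

/* Place one command in the ring and ring the doorbell.
 * Returns 0 on success, -1 if the queue is full. */
int sq_submit(struct sub_queue *sq, uint64_t cmd)
{
    uint16_t next;

    assert(sq);
    next = (uint16_t)((sq->tail + 1u) % SQ_DEPTH);
    if (next == sq->head)
        return -1;            /* full: one slot always stays empty */
    sq->slots[sq->tail] = cmd;
    sq->tail = next;
    sq->doorbell = sq->tail;  /* real driver: MMIO write after a barrier */
    return 0;
}
```

Because one slot is kept empty to tell a full ring from an empty one, a ring of 8 entries holds at most 7 outstanding commands.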

Completion Queues (CQ):

- The completion queue is where the controller posts the results of executed commands.

- It is also a circular buffer in host memory.

- Once a command from SQ is completed, the controller writes a corresponding completion entry into the completion queue.

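- The controller does not ring a doorbell for completions. It signals new entries by inverting the phase tag bit in each entry it writes and, typically, by raising an interrupt. After processing the entries, the host updates the completion queue head doorbell register to release them.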
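On the host side, new completions are recognised by a phase tag bit in each entry: the controller writes 1 on its first pass through the ring, 0 on the second, and so on, so the host can tell fresh entries from stale ones without a shared counter. A minimal sketch of that polling loop follows; the entry is cut down to two fields (a real one is 16 bytes), and all names and the depth of 4 are assumptions of this example.

```c
#include <assert.h>
#include <stdint.h>

#define CQ_DEPTH 4u  /* hypothetical queue depth for this sketch */

struct cqe {
    uint16_t command_id; /* matches the ID of the submitted command */
    uint16_t status;     /* bit 0 is the phase tag */
};

struct comp_queue {
    struct cqe entries[CQ_DEPTH];
    uint16_t head;       /* next entry the host will examine */
    unsigned phase;      /* phase value that marks a new entry; starts at 1 */
    uint32_t doorbell;   /* stand-in for the CQ head doorbell register */
};

/* Consume up to max new entries, storing their command IDs in done[].
 * Returns the number of entries consumed. */
int cq_poll(struct comp_queue *cq, uint16_t *done, int max)
{
    int n = 0;

    assert(cq && done);
    while (n < max && (cq->entries[cq->head].status & 1u) == cq->phase) {
        done[n++] = cq->entries[cq->head].command_id;
        if (++cq->head == CQ_DEPTH) {
            cq->head = 0;
            cq->phase ^= 1u;  /* expected phase flips on every wrap */
        }
    }
    if (n > 0)
        cq->doorbell = cq->head;  /* one doorbell write covers the batch */
    return n;
}
```

The queue must start zeroed with phase set to 1, so that untouched entries never look new. Writing the doorbell once per batch mirrors the common practice of processing several completions before updating the register.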

Admin Queue: The admin queue is the submission queue and completion queue pair with identifier (QID) 0. The admin submission queue and corresponding admin completion queue are used to submit administrative commands and receive completions for those administrative commands respectively.

I/O Queues: NVMe theoretically supports up to 64K submission and completion queues, with each queue capable of holding up to 64K commands. However, most real-world NVMe devices support significantly fewer queues and commands. In practice, the number of configured queues usually ranges from 16 to 256, with queue depths from 32 to 2048 per queue.
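On Linux, the values actually configured for a device are exposed as small text attributes in sysfs, each holding a single number. A minimal sketch for reading one such attribute from C is shown here; read_uint_attr is a hypothetical helper name, and the code assumes the file contains just one unsigned decimal value.

```c
#include <assert.h>
#include <stdio.h>

/* Read a single unsigned integer from a sysfs-style text file.
 * Returns 0 on success, -1 if the file is missing or malformed. */
int read_uint_attr(const char *path, unsigned long *out)
{
    FILE *f;
    int ok;

    assert(path && out);
    f = fopen(path, "r");
    if (!f)
        return -1;
    ok = (fscanf(f, "%lu", out) == 1);
    fclose(f);
    return ok ? 0 : -1;
}
```

Pointed at /sys/class/nvme/nvme0/queue_count it would return the controller’s queue count, and at /sys/block/nvme0n1/queue/nr_requests the block-layer request limit. Both paths assume a controller named nvme0 with namespace 1.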

$ cat /sys/class/nvme/nvme0/queue_count

129

$ cat /sys/block/nvme0n1/queue/nr_requests

1023

NVMe Namespaces

An NVMe namespace is a logically isolated set of contiguous Logical Block Addresses (LBAs) managed by an NVMe controller and accessible by the host through a unique Namespace Identifier (NSID). Unlike physical isolation, namespaces provide logical segmentation within an NVMe device, allowing flexible management of storage resources.
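Because a namespace is simply a run of logical blocks, converting between LBAs and bytes is plain arithmetic. The sketch below assumes a fixed logical block size such as 512 or 4096 bytes and ignores metadata; both function names are made up for illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Byte offset of a logical block within its namespace. */
uint64_t lba_to_byte_offset(uint64_t lba, uint32_t block_size)
{
    assert(block_size > 0);
    return lba * block_size;
}

/* Number of whole logical blocks that fit in a given capacity. */
uint64_t bytes_to_lba_count(uint64_t bytes, uint32_t block_size)
{
    assert(block_size > 0);
    return bytes / block_size;
}
```

For instance, a 500 GB namespace (500,000,000,000 bytes) formatted with 512-byte blocks spans 976,562,500 LBAs, and LBA 2048 starts exactly 1 MiB into the namespace.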

In Linux, each namespace is exposed as a block device (e.g., /dev/nvme0n1), where nvme0 refers to the controller and n1 denotes the namespace.

Namespaces allow for flexible configurations, enabling features like multi-tenancy, security isolation (encryption per namespace), write protection, and overprovisioning to improve performance and endurance. Each namespace is presented to the host as a distinct storage target.

Node                  Generic               SN                   Model                                    Namespace  Usage                      Format           FW Rev

--------------------- --------------------- -------------------- ---------------------------------------- ---------- -------------------------- ---------------- --------

/dev/nvme0n1          /dev/ng0n1            PHAB252400943P8EGN   INTEL SSDPF2KX038T9S                     0x1        500.00 GB / 500.00 GB      512 B + 0 B      1.0.0

/dev/nvme0n2          /dev/ng0n2            PHAB252400943P8EGN   INTEL SSDPF2KX038T9S                     0x2        300.00 GB / 300.00 GB      512 B + 0 B      1.0.0

/dev/nvme0n3          /dev/ng0n3            PHAB252400943P8EGN   INTEL SSDPF2KX038T9S                     0x3        200.00 GB / 200.00 GB      512 B + 0 B      1.0.0

NVMe Controller

An NVMe controller is responsible for managing communication between the host system and the storage media. It interprets NVMe commands, manages data transfer and ensures efficient operation of the storage devices.

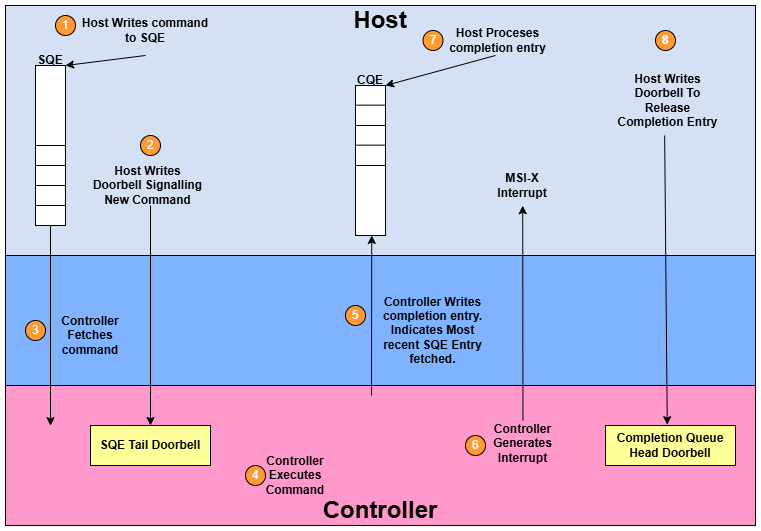

Command processing

- The host creates a command and places it in the appropriate submission queue in host memory.

- It then updates the Submission Queue Tail Doorbell register to notify the controller that new commands are ready for processing.

- The controller retrieves the commands from the submission queue in host memory for execution.

- Commands are arbitrated by the controller, which may execute them out of order. The arbitration mechanism selects the next command for execution.

- Once a command is executed, the controller writes a completion entry to the corresponding completion queue, indicating the most recent submission queue entry that was processed.

- The controller may generate an interrupt to inform the host of a new completion queue entry. This depends on the interrupt coalescing settings.

- The host processes the completion queue entry, handling any errors as needed.

- Finally, the host updates the Completion Queue Head Doorbell register to signal that the completion queue entry has been processed. Multiple entries can be processed before updating this register.
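On the PCIe transport, the two doorbells used in these steps are memory-mapped registers that start at offset 0x1000 in the controller’s register space, laid out as alternating SQ tail and CQ head entries per queue ID and spaced by the doorbell stride reported in the controller capabilities (CAP.DSTRD). The sketch below computes those offsets; the function names are this example’s own.

```c
#include <assert.h>
#include <stdint.h>

/* Offset of the Submission Queue Tail Doorbell for queue qid.
 * dstrd is the CAP.DSTRD field; the stride in bytes is 4 << dstrd. */
uint32_t sq_tail_doorbell_offset(uint16_t qid, uint8_t dstrd)
{
    assert(dstrd <= 15);  /* DSTRD is a 4-bit field */
    return 0x1000u + (2u * qid) * (4u << dstrd);
}

/* Offset of the Completion Queue Head Doorbell for queue qid. */
uint32_t cq_head_doorbell_offset(uint16_t qid, uint8_t dstrd)
{
    assert(dstrd <= 15);
    return 0x1000u + (2u * qid + 1u) * (4u << dstrd);
}
```

With a stride field of 0, the admin queue (QID 0) uses offsets 0x1000 and 0x1004, and the first I/O queue uses 0x1008 and 0x100C.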

NVMe Subsystem

An NVMe subsystem is a top-level abstraction that encapsulates one or more controllers, one or more namespaces, and one or more PCI Express ports. On Linux, each subsystem is exposed under /sys/class/nvme-subsystem (its controllers appear under /sys/class/nvme) and is initialized by the nvme-core and nvme drivers.

NVMe over PCIe

The NVMe Base Specification was originally developed to define a standardized, high-performance interface for host software to communicate with non-volatile memory devices via the PCIe bus. NVMe is architected specifically for SSDs, optimizing data paths and reducing latency. As PCIe itself has advanced, the per-lane transfer rate has doubled from 16 GT/s in PCIe 4.0 to 32 GT/s in PCIe 5.0 (GT/s: gigatransfers per second).
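The transfer rate translates into usable bandwidth once lane count and line encoding are taken into account. The following is a rough worked calculation, assuming the 128b/130b encoding used by PCIe 3.0 through 5.0 and ignoring packet and protocol overhead; the function name and the x4 link width in the note below are illustrative assumptions.

```c
#include <assert.h>

/* Approximate one-direction link bandwidth in GB/s.
 * gt_per_sec: per-lane rate in GT/s; lanes: link width. */
double pcie_link_gbytes_per_sec(double gt_per_sec, unsigned lanes)
{
    assert(lanes > 0);
    /* 128 payload bits per 130 bits on the wire, then 8 bits per byte. */
    return gt_per_sec * lanes * (128.0 / 130.0) / 8.0;
}
```

For a x4 link, this gives roughly 7.88 GB/s at 16 GT/s and roughly 15.75 GB/s at 32 GT/s in each direction.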

Advantages of using NVMe over PCIe:

- NVMe takes full advantage of PCIe’s high-speed lanes. A PCIe link can aggregate up to 16 lanes, and each generation doubles the per-lane rate (16 GT/s in Gen4, 32 GT/s in Gen5), enabling high parallel data throughput.

- Minimizes command overhead compared to legacy SATA/AHCI: NVMe can achieve latencies as low as ~20 µs, compared to ~60-100 µs with SATA/AHCI.

- The NVMe protocol leverages PCIe’s full-duplex architecture to allow simultaneous bidirectional data transfers and efficient parallel command execution.

- Employs Direct Memory Access (DMA) capabilities to move data directly between the SSD and host memory without CPU involvement, improving efficiency and reducing latency.

NVMe over Fabrics (NVMe-oF)

As the technology evolved, the NVMe specification expanded beyond PCIe to support multiple transport protocols, including RDMA, TCP/IP and Fibre Channel, under the umbrella of NVMe over Fabrics (NVMe-oF). However, PCIe remains the primary and most performance-critical transport layer for NVMe. NVMe-oF is a protocol that enables the use of the NVMe interface over a network fabric, allowing high-performance access to NVMe storage devices across a data center or cluster. It extends the benefits of NVMe (low latency, high throughput, and scalability) beyond the local host by using network connections.

Supported Transports

| Type | Description |

|---|---|

| RDMA | Includes RoCE and iWARP. Typically the best-performing option, since it offloads data movement from the CPU. |

| TCP/IP | Extends NVMe functionality over standard Ethernet networks, offering broader compatibility without the need for specialized hardware. |

| Fibre Channel | Leverages existing Fibre Channel infrastructure to deliver NVMe benefits. |

Covering NVMe-oF is beyond the scope of this blog and will be discussed in future blogs. There is a good read available on NVMe over TCP by Alan Adamson.

Conclusion

NVMe is a game-changing protocol designed to unlock the full potential of modern flash storage. Its queue-based design, direct PCIe integration, and support for features like namespaces and NVMe-oF enable unmatched performance, scalability, and efficiency. Whether you’re optimizing a personal system or scaling enterprise infrastructure, NVMe lays the foundation for high-speed, low-latency storage.