Oracle Linux UEK5 introduced NVMe over Fabrics, which allows NVMe storage commands to be transferred over an InfiniBand or Ethernet network using RDMA technology. UEK5U1 extended NVMe over Fabrics to include Fibre Channel storage networks. With UEK6, NVMe over TCP is introduced, which extends NVMe over Fabrics again so that it can run over a standard Ethernet network without the need to purchase special RDMA-capable network hardware.

What is NVMe-TCP?

The NVMe Multi-Queuing Model implements up to 64k I/O Submission and Completion Queues, as well as an Administration Submission Queue and an Administration Completion Queue, within each NVMe controller. For a PCIe-attached NVMe controller, these queues are implemented in host memory and shared by both the host CPUs and the NVMe controller. I/O is submitted to an NVMe device when a device driver writes a command to an I/O submission queue and then writes to a doorbell register to notify the device. When the command has completed, the device writes to an I/O completion queue and generates an interrupt to notify the device driver.

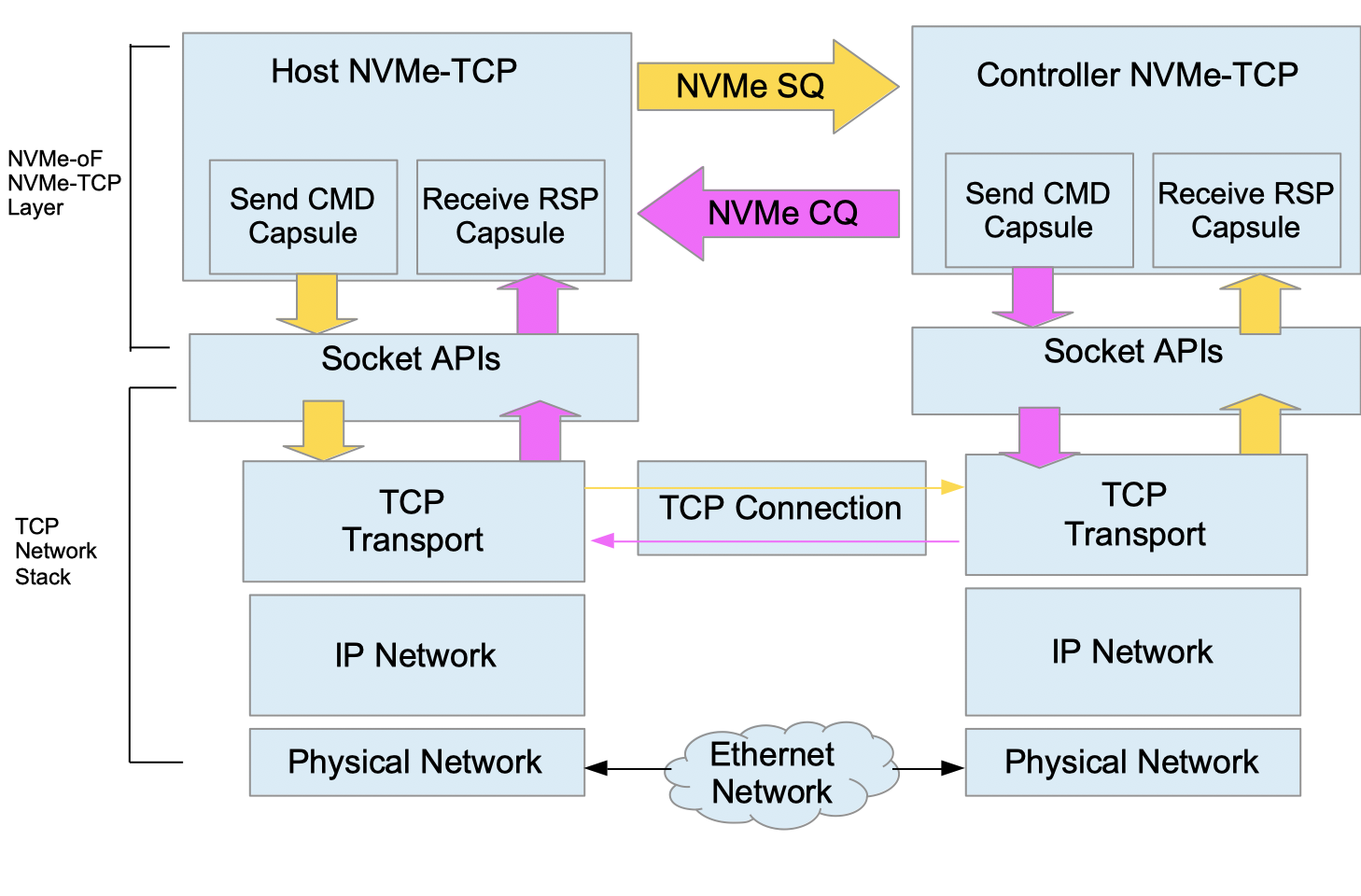

NVMe over Fabrics extends this design: the submission and completion queues in host memory are duplicated in the remote controller, so that each host-based queue pair is mapped to a controller-based queue pair. NVMe over Fabrics defines Command and Response Capsules, which the queues use to communicate across the fabric, as well as Data Capsules. NVMe-TCP defines how these capsules are encapsulated within a TCP PDU (Protocol Data Unit). Each host-based queue pair and its associated controller-based queue pair map to their own TCP connection and can be assigned to a separate CPU core.

NVMe-TCP Benefits

- The ubiquitous nature of TCP. TCP is one of the most common network transports in use and is already implemented in most data centers across the world.

- Designed to work with existing network infrastructure. In other words, there is no need to replace existing Ethernet routers, switches, or NICs, which simplifies maintenance of the network infrastructure.

- Unlike RDMA-based implementations, TCP is fully routable and is well suited for larger deployments and longer distances while maintaining high performance and low latency.

- TCP is actively being maintained and enhanced by a large community.

NVMe-TCP Drawbacks

- TCP can increase CPU usage because certain operations like calculating checksums must be done by the CPU as part of the TCP stack.

- Although TCP provides high performance with low latency, its latency is higher than that of RDMA implementations, in part because of the additional copies of data that must be made. This difference could affect some applications.

Setting up NVMe-TCP Example

UEK6 was released with NVMe-TCP enabled by default, but to try it with an upstream kernel, you will need to build with the following kernel configuration parameters:

- CONFIG_NVME_TCP=m

- CONFIG_NVME_TARGET_TCP=m
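One way to check whether a kernel was built with these options is to inspect its config file. The sketch below is illustrative only: the function name `check_nvme_tcp_config` is made up for this example, and the path in the usage note assumes the distribution installs the config as `/boot/config-<release>`, which may not hold on every system.

```shell
# Report how each NVMe-TCP option is set in a kernel config file.
# Prints one line per option: "<option> <value>", where <value> is
# y (built in), m (module), or "unset".
# check_nvme_tcp_config is a hypothetical helper name, not an existing tool.
check_nvme_tcp_config() {
    local config_file=$1 opt value
    for opt in CONFIG_NVME_TCP CONFIG_NVME_TARGET_TCP; do
        # Match "OPTION=y" or "OPTION=m" exactly; lines such as
        # "# OPTION is not set" do not match and are reported as unset.
        value=$(sed -n "s/^${opt}=\([ym]\)\$/\1/p" "$config_file" | head -n 1)
        echo "${opt} ${value:-unset}"
    done
}
```

A typical call would be `check_nvme_tcp_config "/boot/config-$(uname -r)"`. Either `m` or `y` means the transport is available.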

Setting Up A Target

    $ sudo modprobe nvme_tcp
    $ sudo modprobe nvmet
    $ sudo modprobe nvmet-tcp
    $ sudo mkdir /sys/kernel/config/nvmet/subsystems/nvmet-test
    $ cd /sys/kernel/config/nvmet/subsystems/nvmet-test
    $ echo 1 | sudo tee -a attr_allow_any_host > /dev/null
    $ sudo mkdir namespaces/1
    $ cd namespaces/1/
    $ echo -n /dev/nvme0n1 | sudo tee -a device_path > /dev/null
    $ echo 1 | sudo tee -a enable > /dev/null

If you don’t have access to an NVMe device on the target host, you can use a null block device instead.

    $ sudo modprobe null_blk nr_devices=1
    $ sudo ls /dev/nullb0
    /dev/nullb0
    $ echo -n /dev/nullb0 | sudo tee -a device_path > /dev/null
    $ echo 1 | sudo tee -a enable > /dev/null

Next, create a port and link the subsystem to it (these steps apply to either device):

    $ sudo mkdir /sys/kernel/config/nvmet/ports/1
    $ cd /sys/kernel/config/nvmet/ports/1
    $ echo 10.147.27.85 | sudo tee -a addr_traddr > /dev/null
    $ echo tcp | sudo tee -a addr_trtype > /dev/null
    $ echo 4420 | sudo tee -a addr_trsvcid > /dev/null
    $ echo ipv4 | sudo tee -a addr_adrfam > /dev/null
    $ sudo ln -s /sys/kernel/config/nvmet/subsystems/nvmet-test/ /sys/kernel/config/nvmet/ports/1/subsystems/nvmet-t

You should now see the following message captured in dmesg:

    $ dmesg | grep "nvmet_tcp"
    [24457.458325] nvmet_tcp: enabling port 1 (10.147.27.85:4420)
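To double-check what the target is exporting, the port attributes can be read back from configfs. The following is a minimal sketch and not part of any nvmet tooling: `nvmet_port_summary` is a hypothetical helper, and it assumes the configfs layout used in the setup commands above (reading these files may require root on some systems).

```shell
# Print a one-line summary of an nvmet port and the subsystems linked to it.
# $1: nvmet configfs root (normally /sys/kernel/config/nvmet)
# $2: port number
# nvmet_port_summary is a hypothetical helper name used for illustration.
nvmet_port_summary() {
    local root=$1 port=$2 dir link
    dir="$root/ports/$port"
    [ -d "$dir" ] || { echo "port $port not found under $root" >&2; return 1; }
    # addr_trtype, addr_traddr and addr_trsvcid are the attributes written during setup.
    echo "port $port: $(cat "$dir/addr_trtype")://$(cat "$dir/addr_traddr"):$(cat "$dir/addr_trsvcid")"
    # Each entry under subsystems/ is a link to an exported subsystem;
    # the name printed is the name of the link itself.
    for link in "$dir"/subsystems/*; do
        [ -e "$link" ] || [ -L "$link" ] || continue
        echo "  subsystem: $(basename "$link")"
    done
}
```

For the port created in this example, the call would be `nvmet_port_summary /sys/kernel/config/nvmet 1`.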

Setting Up The Client

    $ sudo modprobe nvme
    $ sudo modprobe nvme-tcp
    $ sudo nvme discover -t tcp -a 10.147.27.85 -s 4420
    Discovery Log Number of Records 1, Generation counter 3
    =====Discovery Log Entry 0======
    trtype:  tcp
    adrfam:  ipv4
    subtype: nvme subsystem
    treq:    not specified, sq flow control disable supported
    portid:  1
    trsvcid: 4420
    subnqn:  nvmet-test
    traddr:  10.147.27.85
    sectype: none
    $ sudo nvme connect -t tcp -n nvmet-test -a 10.147.27.85 -s 4420
    $ sudo nvme list
    Node          SN                  Model           Namespace Usage             Format      FW Rev
    ------------- ------------------- --------------- --------- ----------- --------- -------
    /dev/nvme0n1  610d2342db36e701    Linux           1         2.20 GB / 2.20 GB 512 B + 0 B

You now have a remote NVMe block device exported via an NVMe over Fabrics network using TCP. Now you can write to and read from it like any other locally attached high-performance block device.
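Because the remote namespace looks like a local one, it can be handy to confirm which controllers are actually attached over TCP. The sketch below assumes the kernel exposes `transport` and `subsysnqn` attributes for each controller under `/sys/class/nvme`; the helper name `list_nvme_tcp_controllers` is hypothetical.

```shell
# List NVMe controllers that use the TCP transport.
# $1: sysfs class directory (normally /sys/class/nvme)
# Prints "<controller> <subsystem NQN>" for each TCP-attached controller.
# list_nvme_tcp_controllers is a hypothetical helper name.
list_nvme_tcp_controllers() {
    local class_dir=$1 ctrl
    for ctrl in "$class_dir"/nvme*; do
        # Skip anything without a readable transport attribute
        # (including the unexpanded glob when no controller exists).
        [ -r "$ctrl/transport" ] || continue
        if [ "$(cat "$ctrl/transport")" = "tcp" ]; then
            echo "$(basename "$ctrl") $(cat "$ctrl/subsysnqn")"
        fi
    done
}
```

Calling `list_nvme_tcp_controllers /sys/class/nvme` on the client should list the controller connected to `nvmet-test`. When you are finished, `sudo nvme disconnect -n nvmet-test` drops the connection.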

Performance

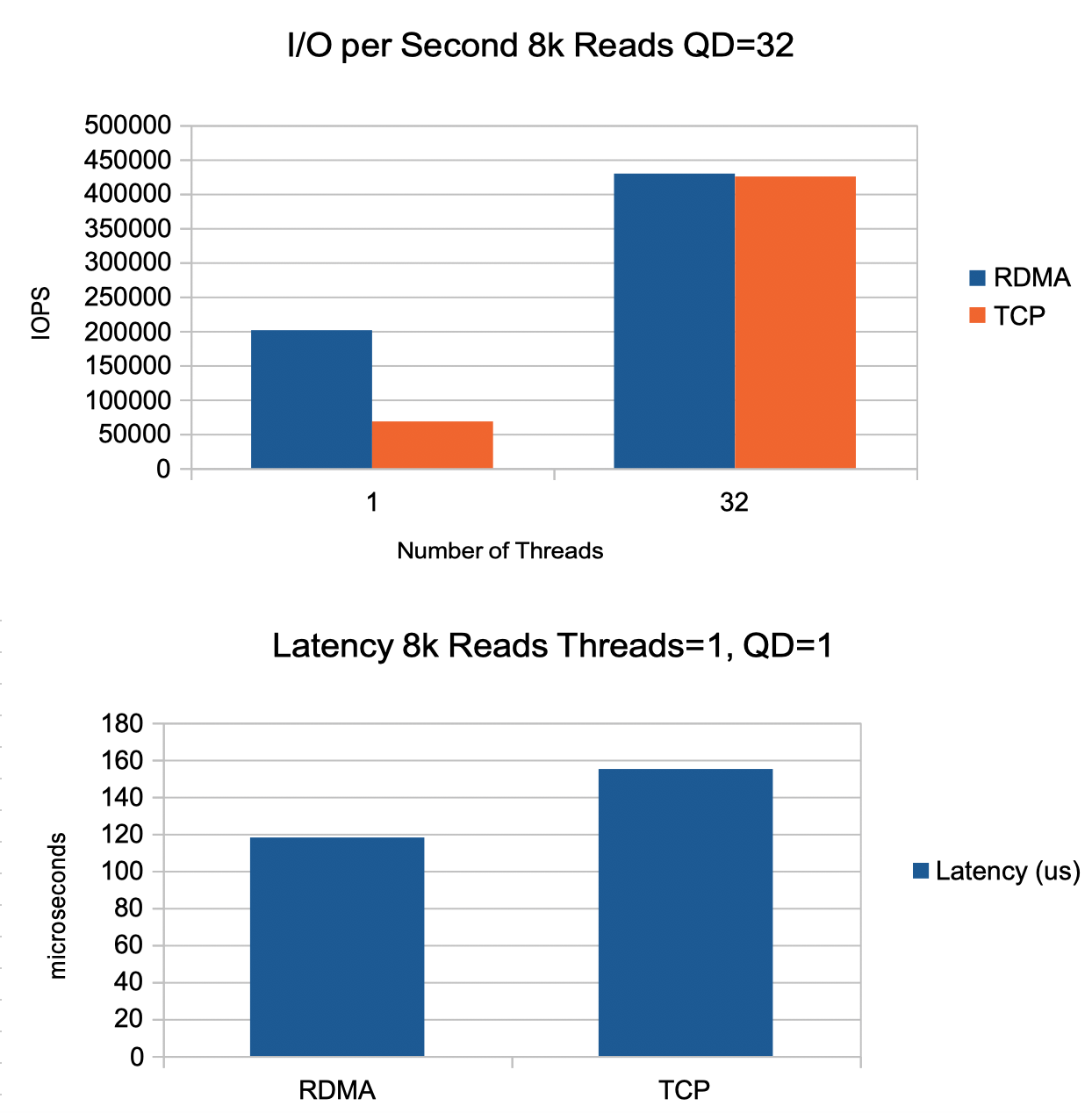

To compare NVMe-RDMA and NVMe-TCP, we used a pair of Oracle X7-2 hosts, each with a Mellanox ConnectX-5, running Oracle Linux (OL8.2) with UEK6 (v5.4.0-1944). A pair of 40Gb ConnectX-5 ports was first configured with RoCEv2 (RDMA) and the performance tests were run. The ports were then reconfigured to use TCP and the tests were rerun. The performance utility fio was used to measure I/Os per second (IOPS) and latency.

When testing for IOPS, a single-threaded 8k read test with a queue depth of 32 showed that RDMA significantly outperformed TCP. When additional threads were added to take better advantage of the NVMe queuing model, TCP IOPS performance increased. When the number of threads reached 32, TCP IOPS performance matched that of RDMA.

Latency was measured using an 8k read from a single thread with a queue depth of 1. TCP latency was 30% higher than RDMA. Much of the difference is due to the buffer copies required by TCP.
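For readers who want to run a similar comparison, the two workloads could be approximated with fio along the following lines. This is only a sketch under stated assumptions, not the job definition used for the results above: the text gives the block size, queue depth, and thread counts, while the random access pattern, the `libaio` engine, direct I/O, and the 60-second runtime are choices made for this example. The function names and the `FIO` override variable are likewise hypothetical.

```shell
# FIO can be overridden (for example FIO=echo) to print the arguments
# instead of running fio. The variable is an illustration aid, not a fio feature.
FIO=${FIO:-fio}

# IOPS test: 8k reads at queue depth 32, one fio job per thread.
# $1: block device, $2: number of threads
# Assumed, not from the article: randread, libaio, direct I/O, 60 s runtime.
run_iops_test() {
    "$FIO" --name=iops --filename="$1" --rw=randread --bs=8k \
        --iodepth=32 --numjobs="$2" --ioengine=libaio --direct=1 \
        --time_based --runtime=60 --group_reporting
}

# Latency test: 8k reads from a single thread at queue depth 1.
# $1: block device
# Same assumptions as above for access pattern, engine, and runtime.
run_latency_test() {
    "$FIO" --name=latency --filename="$1" --rw=randread --bs=8k \
        --iodepth=1 --numjobs=1 --ioengine=libaio --direct=1 \
        --time_based --runtime=60 --group_reporting
}
```

For example, `run_iops_test /dev/nvme0n1 32` would correspond to the 32-thread case, and `run_latency_test /dev/nvme0n1` to the latency measurement. Both functions only read from the device.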

Conclusion

Although NVMe-TCP suffers from newness, TCP does not, and with its dominance in the data center, there is no doubt NVMe-TCP will be a dominant player in the data center SAN space. Over the next year, expect many third-party NVMe-TCP products to be introduced, from NVMe-TCP optimized Ethernet adapters to NVMe-TCP SAN products.