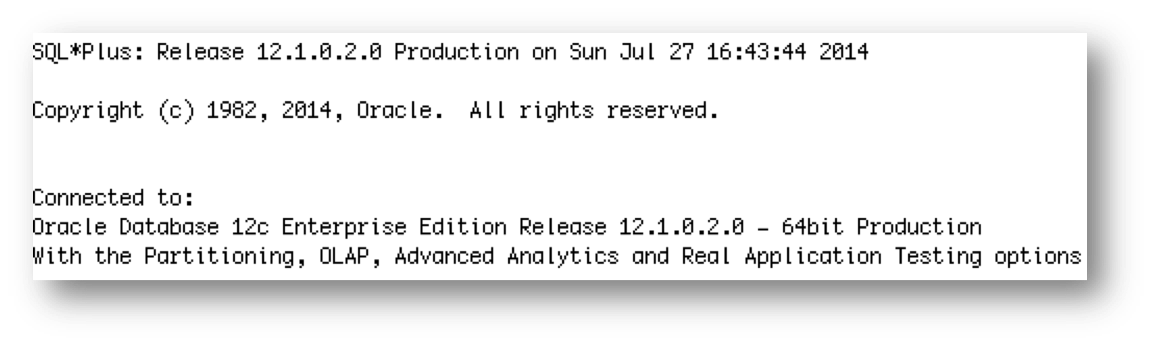

Now that Oracle Database 12.1.0.2 has been officially released, I can finally start sharing more technical details on how Oracle Database In-Memory (Database In-Memory) works.

I thought we should start this series of posts right at the very beginning by answering the most fundamental question: how and when is Database In-Memory installed and enabled?

Let’s start by doing a clean install of 12.1.0.2 and allowing the installer to create a typical single instance database.

So, has In-Memory been installed?

Yes, Oracle Database In-Memory is installed. How do I know? Oracle Database In-Memory is not a bolt-on technology to the Oracle Database. It has been seamlessly integrated into the core of the database as a new component of the System Global Area (SGA). When the Oracle Database is installed, Oracle Database In-Memory is installed. They are one and the same. You can’t unlink it or choose not to install it.

The more important question is whether In-Memory is automatically enabled.

The answer is NO. In order to prove this we are going to need to look at some of the new initialization parameters that control In-Memory.

Six new initialization parameters with the INMEMORY prefix have been introduced to directly control the different aspects of the new in-memory functionality. There is also a new optimizer parameter that controls whether the optimizer takes the IM column store into account when planning queries.

Right now we are only interested in one of these parameters, INMEMORY_SIZE, which determines whether In-Memory is enabled.

Database In-Memory uses an In-Memory column store (IM column store), which is the new component of the SGA, called the In-Memory Area. Data in the IM column store does not reside in the traditional row format used by the Oracle Database; instead it uses a new column format. The size of the IM column store is controlled by the INMEMORY_SIZE parameter. As you can see the INMEMORY_SIZE parameter is set to 0 and therefore Database In-Memory is not enabled, as there is no IM column store allocated. We can also confirm the In-Memory Area is not allocated by querying v$SGA.
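The checks described above can be sketched roughly as follows, assuming a SQL*Plus session with access to the V$ views. OPTIMIZER_INMEMORY_AWARE is my assumption for the optimizer parameter mentioned earlier, and the exact rows returned will depend on your configuration.

```sql
-- List the In-Memory related initialization parameters and their values.
-- SHOW PARAMETER is a SQL*Plus command, not a SQL statement; it matches
-- any parameter whose name contains the given string.
SHOW PARAMETER inmemory

-- A plain SQL equivalent that works from any client.
SELECT name, value
FROM   v$parameter
WHERE  name LIKE 'inmemory%'
OR     name = 'optimizer_inmemory_aware';

-- SGA components. While INMEMORY_SIZE is 0, an "In-Memory Area" row
-- should not appear in this output.
SELECT name, value
FROM   v$sga;
```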

Still don’t trust me? Let’s confirm Database In-Memory is not enabled by examining the feature tracking information. I’m going to force the feature-tracking table to be updated before I query it.
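A minimal sketch of that check, assuming you are connected as a privileged user such as SYS. DBMS_FEATURE_USAGE_INTERNAL is an internal, undocumented package, so treat the call below as an assumption about your environment rather than a supported interface.

```sql
-- Force a feature-usage sample now instead of waiting for the periodic one.
-- Assumption: the internal DBMS_FEATURE_USAGE_INTERNAL package is available
-- and executable by the current user.
EXEC dbms_feature_usage_internal.exec_db_usage_sampling(SYSDATE);

-- Check whether any In-Memory feature has been recorded as used.
SELECT name, detected_usages, currently_used
FROM   dba_feature_usage_statistics
WHERE  name LIKE 'In-Memory%';
```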


If Database In-Memory isn’t enabled out of the box how do you enable it?

Enabling Database In-Memory is actually a multi-step process.

Step 1: First we must allocate memory for the IM column store by setting the INMEMORY_SIZE parameter to a non-zero value of at least 100MB.

ALTER SYSTEM SET inmemory_size = 20G scope=spfile;

Since the IM column store is part of the SGA, we also need to ensure the SGA_TARGET parameter is set large enough to accommodate the new IM column store and all of the other existing components (buffer cache, shared pool, large pool etc.). By default, the installer set the SGA_TARGET to 71G, so I’m going to bump it by 20G.

ALTER SYSTEM SET sga_target = 91G scope=spfile;

Now let’s bounce the database so these parameter changes can take effect.
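For a single-instance database, the restart might look like this from SQL*Plus. This is a sketch that assumes a session connected AS SYSDBA and a database started from the spfile that was just modified.

```sql
-- SQL*Plus commands, run from a session connected AS SYSDBA.
SHUTDOWN IMMEDIATE
STARTUP

-- After the restart, confirm the new values are in effect.
SHOW PARAMETER inmemory_size
SHOW PARAMETER sga_target
```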

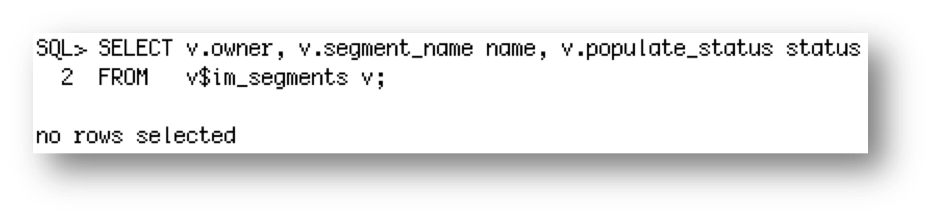

As you can see, we now have an IM column store. But Database In-Memory is still not in use because no objects have been populated into the IM column store. To confirm this we can look at two new v$ views, v$IM_SEGMENTS and v$IM_USER_SEGMENTS, which indicate what objects are in the In-Memory Column Store.
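A quick way to run that check is sketched below. The column selection is my own choice for illustration, and neither query is expected to return rows at this stage.

```sql
-- Segments populated (or being populated) in the IM column store,
-- across all schemas visible to the current user.
SELECT owner, segment_name, populate_status
FROM   v$im_segments;

-- The same information, limited to segments owned by the current user.
SELECT segment_name, populate_status
FROM   v$im_user_segments;
```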

We can also confirm it by checking the feature tracking information again.


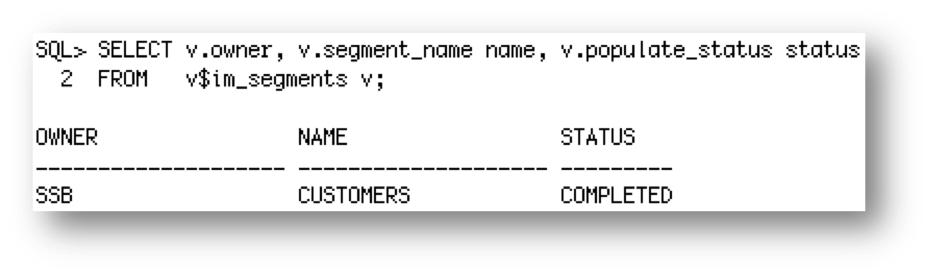

Step 2: Unlike a pure In-Memory database, not all of the objects in an Oracle database need to be populated in the IM column store. The IM column store should be populated with the most performance-critical data in the database. Less performance-critical data can reside on lower cost flash or disk. Of course, if your database is small enough, you can populate all of your tables into the IM column store. Only objects with the INMEMORY attribute are populated into the IM column store. The INMEMORY attribute can be specified on a tablespace, table, (sub)partition, or materialized view. In this case, let’s enable the INMEMORY attribute on one of the user tables, CUSTOMERS.

ALTER TABLE SSB.customers INMEMORY;
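To confirm the attribute has been set, you can check the data dictionary. This sketch assumes access to DBA_TABLES; USER_TABLES exposes the same columns if you are connected as the table owner.

```sql
-- INMEMORY should show ENABLED once the attribute is set. The priority and
-- compression columns show the defaults that were applied.
SELECT table_name, inmemory, inmemory_priority, inmemory_compression
FROM   dba_tables
WHERE  owner = 'SSB'
AND    table_name = 'CUSTOMERS';
```

Setting the attribute only marks the table as a candidate; it does not by itself populate anything into the IM column store.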

By default Oracle automatically decides when to populate the table into the In-Memory Column Store. This is also referred to as “on demand”, as Oracle typically populates the table after it has been accessed for the first time. So, let’s run a query on the CUSTOMERS table.

SELECT cust_valid, COUNT(*) FROM customers GROUP BY cust_valid;

Now if we check v$IM_SEGMENTS we see the CUSTOMERS table has been populated in the IM column store.
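A sketch of that check, with column aliases I chose for readability. Population runs in the background, so the status may not show as complete immediately after the first query.

```sql
-- Population progress for the CUSTOMERS table.
-- BYTES_NOT_POPULATED drops to 0 once the whole segment is in the column store.
SELECT segment_name,
       populate_status,
       bytes         AS on_disk_bytes,
       inmemory_size AS in_memory_bytes,
       bytes_not_populated
FROM   v$im_segments
WHERE  owner = 'SSB'
AND    segment_name = 'CUSTOMERS';
```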

If we check the feature tracking information now, we will see Database In-Memory is enabled and being used.


If you want to remove a table from the IM column store you simply need to specify the NO INMEMORY attribute.

ALTER TABLE SSB.customers NO INMEMORY;

In next week’s post, I will explain in a lot more detail all of the different sub-clauses of the INMEMORY attribute and what all of the columns in the new v$ views represent.

Original publish date: July 28, 2014