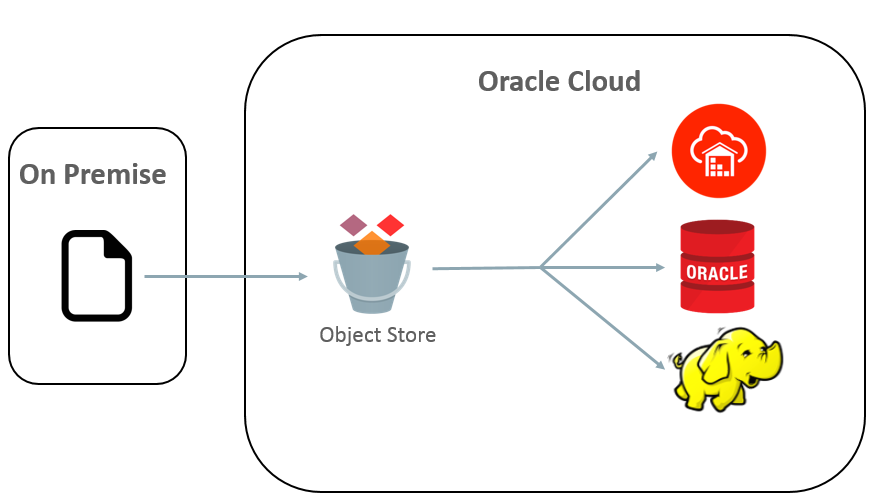

One of the clearest trends in the IT market is the Cloud, and one of the clearest trends in the Cloud is Object Store. You may find some introductory information here. Many Cloud providers, including Oracle, assume that the data lifecycle starts in Object Store:

You land the data there and then either read it or load it with different services, such as ADWC or BDCS. Oracle has two flavors of Object Store Service (OSS): OSS on OCI (Oracle Cloud Infrastructure) and OSS on OCI-C (Oracle Cloud Infrastructure Classic).

In this post, I’m going to focus on OSS on OCI-C, mostly because OSS on OCI was perfectly explained by Hermann Baer here and by Rachna Thusoo here.

Upload/Download files.

As in Hermann’s blog, I’ll focus on the most frequent operations: Upload and Download. There are multiple ways to do so. For example:

– Oracle Cloud WebUI

– REST API

– FTM CLI tool

– Third-party tools such as CloudBerry

– Big Data Manager (via ODCP)

– Hadoop client with Swift API

– Oracle Storage Software Appliance

Let’s start with the easiest one – Web Interface.

Upload/Download files. WebUI.

First, you have to log in to the cloud services:

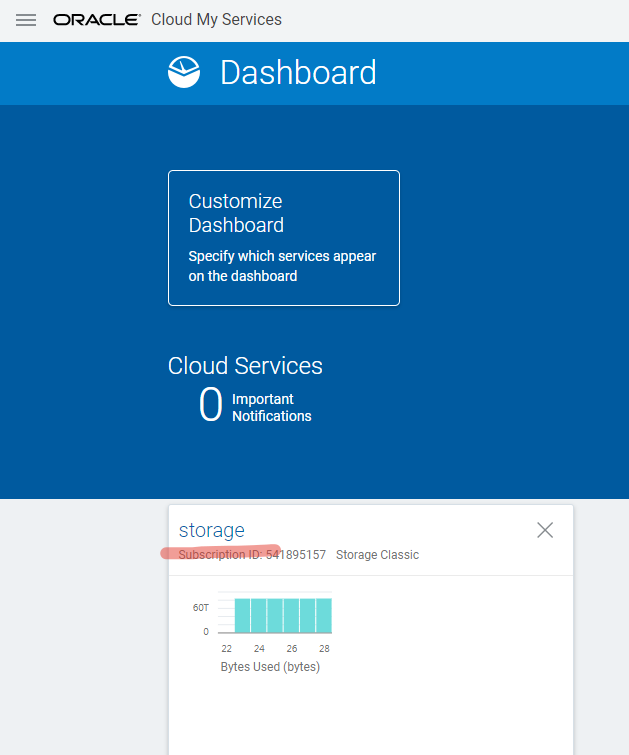

Then you have to go to Object Store Service:

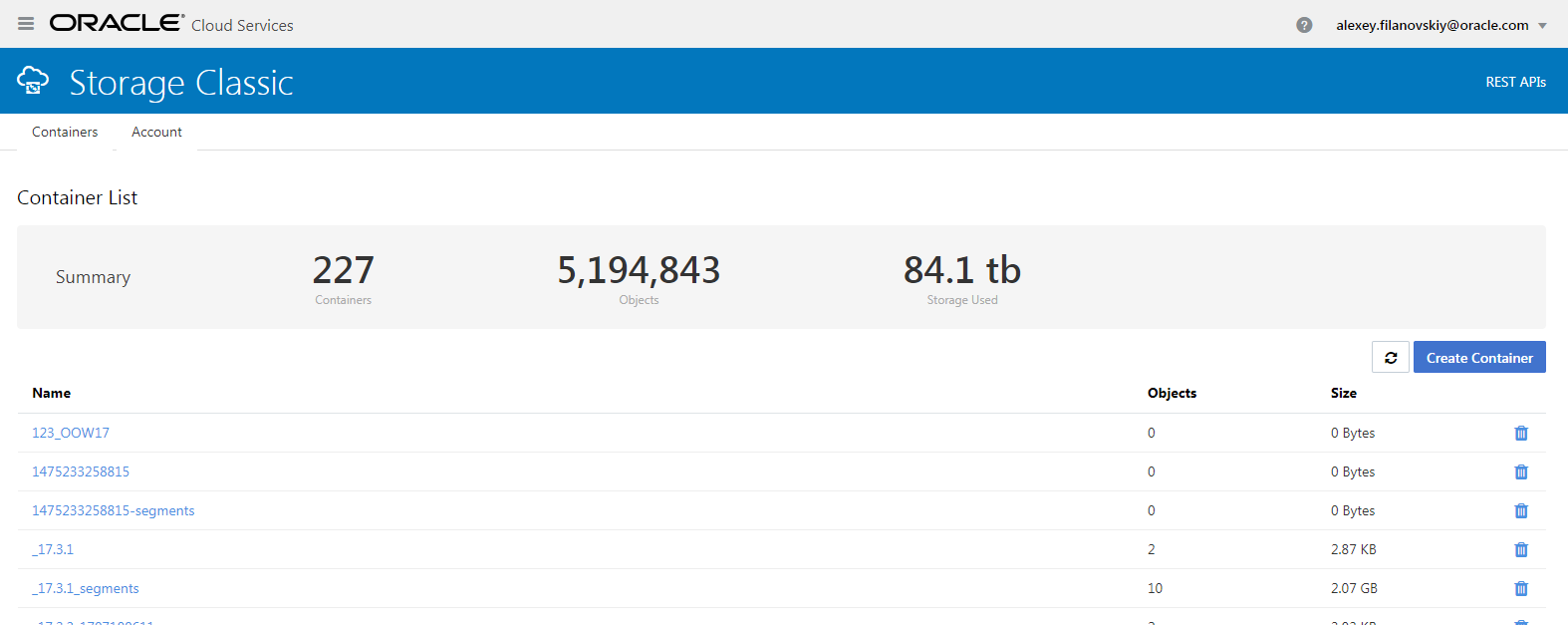

After this, drill down into the Service Console, and you will see the list of containers within your OSS:

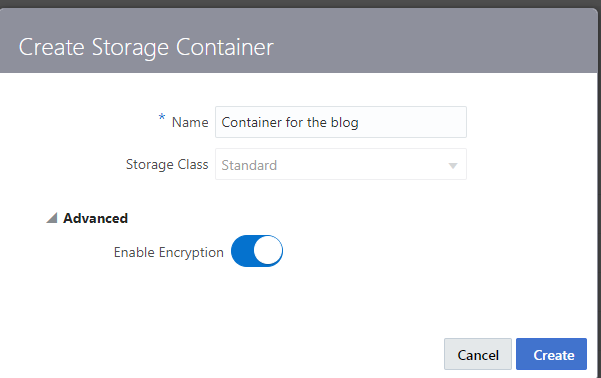

To create a new container (a bucket in OCI terminology), simply click “Create Container” and give it a name:

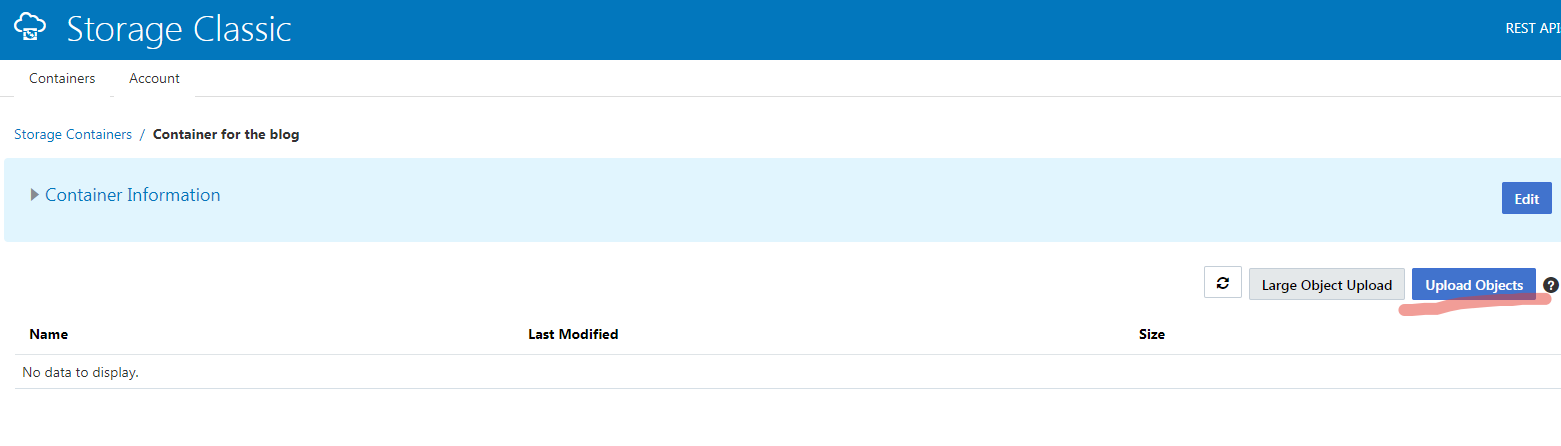

After it has been created, click on it and then click the “Upload object” button:

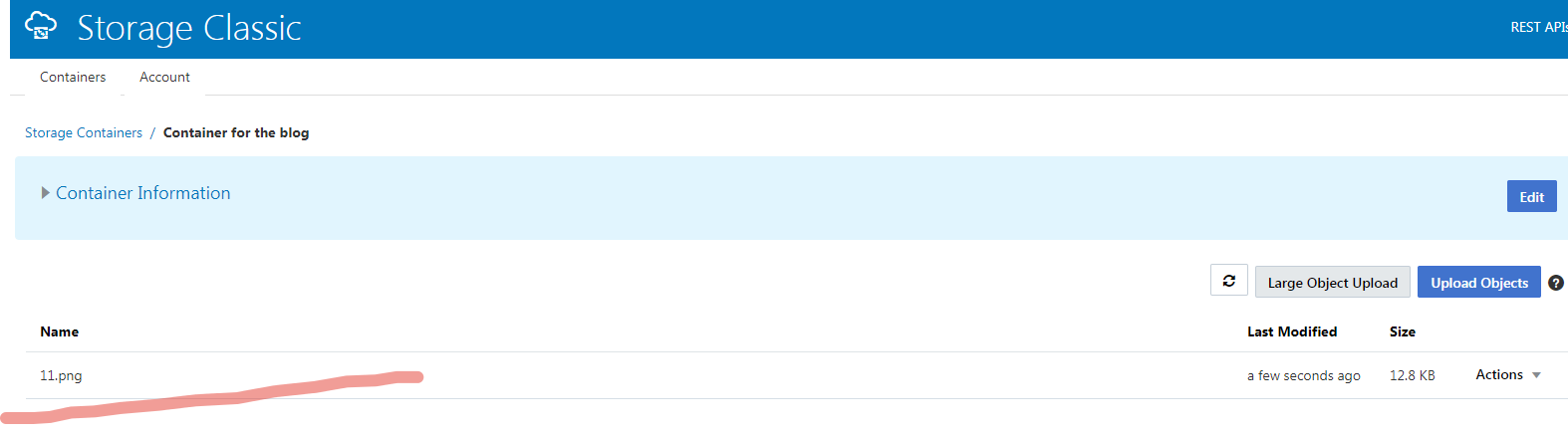

Click, click again, and here we are, the file is in the container:

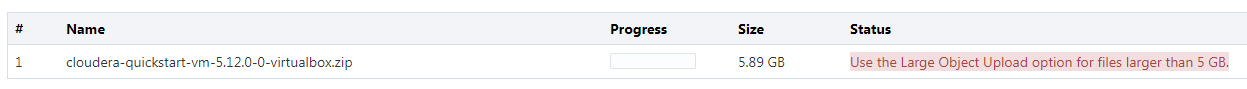

Let’s try to upload a bigger file. Oops… we got an error:

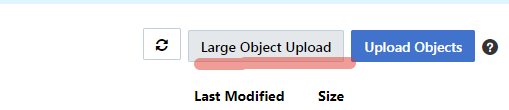

So it seems we have a 5GB limit on a single upload. Fortunately, there is a “Large object upload” option:

It allows us to upload files bigger than 5GB:

And what about downloading? It’s easy: simply click download, and the file lands on the local file system.
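The 5GB limit applies to a single object, so a bigger file has to be stored as a series of smaller segments. As a rough sketch of the arithmetic behind that (the helper names are hypothetical, the 100 MB segment size is an arbitrary illustrative choice, and I assume "5GB" means 5 * 1024^3 bytes), the number of segments is a simple ceiling division:

```shell
# segments_needed FILE_BYTES SEGMENT_BYTES
# Prints how many segments of SEGMENT_BYTES are needed to hold FILE_BYTES
# (ceiling division). Hypothetical helper for this sketch.
segments_needed() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

# needs_segmentation FILE_BYTES
# Succeeds when the file is larger than the single-object limit.
# Assumption: the 5GB limit is taken as 5 * 1024^3 bytes.
needs_segmentation() {
  [ "$1" -gt $(( 5 * 1024 * 1024 * 1024 )) ]
}

# Example: a 10 GB file split into 100 MB segments.
FILE_BYTES=$(( 10 * 1024 * 1024 * 1024 ))
SEGMENT_BYTES=$(( 100 * 1024 * 1024 ))
if needs_segmentation "${FILE_BYTES}"; then
  echo "segments: $(segments_needed "${FILE_BYTES}" "${SEGMENT_BYTES}")"
fi
```

For the 10 GB example this reports 103 segments: 102 full ones plus one partial segment at the end.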

Upload/Download files. REST API.

The WebUI may be a good way to upload data when a human operates it, but it’s not very convenient for scripting. If you want to automate file uploads, you may use the REST API. You may find all the details regarding the REST API here; alternatively, you may use the script below, which hints at some basic commands:

    #!/bin/bash
    shopt -s expand_aliases
    alias echo="echo -e"

    USER="alexey.filanovskiy@oracle.com"
    PASS="MySecurePassword"
    OSS_USER="storage-a424392:${USER}"
    OSS_PASS="${PASS}"
    OSS_URL="https://storage-a424392.storage.oraclecloud.com/auth/v1.0"

    echo "curl -k -sS -H \"X-Storage-User: ${OSS_USER}\" -H \"X-Storage-Pass:${OSS_PASS}\" -i \"${OSS_URL}\""
    out=`curl -k -sS -H "X-Storage-User: ${OSS_USER}" -H "X-Storage-Pass:${OSS_PASS}" -i "${OSS_URL}"`
    while [ $? -ne 0 ]; do
        echo "Retrying to get token\n"
        sleep 1;
        out=`curl -k -sS -H "X-Storage-User: ${OSS_USER}" -H "X-Storage-Pass:${OSS_PASS}" -i "${OSS_URL}"`
    done

    AUTH_TOKEN=`echo "${out}" | grep "X-Auth-Token" | sed 's/X-Auth-Token: //;s/\r//'`
    STORAGE_TOKEN=`echo "${out}" | grep "X-Storage-Token" | sed 's/X-Storage-Token: //;s/\r//'`
    STORAGE_URL=`echo "${out}" | grep "X-Storage-Url" | sed 's/X-Storage-Url: //;s/\r//'`

    echo "Token and storage URL:"
    echo "\tOSS url: ${OSS_URL}"
    echo "\tauth token: ${AUTH_TOKEN}"
    echo "\tstorage token: ${STORAGE_TOKEN}"
    echo "\tstorage url: ${STORAGE_URL}"

    echo "\nContainers:"
    for CONTAINER in `curl -k -sS -u "${USER}:${PASS}" "${STORAGE_URL}"`; do
        echo "\t${CONTAINER}"
    done

    FILE_SIZE=$((1024*1024*1))
    CONTAINER="example_container"
    FILE="file.txt"
    LOCAL_FILE="./${FILE}"
    FILE_AT_DIR="/path/file.txt"
    LOCAL_FILE_AT_DIR=".${FILE_AT_DIR}"
    REMOTE_FILE="${CONTAINER}/${FILE}"
    REMOTE_FILE_AT_DIR="${CONTAINER}${FILE_AT_DIR}"

    for f in "${LOCAL_FILE}" "${LOCAL_FILE_AT_DIR}"; do
        if [ ! -e "${f}" ]; then
            echo "\nInfo: File "${f}" does not exist. Creating ${f}"
            d=`dirname "${f}"`
            mkdir -p "${d}";
            tr -dc A-Za-z0-9 </dev/urandom | head -c "${FILE_SIZE}" > "${f}"
            #dd if="/dev/random" of="${f}" bs=1 count=0 seek=${FILE_SIZE} &> /dev/null
        fi;
    done;

    echo "\nActions:"
    echo "\tListing containers:\t\t\t\tcurl -k -vX GET -u \"${USER}:${PASS}\" \"${STORAGE_URL}/\""
    echo "\tCreate container \"oss://${CONTAINER}\":\t\tcurl -k -vX PUT -u \"${USER}:${PASS}\" \"${STORAGE_URL}/${CONTAINER}\""
    echo "\tListing objects at container \"oss://${CONTAINER}\":\tcurl -k -vX GET -u \"${USER}:${PASS}\" \"${STORAGE_URL}/${CONTAINER}/\""
    echo "\n\tUpload \"${LOCAL_FILE}\" to \"oss://${REMOTE_FILE}\":\tcurl -k -vX PUT -T \"${LOCAL_FILE}\" -u \"${USER}:${PASS}\" \"${STORAGE_URL}/${CONTAINER}/\""
    echo "\tDownload \"oss://${REMOTE_FILE}\" to \"${LOCAL_FILE}\":\tcurl -k -vX GET -u \"${USER}:${PASS}\" \"${STORAGE_URL}/${REMOTE_FILE}\" > \"${LOCAL_FILE}\""
    echo "\n\tDelete \"oss://${REMOTE_FILE}\":\tcurl -k -vX DELETE -u \"${USER}:${PASS}\" \"${STORAGE_URL}/${REMOTE_FILE}\""
    echo "\ndone"

I put the content of this script into the file oss_operations.sh, gave it execute permission, and ran it:

    $ chmod +x oss_operations.sh
    $ ./oss_operations.sh

The output will look like this:

    curl -k -sS -H "X-Storage-User: storage-a424392:alexey.filanovskiy@oracle.com" -H "X-Storage-Pass:MySecurePassword" -i "https://storage-a424392.storage.oraclecloud.com/auth/v1.0"
    Token and storage URL:
        OSS url: https://storage-a424392.storage.oraclecloud.com/auth/v1.0
        auth token: AUTH_tk45d49d9bcd65753f81bad0eae0aeb3db
        storage token: AUTH_tk45d49d9bcd65753f81bad0eae0aeb3db
        storage url: https://storage.us2.oraclecloud.com/v1/storage-a424392

    Containers:
        123_OOW17
        1475233258815
        1475233258815-segments
        Container
        ...

    Actions:
        Listing containers: curl -k -vX GET -u "alexey.filanovskiy@oracle.com:MySecurePassword" "https://storage.us2.oraclecloud.com/v1/storage-a424392/"
        Create container "oss://example_container": curl -k -vX PUT -u "alexey.filanovskiy@oracle.com:MySecurePassword" "https://storage.us2.oraclecloud.com/v1/storage-a424392/example_container"
        Listing objects at container "oss://example_container": curl -k -vX GET -u "alexey.filanovskiy@oracle.com:MySecurePassword" "https://storage.us2.oraclecloud.com/v1/storage-a424392/example_container/"

        Upload "./file.txt" to "oss://example_container/file.txt": curl -k -vX PUT -T "./file.txt" -u "alexey.filanovskiy@oracle.com:MySecurePassword" "https://storage.us2.oraclecloud.com/v1/storage-a424392/example_container/"
        Download "oss://example_container/file.txt" to "./file.txt": curl -k -vX GET -u "alexey.filanovskiy@oracle.com:MySecurePassword" "https://storage.us2.oraclecloud.com/v1/storage-a424392/example_container/file.txt" > "./file.txt"

        Delete "oss://example_container/file.txt": curl -k -vX DELETE -u "alexey.filanovskiy@oracle.com:MySecurePassword" "https://storage.us2.oraclecloud.com/v1/storage-a424392/example_container/file.txt"
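The script fetches an auth token but then keeps passing the user name and password with every request. As a small sketch of the alternative (the helper name header_value is hypothetical, and the sample response, including its status line, is a stand-in built from the output above rather than a captured one), the token can be pulled out of the response headers and sent in an X-Auth-Token header instead:

```shell
# header_value HEADER_NAME
# Reads a raw HTTP response (as produced by `curl -i`) on stdin and prints the
# value of the first header named HEADER_NAME, matched case-insensitively,
# with carriage returns removed. Hypothetical helper for this sketch.
header_value() {
  tr -d '\r' | awk -v name="$1" '
    BEGIN { name = tolower(name) ":" }
    tolower(substr($0, 1, length(name))) == name {
      value = substr($0, length(name) + 1)
      sub(/^[ \t]+/, "", value)
      print value
      exit
    }'
}

# Stand-in for the authentication response (assumed shape, not a real capture).
RESPONSE='HTTP/1.1 200 OK
X-Auth-Token: AUTH_tk45d49d9bcd65753f81bad0eae0aeb3db
X-Storage-Token: AUTH_tk45d49d9bcd65753f81bad0eae0aeb3db
X-Storage-Url: https://storage.us2.oraclecloud.com/v1/storage-a424392'

AUTH_TOKEN=$(printf '%s\n' "${RESPONSE}" | header_value "X-Auth-Token")
STORAGE_URL=$(printf '%s\n' "${RESPONSE}" | header_value "x-storage-url")

# Print (do not run) a listing request that authenticates with the token.
echo "curl -sS -H \"X-Auth-Token: ${AUTH_TOKEN}\" \"${STORAGE_URL}/example_container/\""
```

The command is only printed here, so the sketch runs without network access; in a real script you would run curl directly with the same header.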

Upload/Download files. FTM CLI.

The REST API may seem a bit cumbersome and quite hard to use. The good news is that there is an intermediate solution, a Command Line Interface called FTM CLI. Again, the full documentation is available here, but I’d like to briefly explain what you can do with FTM CLI. You can download it here, and after unpacking it’s ready to use!

    $ unzip ftmcli-v2.4.2.zip
    ...
    $ cd ftmcli-v2.4.2
    $ ls -lrt
    total 120032
    -rwxr-xr-x 1 opc opc      1272 Jan 29 08:42 README.txt
    -rw-r--r-- 1 opc opc  15130743 Mar  7 12:59 ftmcli.jar
    -rw-rw-r-- 1 opc opc 107373568 Mar 22 13:37 file.txt
    -rw-rw-r-- 1 opc opc       641 Mar 23 10:34 ftmcliKeystore
    -rw-rw-r-- 1 opc opc       315 Mar 23 10:34 ftmcli.properties
    -rw-rw-r-- 1 opc opc    373817 Mar 23 15:24 ftmcli.log

You may note that there is a file called ftmcli.properties; configuring it once may simplify your life. You may find the documentation here, and this is my example of the config:

    $ cat ftmcli.properties
    #saving authkey
    #Fri Mar 30 21:15:25 UTC 2018
    rest-endpoint=https\://storage-a424392.storage.oraclecloud.com/v1/storage-a424392
    retries=5
    user=alexey.filanovskiy@oracle.com
    segments-container=all_segments
    max-threads=15
    storage-class=Standard
    segment-size=100
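If you want to reuse these settings in your own scripts, the file is easy to read from the shell. Here is a minimal sketch (get_prop is a hypothetical helper of mine, not part of FTM CLI) that looks up one key and undoes the `\:` escaping that Java applies to colons in properties files:

```shell
# get_prop FILE KEY
# Prints the value of KEY from a Java-style .properties file, skipping
# comment lines and turning the escaped "\:" back into ":".
# Hypothetical helper for this sketch.
get_prop() {
  awk -v key="$2" '
    /^[ \t]*[#!]/ { next }
    {
      pos = index($0, "=")
      if (pos > 0 && substr($0, 1, pos - 1) == key) {
        value = substr($0, pos + 1)
        gsub(/\\:/, ":", value)
        print value
        exit
      }
    }' "$1"
}

# Example with a throwaway copy of a few lines from the config above.
PROPS=$(mktemp)
cat > "${PROPS}" <<'EOF'
#saving authkey
rest-endpoint=https\://storage-a424392.storage.oraclecloud.com/v1/storage-a424392
max-threads=15
segment-size=100
EOF

echo "endpoint: $(get_prop "${PROPS}" rest-endpoint)"
echo "threads:  $(get_prop "${PROPS}" max-threads)"
rm -f "${PROPS}"
```

The sketch only handles simple `key=value` lines like the ones in this file; it does not cover the full properties syntax (line continuations, `key: value`, Unicode escapes).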

Now we have all the connection details, and using the CLI becomes as simple as possible. There are a few basic commands available in FTM CLI, but as a first step I’d suggest authenticating the user (so you enter the password only once):

$ java -jar ftmcli.jar list --save-auth-key

Enter your password:

If you use “--save-auth-key”, it will save your password and will not ask you for it next time:

$ java -jar ftmcli.jar list

123_OOW17

1475233258815

...

You may refer to the documentation for the full list of commands, or simply run ftmcli without any arguments:

    $ java -jar ftmcli.jar
    ...
    Commands:
    upload            Upload a file or a directory to a container.
    download          Download an object or a virtual directory from a container.
    create-container  Create a container.
    restore           Restore an object from an Archive container.
    list              List containers in the account or objects in a container.
    delete            Delete a container in the account or an object in a container.
    describe          Describes the attributes of a container in the account or an object in a container.
    set               Set the metadata attribute(s) of a container in the account or an object in a container.
    set-crp           Set a replication policy for a container.
    copy              Copy an object to a destination container.

Let’s walk through a standard flow for OSS – create a container, upload a file there, list the objects in the container, describe the container properties, and delete it.

    # Create container
    $ java -jar ftmcli.jar create-container container_for_blog
    Name: container_for_blog
    Object Count: 0
    Bytes Used: 0
    Storage Class: Standard
    Creation Date: Fri Mar 30 21:50:15 UTC 2018
    Last Modified: Fri Mar 30 21:50:14 UTC 2018
    Metadata
    ---------------
    x-container-write: a424392.storage.Storage_ReadWriteGroup
    x-container-read: a424392.storage.Storage_ReadOnlyGroup,a424392.storage.Storage_ReadWriteGroup
    content-type: text/plain;charset=utf-8
    accept-ranges: bytes
    Custom Metadata
    ---------------
    x-container-meta-policy-georeplication: container

# Upload file to container

$ java -jar ftmcli.jar upload container_for_blog file.txt

Uploading file: file.txt to container: container_for_blog

File successfully uploaded: file.txt

Estimated Transfer Rate: 16484KB/s

# List files into Container

$ java -jar ftmcli.jar list container_for_blog

file.txt

# Get Container Metadata

    $ java -jar ftmcli.jar describe container_for_blog
    Name: container_for_blog
    Object Count: 1
    Bytes Used: 434
    Storage Class: Standard
    Creation Date: Fri Mar 30 21:50:15 UTC 2018
    Last Modified: Fri Mar 30 21:50:14 UTC 2018
    Metadata
    ---------------
    x-container-write: a424392.storage.Storage_ReadWriteGroup
    x-container-read: a424392.storage.Storage_ReadOnlyGroup,a424392.storage.Storage_ReadWriteGroup
    content-type: text/plain;charset=utf-8
    accept-ranges: bytes
    Custom Metadata
    ---------------
    x-container-meta-policy-georeplication: container

    # Delete container
    $ java -jar ftmcli.jar delete container_for_blog
    ERROR:Delete failed. Container is not empty.

    # Delete with force option
    $ java -jar ftmcli.jar delete -f container_for_blog
    Container successfully deleted: container_for_blog
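The same flow is easy to wrap into a script. Below is a minimal sketch that uses only the commands shown above; FTMCLI_JAR, DRY_RUN and the function names are my own hypothetical additions, and chaining the steps with `&&` assumes the CLI returns a non-zero exit status on failure, which I have not verified. By default it only prints the commands, so you can review them before running anything:

```shell
# Hypothetical settings for this sketch.
FTMCLI_JAR="${FTMCLI_JAR:-ftmcli.jar}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = actually run them

# ftm ARGS...
# Runs (or, in dry-run mode, prints) one FTM CLI command.
ftm() {
  if [ "${DRY_RUN}" = "1" ]; then
    echo "java -jar ${FTMCLI_JAR} $*"
  else
    java -jar "${FTMCLI_JAR}" "$@"
  fi
}

# container_roundtrip CONTAINER FILE
# Create a container, upload one file, inspect it, then force-delete it.
# Stops at the first step that reports failure (assumed via exit status).
container_roundtrip() {
  ftm create-container "$1" &&
  ftm upload "$1" "$2" &&
  ftm list "$1" &&
  ftm describe "$1" &&
  ftm delete -f "$1"
}

container_roundtrip container_for_blog file.txt
```

Set DRY_RUN=0 to execute the commands for real, after saving the auth key as described earlier so that the CLI does not prompt for a password.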

Another great thing about FTM CLI is that it lets you easily tune upload performance out of the box. In ftmcli.properties there is a property called “max-threads”, which may vary between 1 and 100. Here is a test case that illustrates this:

    # Generate 10GB file
    $ dd if=/dev/zero of=file.txt count=10240 bs=1048576

    # Upload file in one thread (has around 18MB/sec rate)
    $ java -jar ftmcli.jar upload container_for_blog /home/opc/file.txt
    Uploading file: /home/opc/file.txt to container: container_for_blog
    File successfully uploaded: /home/opc/file.txt
    Estimated Transfer Rate: 18381KB/s

    # Change number of threads from 1 to 99 in config file
    $ sed -i -e 's/max-threads=1/max-threads=99/g' ftmcli.properties

    # Upload file in 99 threads (has around 68MB/sec rate)
    $ java -jar ftmcli.jar upload container_for_blog /home/opc/file.txt
    Uploading file: /home/opc/file.txt to container: container_for_blog
    File successfully uploaded: /home/opc/file.txt
    Estimated Transfer Rate: 68449KB/s
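To put these two rates into perspective, here is a quick back-of-the-envelope calculation. It is only a sketch: the function names are hypothetical, and I assume the reported "KB/s" means 1024 bytes per second, so the 10GB file is 10240 * 1024 KB.

```shell
# transfer_seconds SIZE_KB RATE_KB_PER_S
# Prints the estimated transfer time in whole seconds (rounded).
transfer_seconds() {
  awk -v size="$1" -v rate="$2" 'BEGIN { printf "%.0f\n", size / rate }'
}

# speedup SLOW_RATE FAST_RATE
# Prints how many times faster FAST_RATE is, with two decimals.
speedup() {
  awk -v slow="$1" -v fast="$2" 'BEGIN { printf "%.2f\n", fast / slow }'
}

SIZE_KB=$(( 10240 * 1024 ))   # the test file: 10240 blocks of 1048576 bytes
echo "1 thread:   $(transfer_seconds "${SIZE_KB}" 18381) s"
echo "99 threads: $(transfer_seconds "${SIZE_KB}" 68449) s"
echo "speedup:    $(speedup 18381 68449)x"
```

Under these assumptions the upload takes about 570 seconds with one thread and about 153 seconds with 99 threads, a speedup of roughly 3.7x. In other words, more threads help a lot, but the gain in this test is far from linear.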

So it’s a very simple and at the same time powerful tool for working with Object Store, and it may help you script your operations.

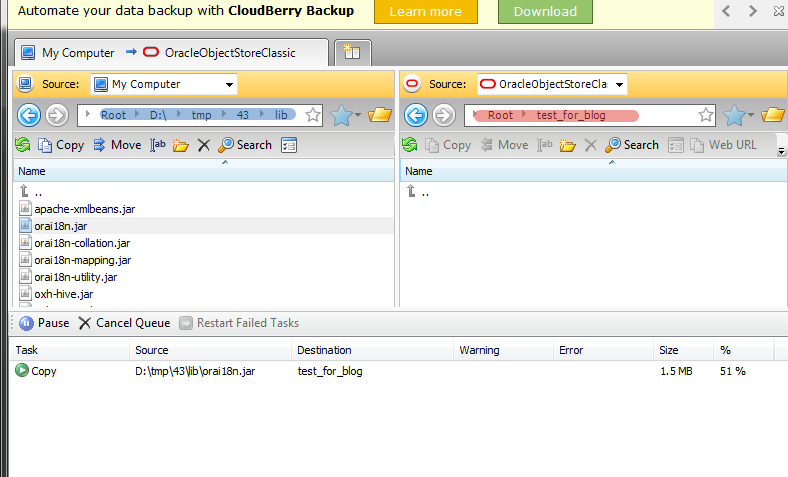

Upload/Download files. CloudBerry.

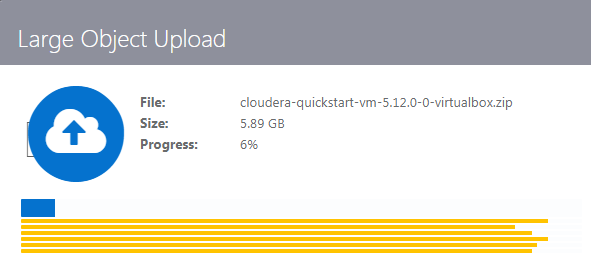

Another way to interact with OSS is to use an application; for example, you may use CloudBerry Explorer for OpenStack Storage. There is a great blog post that explains how to configure CloudBerry for Oracle Object Store Service Classic, so I will start from the point where it is already configured. When you log in, it looks like this:

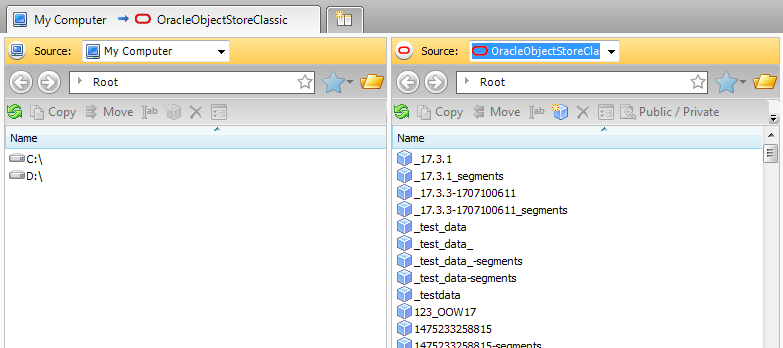

You may easily create a container in CloudBerry:

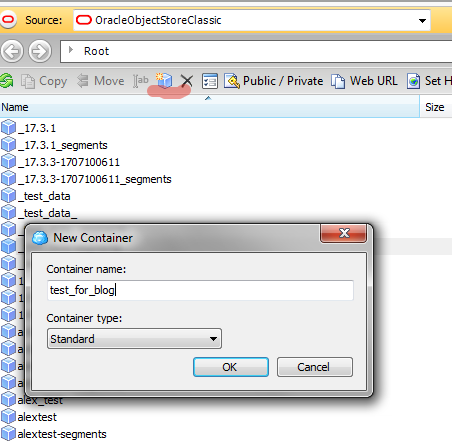

And for sure you may easily copy data from your local machine to OSS:

There is nothing to add here: CloudBerry is a convenient tool for browsing Object Stores and making small copies between a local machine and OSS. For me personally, it looks like TotalCommander for OSS.

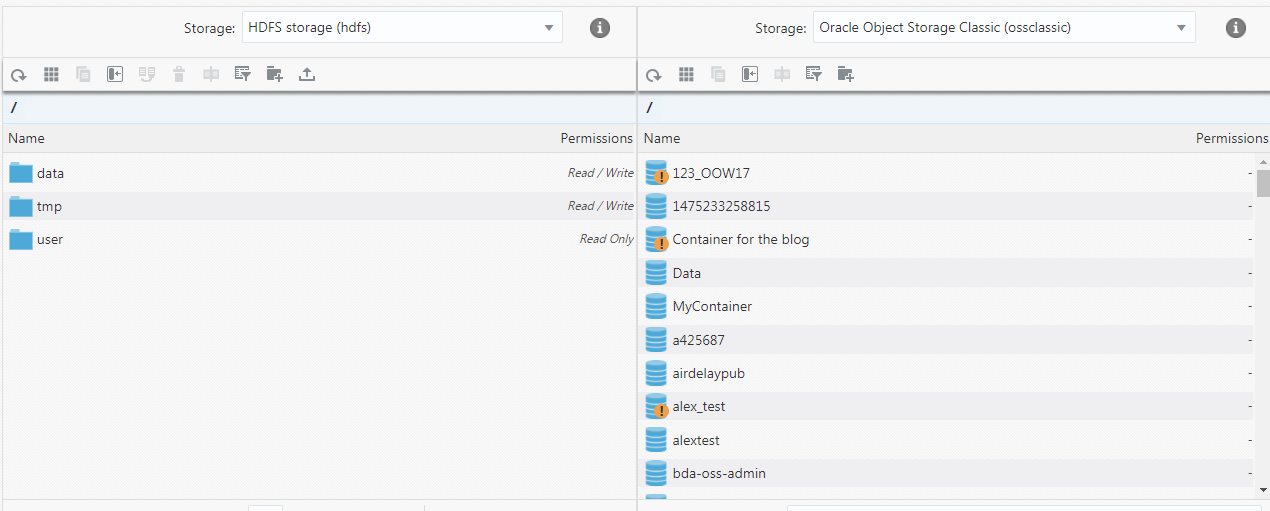

Upload/Download files. Big Data Manager and ODCP.

Big Data Cloud Service (BDCS) has a great component called Big Data Manager. This is a tool developed by Oracle that allows you to manage and monitor a Hadoop cluster. Among other features, Big Data Manager (BDM) allows you to register an Object Store in the Stores browser and easily drag and drop data between OSS and other sources (Database, HDFS…). When you copy data to or from HDFS, you use ODCP, an optimized version of the Hadoop Distcp tool.

This is a very fast way to copy data back and forth. Fortunately, JP has already written about this feature, so I can simply give a link. If you want to see concrete performance numbers, you can go here to the A-Team blog page.

Without Big Data Manager, you can manually register OSS on a Linux machine and invoke the copy command from bash. The documentation will show you all the details; I will show just one example:

    # add account:
    $ export CM_ADMIN=admin
    $ export CM_PASSWORD=SuperSecurePasswordCloderaManager
    $ export CM_URL=https://cfclbv8493.us2.oraclecloud.com:7183
    $ bda-oss-admin add_swift_cred --swift-username "storage-a424392:alexey.filanovskiy@oracle.com" --swift-password "SecurePasswordForSwift" --swift-storageurl "https://storage-a424392.storage.oraclecloud.com/auth/v2.0/tokens" --swift-provider bdcstorage

    # list of credentials:
    $ bda-oss-admin list_swift_creds
    Provider: bdcstorage
    Username: storage-a424392:alexey.filanovskiy@oracle.com
    Storage URL: https://storage-a424392.storage.oraclecloud.com/auth/v2.0/tokens

    # check files on OSS swift://[container name].[Provider created step before]/:
    $ hadoop fs -ls swift://alextest.bdcstorage/
    18/03/31 01:01:13 WARN http.RestClientBindings: Property fs.swift.bdcstorage.property.loader.chain is not set
    Found 3 items
    -rw-rw-rw-   1  279153664 2018-03-07 00:08 swift://alextest.bdcstorage/bigdata.file.copy
    drwxrwxrwx   -          0 2018-03-07 00:31 swift://alextest.bdcstorage/customer
    drwxrwxrwx   -          0 2018-03-07 00:30 swift://alextest.bdcstorage/customer_address

Now you have OSS configured and ready to use. You may copy data with ODCP; here you may find the entire list of sources and destinations. For example, if you want to copy data from HDFS to OSS, you have to run:

$ odcp hdfs:///tmp/file.txt swift://alextest.bdcstorage/

ODCP is a very efficient way to move data from HDFS to Object Store and back.

If you come from the Hadoop world and are used to the Hadoop fs API, you may use it with Object Store as well (after configuring it first). For example, to load data into OSS, you need to run:

$ hadoop fs -put /home/opc/file.txt swift://alextest.bdcstorage/file1.txt
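Both commands address the Object Store with the same URI pattern, swift://[container name].[provider]/[path]. If you script such copies, a tiny helper keeps that pattern in one place. This is just a sketch: swift_uri is a hypothetical function of mine, and the commands are printed rather than executed.

```shell
# swift_uri CONTAINER PROVIDER [PATH]
# Prints swift://CONTAINER.PROVIDER/PATH, dropping any leading slashes from
# PATH so the result never contains a double slash after the provider.
# Hypothetical helper; the body runs in a subshell to keep "path" local.
swift_uri() (
  path="${3:-}"
  while [ "${path#/}" != "${path}" ]; do
    path="${path#/}"
  done
  printf 'swift://%s.%s/%s\n' "$1" "$2" "${path}"
)

# Print the two copy commands from this section.
echo "odcp hdfs:///tmp/file.txt $(swift_uri alextest bdcstorage)"
echo "hadoop fs -put /home/opc/file.txt $(swift_uri alextest bdcstorage /file1.txt)"
```

Here bdcstorage is the provider name registered with bda-oss-admin earlier, and alextest is the container.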

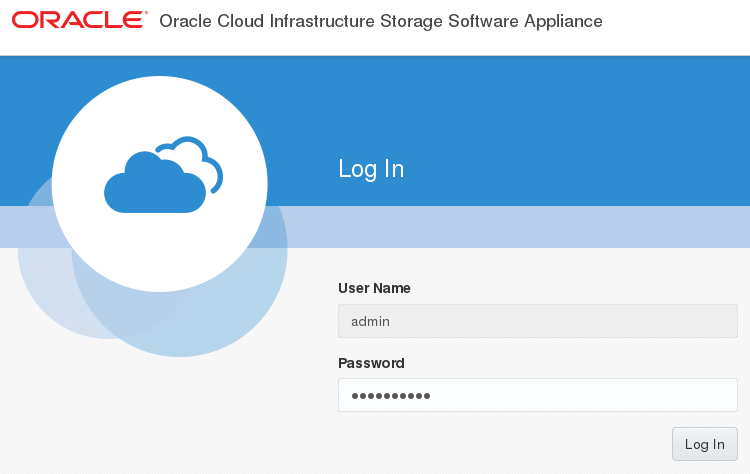

Upload/Download files. Oracle Storage Cloud Software Appliance.

Object Store is a fairly new concept, and of course there is a way to smooth the migration to it. Years ago, when HDFS was new and undiscovered, many people didn’t know how to work with it, and a few technologies, such as NFS-Gateway and HDFS-fuse, appeared. Both of these technologies allowed you to mount HDFS on a Linux filesystem and work with it as with a normal filesystem. Oracle Cloud Infrastructure Storage Software Appliance allows you to do something similar with Object Store. You can find all the documentation here, a brief video here, and the software download here. In this blog I will show just one example of its usage. This picture will help me explain how Storage Cloud Software Appliance works:

You may see that the customer needs to install an on-premise docker container, which contains the entire required stack. I’ll skip the details, which you may find in the documentation above, and will just show the concept.

    # Check oscsa status
    [on-prem client] $ oscsa info
    Management Console: https://docker.oracleworld.com:32769
    If you have already configured an OSCSA FileSystem via the Management Console, you can access the NFS share using the following port.
    NFS Port: 32770
    Example: mount -t nfs -o vers=4,port=32770 docker.oracleworld.com:/<OSCSA FileSystem name> /local_mount_point

    # Run oscsa
    [on-prem client] $ oscsa up

There (on the docker image, which you deploy on some on-premise machine) you may find a WebUI where you can configure the Storage Appliance:

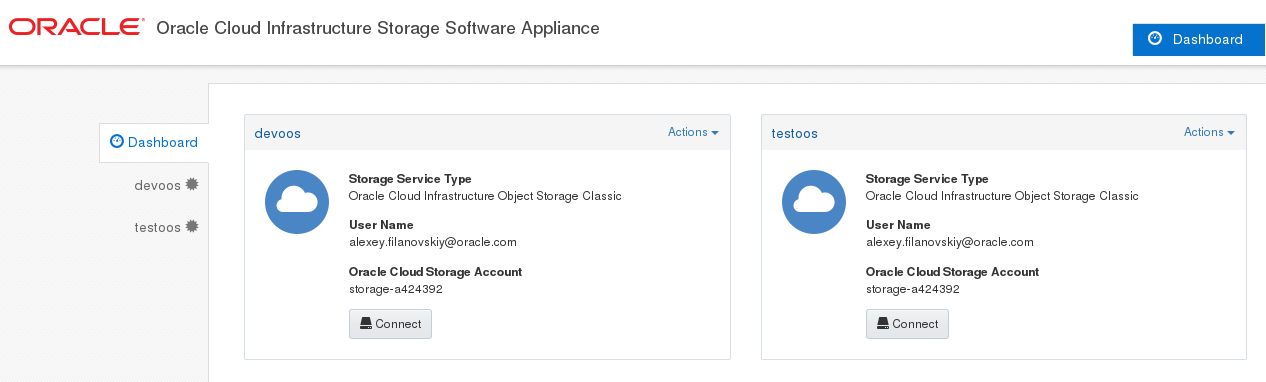

After login, you may see a list of configured Object Stores:

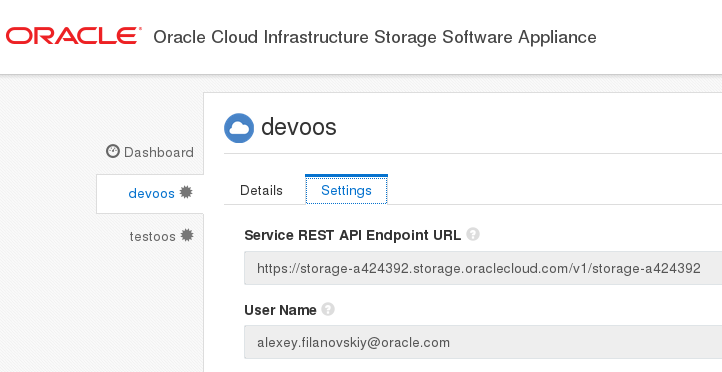

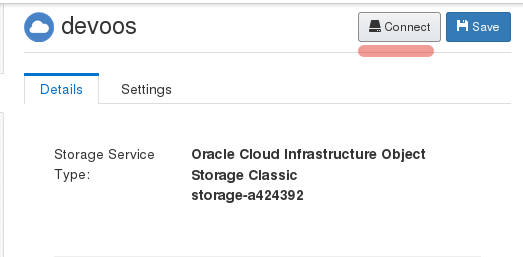

In this console you may connect the linked container to this on-premise host:

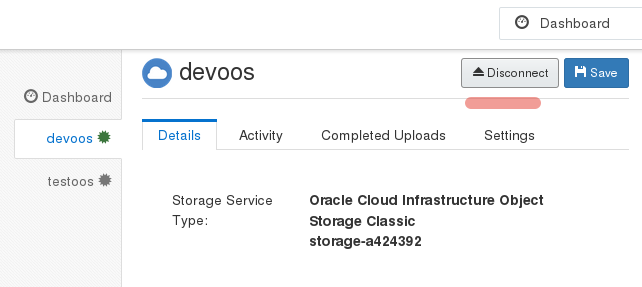

After it has been connected, you will see the “disconnect” option.

After you connect a device, you have to mount it:

    [on-prem client] $ sudo mount -t nfs -o vers=4,port=32770 localhost:/devoos /oscsa/mnt
    [on-prem client] $ df -h|grep oscsa
    localhost:/devoos 100T 1.0M 100T 1% /oscsa/mnt
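If you mount the share from a script, it helps to build the command from the values that `oscsa info` reports and to check whether the share is already mounted. A minimal sketch, assuming a Linux host (it reads /proc/mounts by default); both function names are hypothetical, and the mount command is only printed:

```shell
# nfs_mount_cmd HOST PORT FILESYSTEM MOUNT_POINT
# Prints the NFSv4 mount command for an OSCSA filesystem.
nfs_mount_cmd() {
  printf 'mount -t nfs -o vers=4,port=%s %s:/%s %s\n' "$2" "$1" "$3" "$4"
}

# is_mounted MOUNT_POINT [MOUNTS_FILE]
# Succeeds if MOUNT_POINT is listed as a mount target in MOUNTS_FILE
# (default: /proc/mounts, which is Linux-specific).
is_mounted() {
  awk -v mp="$1" '$2 == mp { found = 1 } END { exit !found }' "${2:-/proc/mounts}"
}

# Print the command used above for the "devoos" filesystem.
echo "sudo $(nfs_mount_cmd localhost 32770 devoos /oscsa/mnt)"
```

In a real script you could guard the mount with something like `is_mounted /oscsa/mnt || sudo mount ...`, so that running it twice does not fail.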

Now you can upload a file into Object Store:

    [on-prem client] $ echo "Hello Oracle World" > blog.file
    [on-prem client] $ cp blog.file /oscsa/mnt/

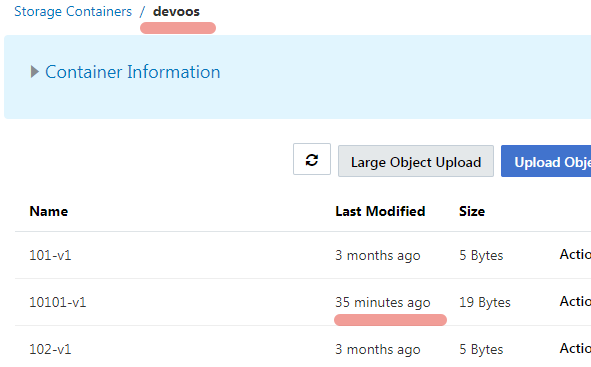

This is an asynchronous copy to Object Store, so after a while you will be able to find the file there:

The only restriction, which I wasn’t able to overcome, is that the filename changes during the copy.

Conclusion.

Object Store is here, and it will become more and more popular. This means there is no way to escape it, and you have to get familiar with it. The blog post above showed that there are multiple ways to deal with it, starting from user-friendly tools (like CloudBerry) and ending with the low-level REST API.