A recurring customer requirement is to measure the lag time between when a transaction was committed on the source and when it was committed on the target. Recently, a customer using Snowflake as a target wanted to implement such requirement using the CURRENT_TIMESTAMP() function in Snowflake.

We came up with an approach using extra columns on the target to store the operation type performed by GoldenGate, the source commit timestamp from the trail, and the target commit timestamp using the CURRENT_TIMESTAMP() function as its default value. We used CONVERT_TIMEZONE() to convert the default Snowflake timestamp timezone from ‘America/Los Angeles’ to Coordinated Universal Time (UTC) to match the GoldenGate commit timestamp.

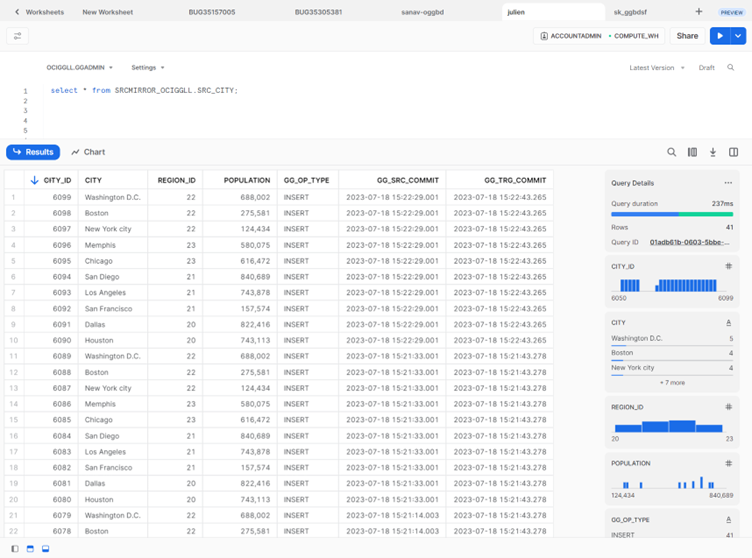

Here is an example of a target table with these three additional fields (GG_OP_TYPE, GG_SRC_COMMIT, GG_TRG_COMMIT):

CREATE OR REPLACE TABLE SRCMIRROR_OCIGGLL.SRC_CITY (

CITY_ID NUMBER(10,0) NOT NULL,

CITY VARCHAR(50),

REGION_ID NUMBER(10,0),

POPULATION NUMBER(10,0),

GG_OP_TYPE VARCHAR(10),

GG_SRC_COMMIT TIMESTAMP_NTZ,

GG_TRG_COMMIT TIMESTAMP_NTZ DEFAULT CONVERT_TIMEZONE('America/Los_Angeles','UTC', CURRENT_TIMESTAMP(3)),

PRIMARY KEY (CITY_ID)

);

We use the @GETENV function to retrieve some additional metadata information from the header portion (GGHEADER) of an Oracle GoldenGate trail record:

- OPTYPE: Returns the type of operation performed (INSERT, UPDATE, DELETE, SQL COMPUPDATE, PK UPDATE, TRUNCATE).

- COMMITTIMESTAMP: Returns the transaction timestamp (when the transaction is committed) expressed in the format of YYYY-MM-DD HH:MI:SS.FFFFFF.

@GETENV is used in the Replicat parameter file in the COLMAP section:

REPLICAT RSNOW

MAP SRC_OCIGGLL_JT.SRC_CITY, TARGET SRCMIRROR_OCIGGLL.SRC_CITY,

COLMAP (USEDEFAULTS, GG_OP_TYPE = @GETENV ('GGHEADER', 'OPTYPE'), GG_SRC_COMMIT = @GETENV ('GGHEADER', 'COMMITTIMESTAMP'));

Note that we do not map the Snowflake column GG_TRG_COMMIT. GoldenGate will not load it, and Snowflake will populate it with the current timestamp using the CURRENT_TIMESTAMP function.

If we run the Replicat, we can see that the three extra fields are populated correctly. The native Snowflake handler in GoldenGate for Big Data uses a Stage & Merge approach which loads the data every 30 seconds, so the lag should be close to this value by default. This time interval can be modified using the flush.interval parameter.

Using the timestamps stored in GG_SRC_COMMIT and GG_TRG_COMMIT, we can calculate the end-to-end lag between our source and target! We have seen how simple it is to implement this requirement using GoldenGate functions like @GETENV and database functions like CURRENT_TIMESTAMP() in Snowflake. You can use a similar approach with other technologies supported by GoldenGate.