“You can use Oracle Real Application Testing Database Replay to capture workload from an on-premises or other cloud service database and replay it on an Autonomous Database instance. This enables you to compare workloads between an on-premises database or other cloud service database and an Autonomous Database.” This is an important statement when you consider to test workloads on Autonomous Database.

For all of you who don’t know what Oracle Real Application Testing is used for, I’d like to start the posting with a short introduction. Real Application Testing can answer questions like:

How can you verify or even guarantee general database performance when migrating from an on-premises database or any cloud database to an autonomous database such as ATP-S or ADW-S? How can real not artificial tests be easily accomplished – without manually implementing test scripts etc?

Two technologies are included in Oracle Real Application Testing that can help to mitigate the risk of migration: SQL Performance Analyzer (SPA) and Database Replay (DB Replay). Both are available in on-premises installation and in our Oracle Cloud database offerings. Regarding Autonomous Database only SQL Performance Analyzer was supported until now. SQL Performance Analyzer focuses on detailed statement analysis of a defined SQL workload. A SQL workload can consist of SELECT or DML statements, which are made available via a SQL Tuning Set (STS). The workload is run twice – once BEFORE the change and then AFTER a change. The result is a detailed comparative analysis (before and after the change) of the individual statements, measured by different metrics such as elapsed time, cpu_time, etc. To illustrate the functionality we have already published blog postings about these subjects some time ago – e.g. the blog posting Smooth transition to Autonomous Database using SPA SQL Performance Analyzer in Autonomous Database covering SQL Performance Analyzer usage in Autonomous Database.

Database Replay is available for Autonomous Database. It can be used to replay a recorded (captured) workload from On-Premises or cloud installations in an Autonomous Database. Only one(!) statement is required to replay it. This led me to the the blog title “Autonomous” Database Replay – on behalf of a suggestion from one my colleagues during a discussion about this new functionality. 🙂

Note: Please keep in mind Database Replay on ADB-D (Autonomous database on dedicated infrastructure) is different from ADB-S (Autonomous Database serverless). Please refer to the documentation Oracle Autonomous Database on Dedicated Exadata Infrastructure in chapter “Database Replay“.

In this blog posting I’d like to outline all the steps that are necessary to replay a workload – starting from a capture and the copy of the captured files to the replay in an Autonomous Database Serverless. In addition I added some reports to analyze and compare the results with each other. If you are experienced with Database Replay and Oracle Database Cloud you may find all the information you need in the documentation Using Oracle Autonomous Database Serverless chapter “Test Workloads with Oracle Real Application Testing“.

Note: You can capture a workload in an Autonomous Database instance and replay it in another Autonomous Database instance. You can replay the captured workload on a full or refreshable clone. The capture and replay targets must be in a consistent logical state. More information can be found in chapter Capture a Workload on an Autonomous Database Instance.

To provide the full picture these are the steps that are required. If you are only interested in the new database replay functionality you may just read the third step.

- Capture/record a workload on a non-Autonomous Database

- Copy the capture files to the Object Store

- Replay a workload on Autonomous Database

- Monitor the replay and analyze the results

In my example, I used a database workload in a cloud VM, Oracle SQL Developer to import the required data and monitor the process and Oracle Cloud Shell to bulk load the captured files and generate the report files.

Capture/Record a Workload on a non-Autonomous Database

On the production system, a recording is started with a single call. Required is an empty logical directory of the database (CAPDIR in my example). There are certain restrictions regarding the commands that are recorded. These should be checked in advance in the manual. The dependency on external services such as database links, web services, etc. must also be considered and clarified. In addition, start the capture at low load to get as few in-flight transactions as possible. Of course, the best option would be to shut down the database, but this is not an option in most environments.

Therefore before starting a workload capture, you must first complete the prerequisites for capturing a database workload. The prerequisites are described in Prerequisites for Capturing a Database Workload. You should also review the workload capture options, as described in Workload Capture Options.

During a workload capture, a variety of information such as connection strings, SQL text and bind values are stored. When you begin workload capture, all requests from external clients directed to Oracle Database are tracked and stored in binary files called capture files.

-- CREATE OR REPLACE DIRECTORY capdir as '<directory>'; -- grant read, write on directory capdir; execute DBMS_WORKLOAD_CAPTURE.START_CAPTURE(name=>'C3', dir => 'CAPDIR');

The capture (here with the name C3) is ended either manually with the following command or automatically by specifying an additional duration arguments in the START_CAPTURE call.

execute DBMS_WORKLOAD_CAPTURE.FINISH_CAPTURE();

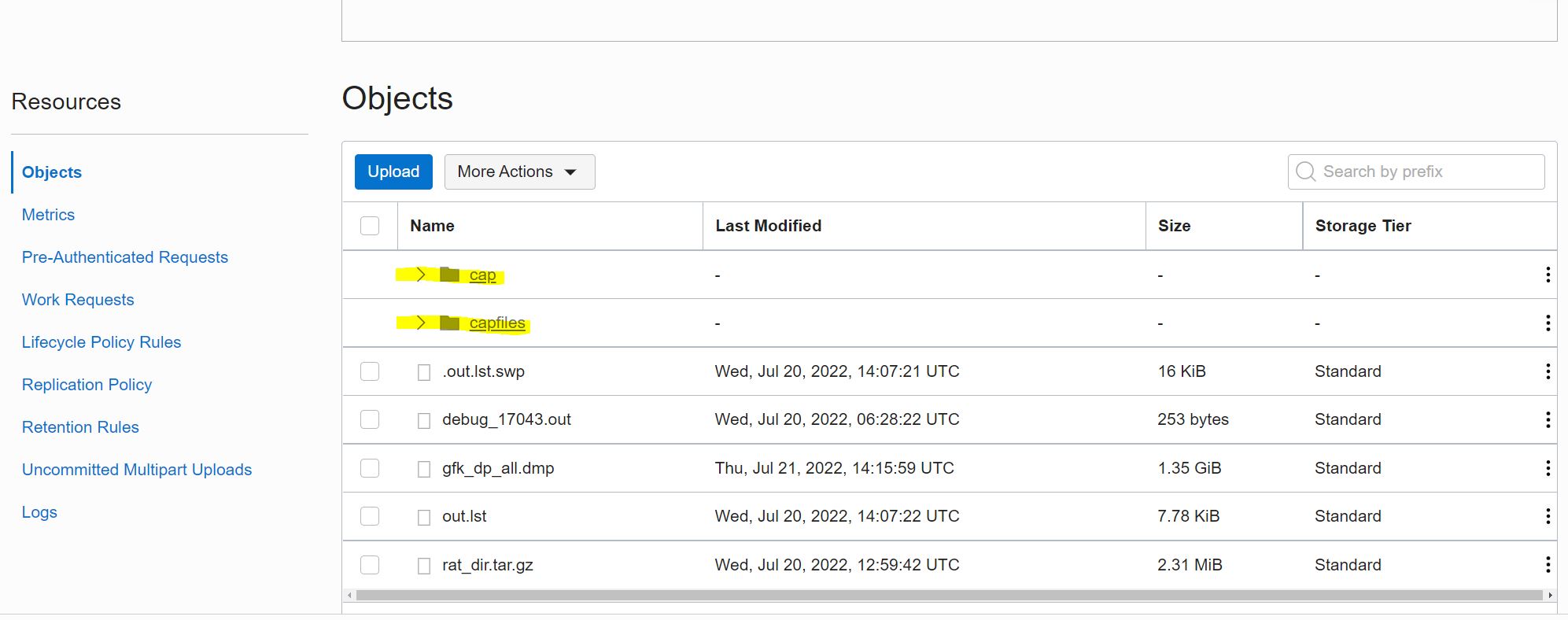

A workload capture results in the creation of two subdirectories, cap and capfiles, which contains the capture files.

The last action on the capture system is the export of the corresponding AWR snapshots to save the performance data information also in the capture directory. The following command initiates the export of the AWR snapshots.

execute DBMS_WORKLOAD_CAPTURE.EXPORT_AWR(capture_id =>21);

Copy the capture files to the Object Store

Before you start a replay, you have to upload the cap and capfiles subdirectories containing capture files that are created during capture to the Object Storage location. You must maintain the directory structure within the cap and capfiles subdirectories when you upload them to the Object Storage. I used the OCI command line interface (CLI) and the bulk upload command to upload all files in a given directory and all subdirectories. OCI Cloud Shell provides already a pre-installed OCI CLI environment, therefore I decided to us it for the bulk upload. You only need to provide the namespace (-ns) (here mynamespace), the bucketname (-bn) (here bucketus) and the source directory (–src-dir) (here /home/ulrike_sch/rat_dir). Then you can copy all the files with the following command:

oci os object bulk-upload -ns mynamespace -bn bucketus --src-dir /home/ulrike_sch/rat_dir

Hint: You may find the namespace with:

oci os ns get

More information on this will be found in the OCI CLI documentation

Replay a Workload on Autonomous Database

In order to carry out a replay, a test system must first be created and setup. It is important that the database on the capture and replay system have the same consistency status. You may use the MV2ADB tool or other methods to migrate your data to Autonomous Database. In my case Data Pump export was sufficient and I used SQL Developer Import wizard to import the required data to Autonomous Database. To reset the database afterwords you may use Data Pump import or the point in time recovery capability of Autonomous Database.

To run the replay only one single command is needed. No separate preprocessing command and start of replay clients etc. are required. You only need to execute DBMS_CLOUD_ADMIN.REPLAY_WORKLOAD to initiate a workload replay on your Autonomous Database. Also for this step I used Oracle Cloud Shell. SQL*Plus instant client or SQL Developer can of course also be used.

If you don’t know how to connect to Autonomous Database via Oracle Cloud Shell, you may follow the steps outlined in Kris Rice blog posting SQLcl and OCI Cloud Shell.

Im my case I generated the wallet zip file with name Wallet_DB19c.zip as follows:

oci db autonomous-database generate-wallet --autonomous-database-id --file Wallet_DB19c.zip --password 'xxxxxxx'

Then I connected with SQLcl as follows:

sql -cloudconfig Wallet_DB19c.zip admin@db19c_low

Now I am ready to execute the replay command.

BEGIN

DBMS_CLOUD_ADMIN.REPLAY_WORKLOAD(

location_uri => 'https://objectstorage.eu-frankfurt 1.oraclecloud.com/n/mynamespace/b/bucketus/o/',

credential_name => 'CRED_TEST',

synchronization => TRUE,

process_capture => TRUE);

END;

/

The credential_name parameter specifies the credential to access the object storage bucket. If you do not specify a credential_name value, then DEFAULT_CREDENTIAL is used. The synchronization parameter specifies the synchronization method used during workload replay. A TRUE value indicates that the synchronization is SCN based, False means the replay is based on time. The process_capture specifies whether you need to specify process_capture value or not. A TRUE value indicates that the replay includes process_capture. It only needs to be

done once. You can find more information in the documentation.

That’s it! The additional steps for monitoring or analyzing the outcome are not new and can be found in the Oracle Testing documentation. To provide a complete example in this posting I added these steps in the next section.

Monitor the replay and analyze the results

The following query lists the details of the capture metrics and preprocess status. It can be used on the production system where you have captured the workload or also on the replay system – of the Autonomous Database. Here is an example.

col status format a11 col name format a10 select id, name, status, errors, awr_exported, duration_secs, LAST_PROCESSED_VERSION, dbtime, user_calls, user_calls_unreplayable, capture_size/1024/1024 capsize from dba_workload_captures; ID NAME STATUS ERRORS AWR_EXPORTED DURATION_SECS LAST_PROCESSED_VERSION DBTIME USER_CALLS USER_CALLS_UNRE CAPSIZE _____ _______ ____________ _________ _______________ ________________ _________________________ _______________ _____________ _______________ __________ 21 C3 COMPLETED 298 YES 2346 19.16.0.1.0 1381157902 34798 8285 24.733809

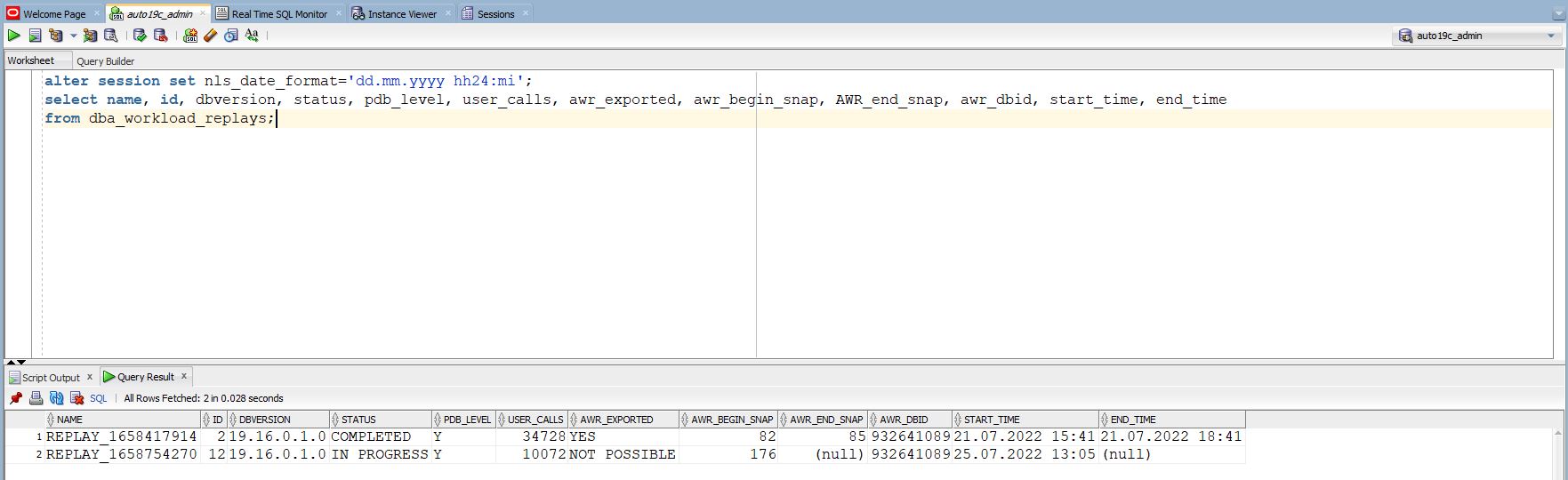

During and after the replay you may execute the following query to get information about some replay statistics.

alter session set nls_date_format='dd.mm.yyyy hh24:mi'; select name, id, dbversion, status, user_calls, awr_exported, awr_begin_snap, AWR_end_snap, awr_dbid, start_time, end_time from dba_workload_replays; NAME ID DBVERSION STATUS USER_CALLS AWR_EXPORTED AWR_BEGIN_SNAP AWR_END_SNAP AWR_DBID START_TIME END_TIME ____________________ _____ ___________ _________ _____________ _______________ ______________ ____________ ___________ ________________ ________________ REPLAY_1658417914 2 19.16.0.1.0 COMPLETED 34728 YES 82 85 932641089 21.07.2022 15:41 21.07.2022 18:41 REPLAY_1658754270 12 19.16.0.1.0 COMPLETED 34728 YES 176 177 932641089 25.07.2022 13:05 25.07.2022 13:38

If you prefer SQL Developer, you may use the SQL Worksheet to executed the queries.

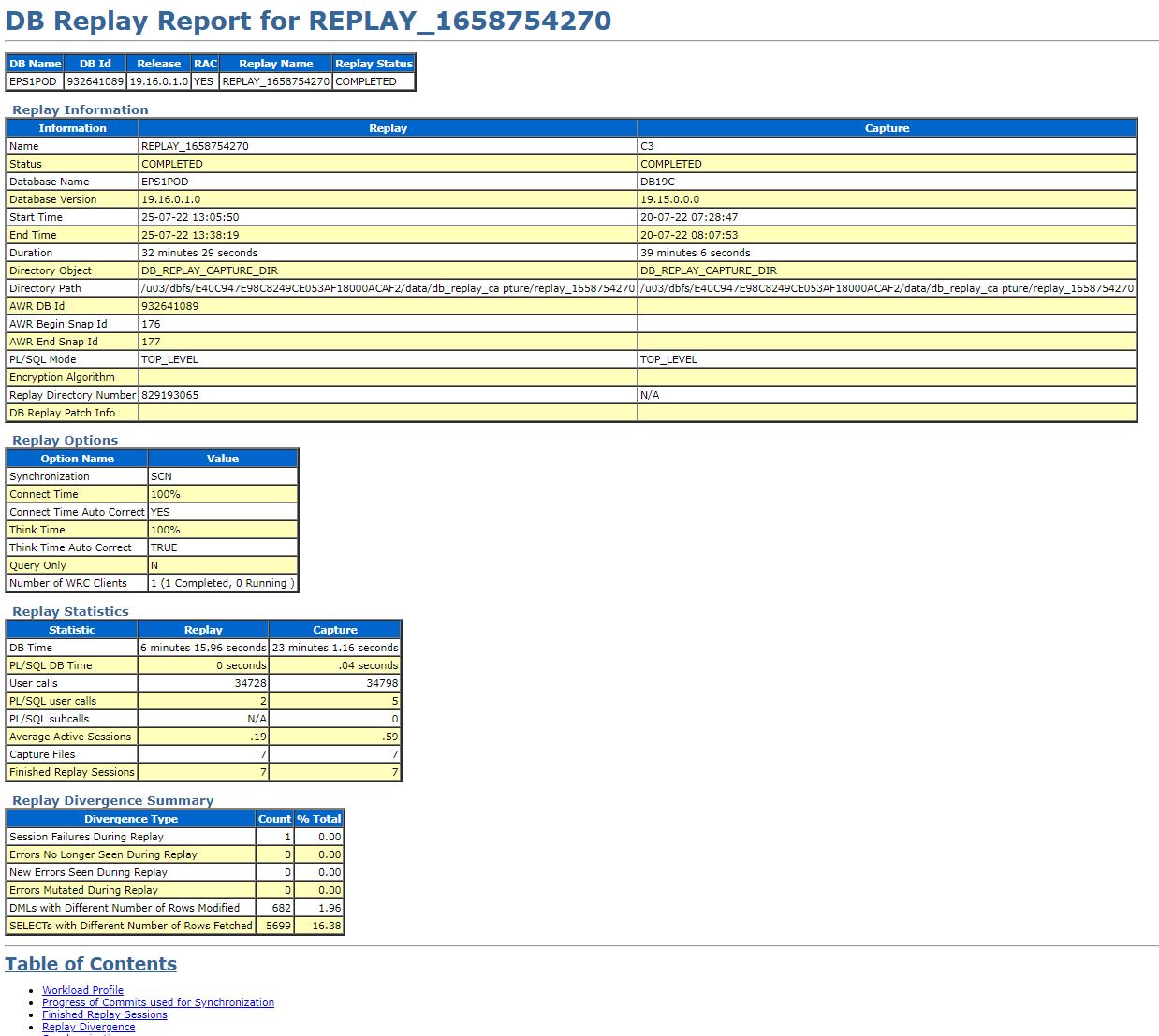

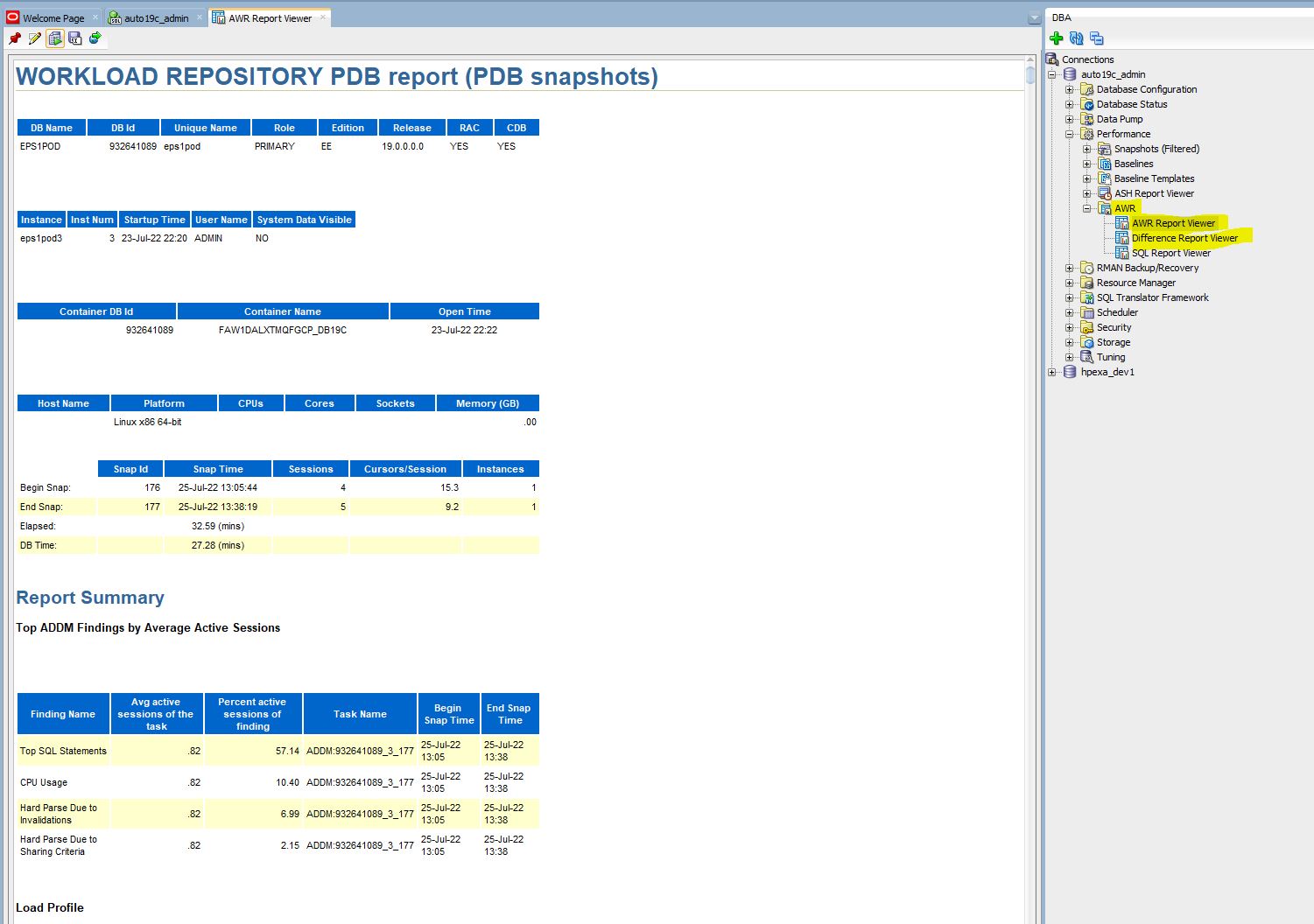

After the replay you can generate reports to get information about the replay and to compare it with the capture or with additional replays.The so-called workload replay reports contains information that can be used to measure performance differences between the capture system and the replay system.

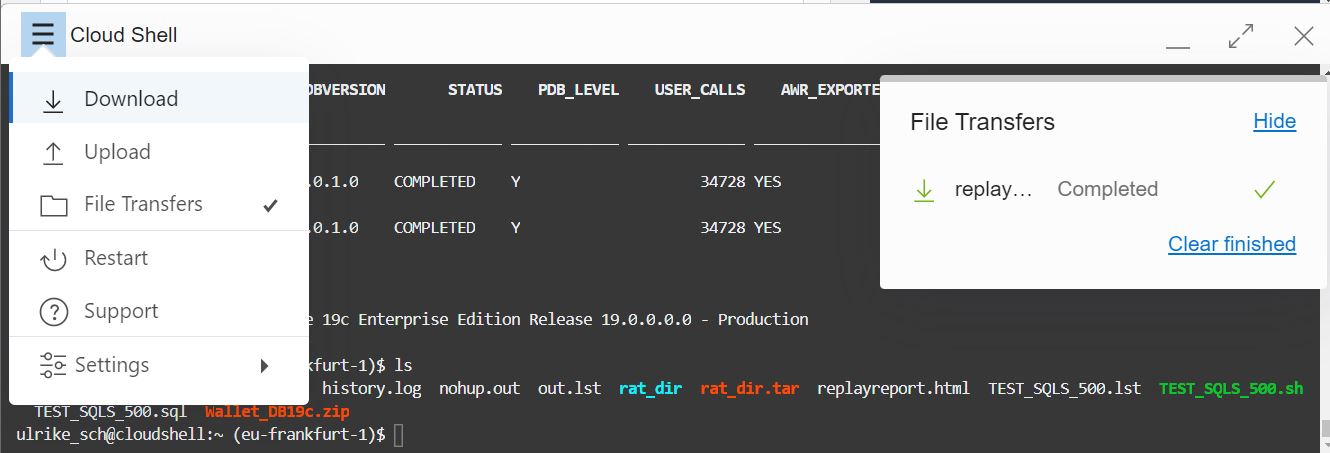

To generate a workload replay report I used SQLcl in my Oracle Cloud Shell.

set long 1000000 heading off pagesize 0 spool replayreport.html rep select dbms_workload_replay.report(replay_id=>12, format=>'HTML') from dual; spool off

After that I downloaded the generated HTML report to my PC to open it in my browser.

Here is a section from the replay report.

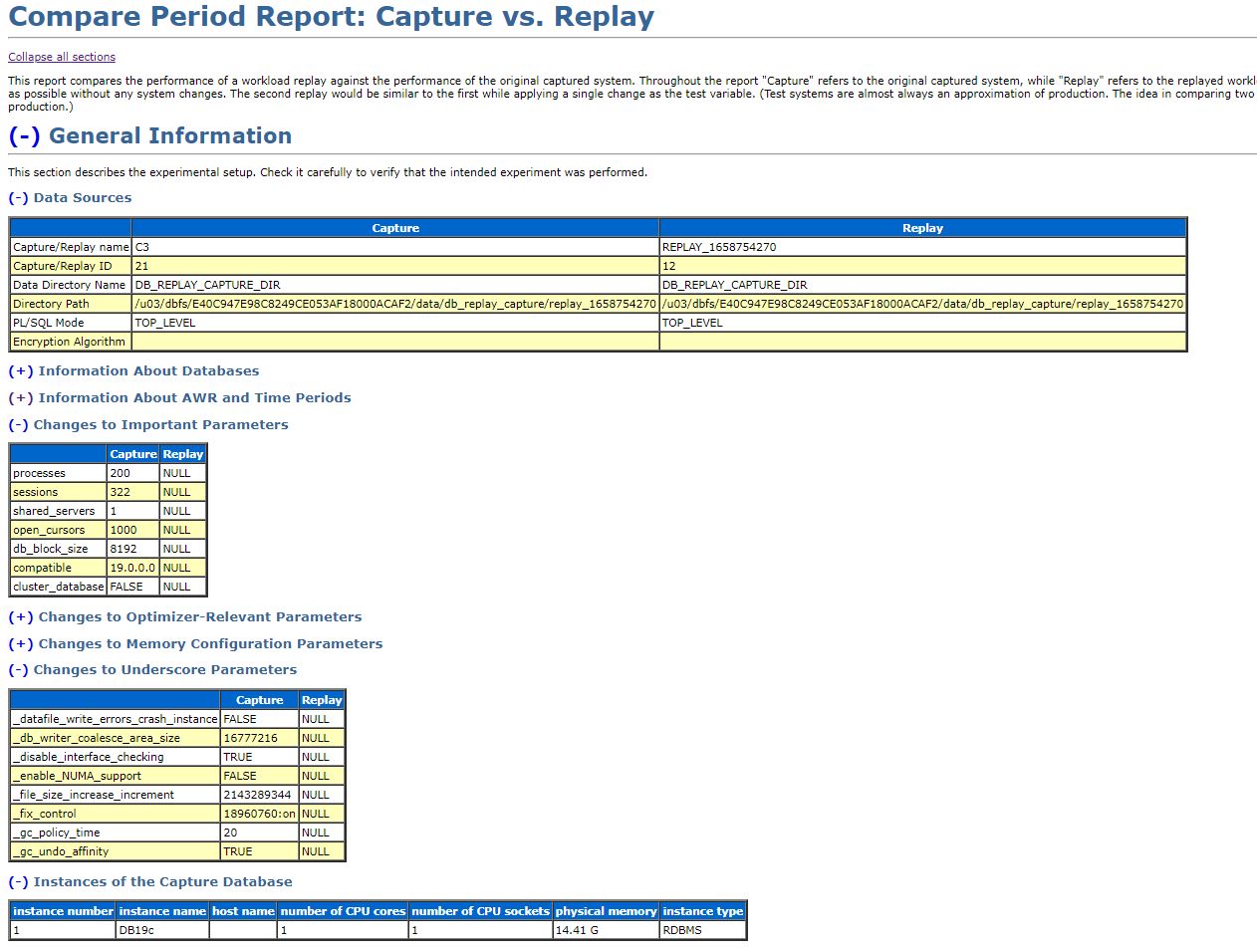

Next I generated the so-called compare period report which even gives a more detailled insight in the performance characteristics. For example you can compare a replay to its capture or to another replay of the same capture. This report compares the performance of a workload replay against the performance of the original captured system.Throughout the report “Capture” refers to the original captured system, while “Replay” refers to the replayed workload. The most reliable experiment would compare two replays. The first replay would try to mimic the captured system as much as possible without any system changes. The second replay would be similar to the first while applying a single change as the test variable.

First you need to make the AWR snapshots from capture run available. The following command imports the AWR snapshots associated with a given capture ID (here 21) provided those AWR snapshots were exported earlier from the original capture system using the EXPORT_AWR procedure. The staging schema must be a valid schema in the current database which can be used as a staging area while importing the AWR snapshots from the replay directory to the SYS AWR schema. The SYS schema is not a valid input.

select dbms_workload_capture.import_awr(capture_id => 21, staging_schema => 'DWH_DATA') from dual; DBMS_WORKLOAD_CAPTURE.IMPORT_AWR(CAPTURE_ID=>21,STAGING_SCHEMA=>'DWH_DATA') ______________________________________________________________________________ 196792466

This returns the new randomly generated database ID that was used to import the AWR snapshots. The same value can be found in the AWR_DBID column in the DBA_WORKLOAD_REPLAYS view.

Now you can generate the compare period report and download it again.

variable report_bind clob begin DBMS_WORKLOAD_REPLAY.COMPARE_PERIOD_REPORT ( replay_id1 => 12, replay_id2 => null, -- compare with capture format => DBMS_WORKLOAD_CAPTURE.TYPE_HTML, result => :report_bind); end; / set heading off long 1000000 longc 1000000 pagesize 0 spool comparereport.html rep print report_bind spool off

The parameters replay_id1 and replay_id2 are used to specify the different replay ids whose replays are requested. If the second id is NULL, then the comparison is done with the capture run. The format parameter specifies the report format. Valid values are DBMS_WORKLOAD_CAPTURE.TYPE_HTML and DBMS_WORKLOAD_CAPTURE.TYPE_XML. The result parameter is used for the output of the report (in CLOB). I used the bind variable report_bind to store the output and to print it afterwards.

And here is a section of the compare period report:

If you still need more information for your analysis, you may generate an AWR report with SQL Developer DBA window.

Summary

Oracle Real Application Testing functionality enables you to perform real-world testing of Oracle Database. By capturing production workloads and assessing the impact of system changes on these workloads before production deployment, Oracle Real Application Testing minimizes the risk of instabilities associated with system changes. SQL Performance Analyzer and Database Replay are key components of Oracle Real Application Testing. Depending on the nature and impact of the system change being tested, and on the type of system the test will be performed, you can use either or both components to perform your testing. With the new enhancement both components are now available on Autonomous Database. Keep in mind only one (!) command is required to replay a workload – no separate preprocessing, calibrate and start of replay clients, initialize and prepare commands are necessary to replay a captured workload in an Autonomous Database environment. This makes workload testing on Autonomous Database really easy and straightforward.

Further Readings

- Documentation Using Oracle Autonomous Database Serverless chapter “Test Workloads with Oracle Real Application Testing“

- General Database Documentation: Part II Database Replay

- Documentation DBMS_CLOUD_ADMIN

- Documentation Oracle Autonomous Database on Dedicated Exadata Infrastructure chapter “Database Replay“

- Blog Posting: Smooth transition to Autonomous Database using SPA

- Blog Posting SQLcl and OCI Cloud Shell

- OCI CLI command reference