A core purpose of using artificial intelligence (AI) and machine learning (ML) is to make sense of large volumes of data by arriving at a trained model. Much like how an athlete needs to train for optimal performance during their upcoming competition, an ML model must be trained to perform optimally when deployed in production. A trained model can deliver accurate results with a small tolerance for failure, quickly, and cost-effectively on computing infrastructure.

A large language model (LLM) uses massive amounts of data, such as the data deployed on the internet over a period of a few years. It learns from this information to help us in a variety of ways, including summarizing text, detecting crime, writing software, and the following example use cases:

-

Chatbots that can answer complex customer questions and resolve customer queries with greater effectiveness than chatbots widely deployed in the market today

-

AI helpers that can assist researchers uncover new insights. For example, biologists can feed data from published material and public health records to help discover new remedies

-

Specialized assistants that can help attorneys with case preparation and analysis, marketers with copy optimization and customer segmentation, sales managers with identification of new growth areas, developers with writing software, and much more

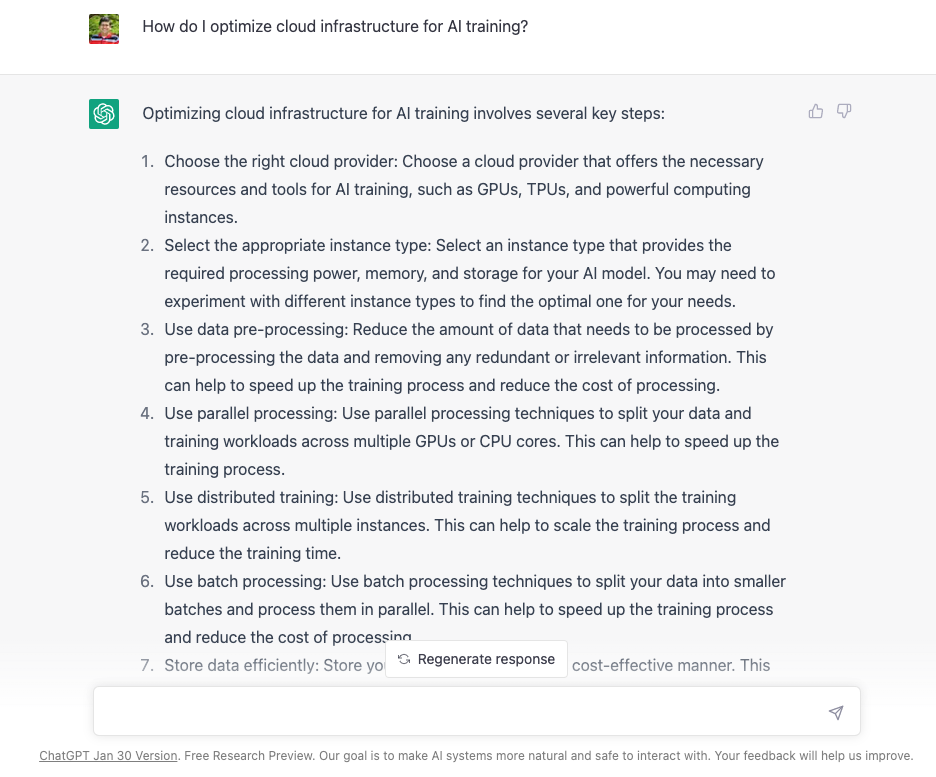

ChatGPT as an LLM example

Developed by Open AI, an AI research and development lab, ChatGPT is a popular AI that can generate human-readable responses to general questions. Because of its versatility, ChatGPT can evolve today’s search engines, supplementing search results with more insightful answers. Consider the following example, which shows a reasonable response for a complex question on optimizing cloud infrastructure for AI training.

Superclusters and their role in LLM training

Training AI applications, such as ChatGPT, using large language models requires powerful clusters of computing infrastructure that can process vast amounts of data. These superclusters contain hundreds or thousands of bare metal Compute instances connected by a high-performance network fabric. Collectively, these Compute instances deliver the capability to process training datasets at-scale. Meta AI estimates that these superclusters must be capable of performing quintillions of operations per second as models are becoming increasingly large, complex, and adaptable.

Oracle Cloud Infrastructure (OCI)’s AI infrastructure is already enabling customers, such as Adept and SoundHound, to process vast amounts of data for training large language models. We have made optimizations in OCI’s cluster networks to support ultra-low latencies using RDMA over converged ethernet (RoCE). You can learn more about our large-scale supercluster networks and the engineering innovations that made them possible in the following video.

Comparing OCI with AWS and Google Cloud Platform (GCP)

Training large language models is extremely network intensive. Training these models requires coordinating and sharing information across hundreds or thousands of independent servers. OCI GPUs are connected by a simple, high-performance ethernet network using RDMA that just works. The bandwidth provided by OCI exceeds that of both AWS and GCP by 4x-16x, which in turn reduces the time and cost of ML training. The published bandwidths of the three vendors are:

- OCI with BM.GPU.GM4.8 instances: 1600 Gbps

- AWS with P4D instances: 400 Gbps

- GCP with A2 instances: 100 Gbps

OCI’s cluster network technology validated by cutting-edge AI/ML innovators such as Adept, MosaicML, and SoundHound. At the time of publication, AWS and Google Cloud Platform were not completely transparent in the type of interconnect technology used: Infiniband, ethernet, or something else. In contrast to OCI’s simplicity, enhancements such as AWS EFA create complexity in configuration and software that must be thoroughly tested before being used for ML training. By keeping interconnects simple and fast, OCI provides the best environment for training large language models.

Want to know more?

OCI offers support from cloud engineers for training large language models and deploying AI at-scale. To learn more about Oracle Cloud Infrastructure’s capabilities please contact us or see the following resources:

For additional information on OCI’s supercluster architecture, please refer to First principles: Superclusters with RDMA—Ultra-high performance at massive scale and First principles: Building a high-performance network in the public cloud.