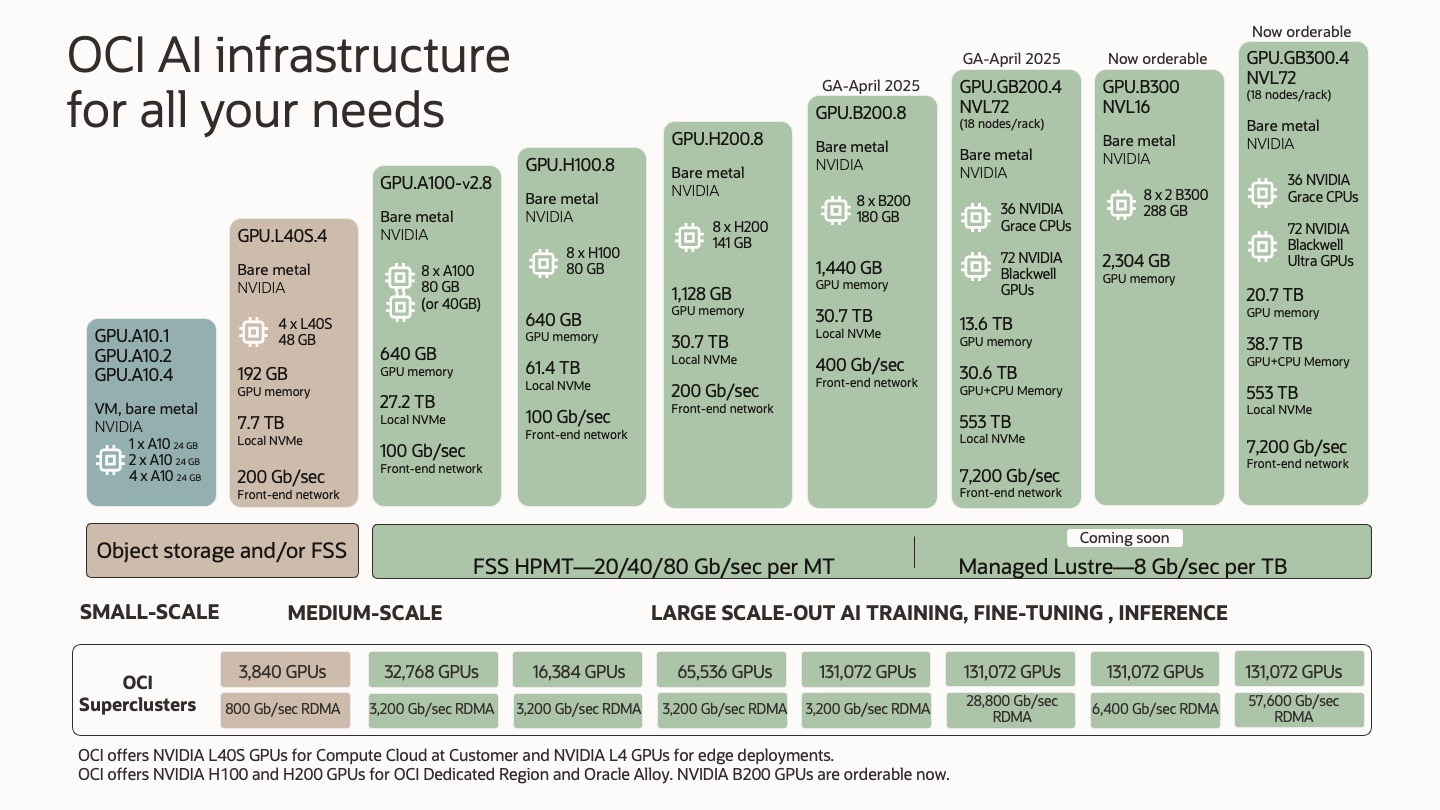

At Oracle Cloud Infrastructure (OCI), we’re committed to providing customers with the most advanced cloud infrastructure for AI workloads, wherever they need it. Whether you’re training massive multimodal models, fine-tuning large language models (LLMs), or deploying real-time inference at scale, our AI infrastructure is built to handle the most demanding workloads with the most powerful GPUs on the market.

Today, we’re excited to announce new AI infrastructure capabilities across public cloud, OCI Dedicated Region, and Oracle Alloy. These offerings bring the latest NVIDIA Blackwell architecture GPUs and Superchips to you at any scale, from a single instance to clusters with many thousands of GPUs.

We’re among the first cloud service providers to accept orders for next-generation NVIDIA GB300 NVL72 and NVIDIA HGX B300 NVL16 systems with Blackwell Ultra GPUs, giving you early access to their AI acceleration. We’re announcing the following product offerings:

Orderable now (generally available later this year):

- OCI Compute bare metal with NVIDIA GB300 Grace Blackwell Ultra Superchips

- OCI Compute bare metal with NVIDIA B300 Blackwell Ultra GPUs

- OCI Supercluster with up to 131,072 NVIDIA GB300 Grace Blackwell Ultra Superchips as part of rack-scale NVIDIA GB300 NVL72 solutions with NVIDIA ConnectX®-8 SuperNICs™

- OCI Supercluster with up to 131,072 NVIDIA Blackwell Ultra GPUs as part of NVIDIA HGX B300 NVL16 solutions with NVIDIA ConnectX-8 SuperNICs

- OCI Compute bare metal with NVIDIA Blackwell GPUs (in OCI Dedicated Region and Oracle Alloy)

Generally available now:

- OCI Compute bare metal with NVIDIA Hopper GPUs (in OCI Dedicated Region and Oracle Alloy)

Generally available in April 2025:

- OCI Compute bare metal with NVIDIA GB200 Grace Blackwell Superchips

- OCI Compute bare metal with NVIDIA Blackwell GPUs

- OCI Supercluster with up to 131,072 NVIDIA GB200 Grace Blackwell Superchips as part of rack-scale NVIDIA GB200 NVL72 solutions

- OCI Supercluster with up to 131,072 NVIDIA Blackwell GPUs

Expanding the Broadest Portfolio of Bare Metal AI Compute

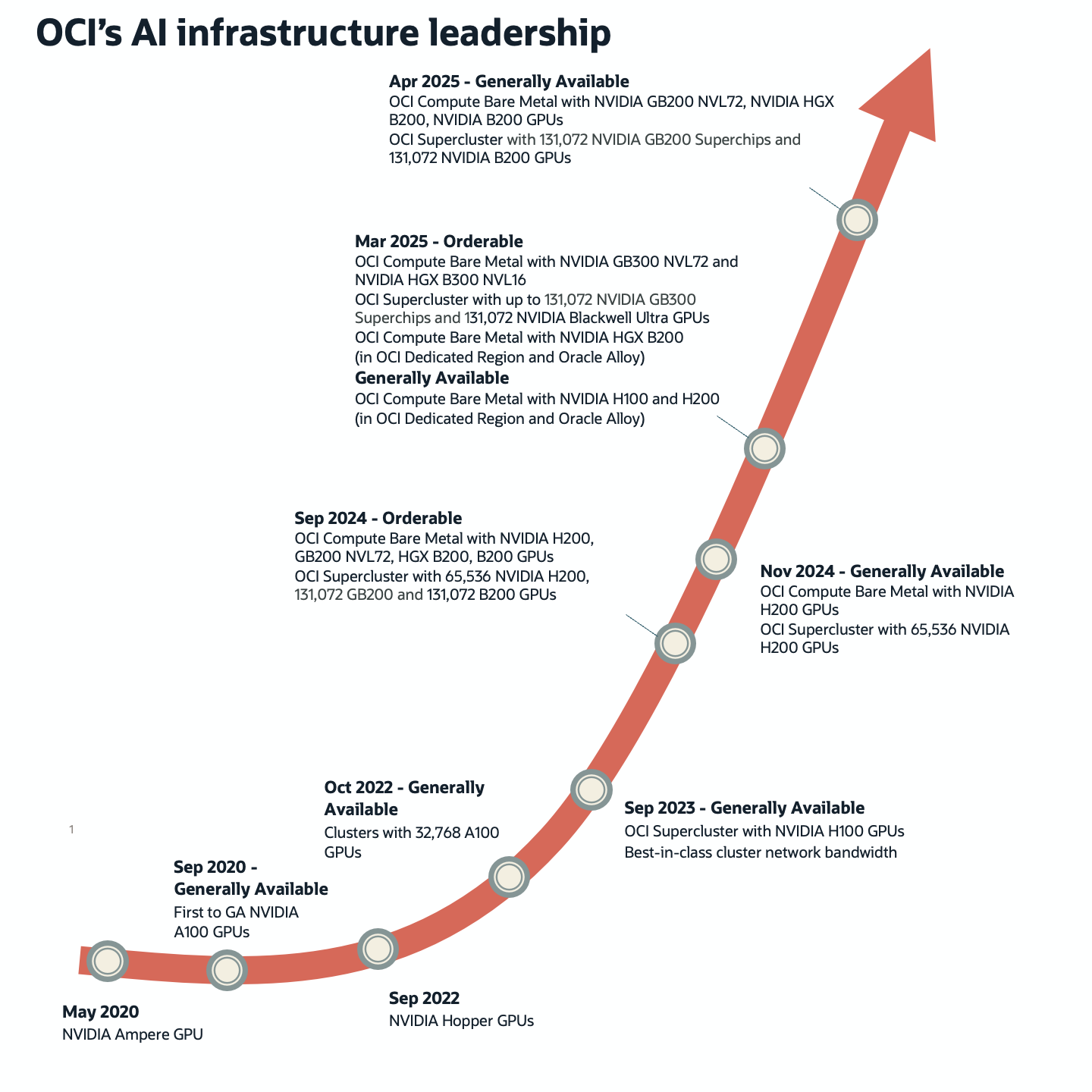

OCI has been at the forefront of AI infrastructure innovation. In 2016, Oracle became the first major cloud provider to introduce bare metal instances, and in 2020, we were among the first to make NVIDIA A100 GPUs generally available. Since then, we have continuously delivered the latest AI compute innovations from our silicon partners, enabling you to push the boundaries of AI. Here’s a look at our AI infrastructure journey and the rapid growth of OCI’s AI compute offerings over the years.

With today’s announcements, OCI now offers the most extensive lineup of bare metal AI compute across our public cloud regions, OCI Dedicated Region, and Oracle Alloy.

AI Infrastructure Wherever You Need It with Unprecedented Scalability and Flexibility

AI workloads are not one-size-fits-all. Many organizations require infrastructure that aligns with their stringent data sovereignty, security, and regulatory requirements. With an expandable footprint starting as small as three racks, OCI Dedicated Region provides preconfigured modular infrastructure in your own data center. Oracle Alloy is a complete cloud infrastructure platform that enables partners to transform their business model to become cloud providers themselves and offer the full range of over 150 OCI cloud services to their own customers.

Now, with the general availability of OCI Compute with NVIDIA Hopper GPUs and the orderability of OCI Compute with NVIDIA Blackwell GPUs for OCI Dedicated Region and Oracle Alloy, you get access to state-of-the-art GPU compute directly in your own data centers to build and deploy custom and open source models for AI training, inference, and fine-tuning, or to generate vector indexes for databases with NVIDIA GPUs and NVIDIA cuVS. You will also be able to use OCI Generative AI services or agentic AI capabilities for Fusion AI applications, such as predictive insights for financial management in Oracle Fusion ERP and content generation for hiring managers in Oracle Fusion HCM.

You can start as small as eight GPUs and scale up to 64 GPUs per cluster, with or without RDMA cluster networking for small-scale inference and training. You can have multiple clusters of 64 GPUs or choose a cluster of 1,024 or more GPUs for larger AI infrastructure deployments. As always, you get the same operational expenditure (OpEx) flexibility you experience anywhere across Oracle’s distributed cloud. Learn more about Oracle distributed cloud sovereign AI capabilities through this announcement blog article.
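To make the small-scale sizing rules above concrete, here is a minimal planning sketch in Python. It assumes that the eight-GPU starting point corresponds to one bare metal node with eight GPUs and that each small-scale cluster is capped at 64 GPUs; the constants and the `plan_small_clusters` helper are hypothetical illustrations, not an OCI API.

```python
import math

# Assumption: the smallest unit is one bare metal node with 8 GPUs.
GPUS_PER_NODE = 8
# Small-scale clusters go up to 64 GPUs each, per the sizing described above.
MAX_SMALL_CLUSTER_GPUS = 64


def plan_small_clusters(total_gpus: int) -> list[int]:
    """Hypothetical helper: split a GPU requirement into clusters of at most
    64 GPUs, rounding the requirement up to whole 8-GPU nodes."""
    if total_gpus <= 0:
        raise ValueError("total_gpus must be positive")

    # Round up to whole nodes, since GPUs are provisioned node by node here.
    nodes_needed = math.ceil(total_gpus / GPUS_PER_NODE)
    nodes_per_cluster = MAX_SMALL_CLUSTER_GPUS // GPUS_PER_NODE  # 8 nodes

    clusters = []
    while nodes_needed > 0:
        # Fill each cluster up to the 64-GPU cap before starting another one.
        take = min(nodes_needed, nodes_per_cluster)
        clusters.append(take * GPUS_PER_NODE)
        nodes_needed -= take
    return clusters


if __name__ == "__main__":
    for request in (8, 64, 100, 200):
        print(request, "GPUs ->", plan_small_clusters(request))
```

For example, a 100-GPU requirement rounds up to 13 nodes and becomes one 64-GPU cluster plus one 40-GPU cluster. For much larger needs, the text notes that you can instead choose a single cluster of 1,024 or more GPUs, which this sketch does not model.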

The Most Scalable AI Infrastructure for Any Workload

Whether you’re deploying a single NVIDIA GPU rack-scale solution like GB200 NVL72 or operating the largest AI Supercluster in the cloud, OCI empowers you to realize AI-driven innovation at any scale. Contact us, visit us online, and learn more about Oracle Cloud Infrastructure through the following recent announcements:

- First Principles: Inside zettascale OCI Superclusters for next-gen AI

- Oracle and NVIDIA deliver sovereign AI anywhere

- OCI AI Blueprints: Go from Zero to Hero While Deploying AI Workloads on OCI

- Oracle Integrates NVIDIA AI Enterprise with OCI To Drive Innovation in the Enterprise

- Announcing General Availability of NVIDIA GPU Device Plugin Add-On for OKE

- Accelerating AI Vector Search in Oracle Database 23ai with NVIDIA GPUs

- DeweyVision Transforms Media Discovery with Oracle AI Vector Search: Faster, Smarter, and More Cost-Effective

- Beyond Structured Data: The AI Revolution in Market Data Analytics

For more information, see the following resources: