Introduction

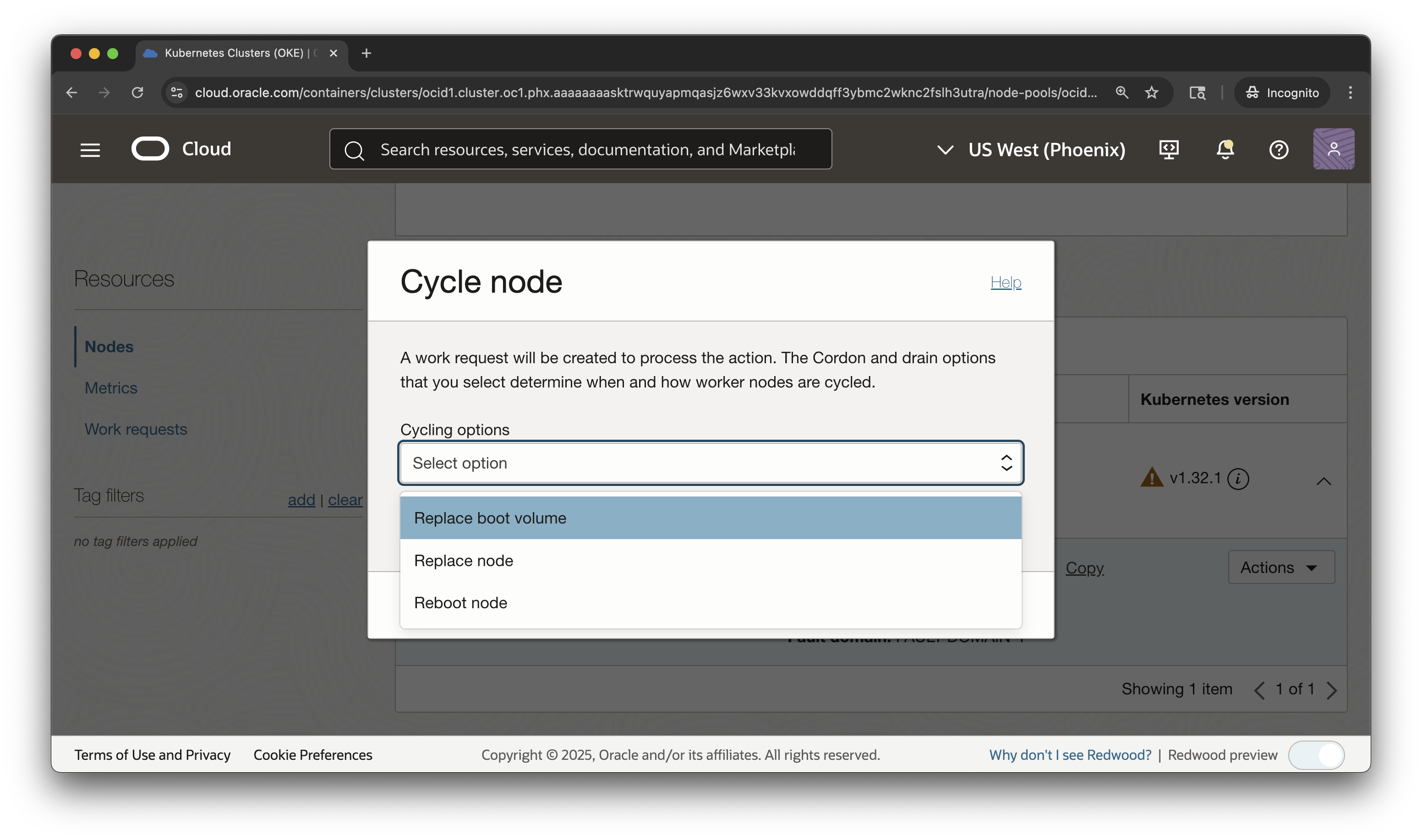

We are excited to announce that OCI Kubernetes Engine (OKE) users can now take repair actions on faulty worker nodes. With this change, you can reboot or replace the boot volumes of managed nodes and self-managed nodes in your cluster to address node health issues. You can take these corrective actions through the OKE API or natively through the Kubernetes API, and either way they respect your Kubernetes-level availability configurations.

Background

Faulty nodes can lead to performance degradation and application downtime. The longstanding approach to addressing host-level issues is simply to terminate the troubled node and replace it with a new, healthy one. In OKE clusters, this can be done at the node pool level through node pool cycling or at the node level by deleting a specific worker node. Terminating and replacing unhealthy nodes is a great way to address the majority of issues impacting node health, but in some cases a less heavy-handed approach is worth considering.

Alternative Repair Options

Alternatives to instance termination and replacement, including rebooting or replacing the boot volume of nodes, can deliver faster issue correction and shorter workload interruption. These alternatives are prudent for users with stateful workloads for whom shifting state off one machine to another requires effort and careful attention. They also benefit users of bare metal instances, for example those with AI model training workloads, who need to avoid the long recycle time required to launch new instances.

Reboot

Rebooting allows you to restart an instance to resolve error conditions. In the context of Kubernetes, rebooting a worker node power cycles the compute instance hosting the node by sending a shutdown command to its operating system. When you issue the command, the node is cordoned and drained, which prevents Kubernetes from scheduling new workloads onto it and moves existing workloads to other nodes. The OS is then given time to shut down, and the instance is powered off and powered back on. In contrast to termination and replacement, the instance itself is not terminated and keeps the same OCID and network address.

In some cases a simple power cycle is sufficient to resolve your host problems. For example, bare metal GPU users may see a drop in job performance or GPU memory showing high temperatures, which indicates thermal throttling. The host may report fewer than the expected number of GPUs, or hardware may have fallen off the bus. There may also be NVLink errors, indicated by the NVIDIA Fabric Manager failing to start or NCCL jobs failing to run. In all these cases, rebooting the node is the first step toward correcting the issue.

Boot Volume Replacement

Boot volume replacement offers a simple path to replace the boot volume of an instance hosting a worker node. If you detect an issue caused by configuration drift of your boot volume, you can correct it by performing a boot volume replacement, without updating your node pool properties. In doing so, the boot volumes of your nodes will be replaced with newly provisioned ones that possess the same properties as your original boot volume. When you issue the command, your nodes are cordoned and drained, the instance stops, the boot volume is swapped out, and the instance returns to the state it was in prior to the volume replacement process. This approach is useful in situations where you’ve taken an action on the node to alter its original state, for example by updating the GPU driver version, and you want to return the instance or instances to the original state.

Another way to repair managed nodes through boot volume replacement is by swapping the existing boot volume for one possessing updated properties, for example a new host image or cloud-init script. For more information about this approach, take a look at Non-Destructive Kubernetes Worker Node Updates.

A Kubernetes-Centric Approach

Repair Using the Kubernetes API

One of the most exciting aspects of node repair is the ability to take action on worker nodes backed by OCI Compute instances directly through the Kubernetes API, an approach many of the users we spoke with preferred. Calling the OCI APIs to reboot or replace the boot volume of a node remains a supported option, but you can now do the same through the Kubernetes API using Kubernetes custom resources and node selectors. This helps you avoid a split experience of jumping between the Kubernetes and OCI APIs, and it also lends itself to extensibility. For example, consider a user who has deployed node-problem-detector, a commonly used operational tool that makes node problems visible to the upstream layers in the cluster management stack. That user could connect the node conditions generated by the tool to the newly available node actions to automatically remediate node issues.
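As a rough illustration of that extensibility, the sketch below maps node conditions to a repair action. It is not the OKE API: the function name, the action labels, and the mapping itself are hypothetical. The condition types are examples of the kind node-problem-detector reports, and the input is a plain dictionary shaped like a Kubernetes Node object.

```python
from typing import Optional

# Hypothetical mapping from node condition types to repair actions.
# The action labels are illustrative, not values defined by OKE.
REPAIR_FOR_CONDITION = {
    "KernelDeadlock": "reboot",
    "ReadonlyFilesystem": "replace-boot-volume",
}


def choose_repair(node: dict) -> Optional[str]:
    """Return the repair action for the first active problem condition, if any."""
    conditions = node.get("status", {}).get("conditions", [])
    for condition in conditions:
        # A problem condition is active when its status is the string "True".
        is_active = condition.get("status") == "True"
        if is_active and condition.get("type") in REPAIR_FOR_CONDITION:
            return REPAIR_FOR_CONDITION[condition["type"]]
    return None


faulty_node = {
    "status": {
        "conditions": [
            {"type": "Ready", "status": "True"},
            {"type": "KernelDeadlock", "status": "True"},
        ]
    }
}
healthy_node = {"status": {"conditions": [{"type": "Ready", "status": "True"}]}}

print(choose_repair(faulty_node))
print(choose_repair(healthy_node))
```

In a real cluster, a controller would read conditions from the Kubernetes API and create the corresponding repair resource rather than returning a string.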

Eviction Grace Periods

In addition to being available through the Kubernetes API, the new actions honor Kubernetes availability best practices. As with the existing ability to terminate and replace nodes through the node cycling and delete node APIs, rebooting and boot volume replacement let you set an eviction grace period: the length of time to allow nodes to be cordoned and drained before action is taken on them. This option respects any pod disruption budgets set for your workloads, which avoids the interruptions that might occur if a pod were still running when action was taken on a node. If any pods fail to evict by the end of the eviction grace period, you have the option to either cancel the operation or move ahead with the action.
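The decision at the end of the grace period can be sketched as a small function. This is a simplified model of the behavior described above, not OKE's implementation; the function and parameter names are hypothetical.

```python
def decide_after_drain(
    pending_pods: list,
    elapsed_seconds: int,
    grace_period_seconds: int,
    force_after_grace: bool,
) -> str:
    """Decide what to do with a node that is being drained before a repair."""
    if not pending_pods:
        # Every pod was evicted, so the repair action can go ahead.
        return "proceed"
    if elapsed_seconds < grace_period_seconds:
        # Pods remain but the grace period has not expired yet.
        return "wait"
    # Grace period expired with pods still present: honor the user's choice.
    return "proceed" if force_after_grace else "cancel"


print(decide_after_drain([], 10, 600, False))
print(decide_after_drain(["db-0"], 120, 600, False))
print(decide_after_drain(["db-0"], 600, 600, False))
print(decide_after_drain(["db-0"], 600, 600, True))
```

Choosing to cancel favors workload availability, while choosing to proceed favors getting the node repaired.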

maxUnavailable Nodes

When taking action on multiple nodes at once, for example replacing the boot volume of all nodes in a node pool, you can tailor OKE behavior to meet your requirements for service availability and cost. You can specify the number of nodes allowed to be concurrently unavailable during the operation, which is referred to as maxUnavailable. The greater the number of nodes that you allow to be unavailable at one time, the more nodes can be repaired in parallel, but the more service availability might be compromised. You can select a managed node pool and choose to terminate and replace all of the nodes within it, or to replace the boot volumes of all the nodes within it. At this time you cannot select a managed node pool and reboot all the nodes within it; you have to reboot nodes individually.
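To make the trade-off concrete, here is a minimal sketch that splits a node pool into repair batches no larger than maxUnavailable. It assumes a simple batch-by-batch model; the actual scheduling inside OKE may differ, and the function and node names are hypothetical.

```python
def repair_batches(nodes: list, max_unavailable: int) -> list:
    """Group nodes so that at most max_unavailable are repaired at the same time."""
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    # Slice the node list into consecutive chunks of size max_unavailable.
    return [
        nodes[start:start + max_unavailable]
        for start in range(0, len(nodes), max_unavailable)
    ]


pool = ["node-a", "node-b", "node-c", "node-d", "node-e"]
for batch in repair_batches(pool, 2):
    print(batch)
```

With five nodes, a maxUnavailable of 1 yields five sequential steps, while a value of 5 repairs the whole pool at once and leaves no node available in the meantime.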

Comprehensive Node Support

A goal for the feature was to be able to repair all types of nodes, including OKE managed nodes and self-managed nodes, as well as nodes backed by OCI virtual machine shapes and bare metal shapes. Traditionally, the most commonly used OKE nodes are those in a managed node pool backed by virtual machines. Nodes outside of this type, such as bare metal or self-managed nodes, had fewer operational features available to them. To provide a unified experience, it was important for us to ensure that all node types would benefit from these new operational features.

Conclusion

Instance termination and replacement is no longer the only way to address node health issues. Rebooting or replacing the boot volume of nodes can address a large number of host-level issues, with the benefit of faster correction and shorter workload interruption compared to terminating and replacing instances. These alternative actions respect Kubernetes availability settings and can be triggered through the OCI API or directly through the Kubernetes API. The Kubernetes-native path avoids a split experience of jumping between the Kubernetes and OCI APIs and lends itself to extensibility.

For more information, see the following resources: