In high-performance computing (HPC), compute nodes and a fast remote direct memory access (RDMA) network are the main workhorses. HPC systems typically manage their workloads with specialized workload managers and job schedulers such as Slurm. However, in certain scenarios you might opt for Kubernetes instead to create and manage the cluster. Several factors can drive this decision, such as the need for seamless integration with other systems, environments, or APIs. Moreover, present-day HPC workloads increasingly demand scalability and containerization for better productivity and manageability, which has led to Kubernetes being used for HPC use cases. Kubernetes excels at orchestrating containers, but deploying distributed HPC applications on Kubernetes can be tricky.

This post demonstrates how to implement an RDMA-enabled HPC cluster using Oracle Kubernetes Engine (OKE). In addition to the cluster setup, it shows how to use the Oracle Cloud Infrastructure (OCI) File Storage service as shared network file system (NFS) storage so that the cluster nodes can share files. While this example focuses on OCI File Storage, you can use any other compatible file storage system, based on your preferences and requirements.

The steps outlined in this blog post provide insights into setting up an HPC cluster using OKE, configuring shared storage with OCI File Storage, and automating the provisioning process using Terraform. This approach offers flexibility, scalability, and integration capabilities, making it an attractive option for HPC workloads in various scenarios.

Architecture

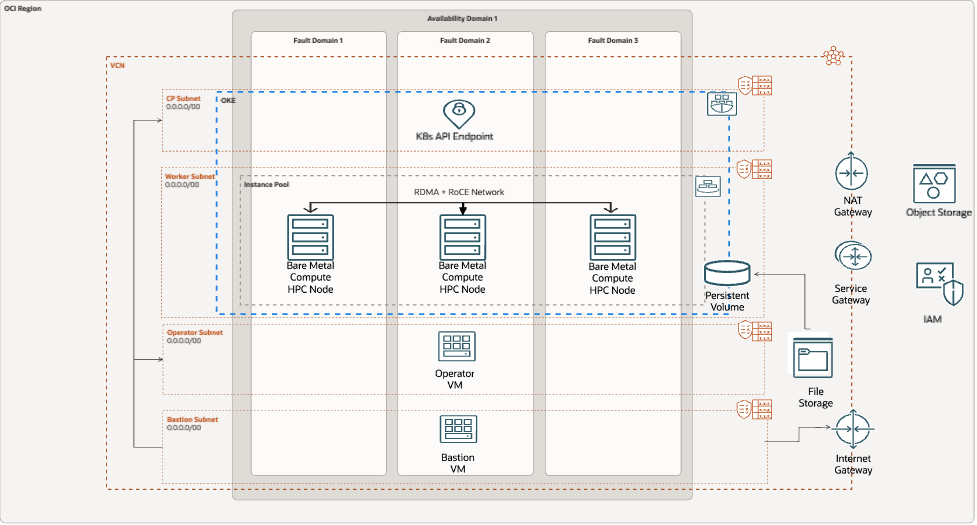

The following diagram shows the architectural components of the HPC environment integrated in an OKE environment.

Figure 1: OKE HPC architecture

The solution uses the following components:

- Bastion host: A virtual machine (VM) with a Standard.E4.Flex shape, used as a jump host.

- Operator VM: Uses a Standard.E4.Flex shape and provides access to the Kubernetes API endpoint.

- HPC nodes: Bare metal instances using the BM.Optimized3.36 shape, with 36 Intel Xeon Gold 6354 cores, a 3.84-TB NVMe SSD, and a 100-Gbps RDMA network.

- OCI File Storage: Enterprise-grade network file system.

- Other OCI services, including Block Volume, Object Storage, and Identity and Access Management (IAM), are used to prepare and run the environment.

GitHub repository

The setup uses a GitHub repository that describes how to implement HPC with RDMA networking on OKE, with OCI File Storage as a persistent volume. It mainly contains Terraform and YAML files to set up the architecture.

On the HPC nodes, we use the custom HPC image provided in that repository.

Deploying HPC nodes in an OKE environment

You can deploy the OKE cluster with the Terraform scripts, using bare metal BM.Optimized3.36 nodes with RDMA connectivity, as described in the GitHub documentation. The HPC node pool must use a special HPC cluster network image prepared by the Oracle HPC team, as explained in Running RDMA (remote direct memory access) GPU workloads on OKE using GPU Operator and Network Operator. This image includes the OFED drivers and the other packages required for RDMA networking. You can download the base image from Object Storage.

Connect to the operator VM through the bastion host with SSH and run kubectl get nodes. You should see output similar to Figure 2.

```
$ ssh -o ProxyCommand='ssh -W %h:%p -i <path-to-private-key> opc@<bastion-ip>' -i <path-to-private-key> opc@<operator-ip>

[opc@o-djtrktw ~]$ kubectl get nodes
NAME           STATUS   ROLES    AGE     VERSION
10.0.146.126   Ready    <none>   7d23h   v1.27.2
10.0.146.9     Ready    node     7d23h   v1.28.2
10.0.149.221   Ready    <none>   7d23h   v1.27.2
```

Figure 2: OKE nodes status
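If you connect often, the long ProxyCommand invocation can be replaced by an OpenSSH client configuration that uses the standard ProxyJump option. The following is a minimal sketch. The host aliases `oke-bastion` and `oke-operator` and the output file `oke-ssh-config` are hypothetical. The bastion IP, operator IP, and key path remain placeholders that you must fill in.

```shell
#!/usr/bin/env bash
# Hypothetical placeholders: override via environment variables or edit the defaults.
BASTION_IP="${BASTION_IP:-<bastion-ip>}"
OPERATOR_IP="${OPERATOR_IP:-<operator-ip>}"
SSH_KEY="${SSH_KEY:-<path-to-private-key>}"

# Write the entries to a standalone file so that ~/.ssh/config is not modified blindly.
cat > oke-ssh-config <<EOF
Host oke-bastion
    HostName ${BASTION_IP}
    User opc
    IdentityFile ${SSH_KEY}

Host oke-operator
    HostName ${OPERATOR_IP}
    User opc
    IdentityFile ${SSH_KEY}
    # Reach the operator VM through the bastion (same effect as ProxyCommand 'ssh -W %h:%p ...').
    ProxyJump oke-bastion
EOF

echo "Review oke-ssh-config, append it to ~/.ssh/config, then run: ssh oke-operator"
```

After you append the entries to `~/.ssh/config`, `ssh oke-operator` opens the session through the bastion. You can then run `kubectl get nodes` as before.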

The HPC nodes are now ready to run cluster workloads over the RDMA network.

HPC cluster network verifications

After the RDMA cluster is provisioned, we need to verify that the RDMA network is ready to run parallel distributed workloads.

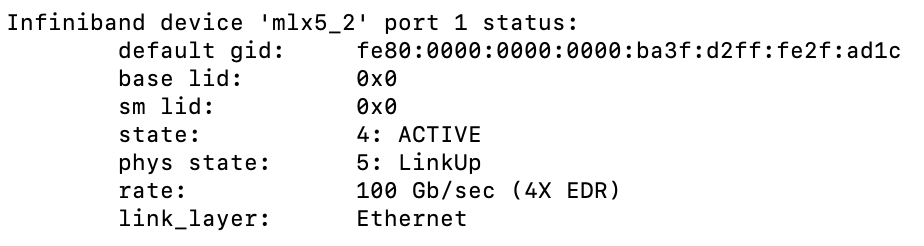

To ensure that the network interfaces are configured with proper OFED drivers and the links are active, we check the corresponding network interfaces with the following command:

$ ib_status

Figure 3: HPC node Infiniband device

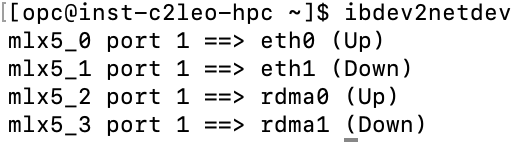

The mapping of the network devices with ibdev2netdev, one of the most useful scripts in the MLNX_OFED package, displays the mapping of the adapter port to the net device.

Figure 4: RDMA network device mapping
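On a cluster with many nodes, reading the ibdev2netdev output by eye does not scale well. The following sketch turns it into a pass/fail check. The helper name `check_rdma_links` is hypothetical. It assumes the usual ibdev2netdev line format, `<ibdev> port <n> ==> <netdev> (<state>)`, and treats any state other than `(Up)` as a failure.

```shell
#!/usr/bin/env bash
# check_rdma_links (hypothetical helper): reads `ibdev2netdev` output on stdin.
# Assumed line format:  mlx5_0 port 1 ==> <netdev> (Up)
# Prints each port whose state is not "(Up)", then a summary line.
# Returns 0 only if at least one port was found and all ports are up.
check_rdma_links() {
    awk '
        # Fields: $1=RDMA device, $3=port number, $5=net device, $6=link state
        $2 == "port" && $4 == "==>" {
            total++
            if ($6 != "(Up)") {
                down++
                print "link not up: " $1 " port " $3 " -> " $5 " " $6
            }
        }
        END {
            printf "%d of %d RDMA port(s) up\n", total - down, total
            exit (down > 0 || total == 0)
        }'
}
```

On an HPC node, run `ibdev2netdev | check_rdma_links`. A non-zero exit status means a port is down or no RDMA ports were detected, which makes the check easy to call from a node health script.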

To test from an application's point of view, we ran a few MPI point-to-point and collective tests, including PingPong, Allreduce, and Allgather, as described in the post Running Applications on Oracle Cloud Using Cluster Networking. The measured node-to-node communication latency was in line with what the OCI cluster networking infrastructure is expected to deliver.
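The post does not name the benchmark suite. The following sketch assumes the Intel MPI Benchmarks (IMB-MPI1), whose PingPong report prints a table with the columns `#bytes`, `#repetitions`, `t[usec]`, and `Mbytes/sec`. The hypothetical helper `pingpong_latency` extracts the latency in microseconds for one message size, so results can be compared across node pairs without reading the full report.

```shell
#!/usr/bin/env bash
# pingpong_latency (hypothetical helper), assuming IMB-MPI1 PingPong text output
# with table rows of the form: #bytes #repetitions t[usec] Mbytes/sec
# Usage: pingpong_latency <message-size-in-bytes> < imb-output.txt
# Prints t[usec] for that size; returns non-zero if the size is not in the report.
pingpong_latency() {
    local size="$1"
    awk -v size="$size" '
        /Benchmarking PingPong/ { in_pingpong = 1; next }
        /Benchmarking/          { in_pingpong = 0 }   # another benchmark section begins
        in_pingpong && $1 ~ /^[0-9]+$/ && $1 == size { print $3; found = 1; exit }
        END { exit !found }'
}
```

For example, with Intel MPI you might run `mpirun -np 2 -ppn 1 -hosts <node1>,<node2> IMB-MPI1 PingPong > pingpong.txt` and then `pingpong_latency 0 < pingpong.txt`. The host names are placeholders, and other MPI implementations use different host-selection options.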

OKE HPC with File Storage mount

HPC workloads typically also require shared, high-performance storage for the data used by distributed parallel jobs, and the performance of this storage directly affects application performance. Although applications running on OKE generally don't need persistent storage, OCI lets you provision persistent volume claims by attaching volumes from the Block Volume service or by mounting file systems from File Storage. We believe that using storage as a service can simplify the HPC deployment and improve stability and availability in the otherwise complex combination of OKE and HPC.

For information on how to create a persistent volume and provision a persistent volume claim based on it, see Provisioning PVCs on the File Storage Service.

For this scenario, we create a persistent volume claim on an existing file system in OCI File Storage. You can find sample YAML files in the OKE FSS PV folder of the GitHub repository. Use kubectl create to create the persistent volume first and then the persistent volume claim. After the claim is created, HPC workloads can mount it as storage in their pods.
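The following sketch shows what a statically provisioned volume and claim on an existing file system can look like. It follows the OCI File Storage CSI pattern, in which `volumeHandle` has the form `<file-system-ocid>:<mount-target-ip>:<export-path>`. It is not a copy of the repository's files, which may differ. The object names, the `50Gi` size, and the output file names are hypothetical. The placeholders must be replaced before you create the objects.

```shell
#!/usr/bin/env bash
# Hypothetical placeholders: set these to your file system OCID,
# mount target private IP, and export path.
FS_OCID="${FS_OCID:-<file-system-ocid>}"
MOUNT_TARGET_IP="${MOUNT_TARGET_IP:-<mount-target-ip>}"
EXPORT_PATH="${EXPORT_PATH:-<export-path>}"

# Static PersistentVolume backed by an existing File Storage file system,
# mounted through the OCI File Storage CSI driver.
cat > fss-pv-example.yaml <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: hpc-fss-pv
spec:
  capacity:
    storage: 50Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany        # shared by pods on all HPC nodes
  persistentVolumeReclaimPolicy: Retain
  csi:
    driver: fss.csi.oraclecloud.com
    volumeHandle: ${FS_OCID}:${MOUNT_TARGET_IP}:${EXPORT_PATH}
EOF

# Claim bound explicitly to the PV above; the empty storageClassName
# prevents dynamic provisioning through a default StorageClass.
cat > fss-pvc-example.yaml <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: hpc-fss-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""
  resources:
    requests:
      storage: 50Gi
  volumeName: hpc-fss-pv
EOF
```

ReadWriteMany is what allows every HPC node to mount the same file system at once. Create the volume before the claim, for example `kubectl create -f fss-pv-example.yaml` followed by `kubectl create -f fss-pvc-example.yaml`.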

```
[opc@o-gbfokg ~]$ kubectl create -f hpc-fss-pv.yaml
```

Figure 5: Creating a persistent volume

```
[opc@o-gbfokg ~]$ kubectl create -f hpc-fss-pvc.yaml
```

Figure 6: Creating a persistent volume claim
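To confirm that pods can actually use the claim, you can mount it in a throwaway pod that writes a file. The following sketch generates such a manifest. The pod name `fss-test-pod`, the `busybox:1.36` image, the mount path `/mnt/shared`, and the claim name `hpc-fss-pvc` are hypothetical. The claim name must match `metadata.name` in your hpc-fss-pvc.yaml.

```shell
#!/usr/bin/env bash
# Hypothetical names: PVC_NAME must match the claim created from hpc-fss-pvc.yaml.
PVC_NAME="${PVC_NAME:-hpc-fss-pvc}"
MOUNT_PATH="${MOUNT_PATH:-/mnt/shared}"

cat > fss-test-pod.yaml <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: fss-test-pod
spec:
  containers:
    - name: writer
      image: busybox:1.36
      # Write a marker file to the shared volume, then stay alive for inspection.
      command: ["sh", "-c", "date > ${MOUNT_PATH}/fss-test.txt && sleep 3600"]
      volumeMounts:
        - name: shared
          mountPath: ${MOUNT_PATH}
  volumes:
    - name: shared
      persistentVolumeClaim:
        claimName: ${PVC_NAME}
EOF
```

First check that `kubectl get pvc` reports the claim as `Bound`. Then run `kubectl create -f fss-test-pod.yaml` and `kubectl exec fss-test-pod -- cat /mnt/shared/fss-test.txt`. Because the volume is shared, a second pod on another HPC node that mounts the same claim should see the same file.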

Conclusion

This solution uses a secure container platform, OKE, to run containerized HPC applications. It improves agility, manageability, and efficiency for HPC users who want a Kubernetes environment. It also lets OCI HPC users get the full performance of OCI bare metal HPC nodes while remaining scalable and manageable with OKE.

While you can choose from different shared storage solutions for HPC workloads, Oracle File Storage Service (FSS) is an easy-to-deploy option that is already integrated with OKE and can be consumed by OKE pods through a persistent volume claim (PVC). The integration of OKE and FSS streamlines the deployment and management of containerized HPC applications, allowing users to focus on their core objectives rather than on infrastructure complexities.

For more information, see the following resources:

- High-performance computing (HPC) on OCI

- Oracle Kubernetes Engine (OKE)

- OCI File Storage service

- GitHub repository for OKE, HPC, and File Storage service

- GitHub repository for OKE, HPC, and GPU

- OCI custom image for HPC on OKE

- Oracle blog post on HPC on RDMA network

- Oracle blog post on building high performance network

- Provisioning persistent volume claims on OCI File Storage