Oracle Container Engine for Kubernetes (OKE) currently enables you to create clusters with Flannel as the default container network interface (CNI). Flannel is a simple overlay virtual network that satisfies the requirements of the Kubernetes networking model by attaching IP addresses to containers and using virtual extensible LAN (VXLAN) to facilitate network connectivity.

Today, we’re pleased to announce that your Kubernetes pods can natively be a part of your virtual cloud network (VCN) with the general availability of VCN-native pod networking for OKE. A VCN is a customizable software-defined network that enables you to manage and scale your infrastructure on OCI using its rich set of features, such as Virtual Test Access Points (VTAPs) and flow logs. Your Kubernetes pods can now benefit from more flexibility, more granular security, and consistent performance. This option complements the Flannel overlay network that we provide today, so customers have more choices for how to plumb their pod network.

Introducing the OCI VCN-native CNI for OKE

VCN-native pod networking provides a new CNI plugin for Kubernetes clusters managed by OKE. This CNI gives each pod an IP address from the VCN CIDR, resulting in the same network performance as virtual machines with no additional overlay required. Your pods are routable within your VCN, between VCNs, and with your on-premises network over FastConnect or IPSec VPN. Because each pod has its own IP address, you can identify the network traffic going in and out of individual pods, and you can use VCN flow logs to audit the pod traffic that passes through your VCN.

While we recommend accessing applications in your Kubernetes cluster through a Kubernetes service, the ability to reach pods by their IP addresses unlocks various use cases. For example, services running on an OKE cluster might make outbound calls to the internet, and the administrator wants end-to-end visibility of the packets all the way down to the pod level. In these scenarios, the VCN-native pod networking plugin preserves the pod’s IPv4 address instead of translating it to the IPv4 address of the node that the pod runs on, so the pod’s network traffic details can be logged by services such as VCN flow logs.

When you enable VCN-native pod networking in your cluster, OKE automatically deploys, configures, and maintains the VCN-native pod networking CNI in your cluster. The CNI creates a “warm pool” of IP addresses by preallocating IP addresses on OKE nodes to reduce scheduling latency. Because the worker nodes already have IP addresses allocated to them, Kubernetes doesn’t need to wait for an IP address to be assigned before it can schedule a pod.
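To make the warm-pool idea concrete, here is a minimal toy model in Python. It is only a sketch: the `WarmIpPool` class, the pool size, and the example CIDR are hypothetical, and the actual CNI's allocation logic is not described in this post.

```python
from collections import deque
from ipaddress import ip_network


class WarmIpPool:
    """Toy model of a per-node pool of preallocated pod IP addresses."""

    def __init__(self, subnet_cidr, warm_size):
        # Hypothetical stand-in for the pod subnet: an iterator of host addresses.
        self._subnet_hosts = iter(ip_network(subnet_cidr).hosts())
        self._warm = deque()
        self._target = warm_size
        self.refill()

    def refill(self):
        """Preallocate addresses until the pool is back at its target size."""
        while len(self._warm) < self._target:
            ip = next(self._subnet_hosts, None)
            if ip is None:  # the subnet has no more addresses to preallocate
                break
            self._warm.append(ip)

    def assign(self):
        """Give a new pod an address that was allocated ahead of time."""
        if not self._warm:
            raise RuntimeError("no preallocated IP addresses left")
        ip = self._warm.popleft()
        self.refill()  # top the pool back up after handing out an address
        return ip


pool = WarmIpPool("10.0.32.0/28", warm_size=3)
first_pod_ip = pool.assign()
second_pod_ip = pool.assign()
print(first_pod_ip, second_pod_ip)
```

The point of the design is that `assign` never waits on an allocation: the slow step happens in `refill`, ahead of the pod that needs the address.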

VCN-native pod networking provides pods with high throughput, low latency, and consistent performance. Our performance tests indicate that OCI VCN-native pod networking provides 25% lower latency than Flannel for pod-to-pod communication across different nodes. A low and consistent network transmission time between pods is essential for real-time applications such as video conferencing, internet of things-based smart home apps, and online gaming. With OCI VCN-native pod networking, pods communicate at the speed of OCI networking without overlay overhead.

With VCN-native pod networking, you can configure a single subnet for all your cluster components (API server, worker nodes, load balancers, and now pods). However, the best practice is to keep the components in separate subnets. Another common practice made more secure by VCN-native pod networking is to share the same network (VCN or subnet) among multiple Kubernetes clusters, such as a production and a non-production cluster.

Fine-grained security rules in network security groups (NSGs) control how your fleet of pods accesses other resources within your VCN, a peered VCN, or an on-premises network. In these scenarios, you can use the same subnet of the VCN for both the production and non-production clusters and still achieve granular control over securing the cloud native applications running on those clusters.

Granular network policy enforcement at the pod level, such as with Calico, also helps protect your application workloads and provides a layered defense against today’s constantly evolving threat landscape.

Comparing CNI for OKE

Before you go ahead and configure VCN-native pod networking, let’s do a deep dive and understand how VCN-native pod networking differs from OCI pod networking with Flannel.

Native pod networking

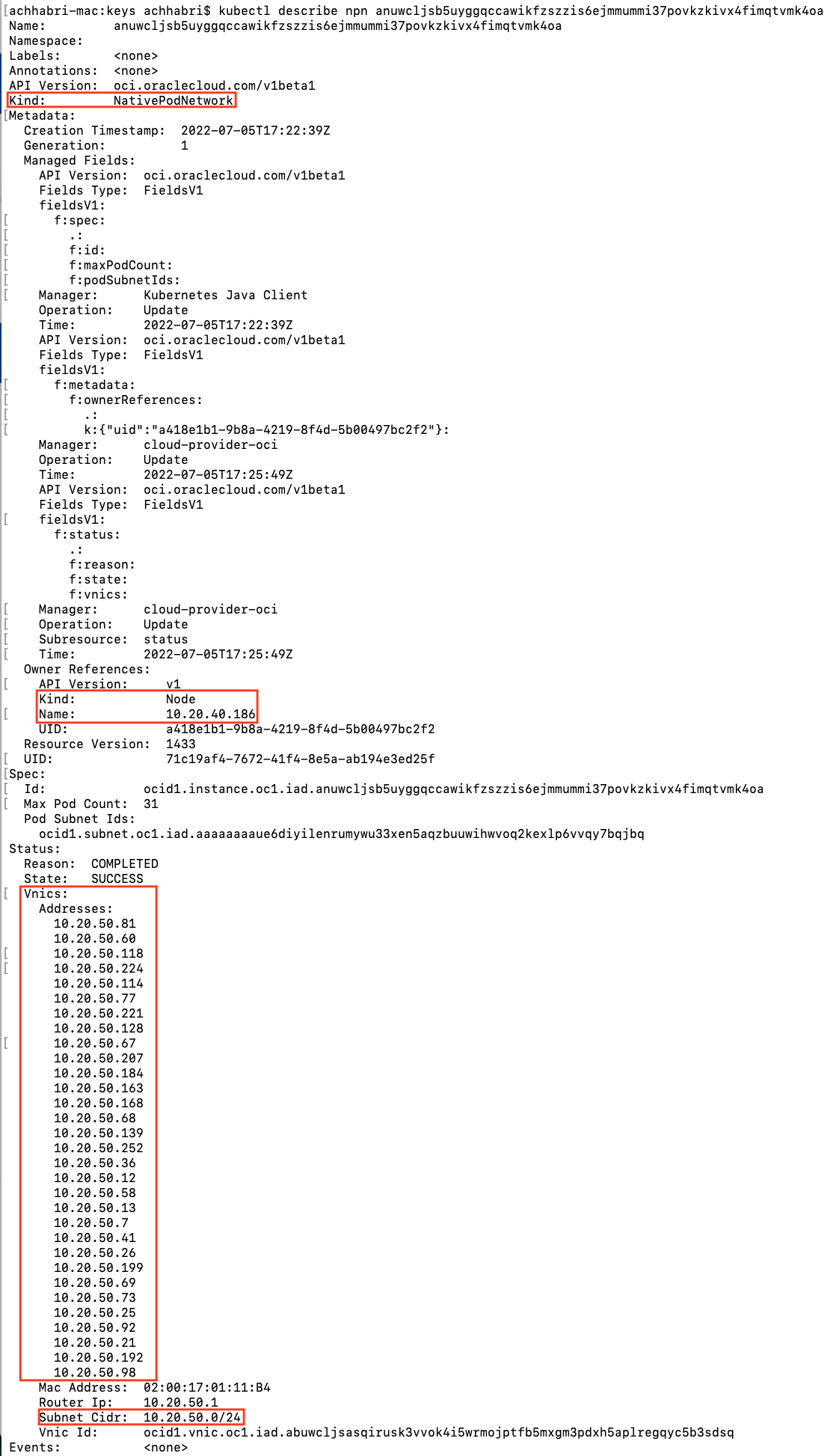

With VCN-native pod networking, OCI uses its ability to attach multiple virtual network interface cards (vNICs) to a virtual machine (VM) and to associate multiple secondary IP addresses with each vNIC. The CNI daemon set installs the correct CNI binaries and configuration on each node, and pods are then set up using that configuration and those binaries.

Each pod is then assigned a free secondary IP address and communicates inbound and outbound over the corresponding interface. This way, each pod is connected to a vNIC and is directly hooked up to the VCN. Pods are assigned a private IP address from the pod subnet. Communication between pods goes through the VCN natively, without encapsulation. Kubernetes services (cluster IPs) still get an IP address from the CIDR block defined for the cluster.
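The split between pod addresses and service addresses can be checked with a few lines of Python. This is an illustrative sketch only: both CIDR blocks and the `classify` helper are example values chosen for the demonstration, not settings taken from a real cluster.

```python
from ipaddress import ip_address, ip_network

# Example values only; substitute the CIDR blocks of your own cluster.
POD_SUBNET = ip_network("10.0.32.0/19")    # hypothetical VCN pod subnet
SERVICE_CIDR = ip_network("10.96.0.0/16")  # hypothetical services CIDR


def classify(ip):
    """Report which address space an IP address belongs to."""
    addr = ip_address(ip)
    if addr in POD_SUBNET:
        return "pod (routable in the VCN)"
    if addr in SERVICE_CIDR:
        return "service (cluster IP)"
    return "other"


for ip in ("10.0.40.17", "10.96.0.10", "192.168.1.1"):
    print(ip, "->", classify(ip))
```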

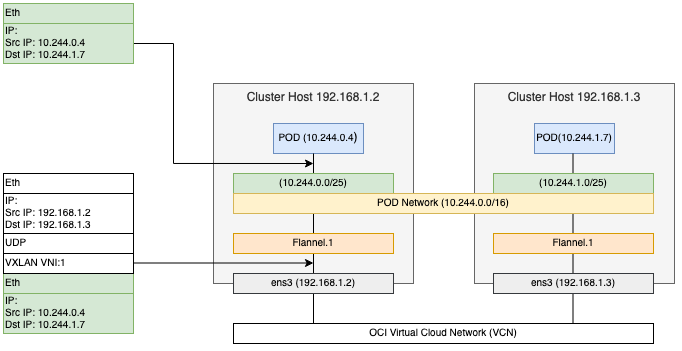

Flannel overlay networking

Flannel offers a simple pattern for meeting basic network requirements in OKE: essentially a virtual network within the VCN, where pods are hooked up to the inner virtual network. In this model, Kubernetes worker nodes run several pods that get their own IP addresses from a private overlay network specific to a cluster. This extra overlay layer is built using the Flannel CNI plugin, which provides networking at Layer 3. VXLAN is a protocol that encapsulates traffic in UDP packets, and Flannel uses it to carry pod-to-pod traffic between different hosts.
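As a rough illustration of what VXLAN encapsulation adds to each packet, the following Python sketch builds the 8-byte VXLAN header defined in RFC 7348 and computes the MTU left for the overlay. The function names are hypothetical and the code does not reproduce Flannel's implementation; it only shows the framing arithmetic for an IPv4 underlay.

```python
import struct

# Bytes added around each inner packet on an IPv4 underlay.
OUTER_IPV4_HEADER = 20
OUTER_UDP_HEADER = 8
VXLAN_HEADER = 8
INNER_ETHERNET_HEADER = 14


def vxlan_header(vni):
    """Build the VXLAN header: flags byte 0x08, then a 24-bit network ID."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # the I flag marks the VNI field as valid
    return struct.pack("!II", flags << 24, vni << 8)


def encapsulate(inner_frame, vni):
    """Prefix an inner Ethernet frame with a VXLAN header (the UDP payload)."""
    return vxlan_header(vni) + inner_frame


def overlay_mtu(underlay_mtu):
    """Largest inner IP packet that fits in one underlay packet."""
    overhead = (OUTER_IPV4_HEADER + OUTER_UDP_HEADER
                + VXLAN_HEADER + INNER_ETHERNET_HEADER)
    return underlay_mtu - overhead


print(vxlan_header(1).hex())
print(overlay_mtu(1500))
```

The 50 bytes of per-packet overhead are the cost that a natively routed pod network avoids.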

The previous diagram shows how a packet flows between two pods on different hosts. If your application environment requires that pods not consume IP addresses from the VCN, or requires a high density of pods per worker node, then the Flannel CNI is preferable. A related concern is VCN IP starvation: because overlay pods don’t take addresses from the VCN, the overlay frees up a considerable number of IP addresses within the CIDR block.
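A quick back-of-the-envelope check can show whether IP starvation is a concern for a given pod subnet. The sketch below is hypothetical: the helper names, node count, and pods-per-node figure are illustrative, and the count of three reserved addresses per subnet is an assumption that you should confirm against the OCI networking documentation.

```python
from ipaddress import ip_network

# Assumption: three addresses in each subnet are reserved and unavailable.
RESERVED_PER_SUBNET = 3


def usable_addresses(cidr):
    """Addresses in the subnet that remain available after reservations."""
    return max(ip_network(cidr).num_addresses - RESERVED_PER_SUBNET, 0)


def pod_subnet_fits(cidr, nodes, pods_per_node):
    """True if every pod on every node can get its own VCN address."""
    return nodes * pods_per_node <= usable_addresses(cidr)


print(usable_addresses("10.0.32.0/24"))
print(pod_subnet_fits("10.0.32.0/24", nodes=8, pods_per_node=31))
print(pod_subnet_fits("10.0.32.0/24", nodes=10, pods_per_node=31))
```

With an overlay network, only the nodes draw addresses from the VCN, which is why Flannel eases this constraint.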

Getting started

Let’s look at how an administrator sets up the Kubernetes cluster using VCN-native pod networking.

You can configure VCN-native pod networking with the following steps:

- In the Oracle Cloud Console, under the Developer Services group, go to Containers and Artifacts and click Kubernetes Clusters (OKE). Under Scope, choose a compartment that you have permission to work in, and then click Create Cluster.

-

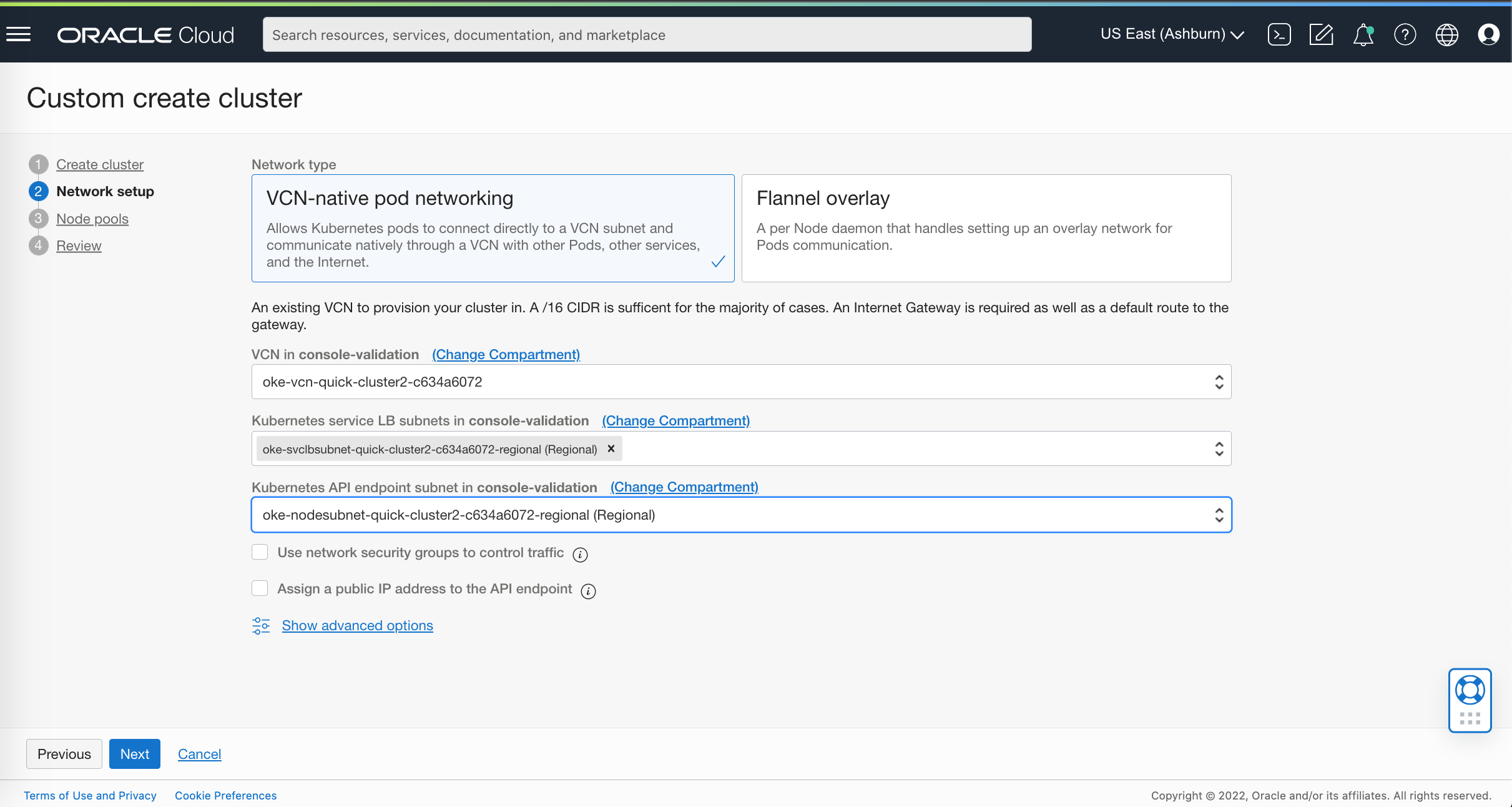

In the networking setup section, specify to use native pod networking during the cluster creation.

- When it’s enabled, choose the VCN and subnets for the load balancer and API endpoints.

-

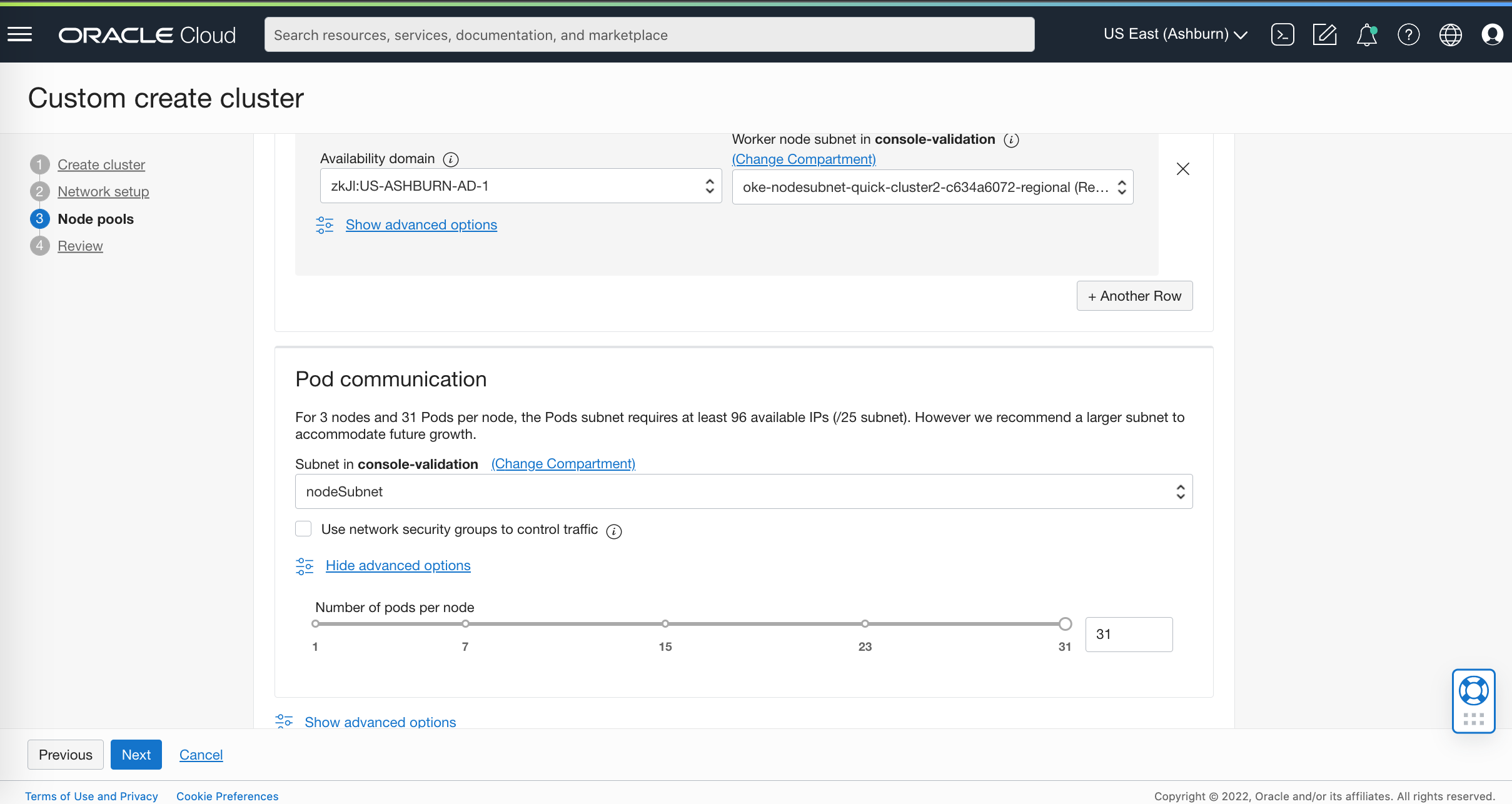

When creating a node pool, you see an extra subnet option for pods. With native pod networking, pods aren’t required to be hooked up to the same subnet or VCN as the node its on. Worker nodes are attached to the node and pod subsets. For Kubelet, kube-proxy, and networking daemons communication, the node subnet supports the communication between the Kubernetes Control Plane and Kubelet or kube-proxy running on each worker nodes. It can be public or private, depending on how you expose your worker nodes.

- Review the overall configuration. If everything looks right, create the cluster.

-

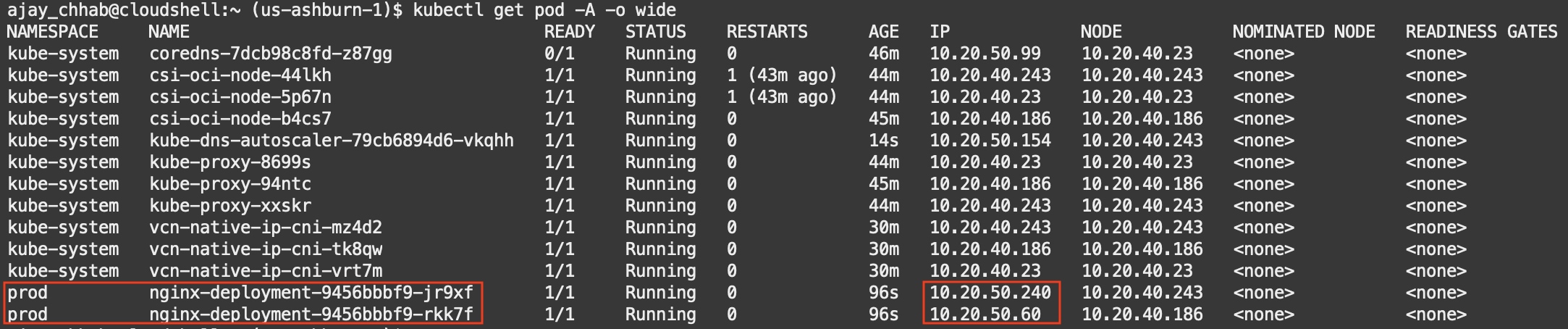

When the cluster is up and running, the IP addresses are assigned to the pods from the VCN pod subnet CIDR block. The pod IPs closely match with the worker nodes if the pod subnet chosen is the same as the worker subnet.

Next steps

VCN-native pod networking connects your Kubernetes pods to your VCN, so you benefit from the VCN security features and performance. For more information, see the VCN-native pod networking documentation. To try this new feature and all the enterprise-grade capabilities that Oracle Cloud Infrastructure offers, create your Always Free account today with a US$300 free credit.