The efficiency and accuracy of inference models play a pivotal role in determining the practical usability of AI solutions in real-world applications. In this blog post, we’re sharing inference benchmarks of Reka models running on Oracle Cloud Infrastructure (OCI) and focusing on key performance metrics, such as throughput, latency, and multimodal evaluation. The Chatbot Arena, formerly LMSYS, provides insights into text-based model evaluation, while Vibe-Eval offers a framework for challenging multimodal models.

Reka models, such as Reka Core and Reka Flash, are frontier multimodal language models designed to handle complex text and image prompts. On the text side, the Chatbot Arena provides a dynamic, public leaderboard of model performance evaluated by user-generated prompts and ratings. This platform enables a fair, real-time comparison between Reka models and other language models, accounting for prompt difficulty and varying user expectations. A benchmark created by Reka, Vibe-Eval serves as a hard evaluation suite for these multimodal language models. It comprises 269 visual understanding prompts, categorized into normal and hard sets, with a significant portion that even the most advanced models struggle to solve.

We included the following key metrics:

- Throughput: Number of output tokens per second that an inference server can generate

- Time to first token: The latency in seconds to produce the first token

- Time per output token: The average time taken per token during inference
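To make these definitions concrete, here is a minimal sketch of how the three metrics could be derived for a single streamed response. It is not the harness used for the numbers in this post: `measure_stream`, `StreamMetrics`, and the injected `clock` are hypothetical names, and the token stream is simulated so the example runs without any API access.

```python
import time
from typing import Callable, Iterable, NamedTuple


class StreamMetrics(NamedTuple):
    output_tokens: int
    time_to_first_token_s: float    # request start -> first token
    time_per_output_token_s: float  # mean gap between tokens after the first
    throughput_tokens_per_s: float  # output tokens / total elapsed time


def measure_stream(
    tokens: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> StreamMetrics:
    """Consume a token stream and time each arrival (hypothetical helper)."""
    start = clock()
    arrivals = []
    for _ in tokens:
        arrivals.append(clock())  # timestamp taken as each token is received
    if not arrivals:
        raise ValueError("stream produced no tokens")
    n = len(arrivals)
    ttft = arrivals[0] - start
    total = arrivals[-1] - start
    tpot = (arrivals[-1] - arrivals[0]) / (n - 1) if n > 1 else 0.0
    throughput = n / total if total > 0 else float("inf")
    return StreamMetrics(n, ttft, tpot, throughput)


# Simulated run: a fake clock stands in for real time so the result is
# deterministic. First token at 0.5 s, then one token every 0.25 s.
fake_ticks = iter([0.0, 0.5, 0.75, 1.0, 1.25, 1.5])
demo = measure_stream(["a", "b", "c", "d", "e"], clock=lambda: next(fake_ticks))
print(demo)
```

In a real run, you would pass the SDK's streaming iterator as `tokens` and keep the default clock. Server-level throughput is then the sum of output tokens across all concurrent requests divided by the wall-clock time of the run.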

Setting up the test environment on OCI

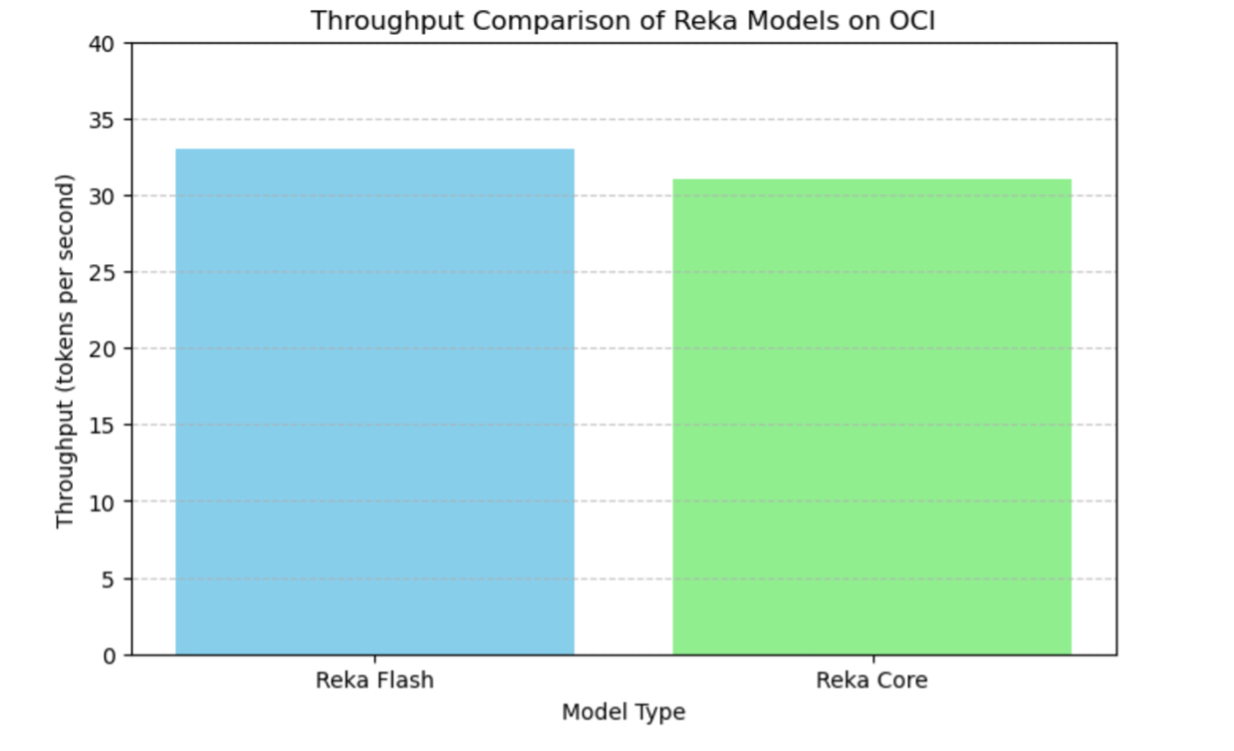

OCI offers a robust infrastructure for deploying and running large-scale AI models. Reka Flash and Reka Core are advanced multimodal language models developed to tackle a wide range of complex tasks involving both text and image inputs. Reka Flash is optimized for high throughput and low latency, making it particularly suitable for real-time applications where speed is critical. It achieves a throughput of approximately 33 tokens per second and a time to first token latency of around 0.80 seconds, ensuring rapid response in dynamic environments. Reka Core focuses on comprehensive understanding and reasoning capabilities, excelling in more challenging scenarios that require intricate decision-making and nuanced interpretations.

Use the following step-by-step guide to set up the test environment on OCI:

1. Set up the OCI environment with the following commands:

export OCI_CLI_PROFILE=YOUR_PROFILE_NAME

oci setup config

2. Create a virtual cloud network (VCN) for hosting the inference environment:

oci network vcn create --compartment-id YOUR_COMPARTMENT_ID --display-name "Reka-VCN" --cidr-block "10.0.0.0/16"

3. Launch a Compute instance with a GPU in a subnet of that VCN:

oci compute instance launch --compartment-id YOUR_COMPARTMENT_ID --availability-domain YOUR_AVAILABILITY_DOMAIN --shape "VM.GPU3.1" --image-id YOUR_IMAGE_ID --subnet-id YOUR_SUBNET_ID --display-name "Reka-Compute-Instance"
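If you script these steps, one way to avoid copy-paste problems with dashes and quotes is to assemble the command from Python. The sketch below only builds and prints the launch command from step 3 and does not run it. `oci_command` is a hypothetical helper, not part of the OCI CLI or SDK, and the placeholder values are the same ones used above.

```python
import shlex
from typing import List


def oci_command(*subcommand: str, **options: str) -> List[str]:
    """Build an argv list for the OCI CLI (hypothetical helper).

    Keyword names are turned into --kebab-case flags with two ASCII hyphens.
    """
    argv = ["oci", *subcommand]
    for name, value in options.items():
        argv += ["--" + name.replace("_", "-"), value]
    return argv


launch = oci_command(
    "compute", "instance", "launch",
    compartment_id="YOUR_COMPARTMENT_ID",
    availability_domain="YOUR_AVAILABILITY_DOMAIN",
    shape="VM.GPU3.1",
    image_id="YOUR_IMAGE_ID",
    subnet_id="YOUR_SUBNET_ID",
    display_name="Reka-Compute-Instance",
)

# shlex.join quotes values only where the shell needs it.
print(shlex.join(launch))
```

To run the command instead of printing it, the list could be passed to `subprocess.run(launch, check=True)` on a machine where the OCI CLI is installed and configured.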

After the test launchpad is deployed, install the Reka Python software development kit (SDK). Our example uses the Get Models API to list all the Reka models.

from reka.client import Reka

client = Reka()

print(client.models.get())

The command gives the following output:

[

Model(id='reka-core'),

Model(id='reka-core-20240415'),

Model(id='reka-core-20240501'),

Model(id='reka-flash'),

Model(id='reka-flash-20240226'),

Model(id='reka-edge'),

Model(id='reka-edge-20240208'),

]

Next, set up the script for multimodal input to test.

from reka import ChatMessage

client.chat.create(

messages=[

ChatMessage(

role="user",

content=[

{

"type": "text",

"text": "What is this video about?"

},

{

"type": "video_url",

"video_url": "https://fun_video"

}

],

)

],

model="reka-core-20240501",

)
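The `content` list in the request above pairs one text part with one media part, and the media part repeats its type as the key that holds the URL. The following sketch builds that structure with a small check so a mismatched key is caught before the request is sent. `build_content` and `MEDIA_TYPES` are hypothetical names, and the set of accepted media types is an assumption to adjust for your SDK version.

```python
from typing import Dict, List

# Media part types this sketch accepts (an assumption, not an exhaustive list).
MEDIA_TYPES = {"image_url", "video_url"}


def build_content(prompt: str, media_type: str, url: str) -> List[Dict[str, str]]:
    """Return a content list with one text part and one media part."""
    if media_type not in MEDIA_TYPES:
        raise ValueError(f"unsupported media type: {media_type!r}")
    return [
        {"type": "text", "text": prompt},
        # The media part uses its type name as the key for the URL.
        {"type": media_type, media_type: url},
    ]


content = build_content("What is this video about?", "video_url", "https://fun_video")
print(content)
```

The resulting list can be passed as the `content` argument of the `ChatMessage` shown above.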

To run the same test from within the Oracle corporate network, we used the following custom HTTP client, which routes requests through a proxy:

import httpx

from reka.client import Reka

client = Reka(...,

http_client=httpx.Client(

# Newer httpx releases take proxy= in place of proxies=.

proxies="http://my.test.proxy.example.com",

transport=httpx.HTTPTransport(local_address="0.0.0.0"),

),

)

You can download the Vibe-Eval dataset from Hugging Face. We used the Vibe-Eval suite to test Reka models on OCI, paying particular attention to the hard-set prompts, which are designed to challenge the capabilities of frontier models.

from reka_models import VibeEval

# Load Vibe-Eval prompts

vibe_eval = VibeEval.load('vibe-eval-hard-set')

# Evaluate the model (`model` is assumed to be defined earlier in the script)

results = vibe_eval.evaluate(model)

print("Vibe-Eval Hard-Set Score:", results['score'])
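Because Vibe-Eval splits its prompts into normal and hard sets, it helps to report scores per set and not only as a single number. The sketch below shows one way to aggregate per-prompt scores by category. The record layout (`category` and `score` keys) and the sample values are made up for illustration and are not real evaluation results.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Mapping


def mean_score_by_category(records: Iterable[Mapping]) -> Dict[str, float]:
    """Average the per-prompt scores within each category."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record["category"]].append(record["score"])
    return {category: mean(scores) for category, scores in grouped.items()}


# Made-up per-prompt scores, for illustration only.
sample_records = [
    {"category": "normal", "score": 4},
    {"category": "normal", "score": 5},
    {"category": "hard", "score": 2},
    {"category": "hard", "score": 3},
    {"category": "hard", "score": 1},
]

summary = mean_score_by_category(sample_records)
print(summary)
```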

Results

From the tests, we observed the following performance:

- Throughput: Approximately 33 tokens/second

- Latency: Time to first token around 0.80 seconds

- Time per token: Approximately 0.13 seconds/token
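To put these figures in context, the following sketch estimates the end-to-end time for a single response of a given length, assuming the first token arrives after the time to first token and each later token takes the average time per token. `estimated_response_time` is a hypothetical helper, and the model is a simplification that ignores network variance and prompt length. Note also that 33 tokens/second is not the reciprocal of 0.13 seconds/token (1 / 0.13 is roughly 7.7), so the throughput figure may reflect server-level output across concurrent requests, in line with the definition of throughput given earlier. The concurrency level is not stated in this post.

```python
def estimated_response_time(
    output_tokens: int,
    ttft_s: float = 0.80,   # time to first token reported above
    tpot_s: float = 0.13,   # time per output token reported above
) -> float:
    """Rough single-request latency: first token, then the remaining tokens."""
    if output_tokens < 1:
        raise ValueError("output_tokens must be at least 1")
    return ttft_s + (output_tokens - 1) * tpot_s


for n in (1, 50, 200):
    print(f"{n:>4} output tokens: about {estimated_response_time(n):.2f} s")
```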

These results are consistent with the performance metrics that Reka engineers have published.

Conclusion

Evaluated against the Vibe-Eval benchmark, a hard evaluation suite designed to test multimodal language models rigorously, both Reka Flash and Reka Core demonstrate excellent performance, with Reka Core performing exceptionally well on tasks requiring deeper cognitive processing and reasoning. Together, these models provide a versatile toolkit for addressing a broad spectrum of AI-driven tasks, from quick responses in conversational settings to more complex problem-solving scenarios in multimodal contexts.

Benchmarking Reka models on OCI shows how infrastructure choices affect model performance. It validates OCI as a platform for AI workloads and gives a practical view of how frontier multimodal models perform in real-world scenarios. By tuning infrastructure choices and optimizing model deployment, organizations can improve performance and keep their AI solutions efficient and scalable.

Ready to take your AI projects to the next level? OCI offers the high-performance, scalable, and reliable infrastructure you need to deploy advanced AI models like Reka. OCI's GPU instances and low-latency network can raise your model's throughput and reduce its latency. Start with OCI AI Infrastructure today and see the difference in AI inference performance.

For more information, see the following resources: