The Oracle Cloud Infrastructure (OCI) AI Agent Platform is a significant development in enterprise AI, offering a managed platform for advanced AI systems. It combines large language models (LLMs), Retrieval-Augmented Generation (RAG), and tool orchestration, allowing AI agents to perform complex tasks autonomously. The service integrates across the Oracle stack, including databases and cloud infrastructure, enabling efficient data retrieval and API interactions. This integration allows faster and more secure AI implementation that aligns with business processes. By building on its ecosystem and prioritizing security, Oracle aims to lead in the field of autonomous AI agents. OCI AI Agent Platform enables enterprises to build intelligent, secure, and context-aware agents that integrate seamlessly across the Oracle ecosystem.

Introduction, history & evolution

Generative AI has rapidly evolved, transitioning from experimental to essential within enterprises, and revolutionizing various industries. Its initial applications in content creation and language understanding have expanded, significantly impacting software development and operational efficiency. The technology has automated and streamlined numerous tasks, from coding assistance to report synthesis. Now, the focus is advancing towards agentic AI, aiming to integrate AI agents into broader workflows, configuring them to make decisions and take actions based on real-time context. This progression marks a significant step in the evolution of AI, moving towards more intelligent and adaptive systems.

What is Agentic AI?

Agentic AI is the integration of LLM capabilities with advanced reasoning, memory, and tool orchestration to enable intelligent systems that are designed to manage complex processes autonomously. Unlike simple prompt-based systems, agents can work through multiple steps, retrieve relevant information, call external APIs, and adapt based on interaction history. Agentic systems are a result of a steady evolution in machine learning and deep learning.

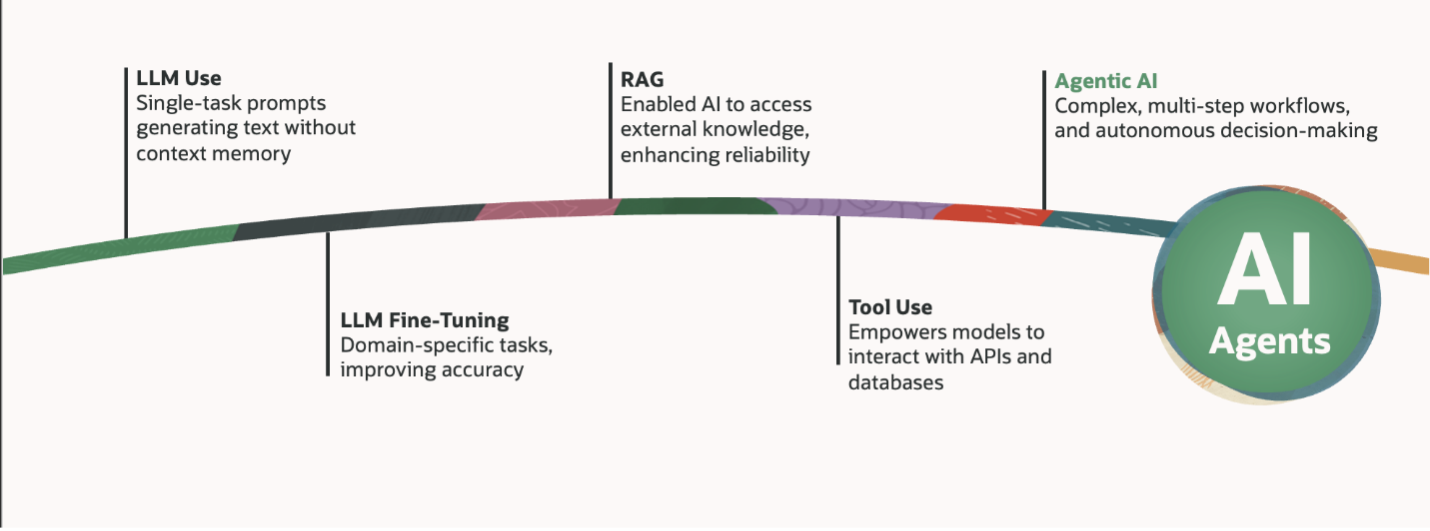

Generative AI’s development is marked by significant milestones. Deep learning and machine learning led to LLMs. Specifically, the adoption of transformer models in natural language processing paved the way for large language models. Early on, LLMs were used for single-step tasks, generating text without contextual memory. Later, fine-tuned LLMs emerged for domain-specific activities, improving accuracy. RAG then enabled AI to access external knowledge, enhancing reliability. Integrated tool use empowered models to interact with APIs and databases, transforming passive assistants into problem-solvers. Finally, agentic AI combines these advancements, creating intelligent agents for complex, multi-step workflows and autonomous decision-making. This progression has elevated generative AI’s capabilities, making it a versatile and powerful enterprise tool.

Figure 1: Evolution from Generative AI to Agentic AI.

Together, these five phases illustrate how enterprise AI has progressed from isolated, one-off tasks to intelligent systems that can engage in complex, dynamic, and meaningful problem-solving processes.

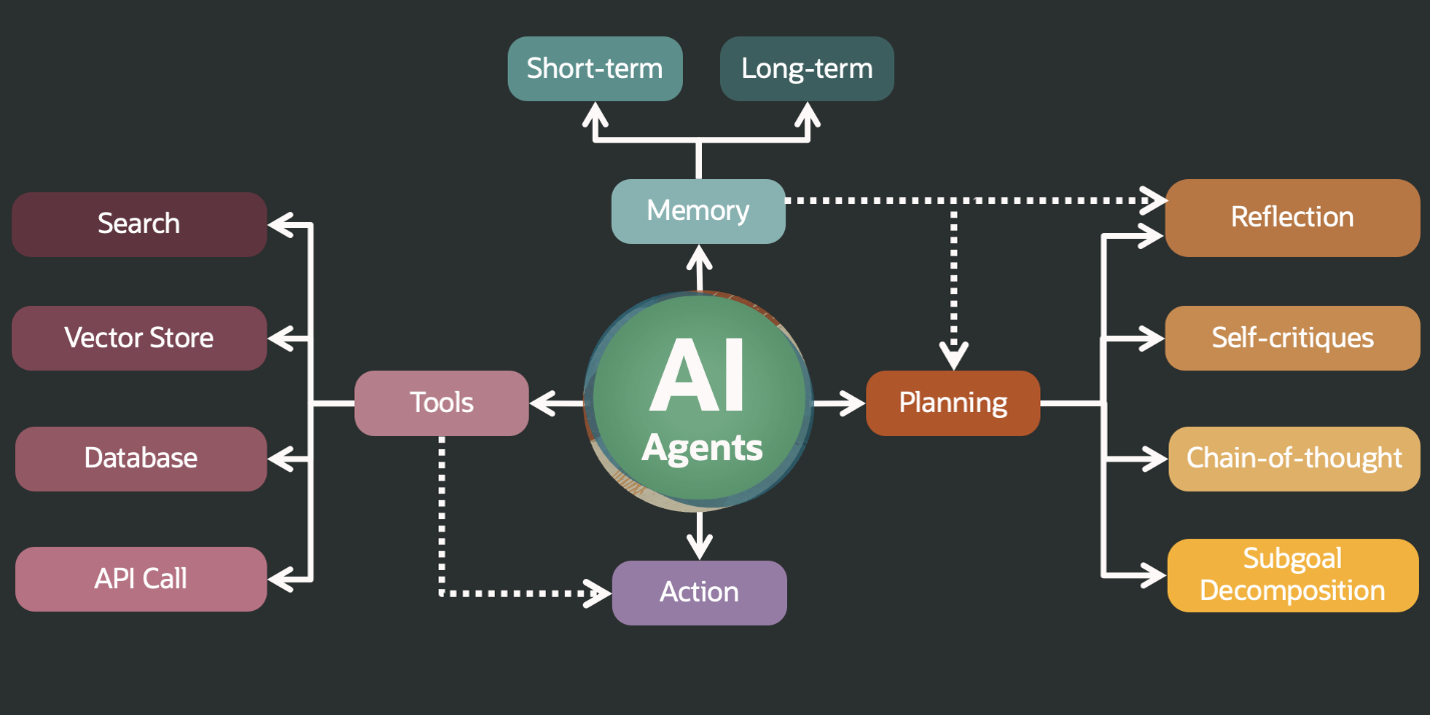

Agents are composed of three building blocks:

- Planning – The ability to make stepwise decisions based on prompt instructions, conversation history, and external context. This includes techniques like reflection, self-critique, chain-of-thought, and sub-goal decomposition.

- Tool Use – Enables integration with external tools, APIs, and functions to perform actions. At each step, the agent typically interacts with a tool such as a search engine, vector store, or database, or makes an API call.

- Memory – Brings contextual awareness across multiple interactions, which can be short-term (e.g., retaining the latest user inputs within a session) or long-term (e.g., remembering user preferences or past tasks across sessions).
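The three building blocks can be illustrated with a minimal Python sketch. Everything here is hypothetical: `plan` is a hard-coded stand-in for an LLM planner, and `TOOLS`, `Memory`, and `run_agent` are illustrative names rather than part of any OCI API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical tools: plain functions standing in for a search engine or API call.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_policy": lambda q: "Refunds are processed within 5 business days.",
    "echo": lambda q: q,
}

@dataclass
class Memory:
    short_term: List[str] = field(default_factory=list)      # turns in the current session
    long_term: Dict[str, str] = field(default_factory=dict)  # facts kept across sessions

def plan(request: str, memory: Memory) -> List[str]:
    """Stand-in for LLM planning: map the request to an ordered list of tool names.
    A real planner would also consult the memory; this toy version ignores it."""
    if "refund" in request.lower():
        return ["lookup_policy"]
    return ["echo"]

def run_agent(request: str, memory: Memory) -> str:
    memory.short_term.append(f"user: {request}")
    # Tool use: execute each planned step and collect the observations.
    observations = [TOOLS[name](request) for name in plan(request, memory)]
    answer = " ".join(observations)
    memory.short_term.append(f"agent: {answer}")
    return answer
```

Calling `run_agent("How long does a refund take?", Memory())` routes the request to the `lookup_policy` tool and records both turns in short-term memory.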

Figure 2: Core components of an Agent.

AI Agent Vs. Automation Workflow

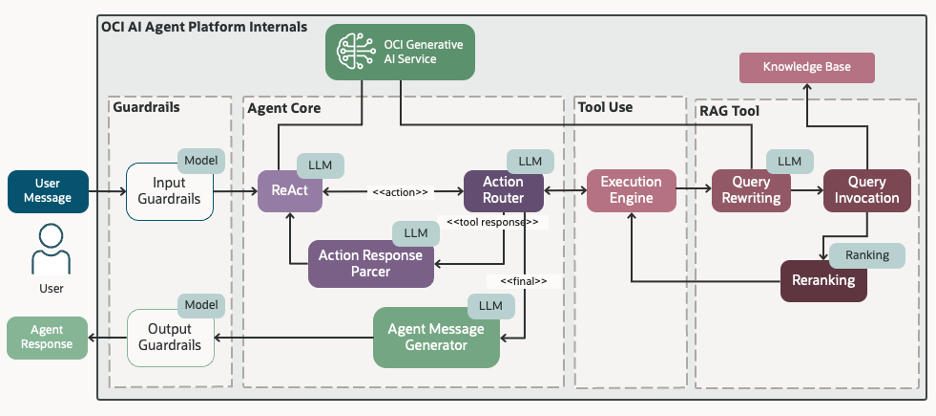

From a technical lens, an agent is a pipeline of multiple LLM calls, each performing a discrete step: parsing intent, selecting tools, retrieving data, evaluating results, and responding. Architecturally, this makes agents powerful yet modular, and well suited to enterprise apps.

Unlike traditional static Robotic Process Automation (RPA) and rigid workflow automations, agents are designed to be dynamic and adaptive, capable of responding to changing conditions and varied inputs in real time. This flexibility can make agentic technology broadly applicable across domains while reducing the need for extensive upfront configuration or integration. As a result, organizations can deploy intelligent solutions faster and with greater resilience to operational variability.

Figure 3: Utilization of LLMs throughout agent execution with RAG tool involved.

Agentic AI pipelines can contain multiple agents and sub-processes that are invoked dynamically to achieve a desired result or objective. These can include fully autonomous agents as well as agents that keep a human in the loop.

From Agent Definition to Deployment: An End-to-End Framework

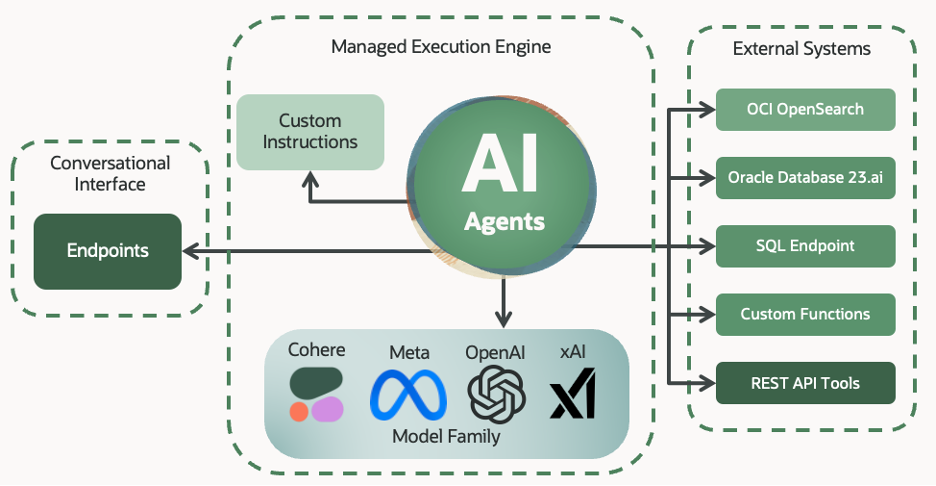

OCI AI Agent Platform provides a seamless, fully managed environment for building and deploying AI agents. This platform abstracts the complexity of infrastructure and orchestration, empowering developers to focus on design and logic.

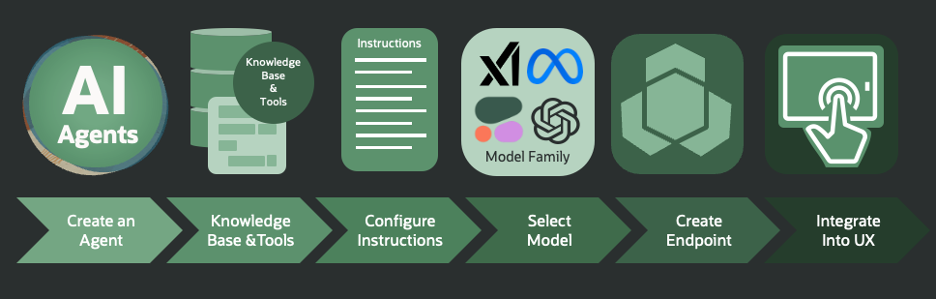

When provisioning a new agent using the OCI AI Agent Platform, users are given a rich and modular design framework to help configure every aspect of their agent’s capabilities. Typically, this configuration process begins with four primary dimensions that shape the agent’s behavior, intelligence, and integration potential: (a) defining the knowledge base, (b) configuring the endpoint, (c) enabling tool use, and (d) selecting the model.

Figure 4: Key capabilities of an agent within OCI AI Agent Platform.

The first step is to define the Knowledge Bases (KBs) that the agent can access. These are foundational to how agents interact with enterprise knowledge. A knowledge base might consist of unstructured data such as internal documentation, help center articles, or onboarding guides, or structured datasets like tabular financial reports. A key example is Oracle Database 23ai, which can serve as a semantic-aware knowledge source combining traditional SQL structures with vector-enhanced search capabilities. By indexing documents and metadata as searchable embeddings, developers enable agents to give more relevant, grounded answers drawn from large information repositories.
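To make the idea of embedding-based retrieval concrete, here is a toy Python sketch. It is an illustration only: `embed` uses simple word counts in place of a learned embedding model, the documents are invented, and nothing here calls Oracle Database 23ai or any OCI API.

```python
import math
from collections import Counter
from typing import List

def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real system would use a learned model)."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Hypothetical knowledge base, indexed once up front.
DOCS = [
    "Onboarding guide: new hires receive a laptop on day one",
    "Expense policy: submit reimbursement claims within 30 days",
    "Quarterly financial report: revenue by product line",
]
INDEX = [(doc, embed(doc)) for doc in DOCS]

def retrieve(query: str, k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]
```

A query such as `retrieve("how do I submit reimbursement claims")` ranks the expense policy first because it shares the most terms with the question; a production system would rely on semantic embeddings rather than exact word overlap.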

The second step is configuring the SQL Endpoint, which lets agents interact directly with structured data in relational databases using natural language. This setup supports a variety of use cases such as analytics, reporting, compliance queries, and real-time status checks. An embedded NL2SQL tool can translate user intent into executable SQL queries, with built-in capabilities like query explanation, schema linking, and self-correction. This allows, for instance, a sales agent to answer, “What were our top three revenue-generating products last quarter?” by translating the question into an optimized SQL query against an Oracle database.

The third step is to define Custom Tools tailored to the organization’s specific domain logic. These tools can represent internal microservices, workflow APIs, or even external SaaS endpoints. Developers can expose functionality through securely defined interfaces, typically using OpenAPI specifications, so that agents can trigger actions such as creating a service ticket, scheduling a calendar event, or retrieving data from a proprietary CRM system. Oracle’s platform supports secure, authenticated calls and allows for tool invocation controls based on user roles or agent contexts.
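Role-based tool invocation control can be sketched as a small registry. This is a hypothetical illustration: `Tool`, `REGISTRY`, `invoke`, and the `create_ticket` handler are invented names, and the handler merely stands in for an authenticated call to an internal API.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, FrozenSet

@dataclass(frozen=True)
class Tool:
    name: str
    description: str
    allowed_roles: FrozenSet[str]          # roles permitted to invoke this tool
    handler: Callable[..., Dict[str, Any]]

def create_ticket(summary: str) -> Dict[str, Any]:
    # Stand-in for an authenticated call to an internal ticketing API.
    return {"ticket_id": "T-1", "summary": summary, "status": "open"}

REGISTRY: Dict[str, Tool] = {
    "create_ticket": Tool(
        name="create_ticket",
        description="Open a service ticket",
        allowed_roles=frozenset({"support_agent"}),
        handler=create_ticket,
    )
}

def invoke(tool_name: str, role: str, **kwargs: Any) -> Dict[str, Any]:
    """Run a registered tool only if the caller's role is allowed to use it."""
    tool = REGISTRY.get(tool_name)
    if tool is None:
        raise KeyError(f"unknown tool: {tool_name}")
    if role not in tool.allowed_roles:
        raise PermissionError(f"role {role!r} may not call {tool_name}")
    return tool.handler(**kwargs)
```

Here `invoke("create_ticket", "support_agent", summary="VPN down")` succeeds, while the same call with the role `"guest"` raises `PermissionError` before the handler runs.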

Another essential configuration step involves specifying Instructions: behavioral blueprints that guide an agent’s tone, response strategies, and escalation protocols. Alongside this, agent creators define a Model Selection strategy that aligns with their specific use case. OCI AI Agent Platform supports a range of LLMs, including LLaMA, Cohere, and OpenAI, each offering different trade-offs in accuracy, latency, and cost. Together, these provisioning dimensions allow for rich customization and help ensure that every agent deployed through OCI is purpose-built, enterprise-ready, and subject to tight control over data security.

Once an agent has been provisioned and configured, it can be deployed as a fully managed, production-grade service accessible via a dedicated endpoint. This endpoint acts as the live interface through which end users and client applications can engage with the agent through a browser-based chat interface, an embedded widget in an application, or programmatically through RESTful APIs. The endpoint is intended to handle message routing, maintain conversational context, and enable multi-turn interactions.

For example, a support chatbot provisioned for a finance application might be surfaced through a portal where users can ask questions like, “What’s the status of my latest reimbursement?” The agent processes the input, retrieves the appropriate data, and responds conversationally—all through the secure endpoint provided by OCI.

Figure 5: Agent provisioning process.

Security and Privacy: Built-In, Not Bolted On

Though agentic AI brings powerful and exciting capabilities to the enterprise, security comes first. Enterprises cannot afford to compromise on privacy, compliance, or data integrity. Recognizing this, OCI Generative AI Agents Service is built from the ground up with security at every level of the stack, not as an afterthought. Every layer of the platform, from data ingestion to response generation, incorporates robust controls designed to protect sensitive information, enforce policy boundaries, and foster trust. This security-first design empowers organizations to confidently deploy intelligent agents across even the most regulated environments.

At runtime, agents can be deployed in isolated, secure environments. OCI AI Agent Platform supports dedicated inference infrastructure and strict separation of tenant data—vital for regulated industries like healthcare and finance.

Agent Guardrails

Agents can include guardrails that constrain behavior and enforce organizational norms, with a strong focus on two critical aspects of safety: request understanding and response verification.

On the input side, guardrails can help agents interpret requests more accurately and within safe boundaries. For example, request parsing mechanisms help ensure that user intent is correctly extracted and matched to allowable operations. In the case of a SQL agent, this might mean validating that a natural language question can be safely translated into a SELECT query without unintended consequences.
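An input-side check of this kind might look like the following Python sketch. It is a deliberately naive, keyword-based illustration and not the platform's actual mechanism; `is_safe_select` is a hypothetical name, and a production guardrail would use a real SQL parser together with read-only database credentials.

```python
import re

# Keywords that indicate a statement modifies data or schema.
FORBIDDEN = re.compile(
    r"\b(insert|update|delete|drop|alter|create|truncate|grant|merge)\b",
    re.IGNORECASE,
)

def is_safe_select(sql: str) -> bool:
    """Accept only a single statement that starts with SELECT and has no write keywords.
    Conservative by design: a forbidden keyword inside a string literal is also rejected."""
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:  # more than one statement
        return False
    if not re.match(r"select\b", statement, re.IGNORECASE):
        return False
    return FORBIDDEN.search(statement) is None
```

With this check, `SELECT name FROM products` passes, while `DROP TABLE products` and `SELECT 1; DELETE FROM products` are both rejected.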

On the output side, response verification is essential for helping ensure that what the agent returns is not only relevant, but also compliant and contextually appropriate. In Retrieval-Augmented Generation (RAG) pipelines, this includes checking that cited sources are correctly attributed, that hallucinations are minimized through grounded data references, and that generated responses are validated against expected formats or business logic.

Client-Side Execution

Client-side function invocation is a powerful capability that allows agents to interface with customer-managed APIs while keeping sensitive responsibilities—such as authentication, authorization, and networking—within the client’s tenancy. This approach is particularly valuable for enterprises that need to retain strict control over how and when their internal systems are accessed.

With this setup, the reasoning and decision-making of the agent—everything from parsing user intent to determining which tool to invoke—runs entirely on Oracle’s backend. Once a specific function is selected by the agent’s reasoning engine, the invocation is securely delegated to the client’s environment for execution.

Clients can access a tool or function chosen by an external agent using their own credentials, endpoints, or firewall rules, without exposing those resources to the agent. The client maintains ownership of how the API is called, which identity is used, and what constraints are enforced. This separation of concerns helps address security and compliance boundaries while still enabling seamless orchestration of external actions.
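The division of responsibilities can be sketched in Python as follows. This is a simplified illustration under stated assumptions: `agent_decide` stands in for the agent's reasoning on the service side, and the JSON action format, `client_execute`, and `get_reimbursement_status` are hypothetical, not the platform's actual protocol.

```python
import json

# --- Agent side (hypothetical): decides only WHICH function to call. ---
def agent_decide(user_message: str) -> str:
    """Return a JSON action request; no credentials or endpoints are involved."""
    return json.dumps({
        "function": "get_reimbursement_status",
        "arguments": {"employee_id": "E42"},
    })

# --- Client side: owns credentials, endpoints, and network rules. ---
CLIENT_SECRET = "kept-inside-client-tenancy"  # never sent to the agent

def get_reimbursement_status(employee_id: str) -> dict:
    # Stand-in for an internal API call authenticated with CLIENT_SECRET.
    return {"employee_id": employee_id, "status": "approved"}

LOCAL_FUNCTIONS = {"get_reimbursement_status": get_reimbursement_status}

def client_execute(action_json: str) -> str:
    """Execute the requested function locally and return only its result."""
    action = json.loads(action_json)
    func = LOCAL_FUNCTIONS[action["function"]]  # KeyError for unknown functions
    result = func(**action["arguments"])
    return json.dumps(result)
```

The agent side sees only the function name, its arguments, and the returned result; the secret and the internal endpoint never leave the client's code.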

Hallucination Prevention

In OCI AI Agent Platform, hallucination prevention is primarily aimed at helping ensure that agent responses are not only relevant but also verifiable and grounded in accurate data. Oracle takes a multi-layered approach to this challenge, embedding safeguards throughout the toolchain and execution lifecycle to help reduce speculation and enforce response integrity.

In the context of SQL-based interactions, grounding the agent’s behavior in strict schema definitions helps to mitigate hallucinations. Linking SQL generation to a well-defined schema that specifies table names, column types, and permissible operations helps to prevent agents from generating invalid or speculative queries. Furthermore, a self-correction mechanism is designed to allow the agent to detect errors in SQL execution (e.g., syntax issues or missing references) and revise its query accordingly, without user intervention. This can add a critical layer of resilience and reliability when agents interact with structured data.
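Execution-aware self-correction can be sketched with Python's built-in `sqlite3` module. This is an illustration only: `generate_sql` is a hard-coded stand-in for an LLM that gets a column name wrong on the first attempt, and the table, data, and function names are invented.

```python
import sqlite3
from typing import List, Optional, Tuple

def generate_sql(question: str, error: Optional[str] = None) -> str:
    """Stand-in for an LLM: the first draft uses a wrong column; the retry fixes it."""
    if error is None:
        return "SELECT name, sales FROM products ORDER BY sales DESC LIMIT 3"
    return "SELECT name, revenue FROM products ORDER BY revenue DESC LIMIT 3"

def answer(question: str, conn: sqlite3.Connection, max_attempts: int = 2) -> List[Tuple]:
    error = None
    for _ in range(max_attempts):
        sql = generate_sql(question, error)
        try:
            return conn.execute(sql).fetchall()
        except sqlite3.OperationalError as exc:
            error = str(exc)  # feed the database error back to the generator
    raise RuntimeError(f"could not produce a valid query: {error}")

# Hypothetical in-memory table to run the sketch against.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?)",
    [("A", 10.0), ("B", 30.0), ("C", 20.0), ("D", 5.0)],
)
```

The first generated query fails because the `sales` column does not exist; the error message is passed back to the generator, and the second attempt succeeds without user intervention.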

In scenarios involving RAG, hallucination prevention can be better achieved through a combination of post-verification and citation checking. Once a draft response is generated using retrieved documents, the system verifies that the information presented matches what exists in the source material. Each fact or claim made by the agent can be traced back to a specific paragraph or sentence, with inline citations included to support transparency. This not only helps validate the content but also helps to build trust with end users.
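A very simple form of citation checking is sketched below in Python. It assumes, purely for illustration, that the draft marks each claim as a quoted span followed by a source id in square brackets, and it verifies claims by exact substring match; a real verifier would more likely use semantic comparison. `SOURCES` and `verify_citations` are hypothetical names.

```python
import re
from typing import List

# Hypothetical retrieved passages, keyed by source id.
SOURCES = {
    "doc1": "The annual deductible for the Standard plan is $500.",
    "doc2": "Claims must be filed within 90 days of service.",
}

def verify_citations(draft: str) -> List[str]:
    """Return the quoted claims that cannot be found in their cited source."""
    problems = []
    # Each claim is expected in the form: "<claim text>" [source_id]
    for claim, doc_id in re.findall(r'"([^"]+)"\s*\[(\w+)\]', draft):
        source = SOURCES.get(doc_id, "")
        if claim.lower() not in source.lower():
            problems.append(claim)
    return problems
```

A draft that quotes `"annual deductible for the Standard plan is $500" [doc1]` passes with no problems, whereas `"deductible is $250" [doc1]` is flagged because that text does not appear in the cited passage.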

For custom tools and APIs, the agent can be restricted to defined functions and expected outputs, reducing the possibility of generating speculative or inaccurate information. Since these tools return structured responses (e.g., JSON payloads), the agent’s generative layer can be instructed to stay within the bounds of known parameters and verified outputs.
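One way to keep the generative layer within known parameters is to validate each tool response before it is used. The Python sketch below is a minimal illustration; the field names, allowed values, and `validate_tool_output` are assumptions made for the example.

```python
import json

# Hypothetical contract for a ticketing tool's JSON response.
EXPECTED_FIELDS = {"ticket_id": str, "status": str}
ALLOWED_STATUS = {"open", "closed"}

def validate_tool_output(payload: str) -> dict:
    """Parse a tool response and keep only the expected, well-typed fields."""
    data = json.loads(payload)
    for name, expected_type in EXPECTED_FIELDS.items():
        if not isinstance(data.get(name), expected_type):
            raise ValueError(f"field {name!r} missing or not {expected_type.__name__}")
    if data["status"] not in ALLOWED_STATUS:
        raise ValueError(f"unexpected status: {data['status']!r}")
    return {name: data[name] for name in EXPECTED_FIELDS}  # drop unknown fields
```

Only the validated fields are then passed to response generation, so the agent has no unverified values to elaborate on.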

Collectively, these techniques—schema-constrained generation, execution-aware self-correction, citation-based verification, and deterministic tool responses—help form a comprehensive framework for reducing hallucinations and providing high-quality agent output.

Oracle Ecosystem Integration

OCI AI Agent Platform serves as both a bridge and a foundation within the Oracle ecosystem. It deeply integrates with Oracle’s native technologies—leveraging advanced capabilities of Oracle Database such as vector search for semantic retrieval and native SQL engines for structured queries. And it acts as a foundational layer upon which broader Oracle applications and services are built. Applications like Oracle Fusion, NetSuite, and Database 23ai embed intelligent agents natively into their workflows, enabling personalized, context-aware, and proactive user experiences.

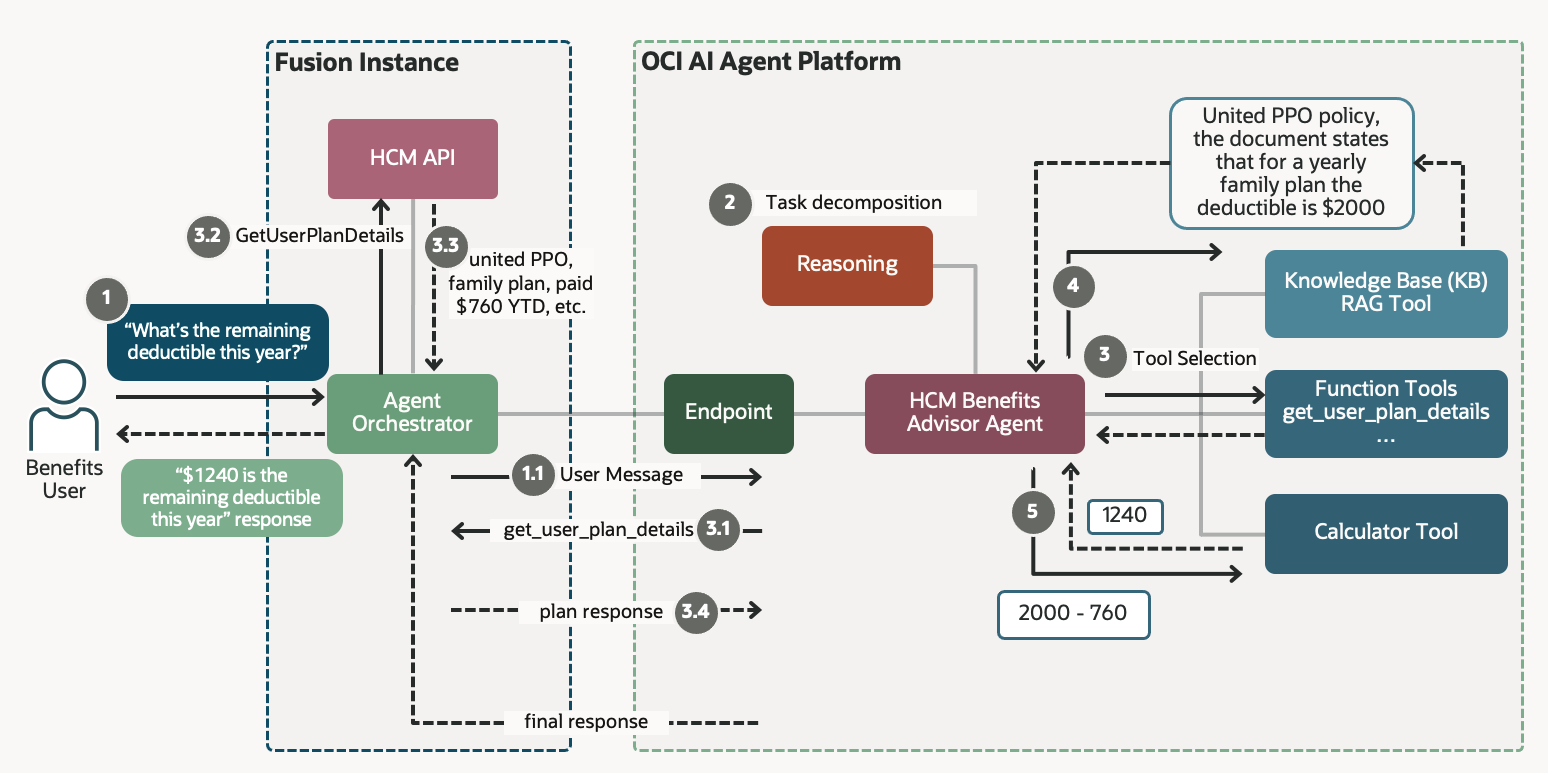

Consider an example. Here is what happens behind the scenes when an employee asks about their remaining deductible for the current year:

Figure 6: Fusion HCM Benefits Advisor Agent powered by OCI AI Agent Platform.

This flow demonstrates the seamless integration and clear separation of responsibilities between OCI AI Agent Platform and the consuming application. It emphasizes how security measures can be upheld when interacting with sensitive system data. The process unfolds as follows:

- An employee initiates an interaction by submitting a request.

- The agent service analyzes the request and decomposes it into a series of subtasks to be executed sequentially.

- The first subtask involves identifying the appropriate function needed to retrieve relevant details.

- Execution of the corresponding API call is delegated to the orchestrator within the Fusion environment. This allows for all calls to be properly authorized and helps eliminate the need to expose HCM APIs directly to the Agents service.

- The agent then retrieves benefit plan details from the appropriate knowledge base.

- Finally, a calculator tool is invoked to compute the result, which is passed through a response generation pipeline and delivered back to the user.
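The steps above can be sketched in Python as a small pipeline. All names and values are hypothetical: `fusion_orchestrator` stands in for the orchestrator inside the Fusion environment, `retrieve_plan_details` for the knowledge base lookup, and the dollar amounts are invented for the example.

```python
def fusion_orchestrator(function: str, employee_id: str) -> dict:
    """Stand-in for the Fusion-side orchestrator, which would authorize
    and execute the HCM API call on the agent's behalf."""
    if function != "get_claims_paid":
        raise ValueError(f"unsupported function: {function}")
    return {"employee_id": employee_id, "paid_toward_deductible": 320.0}

def retrieve_plan_details(plan: str) -> dict:
    """Stand-in for a knowledge base lookup of benefit plan details."""
    return {"plan": plan, "annual_deductible": 500.0}

def calculator(deductible: float, paid: float) -> float:
    """Remaining deductible, never below zero."""
    return max(deductible - paid, 0.0)

def remaining_deductible(employee_id: str, plan: str) -> str:
    # Subtask 1: delegate the API call to the orchestrator.
    paid = fusion_orchestrator("get_claims_paid", employee_id)["paid_toward_deductible"]
    # Subtask 2: retrieve plan details from the knowledge base.
    plan_info = retrieve_plan_details(plan)
    # Subtask 3: compute the result and generate the response.
    remaining = calculator(plan_info["annual_deductible"], paid)
    return f"Your remaining deductible for this year is ${remaining:.2f}."
```

In this sketch the agent-side function never touches HCM credentials; it only names the function to call and combines the returned values.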

This entire interaction is abstracted away from the end user, and the architecture supports a wide range of tool and knowledge base combinations across the Fusion application landscape.

OCI AI Agent Platform powers a wide array of Oracle applications by embedding intelligent agents directly into workflows, enabling natural language interactions and proactive assistance. Fusion AI Studio allows developers to configure agents visually within Fusion apps; Database Select AI translates plain English into SQL queries; NetSuite and Ask Oracle offer conversational finance and operations insights; OCI Support uses Retrieval-Augmented Generation (RAG) and APIs to automate issue resolution; the Smart Contact Center enhances live support with real-time AI guidance; and Oracle Code Assist can help streamline developer productivity through intelligent code suggestions. Together, these capabilities can be leveraged to bring secure, scalable, and context-aware intelligence to every layer of Oracle’s ecosystem.

These capabilities form a comprehensive foundation for embedding agentic intelligence across the Oracle application landscape. Whether assisting an HR manager in Fusion, guiding a financial analyst in NetSuite, helping a support engineer through the Smart Contact Center, or accelerating code delivery in a developer’s IDE, OCI AI Agent Platform can provide consistent, reliable, and secure integration of intelligent agent flows into business-critical environments.

The agentic AI landscape is evolving rapidly, with new paradigms, tooling methods, and orchestration strategies emerging almost monthly. In the future, the agentic AI industry has the potential to support custom hardware and proprietary LLMs, incorporate emerging agentic patterns such as Deep Research, and integrate multi-agent topologies.

Key Takeaways

- OCI AI Agent Platform enables enterprises to leverage Gen AI to build intelligent, secure, and context-aware agents.

- Agents are provisioned using declarative configurations for knowledge bases, SQL endpoints, custom tools, and behavioral instructions.

- Security measures are built-in across the framework. Agent guardrails and hallucination prevention are core design principles, enforced through schema constraints, post-verification, and citation-based validation.

- OCI AI Agent Platform comes with native integration with Oracle technologies such as Database 23ai and can form the foundational building block for Oracle applications, such as Fusion and NetSuite, designed to deliver seamless, in-context automation.
