As organizations accelerate their AI and machine learning efforts, scalable, reproducible, and governed machine learning operations (MLOps) have become a business-critical concern. Oracle Cloud Infrastructure (OCI) offers the compute, storage, and network performance to support ML workloads, but many teams also need an orchestration and automation layer to bring models into production efficiently.

To meet this need, OCI is proud to announce the integration of the Valohai MLOps platform, now available natively on OCI. Valohai offers an end-to-end solution for building, managing, and scaling ML workflows—enabling OCI customers to go from experimentation to production faster, with full governance and auditability.

Our first customers, from the medical and life sciences sector, are already using the integration to run regulated, production-grade ML workflows on OCI. Their names can’t be disclosed yet, but this early adoption in a regulated industry underscores the platform’s enterprise readiness.

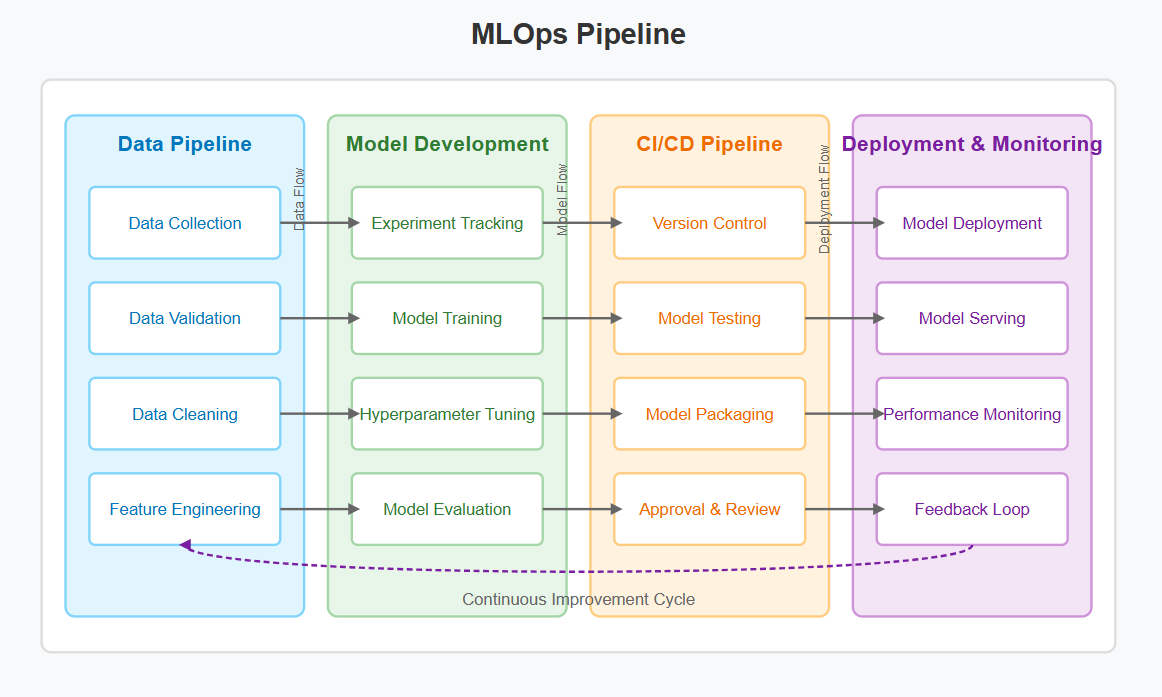

A Complete MLOps Layer on OCI

Valohai brings a robust set of machine learning operations capabilities to OCI, helping customers simplify and standardize the ML lifecycle. Key features include:

- Workflow orchestration and automation for training, testing, and deployment

- Immutable versioning for data, parameters, code, and outputs

- Scalable job execution on OCI compute, including GPUs

- Secure artifact and data handling via OCI Object Storage

- Seamless integration with OCI IAM and IDCS for enterprise-grade security

- Built-in audit trails to support compliance and traceability

These features allow ML teams to maintain full control and visibility across their ML pipelines—reducing manual work, infrastructure complexity, and operational risk.
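
To make the idea of immutable versioning concrete, here is a minimal Python sketch of how a run could be identified by a fingerprint of its code, parameters, and input data. This is an illustration only and not Valohai's actual implementation; the function names `execution_fingerprint` and `file_digest` are hypothetical.

```python
import hashlib
import json


def file_digest(data: bytes) -> str:
    """Return the SHA-256 hex digest of a blob of input data."""
    return hashlib.sha256(data).hexdigest()


def execution_fingerprint(commit: str, parameters: dict, input_digests: dict) -> str:
    """Return a stable fingerprint for one run (hypothetical helper).

    The fingerprint covers the code version, the parameters, and the
    digests of the input data, so changing any of them yields a new ID.
    """
    record = {
        "commit": commit,
        "parameters": parameters,
        "inputs": input_digests,
    }
    # sort_keys and fixed separators give a canonical JSON form,
    # so dictionary ordering does not affect the hash.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```

Two runs with the same commit, parameters, and input digests produce the same fingerprint, while changing a single parameter produces a different one. That property is what makes a run reproducible and auditable after the fact.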

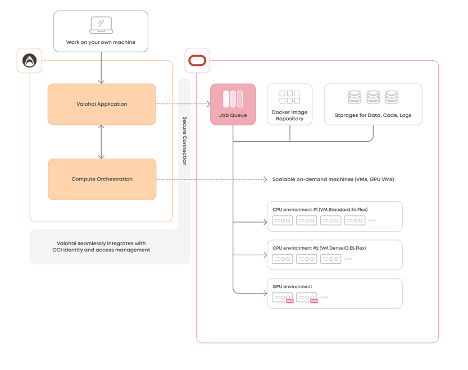

📊 OCI + Valohai Integration Architecture

A diagram illustrating how Valohai connects to OCI services, including Compute, Object Storage, and IAM, alongside the data and control flow from notebooks, the API, and CLI tools.

Streamlining the ML Lifecycle

The integration allows customers to use Valohai as a control layer, orchestrating ML workflows directly on OCI infrastructure. A typical pipeline includes:

- Code commit or workflow definition using Valohai’s UI, CLI, or API

- Automatic provisioning and execution of training jobs on OCI compute

- Management of input data and output artifacts via OCI Object Storage

- Full traceability of logs, metrics, and models for every experiment

- Governance through secure, versioned, and auditable runs
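
As a rough sketch of what a step in such a pipeline might look like from the script's point of view: the job reads its input files from a directory the platform has populated, writes artifacts to an output directory that the platform uploads, and prints metrics as JSON lines so they can be collected. The toy "model" (a mean of numbers) and the function name `run_step` are hypothetical. The `VH_INPUTS_DIR` and `VH_OUTPUTS_DIR` environment variables and the JSON-on-stdout convention mentioned below are assumptions to verify against Valohai's documentation.

```python
import json
from pathlib import Path


def run_step(inputs_dir: Path, outputs_dir: Path) -> dict:
    """Toy training step: read numbers from input files and store their mean."""
    values = []
    # Input data is assumed to arrive as text files under inputs_dir.
    for path in sorted(inputs_dir.rglob("*.txt")):
        values.extend(float(token) for token in path.read_text().split())
    if not values:
        raise ValueError(f"no input values found under {inputs_dir}")

    mean = sum(values) / len(values)

    # Anything written to outputs_dir is treated as an output artifact.
    outputs_dir.mkdir(parents=True, exist_ok=True)
    (outputs_dir / "model.json").write_text(json.dumps({"mean": mean}))

    # One JSON object per line on stdout, so metrics can be parsed by the platform.
    metrics = {"samples": len(values), "mean": mean}
    print(json.dumps(metrics))
    return metrics
```

Inside a job, the step would be called with the platform-provided directories, for example `run_step(Path(os.environ["VH_INPUTS_DIR"]), Path(os.environ["VH_OUTPUTS_DIR"]))`, assuming those variables are set by the execution environment. Locally, any two directories work, which keeps the script testable outside the platform.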

Valohai operates within the customer’s cloud tenancy, leveraging OCI-native security, networking, and identity management features.

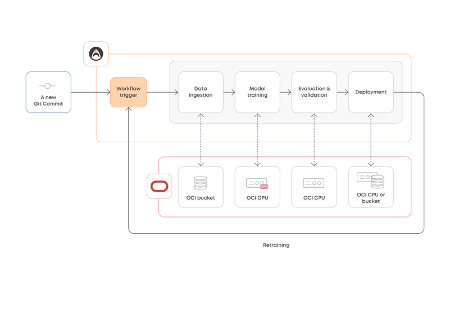

🔁 ML Workflow in Action

A visual walkthrough of an end-to-end ML workflow on OCI powered by Valohai, including data ingestion, model training, evaluation, and deployment.

Built for Hybrid and Multicloud

Many Oracle customers operate in complex environments spanning cloud and on-premises systems. Valohai’s cloud-agnostic architecture supports hybrid and multicloud workflows natively.

With Valohai on OCI, customers can:

- Run compute-heavy training jobs on OCI while accessing data from other sources

- Maintain cross-cloud flexibility without vendor lock-in

- Extend MLOps practices across different cloud environments consistently

This aligns with Oracle’s vision for open, flexible infrastructure that empowers customers to build AI solutions on their terms.

Real-World Use Cases

Healthcare & Life Sciences

Execute compliant, traceable ML pipelines for clinical research and diagnostics—securely hosted on OCI.

Computer Vision

Scale deep learning workflows using OCI GPUs. Automate reproducibility, comparison, and deployment with Valohai’s orchestration layer.

Enterprise ML Platform Teams

Standardize workflows, reduce DevOps bottlenecks, and enforce governance across business units.

Accelerating Machine Learning at Scale

The integration of Valohai into OCI enables technical leaders to:

- Bring models into production faster

- Establish repeatability, traceability, and compliance

- Reduce DevOps overhead

- Scale workflows confidently using OCI infrastructure

“Valohai brings a purpose-built MLOps framework that complements the strengths of OCI’s AI infrastructure,” said Sanjay Basu, Senior Director, GenAI & GPU Solution Services – Oracle Cloud Engineering.

“With this integration, our customers can now streamline the journey from model development to deployment with full reproducibility, auditability, and scalability—on OCI’s GPU-optimized environment. It’s a critical step forward in enabling enterprise-grade operational AI.”

Get Started

The Valohai integration is available today on Oracle Cloud Infrastructure.

🔗 Explore the solution →

📘 Technical documentation →

Oracle Cloud AI Infrastructure

Contact: