Currently, the NVIDIA GPU cloud image on Oracle Cloud Infrastructure is built using Ubuntu 16.04. I've received multiple questions from developers about how to use GPUs with Docker on Oracle Linux. This post describes how to do just that.

Using Oracle-provided images, you can be up and running in just a few minutes with a few short steps. In this tutorial, I use VM.GPU3.2, but all currently available GPU shapes are compatible with this procedure (VM.GPU2.1, BM.GPU2.2, VM.GPU3.1, VM.GPU3.2, VM.GPU3.4, and BM.GPU3.8).

Launch an Instance

Let’s start by launching an instance.

Enter a name for the instance, and select a compatible shape and availability domain.

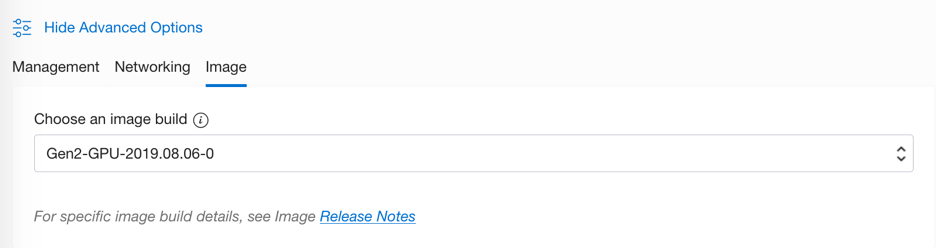

Choose the Oracle Linux 7.6 operating system. In the Advanced Options section, choose the Gen2-GPU build that has NVIDIA drivers preinstalled.

After the instance reaches the RUNNING state, connect to it and validate the driver installation with nvidia-smi:

```
$ nvidia-smi
Tue Aug 27 03:24:05 2019
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 418.67       Driver Version: 418.67       CUDA Version: 10.1     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla V100-SXM2...  Off  | 00000000:00:04.0 Off |                    0 |
| N/A   40C    P0    39W / 300W |      0MiB / 16130MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+
|   1  Tesla V100-SXM2...  Off  | 00000000:00:05.0 Off |                    0 |
| N/A   43C    P0    40W / 300W |      0MiB / 16130MiB |      4%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
```
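If you want to script this check instead of reading the table, nvidia-smi can print selected fields as CSV with `--query-gpu` and `--format=csv,noheader`. The function below is a minimal sketch; its name (`check_gpus`) and the idea of passing in the GPU count you expect for your shape are illustrative assumptions, not part of the original steps.

```bash
# Hypothetical helper: summarize nvidia-smi CSV output read on stdin and
# succeed only if the number of GPUs matches the expected count.
# Each input line has the form "NAME, DRIVER_VERSION".
check_gpus() {
  local expected="$1" csv count driver
  csv=$(cat)
  # One non-empty line per GPU; grep -c prints 0 (and fails) on empty input.
  count=$(printf '%s\n' "$csv" | grep -c . || true)
  # The driver version is the second CSV field; all GPUs share one driver.
  driver=$(printf '%s\n' "$csv" | head -n 1 | awk -F', ' '{ print $2 }')
  echo "found ${count} GPU(s), driver ${driver:-unknown}"
  [ "$count" -eq "$expected" ]
}
```

On the VM.GPU3.2 instance used here, which has two GPUs, you would run `nvidia-smi --query-gpu=name,driver_version --format=csv,noheader | check_gpus 2`.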

Add Docker and NVIDIA Container Toolkit

Add the Docker repository and install docker-ce:

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

sudo yum install docker-ce docker-ce-cli containerd.io
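The `--gpus` flag used later in this tutorial was introduced in Docker 19.03, so it's worth confirming that the installed docker-ce is at least that version. This is a sketch: the helper name `docker_supports_gpus` is hypothetical, and the comparison relies on GNU `sort -V` for version ordering.

```bash
# Hypothetical helper: succeed if a Docker version string is 19.03 or newer,
# the first release with the native --gpus flag.
docker_supports_gpus() {
  local version="$1" minimum="19.03"
  # sort -V orders version strings field by field; if the minimum sorts
  # first (or the two are equal), the given version is new enough.
  [ "$(printf '%s\n' "$minimum" "$version" | sort -V | head -n 1)" = "$minimum" ]
}
```

For example: `docker_supports_gpus "$(rpm -q --qf '%{VERSION}' docker-ce)" && echo "docker-ce supports --gpus"`.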

Configure the nvidia-docker repository, which provides the nvidia-container-toolkit package:

curl -s -L https://nvidia.github.io/nvidia-docker/rhel7.6/nvidia-docker.repo | sudo tee /etc/yum.repos.d/nvidia-docker.repo

In the more recent Oracle Linux 7.7 GPU images, /etc/yum.conf contains an exclude entry for *nvidia* packages. The --disableexcludes=all option lifts the excludes for this one command only, so you can install the required packages:

sudo yum install --disableexcludes=all -y nvidia-container-toolkit
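To see which packages your image excludes, and therefore why `--disableexcludes` is needed, you can print the `exclude=` entries from /etc/yum.conf. A minimal sketch, assuming the entries use the standard `exclude=` syntax; the helper name `yum_excludes` is hypothetical.

```bash
# Hypothetical helper: print the package patterns from exclude= lines in a
# yum configuration file (defaults to /etc/yum.conf).
yum_excludes() {
  local conf="${1:-/etc/yum.conf}"
  # Strip the "exclude=" key (allowing surrounding spaces) and print the rest.
  sed -n 's/^[[:space:]]*exclude[[:space:]]*=[[:space:]]*//p' "$conf"
}
```

Running `yum_excludes` with no argument reads /etc/yum.conf, which is world-readable, so sudo isn't needed.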

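Enable the Docker service so that it starts at boot, restart it, and add your user to the docker group so that you can run docker without sudo:

sudo systemctl enable docker

sudo systemctl restart docker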

sudo usermod -aG docker $USER

Log out and log back in so that the new group membership takes effect.
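Group changes made with usermod apply only to sessions started afterward. To check whether your current session already has the docker group, you can inspect `id -nG`, which, without a user name, reports the groups of the running shell. The helper name `in_group` is hypothetical.

```bash
# Hypothetical helper: succeed if GROUP is among the groups of USER, or of
# the current session when USER is omitted.
in_group() {
  local group="$1" user="${2:-}"
  # id -nG prints space-separated group names; match one whole name per line.
  id -nG ${user:+"$user"} | tr ' ' '\n' | grep -qx -- "$group"
}
```

For example, `in_group docker || echo "log out and back in first"`.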

After reconnecting, try running nvidia-smi in the CUDA container:

```
$ docker run --gpus all nvidia/cuda:9.0-base nvidia-smi
docker: Error response from daemon: OCI runtime create failed: container_linux.go:345: starting container process caused "process_linux.go:430: container init caused \"write /proc/self/attr/keycreate: permission denied\"": unknown.
ERRO[0005] error waiting for container: context canceled
```

Resolve the Error

This error is caused by SELinux, which denies runc (the low-level container runtime) a permission it needs while starting the container. To resolve it, you have two options:

- Configure SELinux to allow the access that runc needs (recommended)

- Disable SELinux or set it to permissive mode, which is a less secure option

Configure SELinux

Check the audit log to see why runc was denied (reading /var/log/audit/audit.log requires root):

```
$ sudo grep runc /var/log/audit/audit.log | audit2why
type=AVC msg=audit(1563909887.714:945): avc: denied { create } for pid=17499 comm="runc:[2:INIT]" scontext=system_u:system_r:container_runtime_t:s0 tcontext=system_u:object_r:unlabeled_t:s0 tclass=key permissive=0

        Was caused by:
                Missing type enforcement (TE) allow rule.

                You can use audit2allow to generate a loadable module to allow this access.
```
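Each AVC record packs the denied permission, the source and target SELinux contexts, and the object class into one long line. If you need to scan several denials, a small filter can pull out just those fields. This is a sketch built on sed; the helper name `summarize_avc` is hypothetical, and it assumes the field order shown in the record above.

```bash
# Hypothetical helper: for each AVC "denied" record on stdin, print
# "SOURCE_CONTEXT -> TARGET_CONTEXT : CLASS { PERMISSIONS }".
# Lines that are not AVC denials produce no output.
summarize_avc() {
  sed -n 's/.*denied  *{ \([^}]*\) }.*scontext=\([^ ]*\) tcontext=\([^ ]*\) tclass=\([^ ]*\).*/\2 -> \3 : \4 { \1 }/p'
}
```

For example, `sudo grep runc /var/log/audit/audit.log | summarize_avc | sort | uniq -c` counts each distinct denial.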

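Generate an SELinux policy module named nvidia from the runc audit messages. audit2allow -M writes the policy source to nvidia.te and the compiled package to nvidia.pp in the current directory: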

sudo grep runc /var/log/audit/audit.log | audit2allow -M nvidia

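Review the generated nvidia.te file before loading it. Every allow rule in it grants a permission, so it should contain only what you expect; for this error, it should look like the following: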

```
module nvidia 1.0;

require {
    type unlabeled_t;
    type container_runtime_t;
    class key create;
}

#============= container_runtime_t ==============

#!!!! WARNING: 'unlabeled_t' is a base type.
allow container_runtime_t unlabeled_t:key create;
```
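audit2allow -M compiles nvidia.pp for you. If you edit nvidia.te by hand (for example, to remove a rule you don't want), you need to rebuild the package before loading it. The standard SELinux tools for that are checkmodule, which compiles the .te source into a module, and semodule_package, which wraps the module into a .pp file. The wrapper function `build_policy_module` is a hypothetical convenience.

```bash
# Hypothetical helper: rebuild NAME.pp from a hand-edited NAME.te in the
# current directory.
build_policy_module() {
  local name="$1"
  if [ ! -f "${name}.te" ]; then
    echo "build_policy_module: ${name}.te not found" >&2
    return 1
  fi
  # checkmodule compiles the source (-M: MLS/MCS support, -m: loadable module);
  # semodule_package wraps the result into the .pp file that semodule -i loads.
  checkmodule -M -m -o "${name}.mod" "${name}.te" &&
    semodule_package -o "${name}.pp" -m "${name}.mod"
}
```

For example, `build_policy_module nvidia && sudo semodule -i nvidia.pp`.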

Activate the policy:

sudo semodule -i nvidia.pp

Run the container again. This time, it succeeds:

```
[opc@oracle-gpu-1 ~]$ docker run --gpus all nvidia/cuda:9.0-base nvidia-smi
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 418.67       Driver Version: 418.67       CUDA Version: 10.1     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla V100-SXM2...  Off  | 00000000:00:04.0 Off |                    0 |
| N/A   40C    P0    39W / 300W |      0MiB / 16130MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+
|   1  Tesla V100-SXM2...  Off  | 00000000:00:05.0 Off |                    0 |
| N/A   43C    P0    40W / 300W |      0MiB / 16130MiB |      4%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
```
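The policy module stays installed across reboots. To check later whether it is still present, you can search the output of `semodule -l`; to undo this change, remove it with `sudo semodule -r nvidia`. The filter below is a hypothetical sketch that reads the module list on stdin, so it works whether or not your semodule version prints a version column next to each name.

```bash
# Hypothetical helper: succeed if module NAME appears in `semodule -l`
# output read on stdin. Only the first column (the module name) is compared.
module_listed() {
  awk -v name="$1" '$1 == name { found = 1 } END { exit !found }'
}
```

For example, `sudo semodule -l | module_listed nvidia && echo "nvidia module installed"`.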

Disable SELinux or Set It to Permissive Mode

This option is less secure, because SELinux then no longer blocks anything on the host.

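Run the following command to disable SELinux. To use permissive mode instead, replace disabled with permissive in the command: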

sudo sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

Restart the machine:

sudo reboot
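Permissive mode is the gentler variant: SELinux keeps logging denials to the audit log but doesn't block them. `sudo setenforce 0` switches a running system to permissive mode immediately, without a reboot, but only until the next boot; `getenforce` shows the current mode. To keep the setting after a reboot, the SELINUX= line in /etc/selinux/config must say permissive. The helper `set_selinux_mode` is a hypothetical sketch that rewrites that line in whichever file you pass, so you can try it on a copy first.

```bash
# Hypothetical helper: set the SELINUX= mode in an SELinux config file.
# Run it as root against /etc/selinux/config; the change applies at next boot.
set_selinux_mode() {
  local file="$1" mode="$2"
  case "$mode" in
    enforcing|permissive|disabled) ;;
    *) echo "set_selinux_mode: invalid mode '$mode'" >&2; return 1 ;;
  esac
  # Anchor on "SELINUX=" so the SELINUXTYPE= line is left alone.
  sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$file"
}
```

For example, run `sudo setenforce 0` for the current boot, then `set_selinux_mode /etc/selinux/config permissive` from a root shell to keep permissive mode after reboots.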

Wrap It Up

Docker 19.03 deprecates --runtime=nvidia in favor of a new, native --gpus flag, and the latest nvidia-docker release has already adopted it.
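Besides `--gpus all`, the flag accepts a GPU count or a list of specific devices. Docker parses its value as CSV, so a device list containing commas has to be wrapped in an extra pair of double quotes, which is easy to get wrong in scripts. The sketch below uses a hypothetical helper, `gpus_for_devices`, to build that value; the `docker run` lines are shown as comments.

```bash
# Hypothetical helper: build a --gpus value that selects specific GPU indexes.
# The embedded double quotes keep Docker's CSV parser from splitting on commas.
gpus_for_devices() {
  printf '"device=%s"' "$1"
}

# Examples (reusing the CUDA image from earlier in this tutorial):
#   docker run --rm --gpus all nvidia/cuda:9.0-base nvidia-smi    # every GPU
#   docker run --rm --gpus 1 nvidia/cuda:9.0-base nvidia-smi      # any one GPU
#   docker run --rm --gpus "$(gpus_for_devices 0,1)" nvidia/cuda:9.0-base nvidia-smi
```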

You can also pull containers from the NVIDIA GPU Cloud (NGC) registry to run GPU-accelerated machine learning and visualization workloads on Oracle Linux.

Log in to the registry. You need an API key, which you can generate at https://ngc.nvidia.com; when prompted, enter $oauthtoken as the username and the API key as the password:

docker login nvcr.io

docker run --gpus all nvcr.io/nvidia/cuda:9.0-cudnn7-devel-ubuntu16.04 nvidia-smi
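docker login prompts for credentials interactively. For scripts, you can pass the key on standard input with `--password-stdin`, which keeps it off the command line and out of shell history. NGC expects the literal user name `$oauthtoken` with the API key as the password. This is a sketch; the `NGC_API_KEY` variable and the `ngc_login` function are hypothetical names.

```bash
# Hypothetical helper: log in to the NGC registry non-interactively.
# Assumes the API key is exported in the (illustrative) NGC_API_KEY variable.
ngc_login() {
  if [ -z "${NGC_API_KEY:-}" ]; then
    echo "ngc_login: NGC_API_KEY is not set" >&2
    return 1
  fi
  # '$oauthtoken' is single-quoted so the shell passes it literally.
  printf '%s' "$NGC_API_KEY" | docker login nvcr.io --username '$oauthtoken' --password-stdin
}
```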

I hope you enjoyed the tutorial! Now you can build your next containerized application on the fastest cloud with the most powerful Linux distribution. Have fun!