Multicloud strategies let you select the exact services and solutions that best meet the unique requirements of your business. For example, perhaps you want the power of Oracle Autonomous Database while maintaining your existing workloads in Microsoft Azure. Multicloud solutions allow flexibility in service selection and can mitigate the risk of vendor lock-in. However, distributed topologies also make it harder to maintain efficient, centralized monitoring and incident management processes. The last thing you want is to manage a separate security information and event management (SIEM) system for each cloud platform.

If you’re already using Microsoft Azure Sentinel as your primary SIEM tool, you can extend its coverage to systems and applications running in Oracle Cloud Infrastructure (OCI). One approach is to deploy custom data connectors for each virtual machine (VM) and application, plus log forwarder agents for the virtual networking infrastructure. That’s potentially a lot of agent management work.

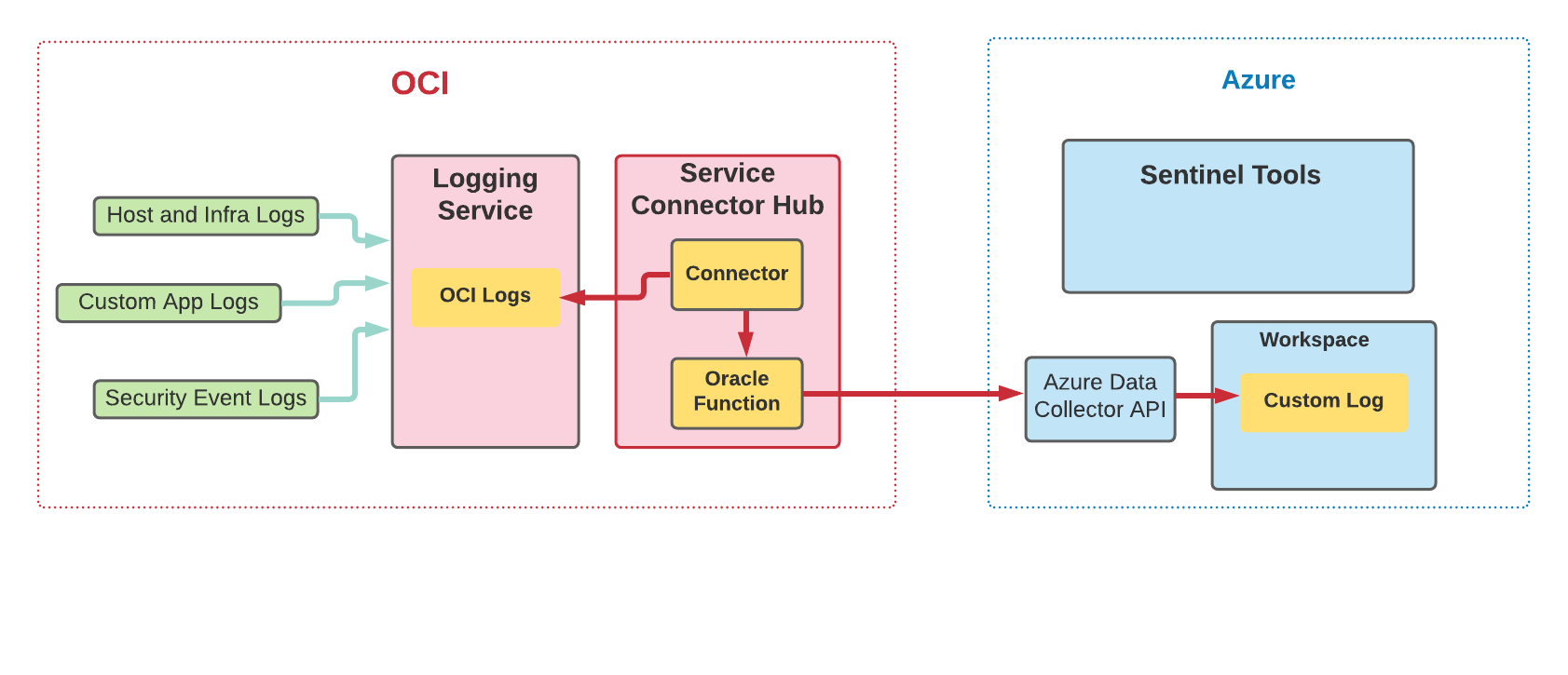

A much better strategy for exporting OCI log data to Azure Sentinel uses the OCI Logging service to transparently handle centralized log ingestion, data staging, and secure network export to Azure. The OCI Logging service is fully managed, robust, scalable, and designed to ingest infrastructure, platform, service, audit, and custom application logs. It allows automatic continuous log data export to Azure Sentinel using the related Service Connector Hub framework. The following image shows the high-level topology of this solution strategy:

This blog presents the Oracle Functions code required to complete the integration.

Create and publish your custom Oracle function

Getting started with Oracle Functions service is easier than you might imagine. The following code example uses Python, but you can easily implement the function in the language platform of your choice. I recommend using the Cloud Shell option for function creation.

You will need to replace (or overwrite) the basic template func.py code file. Let’s add the function code in three simple steps.

Step 1: Add the following required import statements and parameter declarations:

import io

import json

requests

datetime

hashlib

hmac

base64

logging

from fdk import response

# Update the customer ID to your Log Analytics workspace ID

_customer_id = '6107f3a2-1234-abcd-5678-efghijklmno'

# For the shared key, use either the primary or the secondary Connected Sources client authentication key

_shared_key = "K3+1234567890abcdefghijklmnopqrstuvwxyz"

# The log type is the name of the event that is being submitted

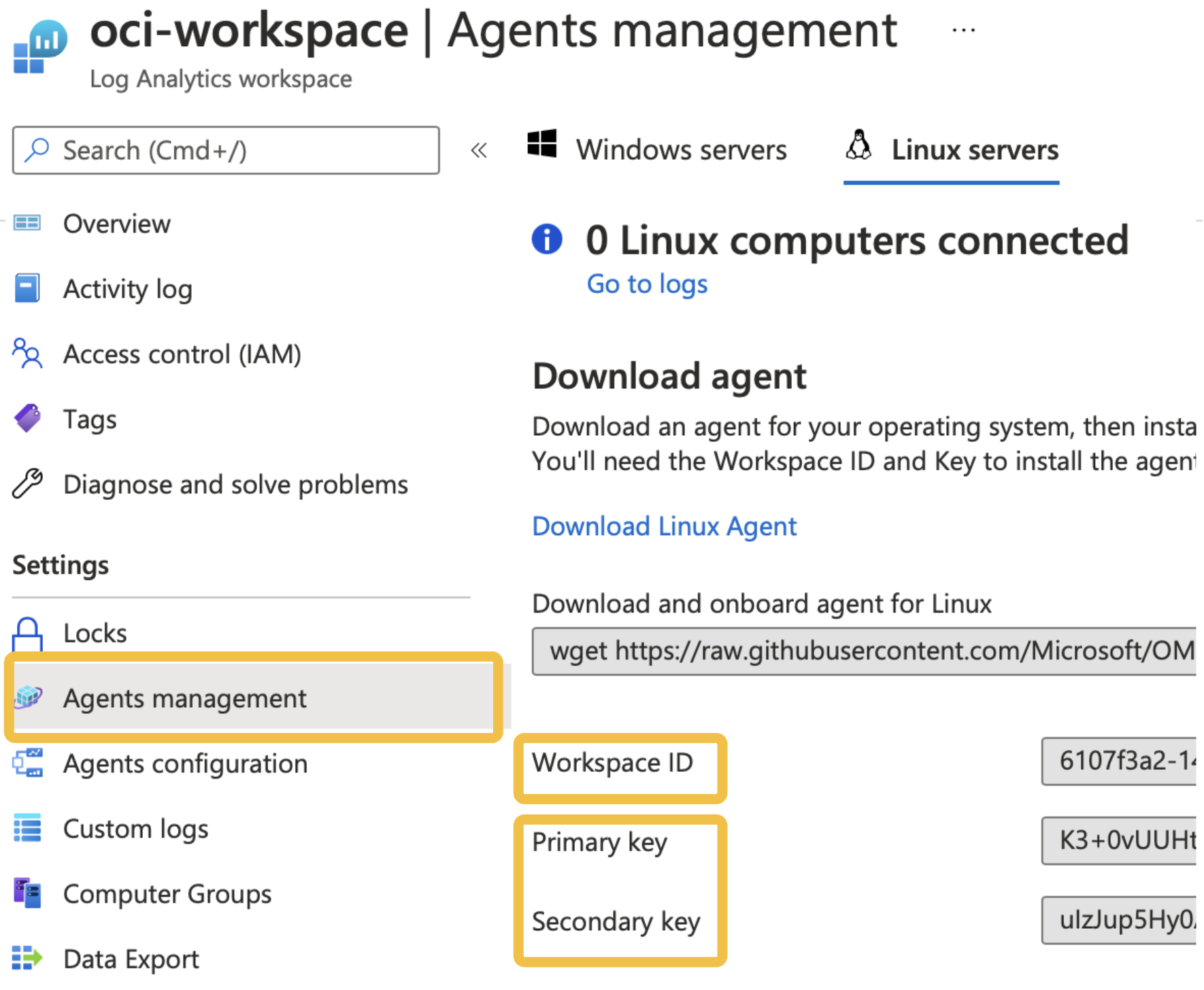

_log_type = 'OCILogging' The parameter values must match your Azure Sentinel workspace environment. You can find the customer ID (workspace ID in the portal) and shared key values in the Azure Portal under Log Analytics workspace, your-workspace-name, and Agents management page. The log type parameter is a friendly name used to define a new (or select an existing) custom log in your Azure Log Analytics workspace and the target location for data uploads.
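Hardcoding the shared key in func.py works, but it places a secret in your source code. A minimal sketch of one alternative, assuming you store these values as configuration parameters on the function (OCI Functions passes configuration parameters to the function as environment variables). The key names LA_WORKSPACE_ID, LA_SHARED_KEY, and LA_LOG_TYPE and the helper load_settings are hypothetical; use whatever names you define.

```python
import os


def load_settings(environ=os.environ):
    """Read Log Analytics settings from the function's configuration.

    Assumes hypothetical configuration keys LA_WORKSPACE_ID, LA_SHARED_KEY,
    and LA_LOG_TYPE. Returns (customer_id, shared_key, log_type).
    """
    # Fail fast if a required value is missing or empty
    missing = [k for k in ("LA_WORKSPACE_ID", "LA_SHARED_KEY") if not environ.get(k)]
    if missing:
        raise RuntimeError("Missing configuration: " + ", ".join(missing))
    return (
        environ["LA_WORKSPACE_ID"],
        environ["LA_SHARED_KEY"],
        # Fall back to the log type used in this post
        environ.get("LA_LOG_TYPE", "OCILogging"),
    )
```

You could then replace the three hardcoded assignments with `_customer_id, _shared_key, _log_type = load_settings()`. Passing the mapping as a parameter keeps the helper easy to test without touching the real environment.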

Step 2: Add the following functions, which do the heavy lifting of signing and sending secure REST calls to the Azure data upload endpoint. You don’t need to customize this code, but Microsoft might update the endpoint or API version syntax in the future.

```python
# Build the API signature
def build_signature(customer_id, shared_key, date, content_length, method, content_type, resource):
    x_headers = 'x-ms-date:' + date
    string_to_hash = method + "\n" + str(content_length) + "\n" + content_type + "\n" + x_headers + "\n" + resource
    bytes_to_hash = bytes(string_to_hash, encoding="utf-8")
    decoded_key = base64.b64decode(shared_key)
    # HMAC-SHA256 over the canonical string, then Base64-encode the digest
    encoded_hash = base64.b64encode(
        hmac.new(decoded_key, bytes_to_hash, digestmod=hashlib.sha256).digest()
    ).decode()
    authorization = "SharedKey {}:{}".format(customer_id, encoded_hash)
    return authorization


# Build and send a request to the POST API
def post_data(customer_id, shared_key, body, log_type, logger):
    method = 'POST'
    content_type = 'application/json'
    resource = '/api/logs'
    rfc1123date = datetime.datetime.now(datetime.timezone.utc).strftime('%a, %d %b %Y %H:%M:%S GMT')
    # body is bytes, so len() gives the byte length the signature requires
    content_length = len(body)
    signature = build_signature(customer_id, shared_key, rfc1123date, content_length, method, content_type, resource)
    uri = 'https://' + customer_id + '.ods.opinsights.azure.com' + resource + '?api-version=2016-04-01'

    headers = {
        'content-type': content_type,
        'Authorization': signature,
        'Log-Type': log_type,
        'x-ms-date': rfc1123date
    }

    # Use a distinct name so the fdk "response" module imported in Step 1 is not shadowed
    resp = requests.post(uri, data=body, headers=headers)
    if 200 <= resp.status_code <= 299:
        logger.info('Upload accepted')
    else:
        logger.error("Error during upload. Response code: {}".format(resp.status_code))
```

Step 3: Add the following code block to your func.py file to define the entry point and framework details. You don’t need to make any changes unless you want to add optional features, such as advanced data parsing, formatting, or custom exception handling.

"""

Entrypoint and initialization

"""

def handler(ctx, data: io.BytesIO= None ):

logger = logging.getLogger()

try :

_log_body = data.getvalue()

post_data(_customer_id, _shared_key, _log_body, _log_type, logger)

except Exception as err:

logger.error( "Error in main process: {}" .format(str(err)))

return response.Response(

ctx,

response_data=json.dumps({ "status" : "Success" }),

headers={ "Content-Type" : "application/json" }

) Deploy this function to the OCI region where your source logs are located. Finally, create a Service Connector defining source log, optional query filters, and specify your function as the connector target. The service connector runs every few minutes and moves batches of log entries to the Azure Log Analytics data upload API.
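A large batch could exceed the Azure data upload API's per-request size limit (30 MB per post at the time of writing; check Microsoft's current documentation). A minimal sketch of one way to guard against that, assuming the request body is a JSON array of log entries: split the array into several smaller JSON array bodies under a byte budget. The helper name chunk_entries and the 25 MB default are hypothetical choices, not part of the original function.

```python
import json

# Hypothetical budget, kept below the API's documented per-post limit
DEFAULT_MAX_BYTES = 25 * 1024 * 1024


def chunk_entries(body, max_bytes=DEFAULT_MAX_BYTES):
    """Split a JSON array body (bytes or str) into JSON array bodies (bytes).

    Each returned body is at most max_bytes long, unless a single entry is
    larger than max_bytes by itself; such an entry is emitted on its own.
    """
    entries = json.loads(body)
    if not isinstance(entries, list):
        # A single JSON object: wrap it as a one-element array
        entries = [entries]

    chunks = []
    current = []
    current_size = 2  # bytes for the enclosing "[" and "]"
    for entry in entries:
        encoded = json.dumps(entry).encode("utf-8")
        # One extra byte for the "," separator when the chunk is not empty
        extra = len(encoded) + (1 if current else 0)
        if current and current_size + extra > max_bytes:
            # Flush the current chunk and start a new one with this entry
            chunks.append(b"[" + b",".join(current) + b"]")
            current = []
            current_size = 2
            extra = len(encoded)
        current.append(encoded)
        current_size += extra
    if current:
        chunks.append(b"[" + b",".join(current) + b"]")
    return chunks
```

In the handler, you could then replace the single upload with `for chunk in chunk_entries(_log_body): post_data(_customer_id, _shared_key, chunk, _log_type, logger)`. Each chunk is signed separately because post_data computes the content length per request.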

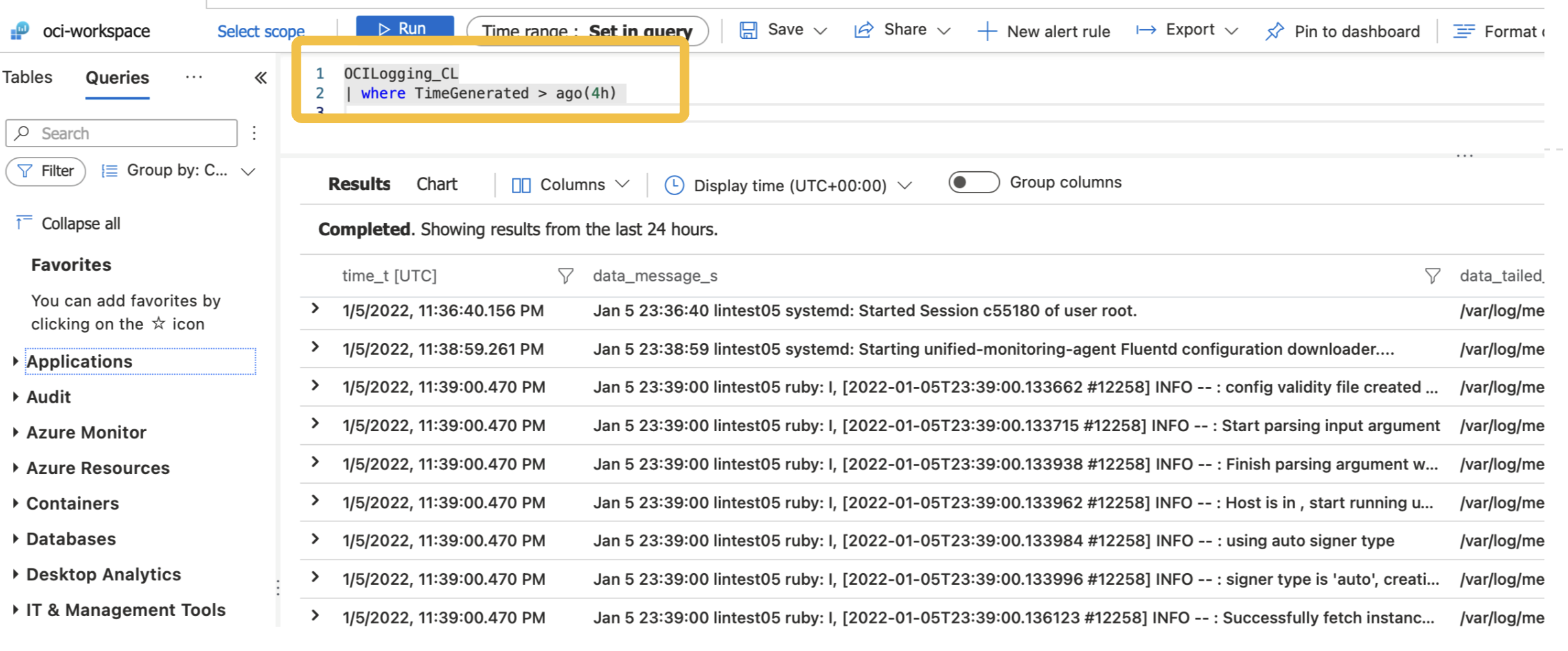

OCI log data is now available in the Azure Log Analytics custom log table named in Step 1 (Log Analytics appends the _CL suffix to custom log names, so OCILogging appears as OCILogging_CL). You can now use this data in all your Sentinel log search, report, and analytics queries.

Summary

The OCI Logging service provides a centralized log management solution for OCI and enables log data export to external SIEM tools such as Microsoft Azure Sentinel. Logging is one of the Always Free cloud services offered on Oracle Cloud Infrastructure. Try the Logging service today!