Oracle Cloud Infrastructure (OCI) Vision Video Analysis is an AI-driven service for analyzing and interpreting video content. It scans stored video files frame by frame to automatically identify labels, detect objects and text, and detect faces within each frame.

Oracle Analytics Cloud (OAC) seamlessly integrates with OCI Vision Video Analysis, allowing users to tap into its pretrained video models directly from OAC. This means you can leverage powerful AI models without needing to build or train them yourself.

In this article, you’ll learn how to work with pretrained video models, specifically how to register and run an Object Detection model using OAC. The article covers these areas:

- Introduction to Pretrained Video Models

  - Label detection

  - Object detection

  - Text detection

  - Face detection

- What You Need Before You Start

  - OCI Connection

  - OCI Policies

  - Staging Bucket

  - Input Dataset

- Hands-On Guide

  - Registering a Pretrained Object Detection Model

  - Invoking the Object Detection Model

Introduction to Pretrained Video Models

OCI Vision Video Analysis offers several pretrained models out of the box, including:

Label Detection

This model identifies and lists all labels (or tags) recognized throughout the video. In addition to the full list, it also highlights which labels were detected at specific timestamps.

Object Detection

All detected objects in the video are captured and listed. For each frame, objects found at specific moments are noted separately. Detected objects are visually marked with bounding boxes to indicate their position within the frame.
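To make the idea of a bounding box concrete, here is a minimal sketch that turns a polygon of vertices into a pixel rectangle. It assumes the vertices are normalized to the 0 to 1 range relative to the frame width and height, so check the format of your own output before relying on it. The function name `to_pixel_box`, the sample vertices, and the 1920 by 1080 frame size are hypothetical and chosen only for illustration.

```python
from typing import List, Tuple


def to_pixel_box(
    vertices: List[Tuple[float, float]], width: int, height: int
) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) in pixels for normalized (x, y) vertices."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return (
        round(min(xs) * width),
        round(min(ys) * height),
        round(max(xs) * width),
        round(max(ys) * height),
    )


if __name__ == "__main__":
    # Hypothetical polygon: four corners of a detected object (assumed normalized).
    corners = [(0.25, 0.5), (0.5, 0.5), (0.5, 0.75), (0.25, 0.75)]
    print(to_pixel_box(corners, 1920, 1080))
```

Taking the minimum and maximum of the vertex coordinates gives the smallest axis-aligned rectangle that encloses the polygon, so the function does not depend on the order in which the vertices are listed.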

Text Detection

Any visible text in the video is detected and compiled into a list. Text that appears at particular times is noted individually. Bounding boxes are drawn around the detected text to show its exact location.

Face Detection

The model detects and catalogs all faces present in the video. It also provides a breakdown of which faces appear at specific times. Each face is highlighted with a bounding box for easy identification in the video frames.

What You Need Before You Start

To successfully use the OCI Vision Video models in OAC, you must make sure a few things are in place:

Create a Connection to Your OCI Tenancy

Your connection stores relevant credentials and connection information used by OAC to access various OCI services such as Functions, Vision, Language, and Document Understanding. See Create a Connection to Your OCI Tenancy.

Set Up OCI Policies

Ensure you have the required security policies in OCI before you register an OCI Vision Video model in OAC.

The OCI user that you specify in the connection between OAC and your OCI tenancy must have read, write, and delete permissions on the compartment containing the OCI resources you want to use. See Policies Required to Integrate OCI Vision with Oracle Analytics.

Create a Staging Bucket

In OCI Object Storage, create a bucket in a compartment using a suitable name. This staging bucket must be available in an accessible compartment before you register the video model in OAC. It can have private visibility and be used for multiple models.

Prepare Input Dataset

Store the videos that you want to analyze in OCI Object Storage buckets. Next, create a dataset in Oracle Analytics to access these videos. In this article, we use a video showing vehicle movement on a highway as input for the pretrained Video Object Detection model.

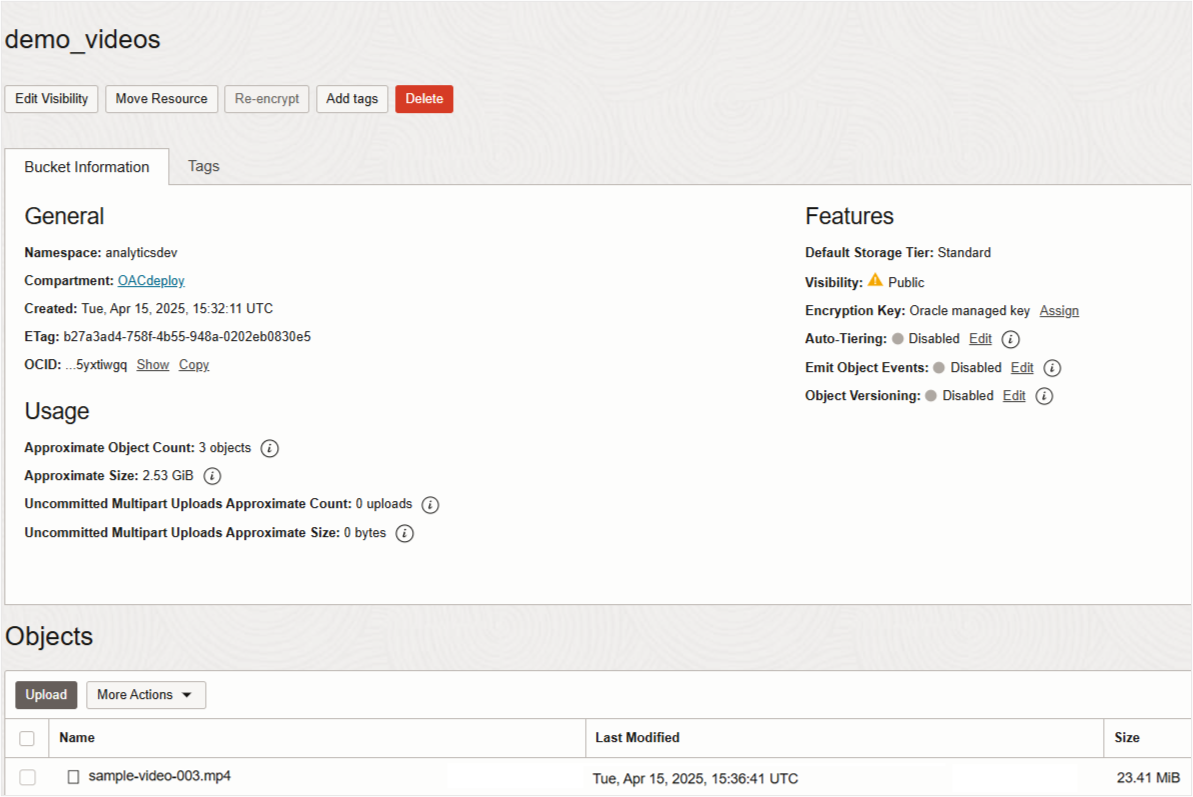

- In OCI Console, navigate to Storage, and then Object Storage & Archive Storage.

- Click Buckets and create a bucket to store your videos.

- Once the bucket is created, click the bucket name.

- Under Objects, click Upload, and upload your video files. See Limits for Video Analysis.

Image 1: OCI Bucket where video file is uploaded.

- Verify that the bucket doesn’t contain extraneous files for processing. Oracle Analytics processes every file in the bucket.

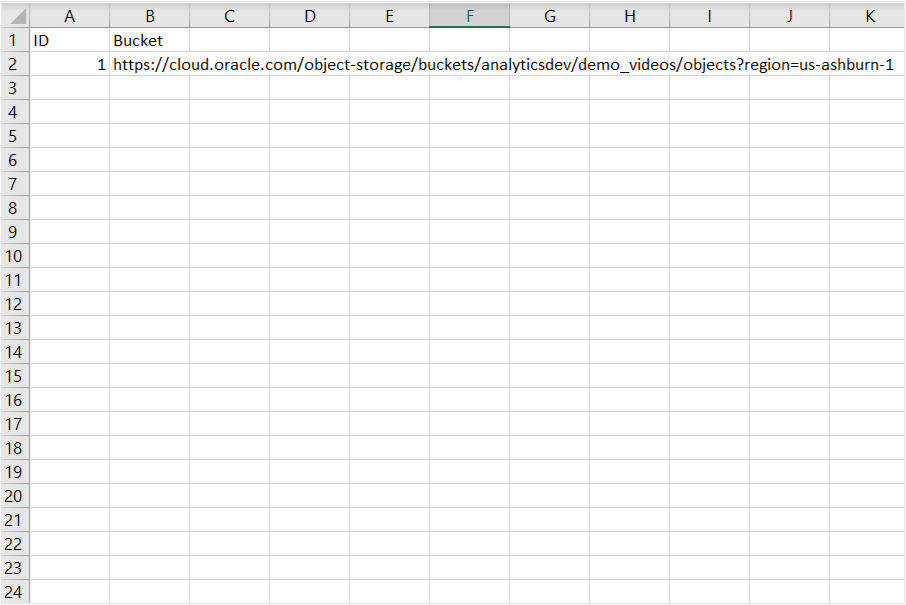

- Prepare an input dataset by creating a CSV file that includes an ID and the Bucket URL where your videos are stored.

Image 2: Sample input dataset with OCI Bucket’s URL

- Upload this CSV as a dataset to your OAC instance, which serves as the input for the data flow to invoke the Vision Video service.
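The input CSV can be created by hand, but a small script helps when you have several buckets. The following is a minimal sketch, not an Oracle-provided utility. The column names `ID` and `Bucket URL`, the region, namespace, and bucket values, and the URL pattern itself are all assumptions for illustration. Copy the real bucket URL from your own tenancy and confirm the expected format in the OAC documentation.

```python
import csv
import io
from typing import List

# Hypothetical values; replace them with details from your own tenancy.
REGION = "us-ashburn-1"
NAMESPACE = "mynamespace"
BUCKETS = ["traffic-videos"]


def bucket_url(region: str, namespace: str, bucket: str) -> str:
    # Assumed URL pattern (Console-style bucket address); verify it for your tenancy.
    return (
        "https://cloud.oracle.com/object-storage/buckets/"
        f"{namespace}/{bucket}/objects?region={region}"
    )


def build_input_csv(region: str, namespace: str, buckets: List[str]) -> str:
    """Return CSV text with one row per bucket: a running ID and the bucket URL."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["ID", "Bucket URL"])
    for row_id, bucket in enumerate(buckets, start=1):
        writer.writerow([row_id, bucket_url(region, namespace, bucket)])
    return buffer.getvalue()


if __name__ == "__main__":
    print(build_input_csv(REGION, NAMESPACE, BUCKETS))
```

Save the printed text as a `.csv` file and upload it to OAC as described in the last step above.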

Hands-On Guide

Register a Pretrained Object Detection Model

Learn how to set up the model within OAC for your specific use case.

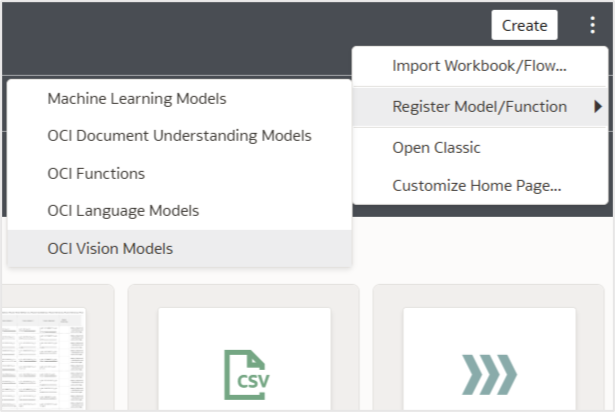

- On your OAC Home page, click the Page menu (ellipsis).

- Select Register Model/Function, and then OCI Vision Models.

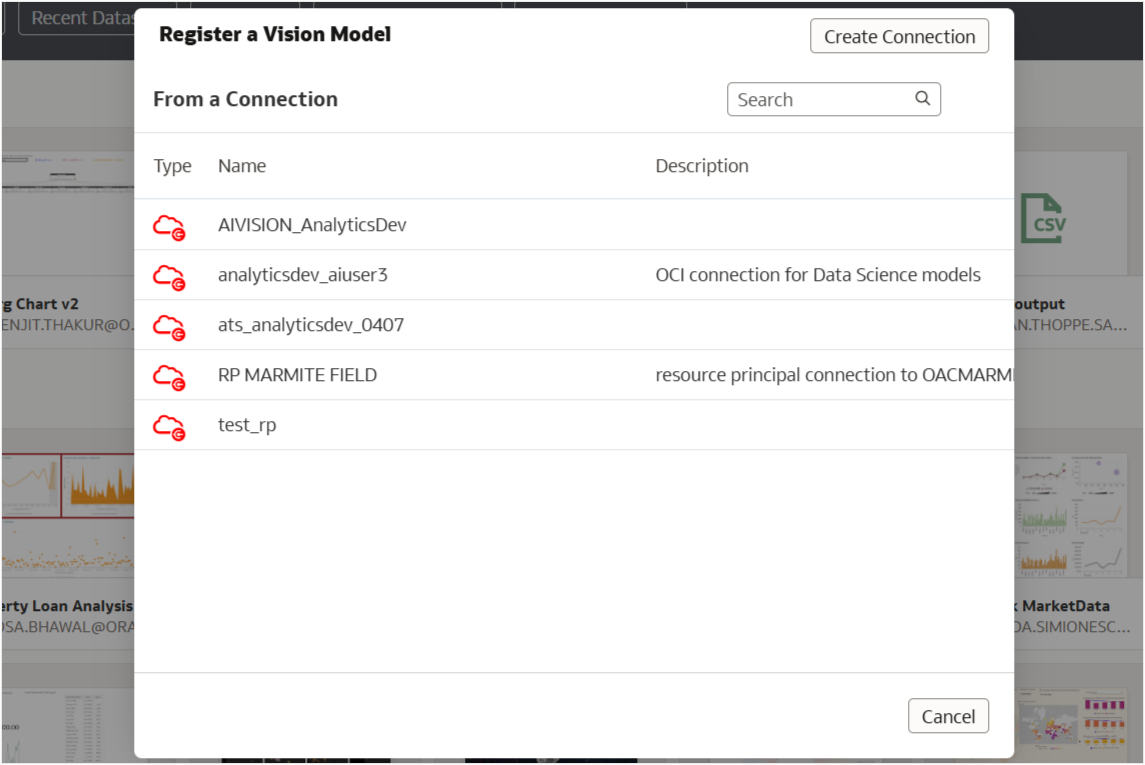

Image 3: OCI Vision Models in the OAC main menu.

- Select the connection you created earlier.

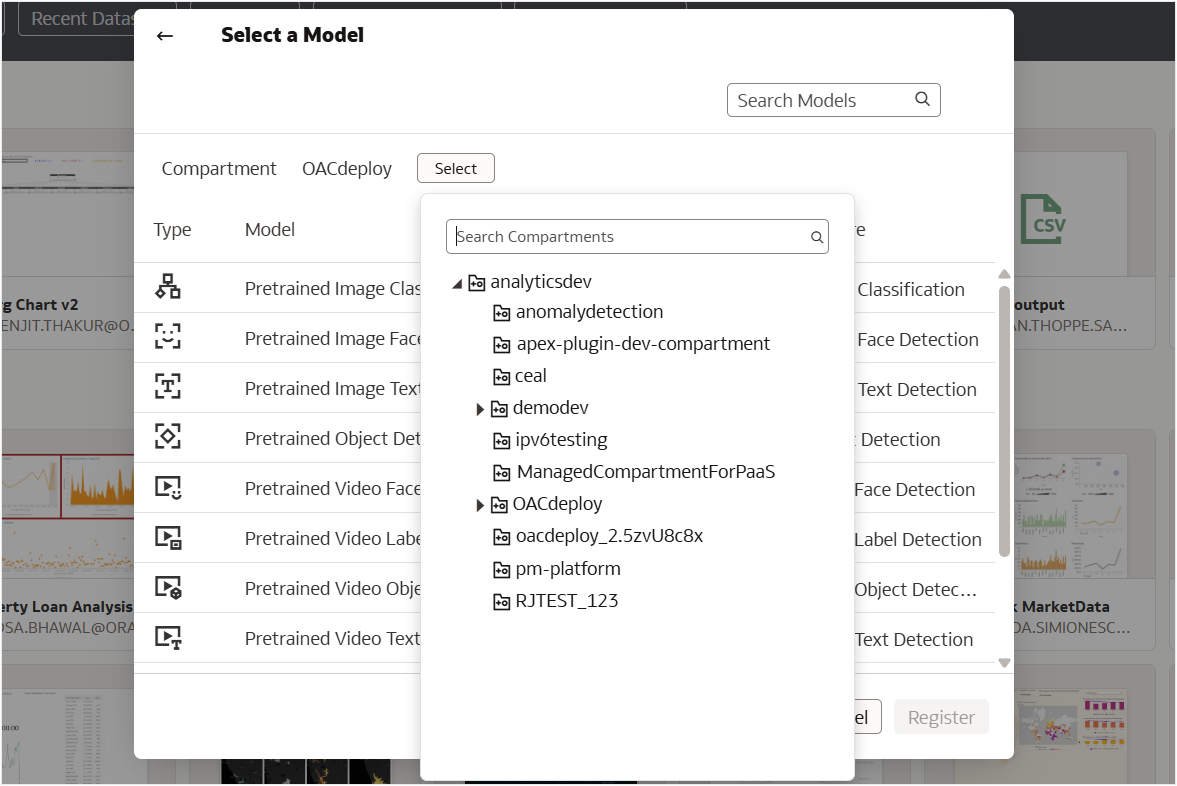

Image 4: Select Connections Dialog

- Select a compartment accessible to the OCI user.

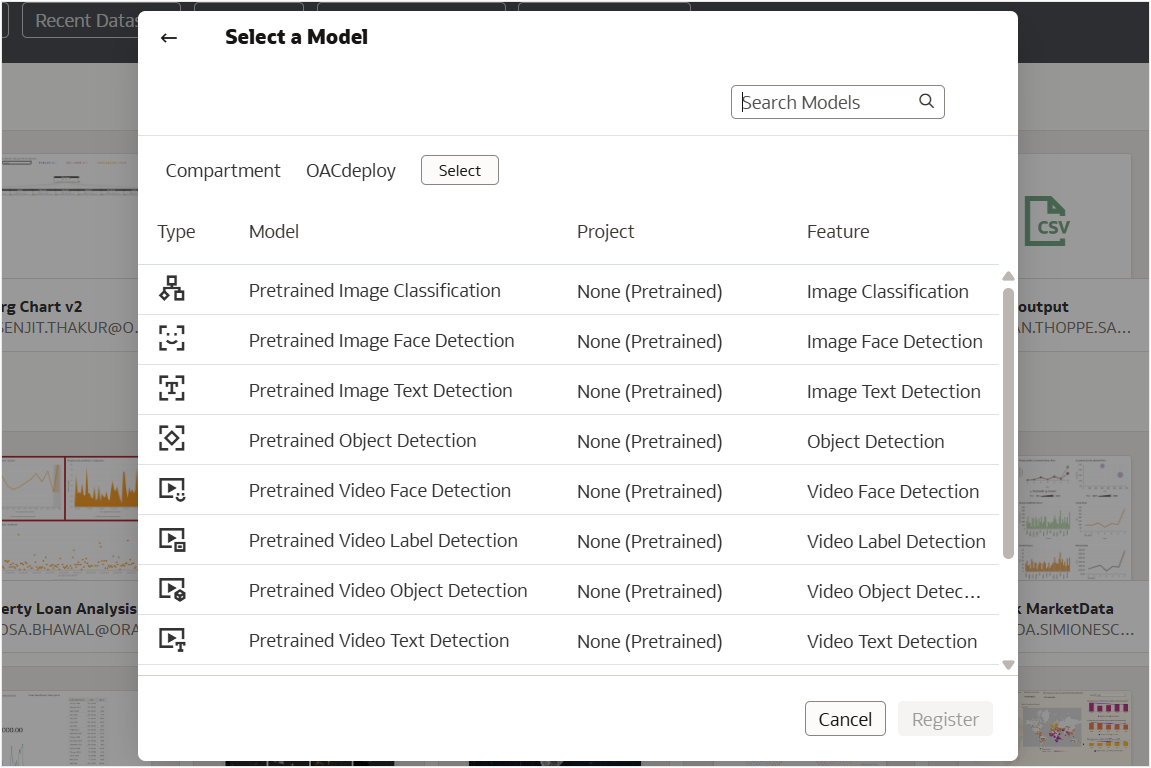

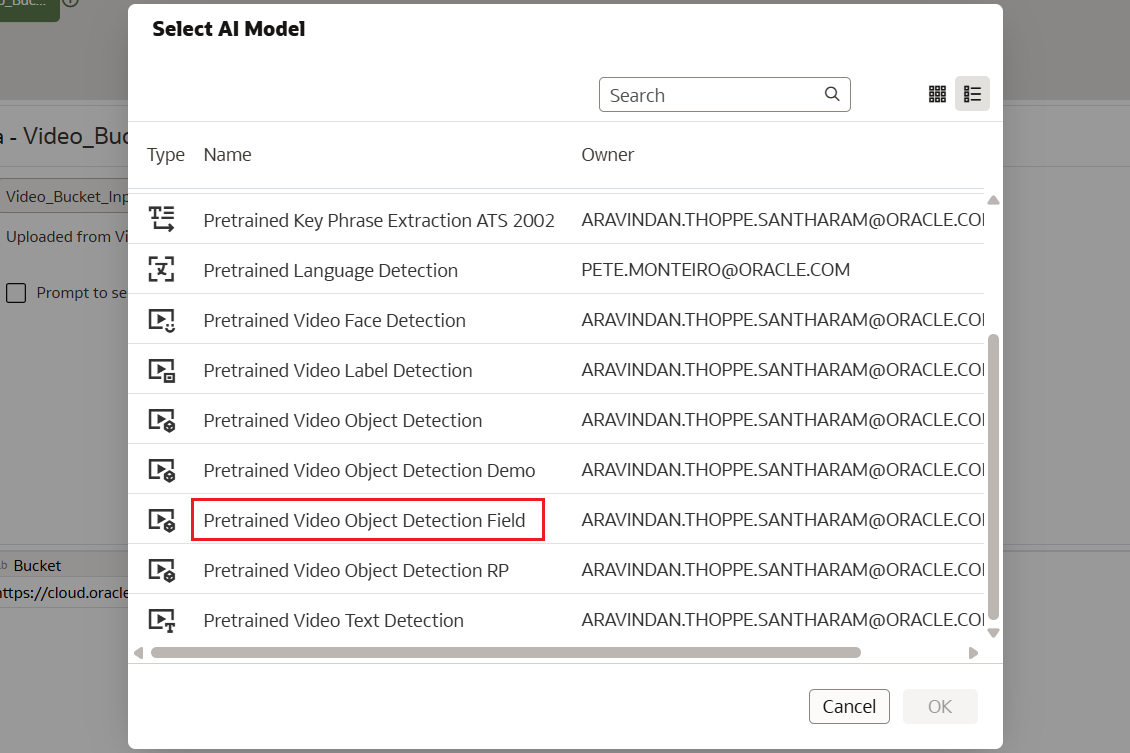

Image 5: Select Compartment Dialog

- From the list of image and vision models, select the Pretrained Video Object Detection model that you want to register.

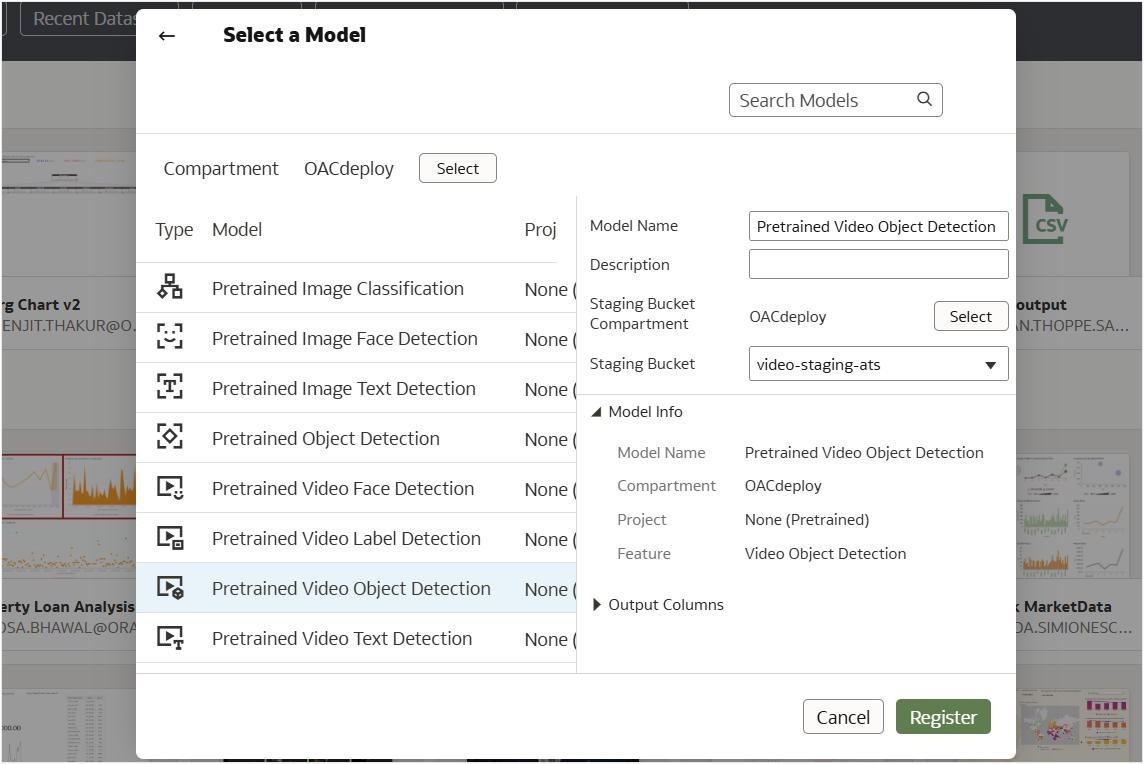

Image 6: Select Model Dialog

- Enter a model name and description.

Image 7: Select Model and Register

- To the right of Staging Bucket Compartment, click Select, and choose the staging bucket compartment.

- In Staging Bucket, select the staging bucket.

- Click Register.

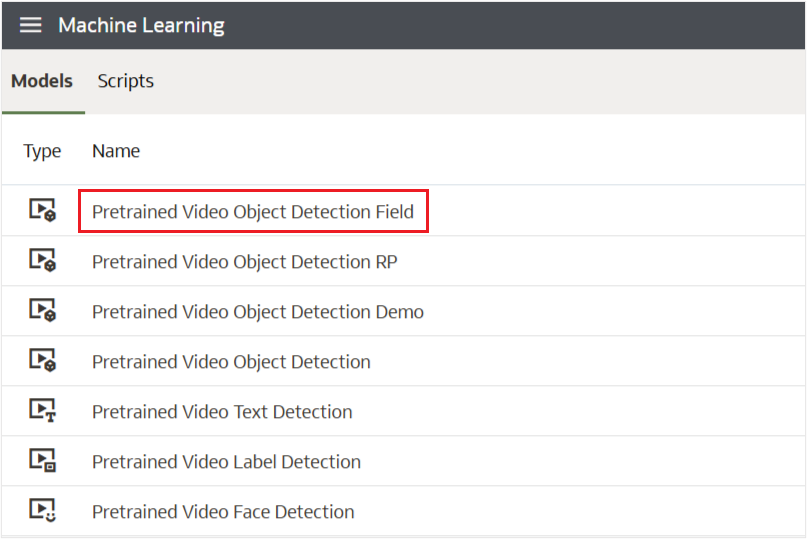

- Verify that the model is successfully registered. On the Home page, click Navigator (in the left corner), and then Machine Learning. Check that the model is displayed on the Machine Learning Models page.

Image 8: Machine Learning Models Page

Invoke the Video Object Detection Model

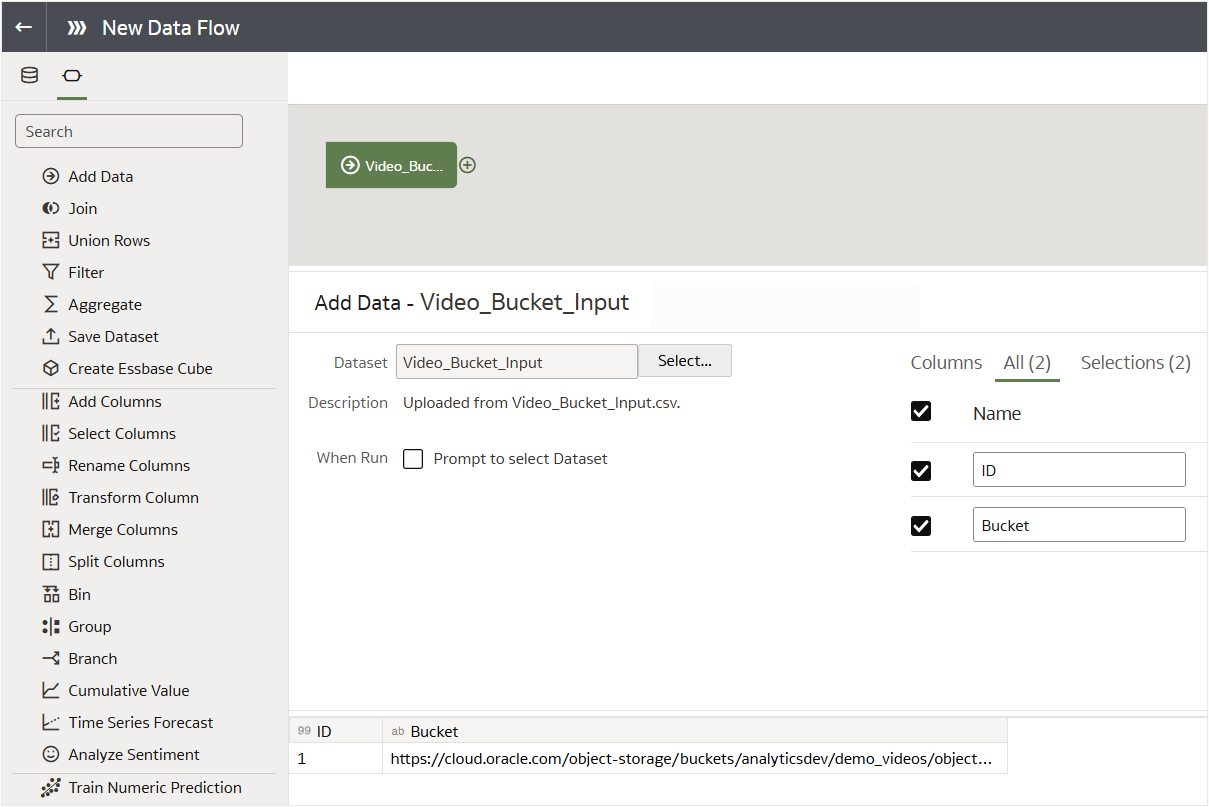

Here are step-by-step instructions on how to run the model and retrieve results. Create a data flow and select the dataset (created earlier) with the Bucket URL of the input video file.

- On the OAC Home page, click Create, and then Data Flow.

- On the Add Data dialog, double-click the dataset you created earlier to select it.

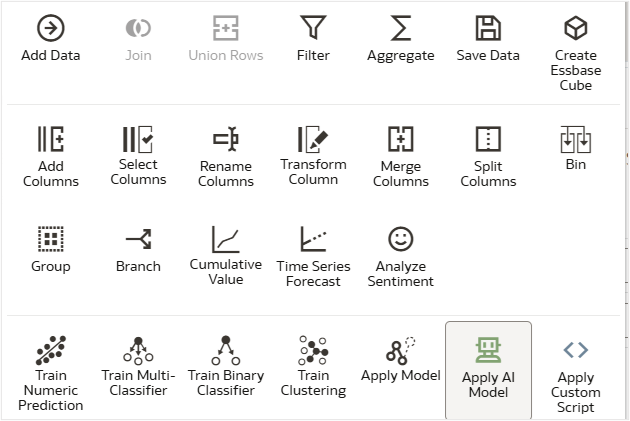

Image 9: New Data Flow

- Click Add a step (+), and select Apply AI Model.

Image 10: Add New Step

- From the list of AI models, select the Pretrained Video Object Detection model you registered earlier.

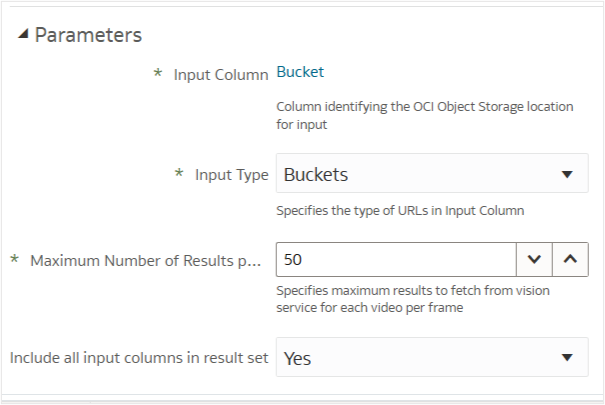

Image 11: Select AI Model

- In Parameters, for Input Column, select Bucket, and for Input Type, select Buckets. Alternatively, if you have only a few videos to process, you can create the input dataset with a list of individual video URLs. In that case, select the video URL column as the Input Column and Videos as the Input Type.

Image 12: Parameters Section

- Click Add a step (+), and then select Save Data.

- Provide the output dataset details, and then click Run Data Flow.

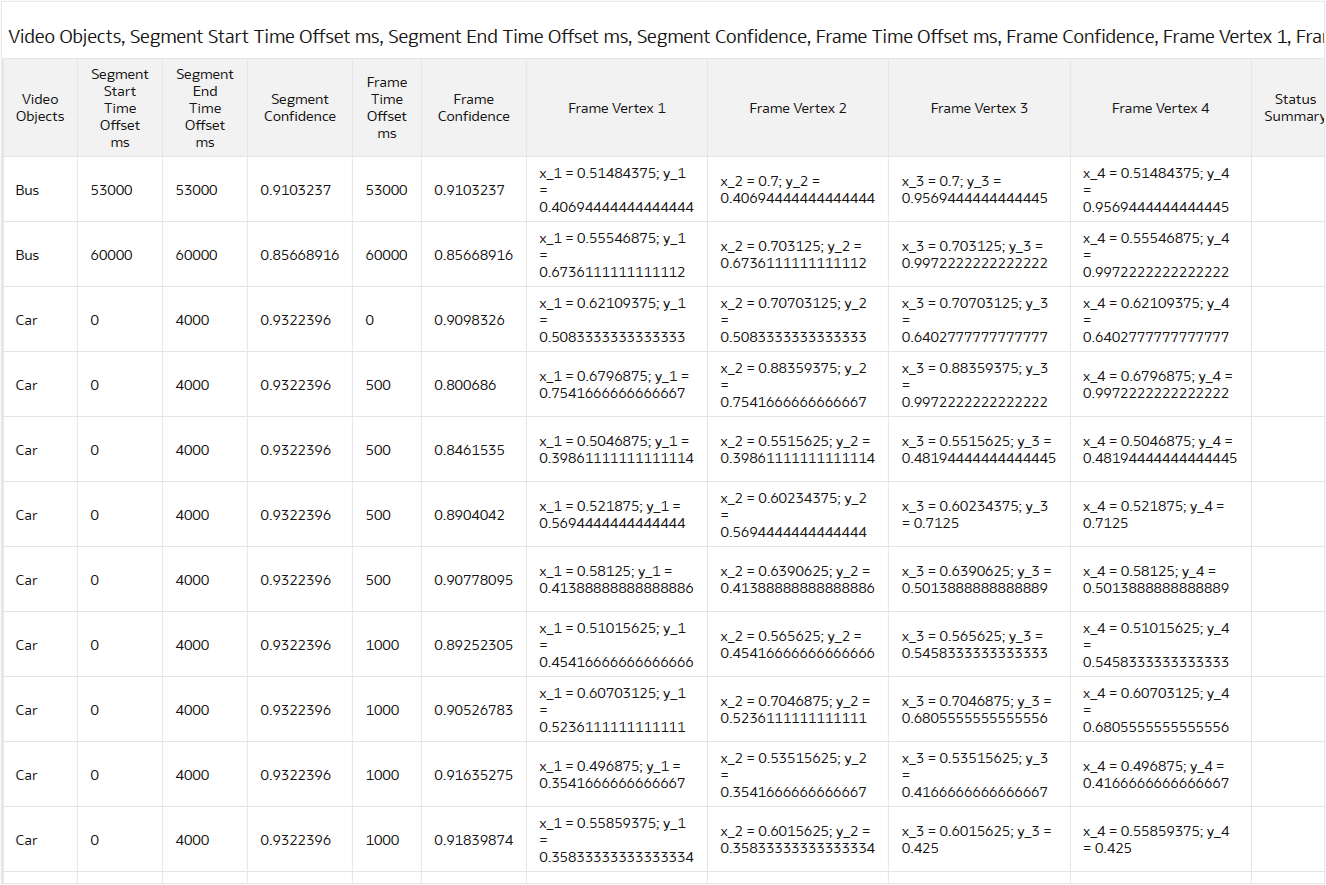

Upon successful completion, the output dataset is available with the relevant columns such as Video Objects, Frame Time Offset, Frame Confidence, Frame Vertices, and so on. You can aggregate and visualize the output to create insightful dashboards.
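As a starting point for that aggregation, here is a minimal Python sketch that works on an export of the output dataset. It assumes the export uses the column names listed above and that Frame Confidence is a number between 0 and 1. The sample rows, the time offsets, and the 0.5 threshold are invented for illustration and are not real model output.

```python
import csv
import io
from collections import Counter

# Invented sample rows standing in for an export of the output dataset.
SAMPLE = """Video Objects,Frame Time Offset,Frame Confidence
Car,0,0.91
Car,500,0.88
Truck,500,0.42
Bus,1000,0.77
"""


def count_objects(csv_text: str, min_confidence: float = 0.5) -> Counter:
    """Count detections per object label, skipping low-confidence rows."""
    counts = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        if float(row["Frame Confidence"]) >= min_confidence:
            counts[row["Video Objects"]] += 1
    return counts


if __name__ == "__main__":
    for label, total in count_objects(SAMPLE).most_common():
        print(label, total)
```

The same grouping can be built directly in an OAC workbook; the script only illustrates the kind of per-object count that a dashboard tile would show.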

Summary

In this article, you learned how to register and invoke a pretrained Video Object Detection model from OAC to extract the objects that appear in each frame of a video.

Call to Action

Now that you’ve learned how to register and invoke the OCI Video Object Detection model, check out the blog Enhancing Traffic Monitoring with OCI Video Analysis in Oracle Analytics to create an insight-driven dashboard and visualize key patterns and trends from the output data.