Oracle Analytics Cloud (OAC) now supports Oracle Cloud Infrastructure (OCI) Vision Video Analysis! This lets organizations analyze videos frame by frame to recognize faces, text, labels, and objects.

This article provides a quick tutorial on how you can create your first AI Vision Video Analysis in just five steps.

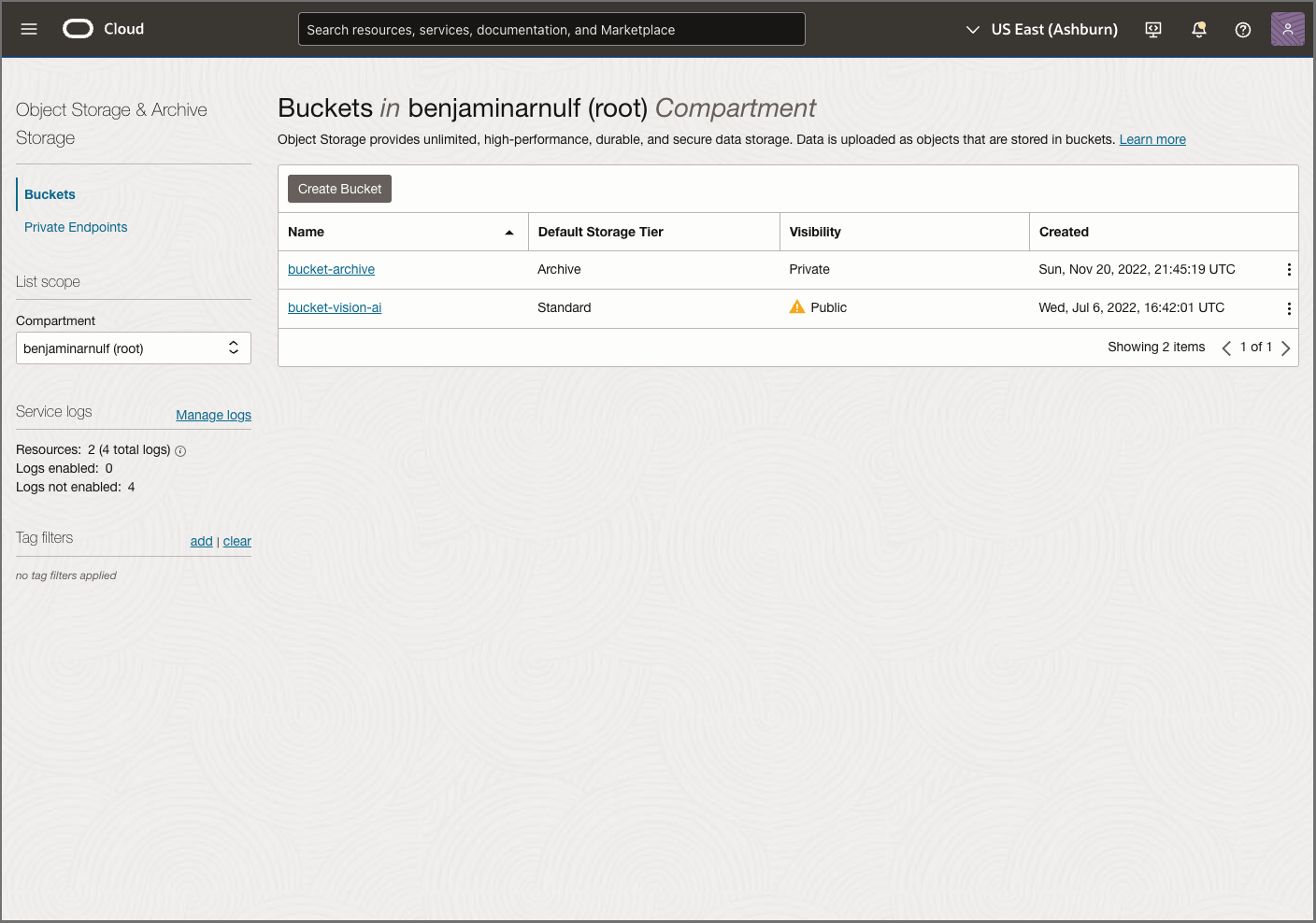

1. Upload your videos to Object Storage

a. Create a new Object Storage bucket in OCI.

b. Set the Visibility of the bucket that holds the video, and upload a video in MP4 format.

In this example, the bucket name is bucket-vision-ai in the root compartment.
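Before you upload, you can optionally confirm that a file really is an MP4 container instead of relying on its extension. The sketch below uses a hypothetical helper (`looks_like_mp4`) that is not part of OAC or OCI. It depends on the ISO base media file layout: each box begins with a 4-byte size followed by a 4-byte type code, and MP4 files normally open with an `ftyp` box.

```python
import sys


def looks_like_mp4(path: str) -> bool:
    """Heuristic check: True if the file starts with an ISO base media 'ftyp' box.

    Bytes 0-3 hold the box size and bytes 4-7 hold the box type. MP4 files
    normally open with 'ftyp'. Some MOV and other ISO base media files also
    pass this check, so it only rules out obviously wrong files.
    """
    with open(path, "rb") as f:
        header = f.read(8)
    return len(header) == 8 and header[4:8] == b"ftyp"


if __name__ == "__main__":
    # Usage: python check_mp4.py video1.mp4 video2.mp4
    for name in sys.argv[1:]:
        verdict = "looks like MP4" if looks_like_mp4(name) else "not an MP4 container"
        print(f"{name}: {verdict}")
```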

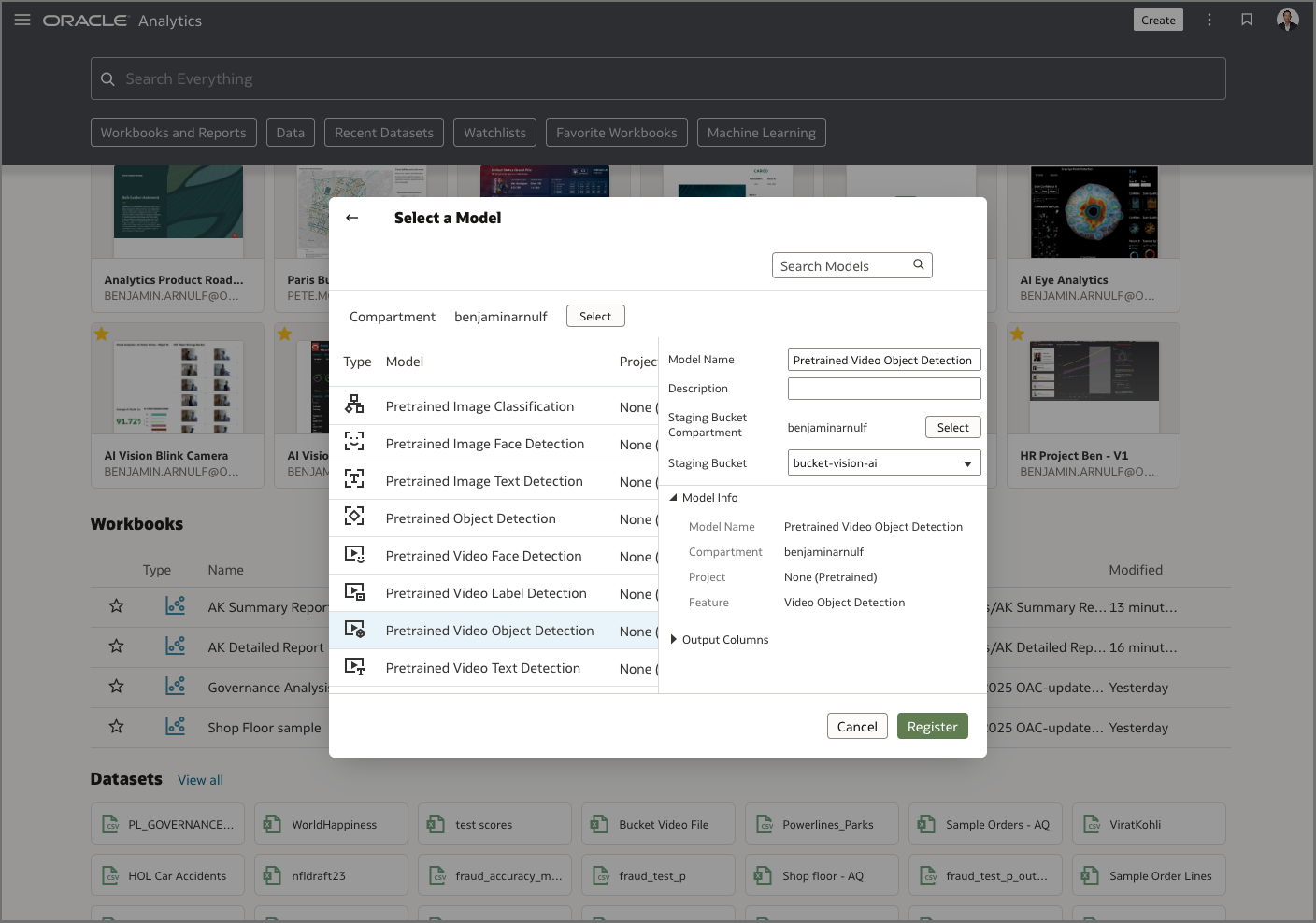

2. Register your OCI Vision video model

a. On the Analytics Home page, click Page Menu (ellipsis) in the top-right corner, select Register Model/Function, and then select OCI Vision Model.

b. Select the Compartment, and from Model Type, select Pretrained Video Object Detection.

c. Select the staging bucket and click Register. In this example, the staging bucket is bucket-vision-ai.

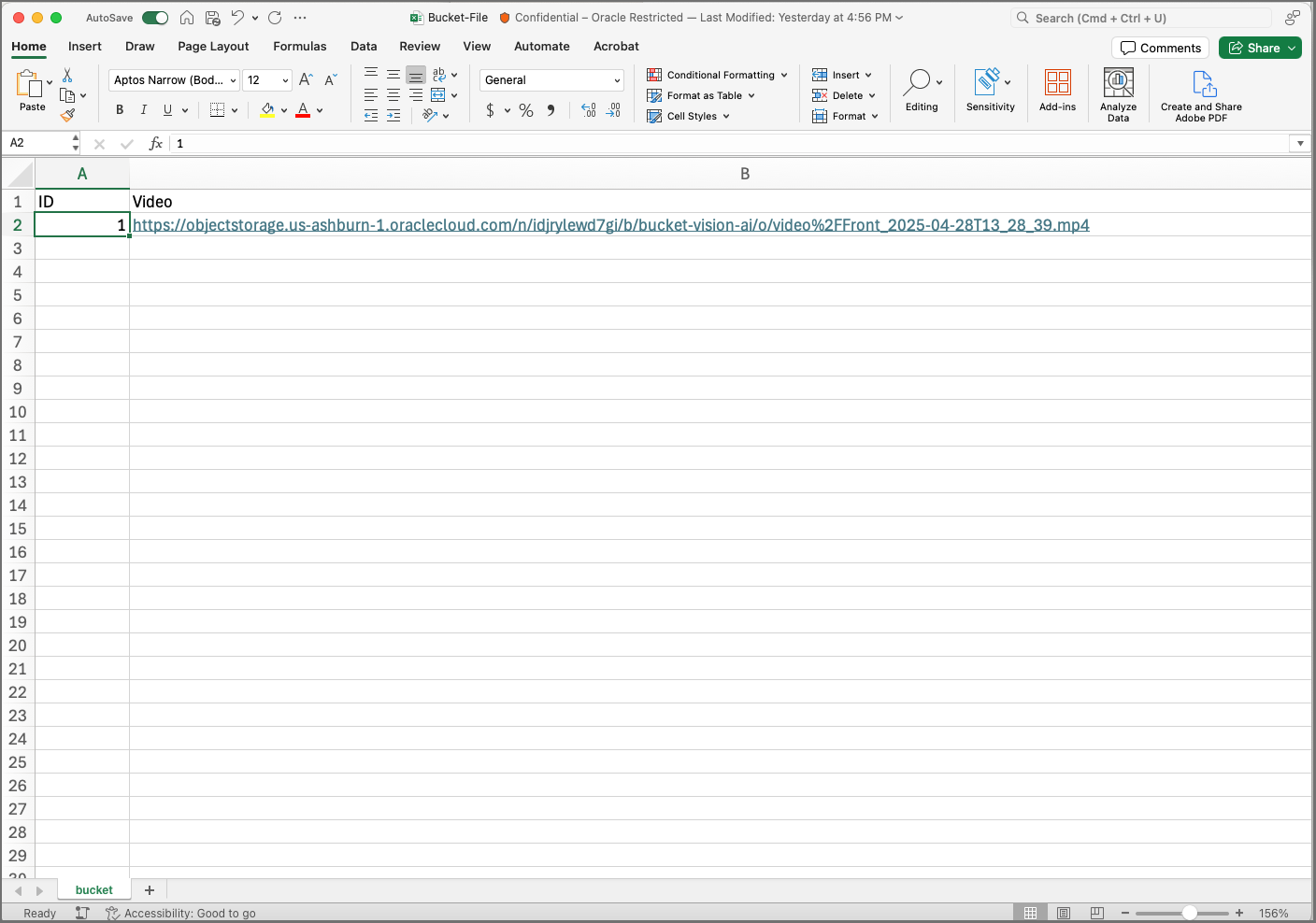

3. Create a CSV file and import it as a dataset

Now that your video is uploaded and the model is ready for analysis in OAC, create a file that lists the path of each video.

a. Create a CSV file or an Excel spreadsheet with the following columns and content:

- Column A: ID

- Column B: Video

- In the ID column, enter 1

- In the Video column, enter the Object Storage URL of the video

This URL should be reachable from your network or from the network where the OAC instance runs.

b. Drag and drop the file onto the Analytics Home page to upload it to OAC and create a standard dataset.
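If you have several videos, you can generate the file instead of typing it. The sketch below uses hypothetical helpers that are not OAC or OCI tools. It assumes the native Object Storage URL format `https://objectstorage.<region>.oraclecloud.com/n/<namespace>/b/<bucket>/o/<object>`. The region, namespace, and object name are placeholders. If in doubt, copy each URL from the object's details in the OCI Console.

```python
import csv
import io
from typing import Iterable, TextIO
from urllib.parse import quote


def object_storage_url(region: str, namespace: str, bucket: str, object_name: str) -> str:
    """Build a native OCI Object Storage URL for one object (assumed format).

    Each path segment is percent-encoded. safe="" also encodes "/" in
    folder-style object names.
    """
    return (
        f"https://objectstorage.{region}.oraclecloud.com"
        f"/n/{quote(namespace, safe='')}"
        f"/b/{quote(bucket, safe='')}"
        f"/o/{quote(object_name, safe='')}"
    )


def write_video_dataset(video_urls: Iterable[str], out: TextIO) -> int:
    """Write an ID,Video CSV with one row per URL, IDs starting at 1; return the row count."""
    writer = csv.writer(out)
    writer.writerow(["ID", "Video"])  # column A = ID, column B = Video
    count = 0
    for video_id, url in enumerate(video_urls, start=1):
        writer.writerow([video_id, url])
        count += 1
    return count


# Placeholder region and namespace; "ducati ride.mp4" is a hypothetical object name.
url = object_storage_url("us-ashburn-1", "mynamespace", "bucket-vision-ai", "ducati ride.mp4")
buf = io.StringIO()
write_video_dataset([url], buf)
print(buf.getvalue())
```

To save the output to disk, open the file with `newline=""` (for example, `open("videos.csv", "w", newline="")`) so that the csv module controls line endings.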

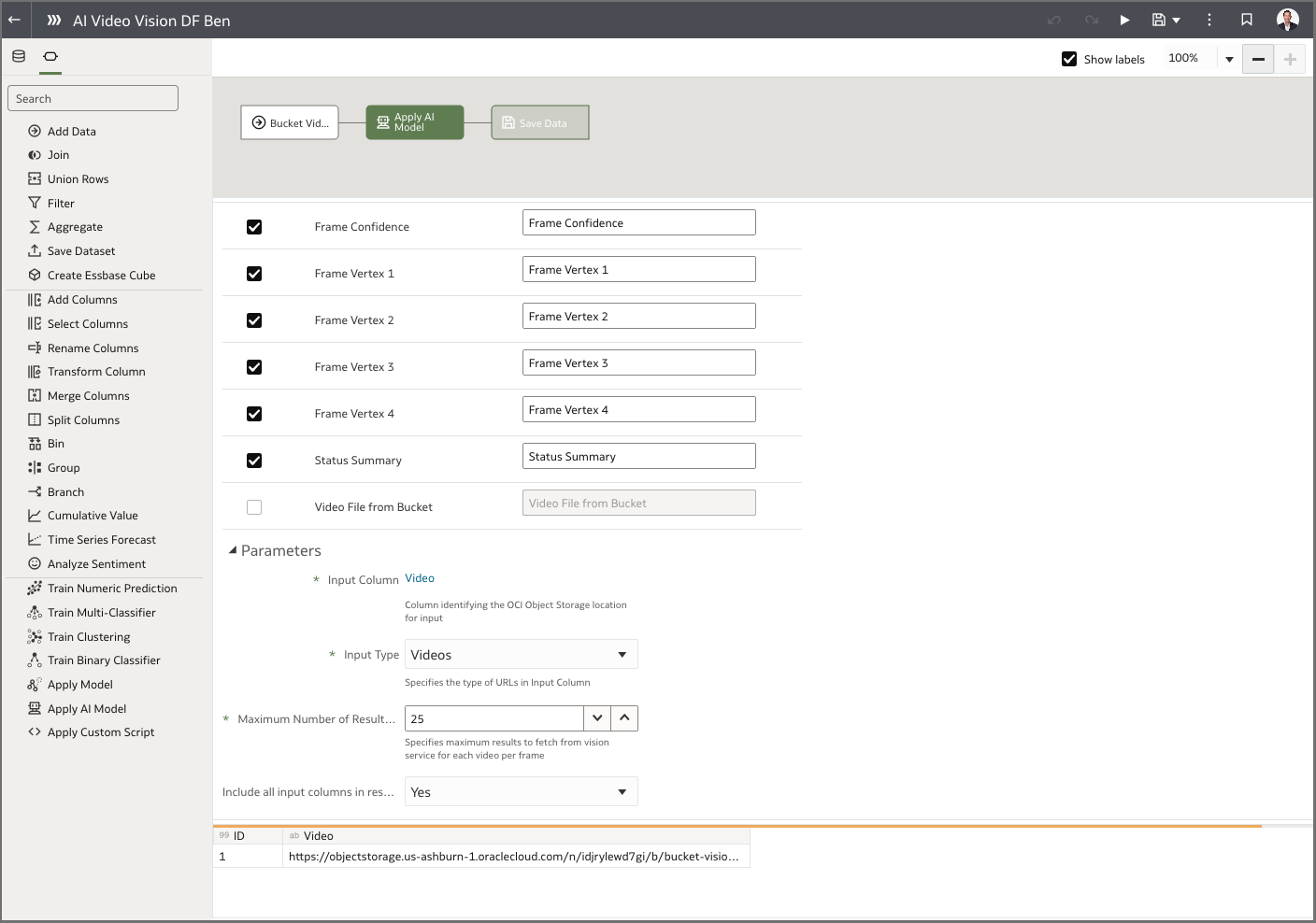

4. Create a data flow to analyze your videos

a. On the Analytics Home page, click Create, and then Data Flow.

b. Add the dataset that references your video by dragging and dropping it into the Add Data dialog.

In the Data Preview panel, the video URL is displayed.

c. Drag and drop Apply AI Model from Data Flow Steps to the Data Flow, and select the model you just registered.

d. Select all the options to add the columns to your dataset.

- The Input Column is normally set to Video. Because this dataset references each video's URL directly rather than an entire bucket, select Videos as the Input Type.

- The maximum number of inputs sets the maximum number of objects you want the model to recognize in each frame of the video. In this example, the video shows only you and your Ducati, so enter 25, which leaves ample headroom.

e. Leave all other settings as they are so that the output is saved to a new dataset.

f. Save the data flow and click Run Data Flow.

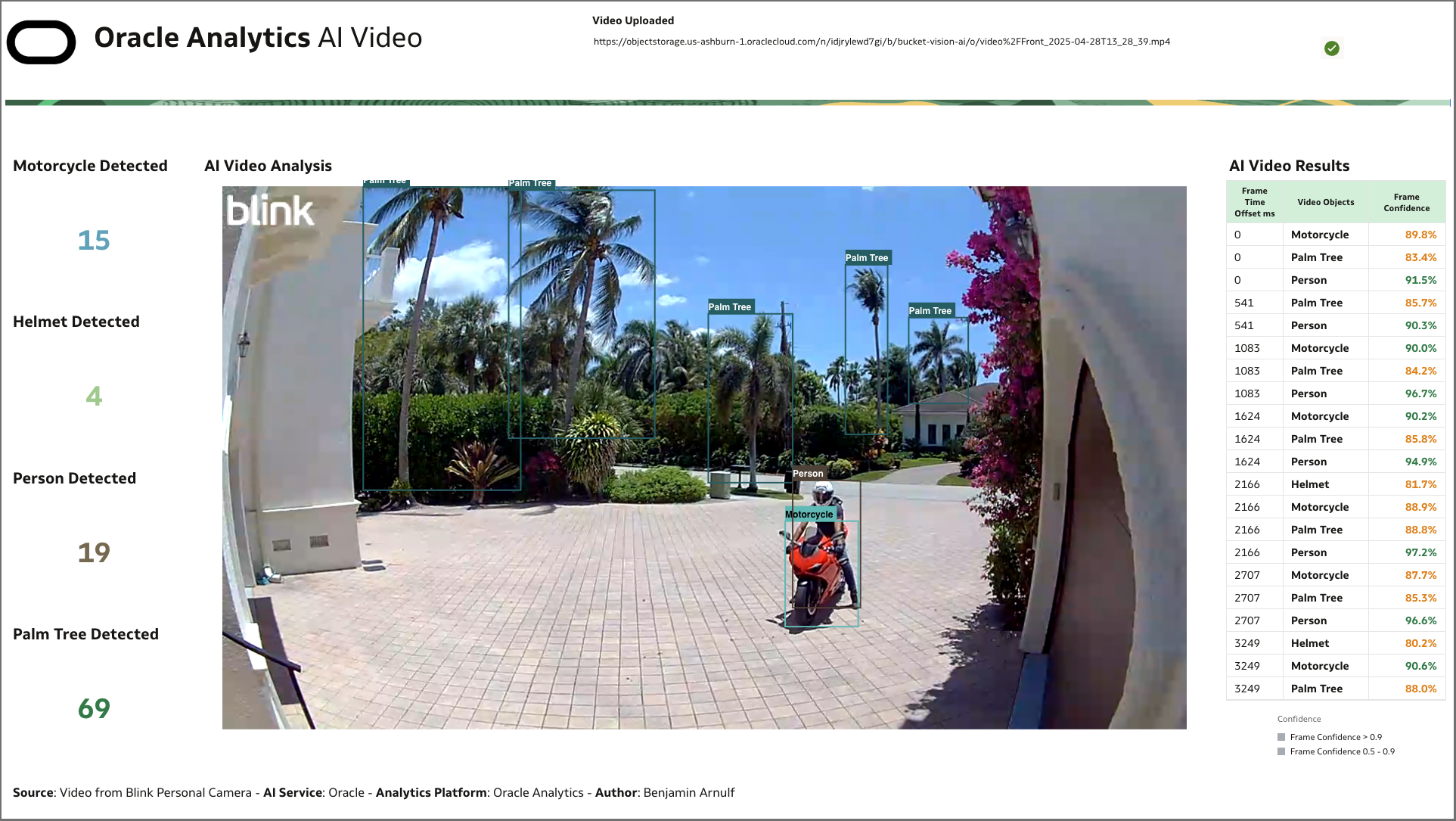

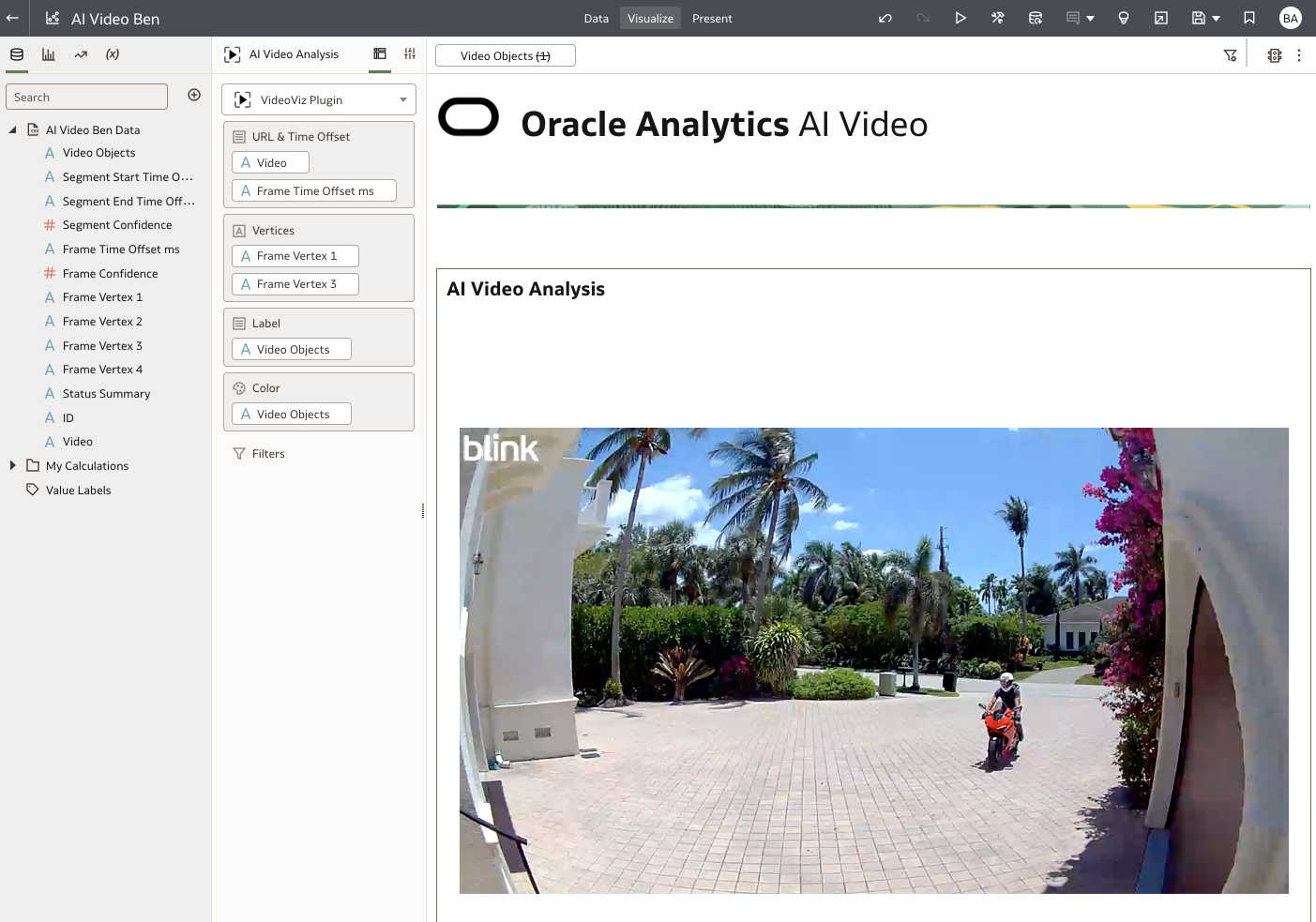

5. Create the workbook to visualize the AI Vision Video dataset

The final step is easy! Create a workbook with the new dataset and use the Video Viz Plugin extension, which is available on the Oracle Analytics Community.

a. In the Data tab, verify that Segment Confidence and Frame Confidence are set to MEASURE and that all other columns are set to ATTRIBUTE.

b. Drag and drop Video (containing the URL) and Frame Time Offset ms to URL & Time Offset.

c. Drag and drop Frame Vertex 1 and Frame Vertex 3 to Vertices.

d. Drag and drop Video Objects to Label and Color so that the label and color change with each detected object.
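To illustrate what the plug-in does with these columns, the sketch below converts one output row into a seek time and a pixel rectangle. It rests on several assumptions that the article does not confirm. Frame Vertex 1 and Frame Vertex 3 are taken to be opposite corners of the bounding box in normalized 0 to 1 coordinates. Frame Time Offset ms is taken to be milliseconds from the start of the video. The function names and the 1920x1080 frame size are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Box:
    left: int
    top: int
    width: int
    height: int


def to_pixel_box(v1: tuple[float, float], v3: tuple[float, float], frame_w: int, frame_h: int) -> Box:
    """Turn two opposite normalized corners (x, y) into a pixel rectangle.

    Assumes normalized 0-1 coordinates. Sorting each axis makes the result
    independent of which corner is Vertex 1.
    """
    x0, x1 = sorted((v1[0], v3[0]))
    y0, y1 = sorted((v1[1], v3[1]))
    left, top = round(x0 * frame_w), round(y0 * frame_h)
    return Box(left, top, round(x1 * frame_w) - left, round(y1 * frame_h) - top)


def offset_to_seconds(offset_ms: float) -> float:
    """Frame Time Offset is assumed to be in milliseconds; video players seek in seconds."""
    return offset_ms / 1000.0


# Hypothetical detection 2.5 seconds into a 1920x1080 video.
print(offset_to_seconds(2500), to_pixel_box((0.25, 0.5), (0.75, 0.9), 1920, 1080))
```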

The result: clicking Play displays the objects detected in the video. You can also filter by timeframe and object type, and then play the video analysis.
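The timeframe and object-type filters narrow which detections the plug-in draws. As a rough illustration outside OAC, the sketch below applies the same kind of filter to rows of the output dataset. The field names (`label`, `offset_ms`, `confidence`) and the sample rows are hypothetical stand-ins for the Video Objects, Frame Time Offset ms, and Frame Confidence columns. They are not real model output.

```python
from typing import Iterable, NamedTuple


class Detection(NamedTuple):
    label: str         # stands in for Video Objects
    offset_ms: int     # stands in for Frame Time Offset ms
    confidence: float  # stands in for Frame Confidence


def filter_detections(rows: Iterable[Detection], start_ms: int, end_ms: int, labels: set[str]) -> list[Detection]:
    """Keep detections whose offset lies in [start_ms, end_ms] and whose label is in `labels`."""
    return [r for r in rows if start_ms <= r.offset_ms <= end_ms and r.label in labels]


# Hypothetical sample rows, for illustration only.
rows = [
    Detection("Person", 500, 0.97),
    Detection("Motorcycle", 500, 0.93),
    Detection("Motorcycle", 4000, 0.91),
    Detection("Helmet", 1200, 0.88),
]
print(filter_detections(rows, 0, 2000, {"Motorcycle"}))
```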

Key Takeaways

Oracle Analytics lets authors analyze multiple videos using OCI Vision models. It provides a low-code way to detect and analyze objects in video using drag-and-drop actions.

For more information:

- What’s new docs for Oracle Analytics Cloud May 2025

- YouTube May 2025 feature playlist

- Oracle Analytics Community

- Oracle Analytics Capabilities Explorer

- Oracle Analytics AI Assistant blog

- Get started with the AI Assistant in three steps