This article describes how to automate uploading data files to Oracle Object Storage Service, following the process described in the article Loading Data from Oracle Object Storage into Oracle Fusion Analytics.

NOTE: You can also use this script with NetSuite Analytics.

Oracle Fusion Analytics is a family of prebuilt, cloud-native analytics applications for Oracle Fusion Cloud Applications that provide operational and functional insights to support decision-making. Migrating data files to object storage can be arduous, especially when there are many files. Typically, it involves creating directories and uploading each file individually.

You can use this script to upload datasets (xlsx and csv files) to Oracle Object Storage Service (OSS) and then load the data for analytics through the OSS Connector. It significantly reduces the time required and simplifies the data refresh process.

If you upload N data files for the OSS Connector manually, you must:

- Create N folders.

- Upload 2N + 1 files.
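The manual workload above can be expressed as a small helper. This is a sketch that assumes the 2N + 1 count breaks down as one data file plus one companion metadata file per folder, plus one top-level metadata file for the whole set; `manual_upload_workload` is a hypothetical name, and the linked article remains the authority on which files are required.

```python
from typing import Tuple


def manual_upload_workload(n_data_files: int) -> Tuple[int, int]:
    """Return (folders_to_create, files_to_upload) for N data files.

    Assumption: each data file gets its own folder and one companion
    metadata file, and one extra top-level metadata file covers the set.
    """
    if n_data_files < 0:
        raise ValueError("n_data_files must be non-negative")
    folders = n_data_files
    files = n_data_files * 2 + 1
    return folders, files


print(manual_upload_workload(10))  # (10, 21)
```

Even a modest set of 10 datasets means 10 folders and 21 separate uploads when done by hand.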

With this script, you provide only two inputs:

- The local directory that contains the datasets to be used in Fusion Analytics through the Oracle Object Storage Service Connector.

- The pre-authenticated URL for the OSS folder where you plan to upload the datasets.

The script does the rest: it uploads all the xlsx and csv files to Oracle Object Storage and creates the folders needed to preserve the local directory structure. For more details about the data loading requirements, refer to Loading Data from Oracle Object Storage into Oracle Fusion Analytics.
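Preserving the directory structure amounts to turning each file's path, relative to the base directory, into an object name that uses forward slashes. The sketch below shows one way to do that with the standard library. `list_upload_objects` is a hypothetical helper, and the case-insensitive `.csv`/`.xlsx` filter is an assumption, not a confirmed detail of the community script.

```python
import os
from typing import List, Tuple

# Extensions this sketch treats as datasets (assumption; compared case-insensitively).
DATA_EXTENSIONS = (".csv", ".xlsx")


def list_upload_objects(base_dir: str) -> List[Tuple[str, str]]:
    """Return (local_path, object_name) pairs for every data file under base_dir.

    Object names are relative to base_dir and always use '/', so the
    folder layout on disk is reproduced as prefixes in Object Storage.
    """
    pairs = []
    for root, dirs, files in os.walk(base_dir):
        dirs.sort()  # visit subdirectories in a stable, sorted order
        for name in sorted(files):
            if not name.lower().endswith(DATA_EXTENSIONS):
                continue  # skip anything that is not a csv or xlsx file
            local_path = os.path.join(root, name)
            rel_path = os.path.relpath(local_path, base_dir)
            object_name = rel_path.replace(os.sep, "/")
            pairs.append((local_path, object_name))
    return pairs
```

For a hypothetical file at `<path>/SALES/SALES.csv`, the resulting object name is `SALES/SALES.csv`. Object Storage has no real directories, so the slash-separated prefix is what makes the folder appear in the console.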

Create the Pre-Authenticated URL

If you’ve created the bucket and parent folder by following the steps in section A of Loading Data from Oracle Object Storage into Oracle Fusion Analytics, then you can get the pre-authenticated URL as follows:

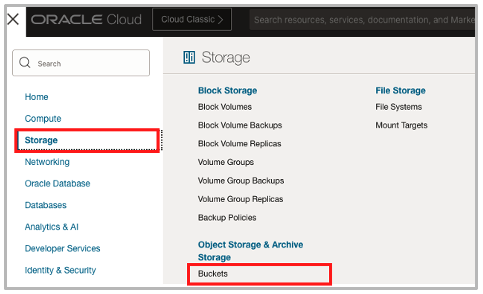

- In the OCI Console, go to the bucket you created previously for OSS by selecting Storage, then Buckets, from the navigation menu.

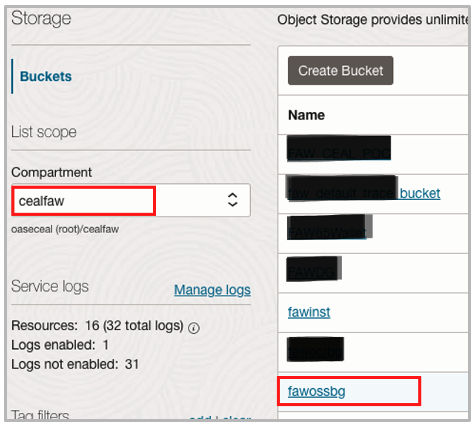

- In Compartment, select the applicable compartment and then select your bucket.

-

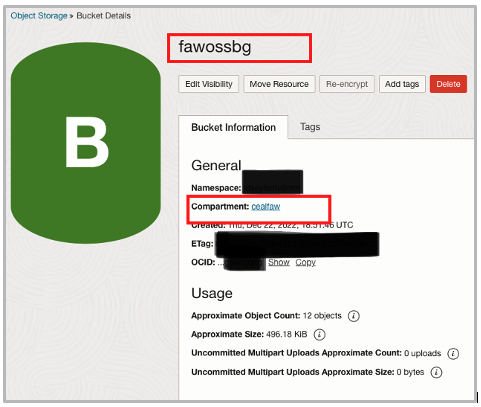

After selecting, confirm by checking the compartment values and bucket name on the Bucket Details page.

-

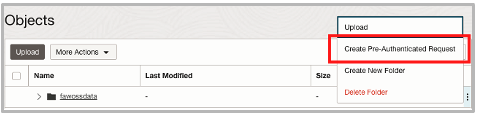

Click the three dots on the right side of the parent folder that you had created previously and select Create Pre-Authenticated Request from the menu.

-

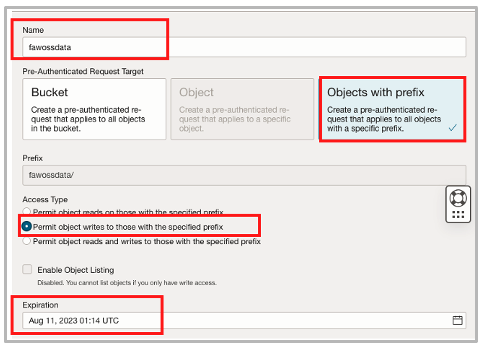

On the Create Pre-Authenticated Request page:

- For Name, enter the folder name. Using the same name as the folder is optional but recommended for simplicity.

- For Access Type, select Permit object write to those with the specified prefix.

- For Expiration, select an expiration date for the link. For security, keep the expiration period short.

After OCI generates the link, copy it into a text file and append the folder name, followed by a slash, to complete the URL. The script uses this URL.
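Appending the folder name is simple string work, but copying the link by hand makes it easy to pick up a stray space or drop a slash. This sketch trims whitespace, ensures exactly one slash at the join, and ends the result with a slash, matching the shape of the example URL used in the script. The helper name `complete_pre_auth_url` is hypothetical.

```python
def complete_pre_auth_url(par_url: str, folder_name: str) -> str:
    """Append a folder name to a pre-authenticated request URL.

    Surrounding whitespace is stripped, duplicate slashes at the join are
    avoided, and the result ends with '/' so object names can be appended.
    """
    base = par_url.strip().rstrip("/")
    folder = folder_name.strip().strip("/")
    return base + "/" + folder + "/"


print(complete_pre_auth_url(
    " https://objectstorage.us-xxxxx-1.oraclecloud.com/p/xxxxxxxxJ_xxxxxx_xxxxxx-xxxxxxxxx/n/xxxxxxx/b/fawossbg/o/ ",
    "fawossdata",
))
```

With these placeholder values, the printed URL ends in `/b/fawossbg/o/fawossdata/`, the same form as the pre_auth_url value the script expects.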

Modify and Execute the Script

Disclaimer – This script is provided as an example, and its use is at your discretion. Oracle can't warrant its execution or support its output in your environment.

Use this community script as an example. You need Python 3.6 to run the script.

Update two parameters in the script:

- Update the pre_auth_url parameter with the pre-authenticated URL you created earlier, including the appended folder name.

- Update the path parameter with the local directory that contains the data files.

It should look something like this:

path = '/Users/Downloads/Datafiles'

pre_auth_url = 'https://objectstorage.us-xxxxx-1.oraclecloud.com/p/xxxxxxxxJ_xxxxxx_xxxxxx-xxxxxxxxx/n/xxxxxxx/b/fawossbg/o/fawossdata/'
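A pre-authenticated request with write access accepts an HTTP `PUT` of the file body to the pre-authenticated URL followed by the object name. The sketch below uses only the standard library (`urllib.request`) and stays compatible with Python 3.6. The helper names `build_put_request` and `upload_file` are hypothetical, and this is not necessarily how the community script performs its uploads. Object names are percent-encoded with `urllib.parse.quote`, which leaves `/` intact, so nested folders survive.

```python
import urllib.parse
import urllib.request


def build_put_request(pre_auth_url: str, object_name: str, body: bytes) -> urllib.request.Request:
    """Build (but do not send) a PUT request that writes one object.

    pre_auth_url is expected to end with '/', as in the example above;
    quote() keeps '/' so nested folders become object-name prefixes.
    """
    url = pre_auth_url + urllib.parse.quote(object_name)
    # Set an explicit content type; otherwise urllib defaults to form encoding.
    headers = {"Content-Type": "application/octet-stream"}
    return urllib.request.Request(url, data=body, headers=headers, method="PUT")


def upload_file(pre_auth_url: str, local_path: str, object_name: str,
                timeout: float = 60.0) -> int:
    """Upload one local file and return the HTTP status code.

    Failures such as an expired link raise urllib.error.HTTPError.
    """
    with open(local_path, "rb") as handle:
        body = handle.read()  # whole file in memory; fine for modest datasets
    request = build_put_request(pre_auth_url, object_name, body)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Calling `upload_file(pre_auth_url, local_path, object_name)` once per data file, with object names relative to `path`, reproduces the local folder layout under the folder named in the URL.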

Run the script with Python 3.6. Make sure that all the required files are in the directory specified by the path parameter.

The script throws an error if you haven’t provided the required metadata file.
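A missing metadata file stops the upload, so checking the inputs before sending anything can save a failed run. The sketch below is a hypothetical pre-flight step, not part of the original script. It confirms that the interpreter is Python 3.6 or later, that `path` is an existing directory, and that the files you list are present. The caller supplies the file names; take the exact names from the linked article rather than from this example.

```python
import os
import sys
from typing import Iterable


def preflight(path: str, required_files: Iterable[str]) -> None:
    """Validate inputs before uploading; return None when everything is in place.

    Raises RuntimeError on an older interpreter, NotADirectoryError if path is
    not a directory, and FileNotFoundError naming any missing required files.
    """
    if sys.version_info < (3, 6):
        raise RuntimeError("Python 3.6 or later is required")
    if not os.path.isdir(path):
        raise NotADirectoryError(path)
    missing = [name for name in required_files
               if not os.path.isfile(os.path.join(path, name))]
    if missing:
        raise FileNotFoundError("Missing required file(s): " + ", ".join(missing))
```

Reporting every missing file at once, rather than stopping at the first, lets you fix the dataset directory in a single pass.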

Refer to the article Loading Data from Oracle Object Storage into Oracle Fusion Analytics for all the required files and naming conventions.

Call to Action

Follow the guidance in this article to automatically upload your company’s data files to Oracle Object Storage and create the necessary folder structure.