Introduction

In the rapidly evolving landscape of machine learning (ML) and artificial intelligence (AI), embeddings have become the backbone of many state-of-the-art systems, including large language models (LLMs). These dense vector representations encapsulate complex data modalities, from words and sentences to entire documents, images, and more. Feature stores, designed to streamline the ML pipeline by providing consistent and efficient access to preprocessed features, are now facing the challenge of effectively handling these embeddings. But the plot thickens with the rise of LLMs.

With their unparalleled capability to generate and interpret embeddings, LLMs have significantly reshaped the realm of natural language processing and understanding. This blog explores the necessity of incorporating embedding support into feature stores, highlighting the significant influence and possibilities unlocked by LLMs. In this exploration, we uncover the interconnectedness among embeddings, feature stores, and LLMs, delving into the future possibilities of this combination in contemporary AI applications.

The power of embeddings and the feature store

Managing a plethora of datasets, data sources, and transformations for machine learning, including word embeddings, can be complex and costly. Issues such as insufficient data cleaning, inconsistencies, transformation errors, data drift, and disparities between training and serving stages can delay model development and reduce its effectiveness, particularly in handling word embeddings. A feature store emerges as an all-encompassing solution in this scenario, providing a unified platform for data transformation and access throughout the training and serving phases. It ensures a steadfast pipeline for the ingestion and querying of data, including word embeddings, enhancing their usability and performance. This notebook showcases how to leverage a feature store to create and store word embeddings and in this process highlight the efficiency of re-usability and efficient storage methods to store word embeddings. It helps in the following areas:

-

Efficient storage for text embeddings: A fundamental role of the embedding feature store is housing pretrained word embeddings, such as Word2Vec, GloVe, FastText, or BERT. Originating from vast text datasets, these embeddings capture word semantics, proving beneficial for numerous natural language processing (NLP) tasks, including text categorization, entity identification, sentiment interpretation, among others.

-

Support for tailormade and custom embeddings: Some firms choose to craft their embeddings based on specific domain data or modify existing pretrained embeddings to align more closely with their distinct needs. An embedding feature store caters to these custom embeddings, making them available for diverse projects or model applications. Within the feature store, users can save these tailored embeddings with the transformation feature.

-

Swift access: The architecture of embedding feature stores is geared towards the rapid and effective extraction of embeddings. This efficiency is paramount, given the potentially large dimensionality of embeddings and the computational demand of their calculation. With a dedicated storage system, recalculating the same embeddings becomes redundant.

-

Uniformity and versioning: Version management and control are integral to an embedding feature store. Despite the emergence of new embedding versions, models can consistently utilize the same embeddings, preserving reproducibility and ensuring stable model operations over durations.

-

Handling vast data volumes: For entities grappling with colossal data volumes, the streamlined storage and extraction of embeddings can be daunting. However, a feature store powered by the Spark processing engine is adept at managing large-scale embeddings, offering tools for distributed data storage and access.

BERT and DistilBERT

DistilBERT, a lighter and faster variant of the BERT model, has shown promise in various Natural Language Processing tasks without compromising much on performance. Its compact design, achieved by distillation, makes it particularly suitable for storing embeddings efficiently in a Feature Store. Feature Stores, which serve as centralized repositories for machine learning features, need to manage storage effectively to ensure quick retrieval and usability of features. Embeddings from large models can consume significant storage space and increase retrieval times, thus affecting the efficiency of downstream tasks. By utilizing DistilBERT embeddings, data scientists can strike a balance between the quality of the embeddings and storage efficiency, ensuring that features are both relevant for model training and promptly accessible within the Feature Store. Without further delay, let’s get started.

Summary of steps

The following steps summarize the activities taking place:

-

Install the feature store instance with the following prerequisites:

-

Policies

-

Authentication and authorization

-

Variables declaration

-

-

Import feature store-dependent Python libraries into the notebook.

-

Load the SQuAD dataset.

-

Install the feature store construct.

-

Create entities and transformations on the word embeddings.

-

Create feature groups.

-

Create datasets.

-

Train an ML model using the DistilBERT algorithm.

Prerequisites

Policies

Before getting started, set the policies and authentication methods. You can check the following documentation on setting the following requisites:

For this blog, we have taken the dataset from the Stanford Question Answering Dataset (SQuAD). SQuAD is a reading comprehension dataset widely used for training and evaluating ML models on the task of question answering. Each example in the dataset consists of a question posed by a crowd worker based on a given passage from Wikipedia. The answer to every question is a segment or span of the passage.

Authentication

Install ADS with the following command:

python -m pip install --pre oracle-ads==2.9.0rc0

You can set the authentication to “resource principal” or “api_gateway” with the following command:

import ads

ads.set_auth(auth="resource_principal", client_kwargs={"service_endpoint": "<api_gateway>"})Variables

The feature store stores the metadata associated in the hive metastore, providing the compartment ID and the metastore ID.

import os

metastore_id = "<metastore_id>"Import the required libraries

By default the PySpark 3.2, the feature store and Data Flow Conda environment includes preinstalled python libraries for feature validation and monitoring such as great-expectations and monitoring libraries. The joining functionality is heavily inspired by the APIs used by Pandas to merge, join, or filter DataFrames. The APIs allow you to specify which features to select from which feature group, how to join them, and which features to use in join conditions:

import warnings

warnings.filterwarnings("ignore", message="iteritems is deprecated")

warnings.filterwarnings("ignore", category=DeprecationWarning)

import pandas as pd

from ads.feature_store.feature_store import FeatureStore

from ads.feature_store.feature_group import FeatureGroup

from ads.feature_store.model_details import ModelDetails

from ads.feature_store.dataset import Dataset

from ads.feature_store.common.enums import DatasetIngestionMode

from ads.feature_store.feature_group_expectation import ExpectationType

from great_expectations.core import ExpectationSuite, ExpectationConfiguration

from ads.feature_store.feature_store_registrar import FeatureStoreRegistrar Load the SQuAD Dataset

Now load the SQuAD dataset. The following fields are important:

-

answers: The starting location of the answer token and the answer text,

-

context: Background information from which the model needs to extract the answer.

-

question: The question a model answers.

from datasets import load_dataset

squad = load_dataset("squad", split="train[:5000]")

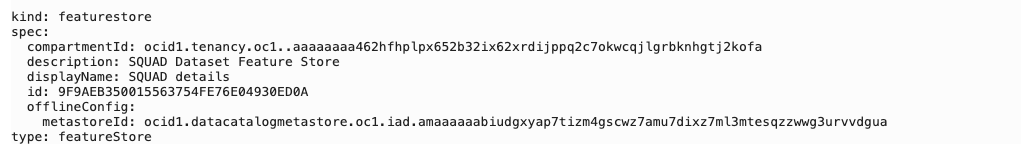

Create the feature store construct

If you have an existing feature store, you can refer to the existing feature store OCID. You can also follow the documentation or refer to the “Setting up Feature Store” section of our previous blog post. The feature store is the top-level entity of the service. Use the following steps.

-

Call the FeatureStore(). command to start the feature store

-

To materialize, call the .create() command.

feature_store_resource = (

FeatureStore().

with_description("SQUAD Dataset Feature Store").

with_compartment_id(compartment_id).

with_display_name("SQUAD details").

with_offline_config(metastore_id=metastore_id)

)

#use the .Create to create the feature store

feature_store = feature_store_resource.create()

feature_store

The following image shows the feature store construct:

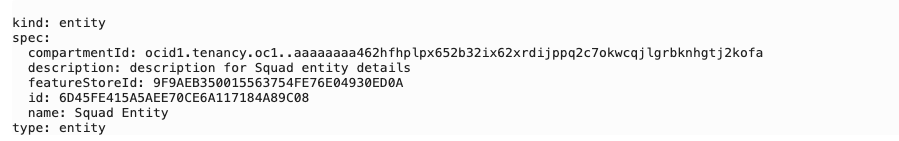

Create entities and transformations

An entity is a logical segregation of feature store entities. In the context of a feature store, transformations refer to operations or a series of operations applied to raw data to derive features that train machine learning models. These transformations are critical because raw data often isn’t in a format that’s directly usable or optimal for ML tasks.

To create entities, use the following command:

entity = feature_store.create_entity(

display_name="Squad Entity",

description="description for Squad entity details"

)

entity

Now, we generate embeddings for sentences by utilizing the transformation construct within the feature store. This process involves grouping sentences into batches and transforming words and sentences into embeddings.

def squad_embedding_transformation(df):

from datasets import Dataset

from transformers import AutoTokenizer

import json

import numpy as np

tokenizer = AutoTokenizer.from_pretrained("distilbert-base-uncased")

def preprocess_function(examples):

questions = [q.strip() for q in examples["question"]]

inputs = tokenizer(

questions,

examples["context"],

max_length=384,

truncation="only_second",

return_offsets_mapping=True,

padding="max_length",

)

offset_mapping = inputs.pop("offset_mapping")

answers = examples["answers"]

start_positions = []

end_positions = []

for i, offset in enumerate(offset_mapping):

answer = answers[i]

start_char = answer["answer_start"][0]

end_char = answer["answer_start"][0] + len(answer["text"][0])

sequence_ids = inputs.sequence_ids(i)

# Find the start and end of the context

idx = 0

while sequence_ids[idx] != 1:

idx += 1

context_start = idx

while sequence_ids[idx] == 1:

idx += 1

context_end = idx - 1

# If the answer is not fully inside the context, label it (0, 0)

if offset[context_start][0] > end_char or offset[context_end][1] < start_char:

start_positions.append(0)

end_positions.append(0)

else:

# Otherwise it's the start and end token positions

idx = context_start

while idx <= context_end and offset[idx][0] <= start_char:

idx += 1

start_positions.append(idx - 1)

idx = context_end

while idx >= context_start and offset[idx][1] >= end_char:

idx -= 1

end_positions.append(idx + 1)

inputs["start_positions"] = start_positions

inputs["end_positions"] = end_positions

return inputs

df['answers'] = df['answers'].apply(json.loads)

# Convert lists back to NumPy arrays within dictionaries

df['answers'] = df['answers'].apply(lambda x: {k: np.array(v) for k, v in x.items()})

dataset = Dataset.from_pandas(df)

dataset = dataset.train_test_split(test_size=0.2)

dataset = dataset.map(preprocess_function, batched=True, remove_columns=dataset["train"].column_names)

return dataset["train"].to_pandas()

Materialize this transformation with the following code:

from ads.feature_store.transformation import TransformationMode

squad_transformation = feature_store.create_transformation(

transformation_mode=TransformationMode.PANDAS,

source_code_func=squad_embedding_transformation,

display_name="squad_embedding_transformation",

)

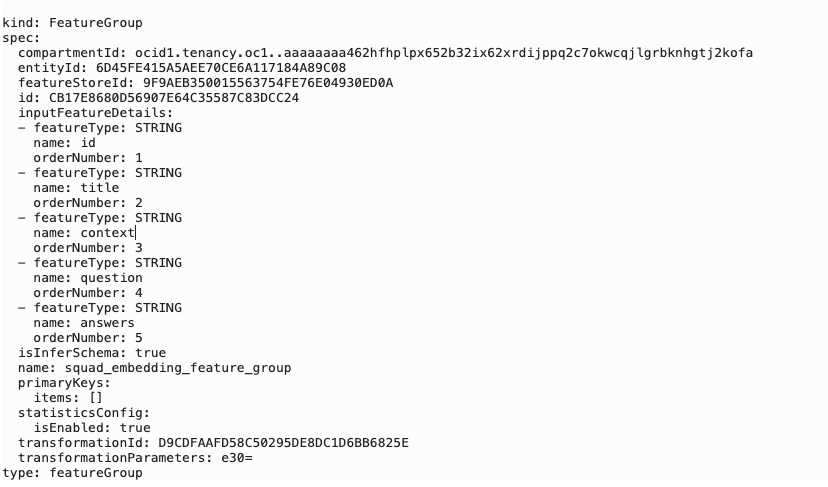

squad_transformationFeature groups

Now, create a feature group to store the embeddings created from the SQuAD dataset.

import json

squad_df = squad.to_pandas()

# Convert NumPy arrays within dictionaries to lists

squad_df['answers'] = squad_df['answers'].apply(lambda x: {k: v.tolist() for k, v in x.items()})

# Convert the 'data' column back to dictionaries of NumPy ndarrays

squad_df['answers'] = squad_df['answers'].apply(json.dumps)

squad_embedding_feature_group = (

FeatureGroup()

.with_feature_store_id(feature_store.id)

.with_primary_keys([])

.with_name("squad_embedding_feature_group")

.with_entity_id(entity.id)

.with_compartment_id(compartment_id)

.with_schema_details_from_dataframe(squad_df)

.with_transformation_id(squad_transformation.id)

)

Materialize the SQuAD feature group with the following command:

squad_embedding_feature_group.create()

Show the feature group with the following command:

squad_embedding_feature_group.show()

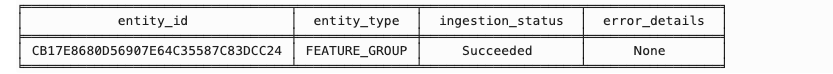

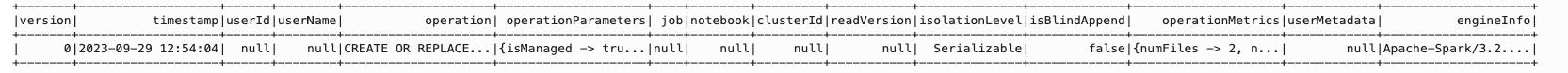

View the history of the feature group versions

Using the .history().show() function, you can view the history and versions of the feature group that has evolved over a period of time.

squad_embedding_feature_group.history().show()

Transforming the feature groups to Pandas dataframes

You can also transform the feature groups to convert to Pandas dataframes.

tranining_df = squad_embedding_feature_group.select().read().toPandas()

tranining_df

You can now use this dataframe to train a DistilBERT model.

Train a DistilBERT model

You’re now ready to start training your model! Load DistilBERT with AutoModel for question answering. Use create_optimizer():

from transformers import create_optimizer

batch_size = 16

num_epochs = 2

total_train_steps = (len(tranining_df) // batch_size) * num_epochs

optimizer, schedule = create_optimizer(

init_lr=2e-5,

num_warmup_steps=0,

num_train_steps=total_train_steps,

)

The TFAutoModelForQuestionAnswering class in the Hugging Face transformers library is designed for TensorFlow users and provides a simple way to automatically load a pretrained model suitable for question-answering (QA) tasks.

from transformers import TFAutoModelForQuestionAnswering

model = TFAutoModelForQuestionAnswering.from_pretrained("distilbert-base-uncased")

In the Hugging Face’s transformers library, the DefaultDataCollator serves a key role in the data preparation pipeline for model training. It converts a list of samples into a format suitable for model training, especially when training using mini-batches.

from transformers import DefaultDataCollator

data_collator = DefaultDataCollator(return_tensors="tf")

from datasets import Dataset

tf_train_set = model.prepare_tf_dataset(

Dataset.from_pandas(tranining_df),

shuffle=True,

batch_size=16,

collate_fn=data_collator,

)

Import the Tensorflow libraries and its dependencies. Use the .compile() command with the optimizer to compile the model.

import tensorflow as tf model.compile(optimizer=optimizer) model.fit(x=tf_train_set, epochs=3)

Use the .fit() function to train the Tensorflow model:

model.fit(x=tf_train_set, epochs=3)

When this model is trained and evaluated, we can deploy the model through Oracle Cloud Infrastructure (OCI) Data Science model deployment.

Conclusion

In this blog, we saw how text embeddings can be stored efficiently and reused to train an ML model. The synergy between embeddings, especially those from LLMs, and feature stores is undeniable. As we continue to push the boundaries of what’s possible in machine learning, ensuring that our tools and infrastructure evolve in tandem is critical. The age of embeddings in feature stores, powered by the might of LLMs, is just beginning, and it promises a future of unparalleled innovation and discovery. For a detailed walkthrough on integrating a feature store with your workflows, refer to our Demo Jupyter Notebooks (Link -> Jupyter Notebooks ).

References

To get started on the feature store, you can try the sample notebooks or watch the demos. Try Oracle Cloud Free Trial for yourself! A 30-day trial with US$300 in free credits gives you access to OCI Data Science service. For more information, see the following resources:

-

Full sample including all files in OCI Data Science sample repository on GitHub.

-

Visit our OCI Data Science service documentation.

-

Configure your OCI tenancy with these setup instructions and start using OCI Data Science.

-

Star and clone our new GitHub repo! We included notebook tutorials and code samples.

-

Try one of our LiveLabs. Search for “data science.”