Are you looking for a fast and easy way to deploy your LangChain applications built with large language models (LLMs)? Oracle Accelerated Data Science (ADS) v2.9.1 adds a new feature to deploy a serializable LangChain application as a REST API on Oracle Cloud Infrastructure (OCI) Data Science Model Deployment. With the ADS software developer kit (SDK), you can deploy your LangChain application as an OCI Data Science Model Deployment endpoint in a few lines of code. This blog post guides you through the process with step-by-step instructions.

LangChain

LLMs are a groundbreaking technology that encapsulates vast human knowledge and reasoning ability in a single massive model. However, using language models today still has many issues. The entire ecosystem is still evolving, so developers lack adequate tools for deploying language models.

LangChain is a framework for developing applications based on language models. Prompt engineering, logging, callbacks, persistent memory, and efficient connections to multiple data sources come standard in LangChain. Overall, LangChain serves as an intermediary layer that links user-facing programs with the LLM. By combining LLMs with other computing resources and internal knowledge repositories, LangChain helps move them toward practical applications built on the data you already have.

OCI model deployment

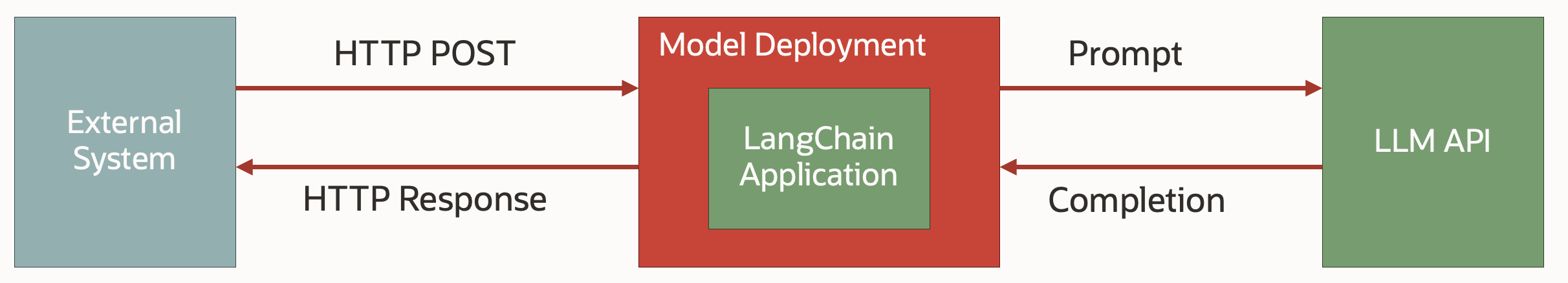

OCI Data Science is a fully managed platform for data scientists and machine learning (ML) engineers to train, manage, deploy, and monitor ML models in a scalable, secure, enterprise environment. You can train and deploy any model, including LLMs, in the Data Science service. Model deployments are a managed resource in OCI Data Science that you can use to deploy ML models as HTTP endpoints in OCI. Deploying ML models as web applications or HTTP API endpoints that serve predictions in real time is the most common way to put models into production. HTTP endpoints are flexible and can serve requests for model predictions.

ADS SDK

The OCI Data Science service team maintains the ADS SDK. It speeds up common data science activities by providing tools that automate and simplify common data science tasks, providing a data scientist-friendly Pythonic interface to OCI services, most notably Data Science, Data Flow, Object Storage, and the Autonomous Database. ADS gives you an interface to manage the lifecycle of ML models, from data acquisition to model training, evaluation, registration, and deployment.

Create your LangChain application

As an example, the following code builds a simple LangChain application that takes a subject as input and generates a joke about it. Essentially, it puts the user input into a prompt template and sends the result to the LLM. Here, we use Cohere as the LLM, but you can replace it with any other LLM that LangChain supports.
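Before the LangChain code itself, the data flow can be sketched in plain Python. This is only an illustration of "fill the template, then call the model": `fake_llm` and `run_chain` are hypothetical names, and the stand-in model simply echoes the prompt rather than calling Cohere.

```python
# Plain-Python stand-in for the prompt -> LLM flow (not LangChain itself).

TEMPLATE = "Tell me a joke about {subject}"


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a hosted LLM; it only echoes the prompt."""
    return f"[model reply to: {prompt}]"


def run_chain(inputs: dict) -> str:
    # Step 1: fill the template with the user's input.
    prompt = TEMPLATE.format(**inputs)
    # Step 2: send the finished prompt to the model and return its text.
    return fake_llm(prompt)


result = run_chain({"subject": "animals"})
print(result)
```

The LangChain version that follows has the same shape, with `PromptTemplate` doing the formatting and `Cohere` doing the model call.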

```python
import os

from langchain.llms import Cohere
from langchain.chains import LLMChain
from langchain.prompts import PromptTemplate

# Placeholder value: replace with your actual Cohere API key.
os.environ["COHERE_API_KEY"] = "COHERE_API_KEY"

llm = Cohere()
# The {subject} placeholder is filled with the user's input at run time.
prompt = PromptTemplate.from_template("Tell me a joke about {subject}")
llm_chain = LLMChain(prompt=prompt, llm=llm, verbose=True)
```

Deploy the LangChain application using the Oracle ADS SDK

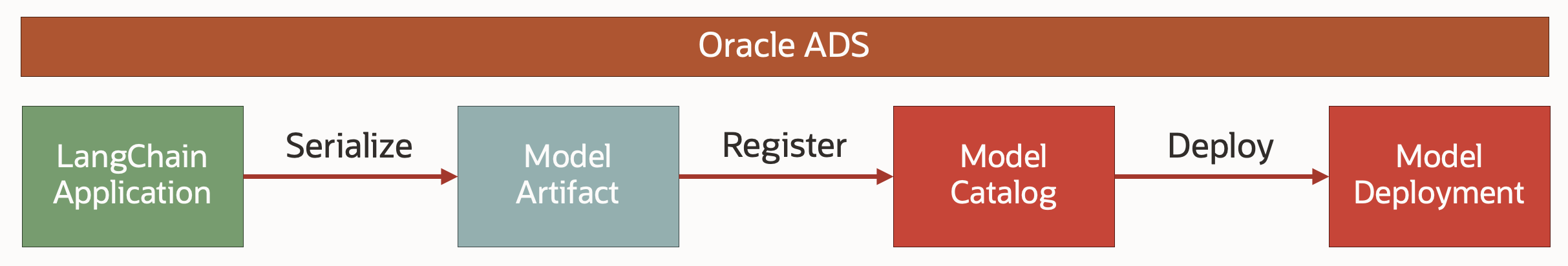

Now you have a LangChain application, llm_chain, and you can easily deploy it as an OCI model deployment using the Oracle ADS SDK. The ADS SDK simplifies the workflow of deploying your application as a REST API endpoint by taking care of serialization, registration, and deployment.

```python
from ads.llm.deploy import ChainDeployment

ChainDeployment(chain=llm_chain).prepare_save_deploy(
    inference_conda_env="CUSTOM_CONDA_URI",
    deployment_display_name="LangChain Deployment",
    environment_variables={"COHERE_API_KEY": "cohere_api_key"},
)
```

Here, replace CUSTOM_CONDA_URI with the URI of a conda environment that has ADS v2.9.1 or later installed. To learn how to customize and publish a conda environment, see Publishing a Conda Environment to an Object Storage Bucket.

Behind the scenes, ADS SDK automatically handles the following processes:

- Serialization of the LangChain application: The configuration of your application is saved into a YAML file.
- Preparation of the model artifact: This includes some autogenerated Python code to load the LangChain application from the YAML file and run it with the user’s input.
- Saving the model artifacts to the model catalog: This process uploads and registers your application as a model in the model catalog.
- Creating a model deployment: This deploys the scalable infrastructure to serve your application as an HTTP endpoint.
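The first two steps above can be pictured with a small, self-contained sketch. It is not the code that ADS generates: JSON stands in for YAML so that only the standard library is needed, the file name `chain.json` and the `prompt_template` key are hypothetical, and the "chain" only formats a prompt instead of calling an LLM. The `{"prediction": ...}` response shape is likewise an assumption made for illustration.

```python
import json
import os
import tempfile

# Illustrative only: ADS writes YAML and generates its own loader code.
# JSON is used here so the sketch needs nothing beyond the standard library.


def serialize_chain(config: dict, artifact_dir: str) -> str:
    """Step 1: save the application's configuration to a file."""
    path = os.path.join(artifact_dir, "chain.json")  # hypothetical file name
    with open(path, "w") as f:
        json.dump(config, f)
    return path


def load_chain(path: str):
    """Step 2: rebuild a callable application from the saved configuration."""
    with open(path) as f:
        config = json.load(f)
    template = config["prompt_template"]

    def chain(inputs: dict) -> str:
        # A real chain would send this prompt to the configured LLM.
        return template.format(**inputs)

    return chain


def predict(data: dict, chain) -> dict:
    """Entry point a serving layer would call with the request body."""
    return {"prediction": chain(data)}


artifact_dir = tempfile.mkdtemp()
saved = serialize_chain(
    {"prompt_template": "Tell me a joke about {subject}"}, artifact_dir
)
response = predict({"subject": "animals"}, load_chain(saved))
print(response)
```

Keeping only configuration in the artifact, rather than a pickled object, is what makes the application "serializable" in the sense used here: the serving side can rebuild the chain from a text file.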

Note that the LLM doesn't run as part of the model deployment in this example. Instead, the LangChain application invokes it as an independent API. Such applications can be deployed on cost-effective CPU shapes. When the deployed model is active, you can integrate it with external systems using HTTP requests and responses.

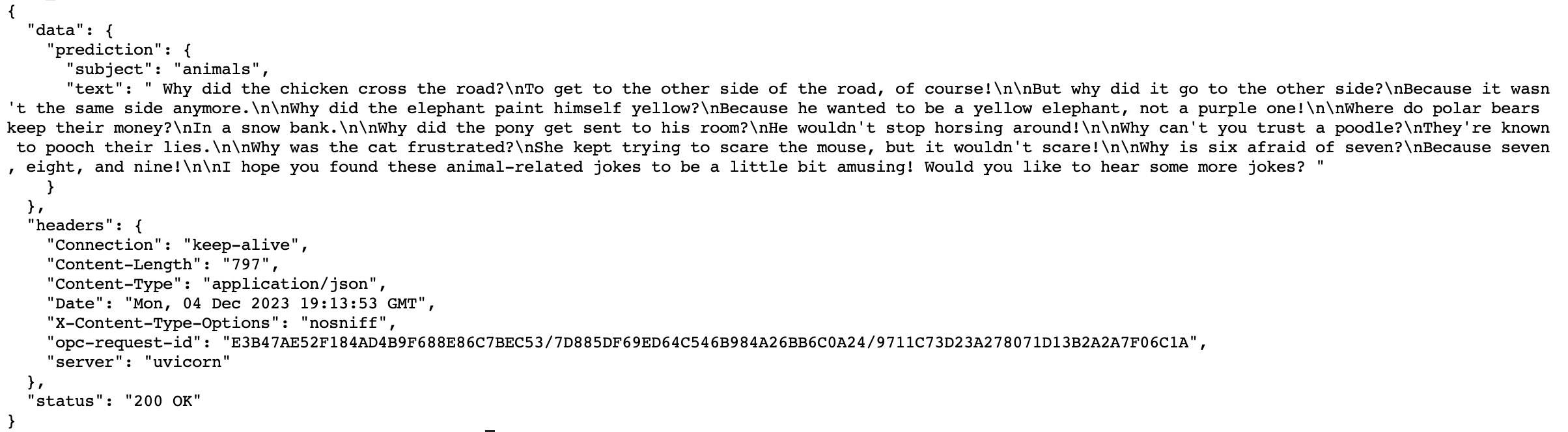

For example, you can use the OCI CLI to invoke it. Replace LANGCHAIN_APPLICATION_MODEL_DEPLOYMENT_URI with the actual model deployment URL, which you can find in the output from the previous step.

```bash
oci raw-request --http-method POST --target-uri LANGCHAIN_APPLICATION_MODEL_DEPLOYMENT_URI/predict --request-body '{"subject": "animals"}' --auth resource_principal
```
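If you'd rather call the endpoint from Python, the same request can be assembled with the standard library. The sketch below only builds the request and doesn't send it: a real call must also be signed with OCI credentials, which the CLI's `--auth resource_principal` option handles for you and which is left out here. The function name `build_predict_request` and the `example.invalid` URI are placeholders.

```python
import json
import urllib.request


def build_predict_request(deployment_uri: str, subject: str) -> urllib.request.Request:
    """Build (but do not send) the POST request for the /predict route."""
    body = json.dumps({"subject": subject}).encode("utf-8")
    return urllib.request.Request(
        url=deployment_uri.rstrip("/") + "/predict",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder URI: substitute the value printed by the deployment step.
request = build_predict_request("https://example.invalid/deployment", "animals")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

The request body uses the same key, `subject`, as the placeholder in the prompt template, so the deployed chain receives exactly the input it expects.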

For more specific requirements and steps, refer to the ADS user guide and the ADS notebook example, Deploy LangChain Application as OCI Data Science Model Deployment.

In the example above, the Cohere API serves as the LLM. However, you can also deploy open source LLMs with OCI Data Science Model Deployment and use them with the LangChain application. Please refer to the following blogs for more details on how to deploy LLMs:

Try for yourself

Try the Oracle Cloud Free Trial! A 30-day trial with US$300 in free credits gives you access to the OCI Data Science service.

Ready to learn more about the Oracle Cloud Infrastructure Data Science service?

- Configure your OCI tenancy with these setup instructions and start using OCI Data Science.
- Star and clone our new GitHub repo! We’ve included notebook tutorials and code samples.
- Visit our service documentation
- Subscribe to our Twitter feed
- Visit the Oracle Accelerated Data Science Python SDK documentation
- Try one of our LiveLabs. Search for “data science”.