[Update from 4/18] OCI Data Science released AI Quick Actions, a no-code solution to fine-tune, deploy, and evaluate popular Large Language Models. You can open a notebook session to try it out. This blog post will guide you on how to work with LLMs via code, for optimal customization and flexibility.

In the rapidly evolving landscape of large language models (LLMs), where groundbreaking models emerge frequently, Meta has unveiled its latest offering, Llama 2, a collection of pretrained and finetuned generative text models ranging in scale from 7–70 billion parameters. The pretrained models come with significant improvements over the Llama 1 models, including being trained on 40% more tokens, having a much longer context length (4,000 tokens), and more. Moreover, the finetuned models in Llama 2-Chat have been optimized for dialogue applications using reinforcement learning from human feedback (RLHF), making them perform better than most open models. With its advanced language modeling, you can use Llama 2 for various use cases, from generating high-quality content for websites and social media platforms, to personalized content for marketing campaigns, to improving customer support by creating instant responses and routing to appropriate agents, and much more.

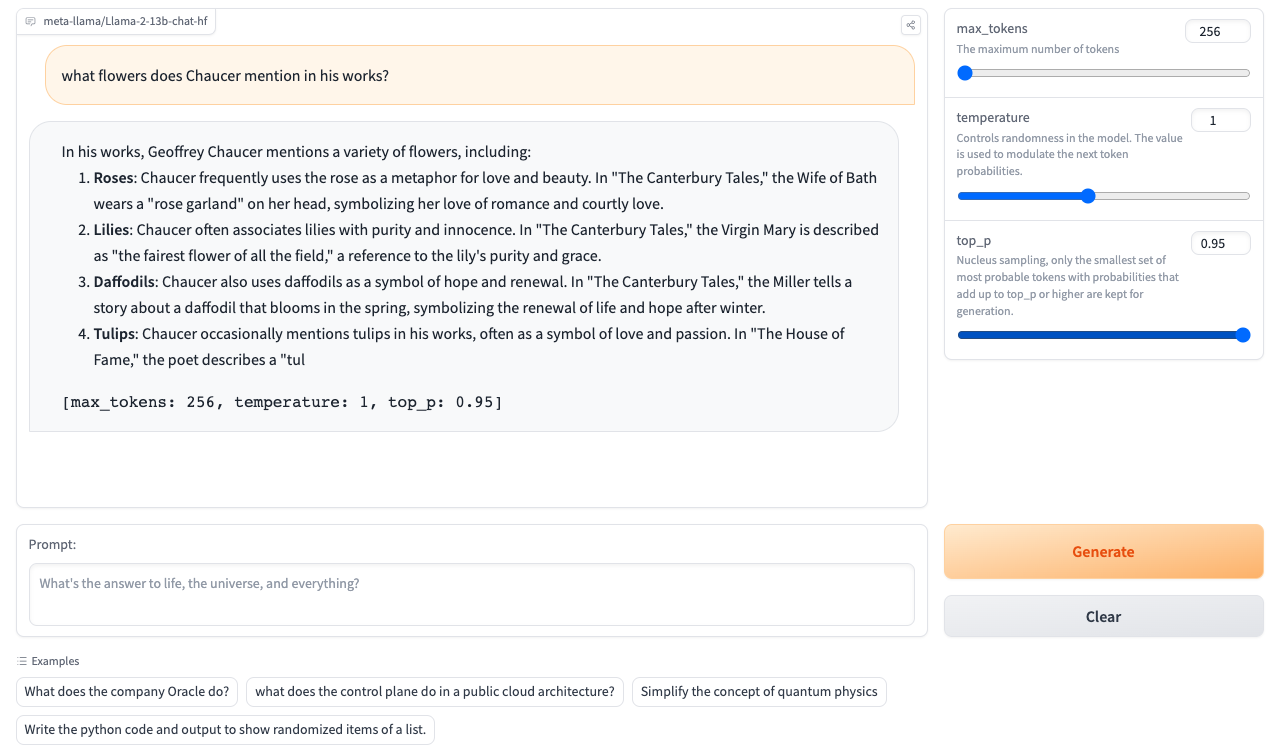

In this blog post, we deploy a Llama 2 model in Oracle Cloud Infrastructure (OCI) Data Science Service and then take it for a test drive with a simple Gradio UI chatbot client application. The Llama 2-Chat model deploys in a custom container in the OCI Data Science service using the model deployment feature for online inferencing. The container is powered by a LLM server, equipped with optimized CUDA kernels, continuous and dynamic batching, optimized transformers, and more. We demonstrate how to use the following features:

-

Text generation inference (TGI): A purpose-built solution for deploying and serving LLMs from Hugging Face. We show how to extend it to provide mappings between the interface requirements of the model deployment resource.

-

vLLM: An open source, high-throughput, and memory-efficient inference and serving engine for LLMs from UC Berkeley.

While this article focuses on a specific model in the Llama 2 family, you can apply the same methodology to other models. See our previous example on how to deploy GPT-2.

Sample code

For the complete walkthrough with the code used in this example, see the Oracle GitHub samples repository.

Prerequisites

Using Llama 2 model requires accepting the user agreement on Meta’s website. Downloading the model from HuggingFace requires an account and agreeing to the service terms. Ensure that the license permits usage for your intended use.

Recommended hardware

For the deployment of the models, we use the following OCI shape based on Nvidia A10 GPU. Both models in our example, the 7b and 13b parameter are deployed using the same shape type. However, the 13b parameters model utilize the quantization technique to fit the model into the GPU memory. OCI supports various GPUs for you to pick from.

| Model | Shape | Quantization |

|---|---|---|

| Llama 7b | VM.GPU.A10.2 (24-GB RAM per GPU, 2xA10) | None |

| Llama 13b | VM.GPU.A10.2 | bitsandbytes (8-bit) |

Deployment approach

You can use the following methods to deploy an LLM with OCI Data Science:

-

Online method: Download the LLM directly from the repository that hosts into the model deployment. This method minimizes copying of data, which is important for large models. To access the model online, we use a token file as the model artifact to authenticate against the repository host (HuggingFace) and download the model during the model deployment creation. However, this method lacks governance of the model itself, which might be important in some scenarios, like production environments. It also doesn’t cover scenarios where you must finetune the model. For those purposes, use the offline method.

-

Offline method: Download the LLM model from the host repository and save it into the Data Science model catalog, then deploy it using the model deployment feature, directly from the model catalog. Using the model catalog also helps with controlling and governing the model and saving a finetuned version of the LLM.

In both methods, we use either text generation inference (TGI) or virtual LLM as the LLM inference framework, and deploy it through the model deployment’s custom containers (Bring Your Own Container) with the LLM model.

For the larger Llama-2 13b model, we take advantage of the quantization technique supported by bitsandbytes, which reduces the GPU memory required for the inference, by lowering the precision to enable use to take advance of the most cost optimized A10 VM GPU based shape.

Inference container

Among the several inference options, we have tried the following two:

-

Hugging Face, Text Generation Inference (TGI), HFOIL 1.0 License: Before 1.0 the package was Apache 2 licensed.

-

vLLM from UC Berkeley with an Apache 2 license: vLLM claims 3.5-times the throughput of TGI on an A10.

We initially used the TGI from Hugging Face, this container image is straightforward to build. The OCI Data Science model deployment has certain routing requirements for /predict and /health, which we must align with the TGI containers. That mapping can be done when creating the deployment (see in the sample).

Creating the model deployment

The last step is to deploy the model and the inference container by creating a model deployment. When the model is deployed, it has a predict URL that you can call by HTTP to invoke the model.

Follow the instructions from our README to create the model deployment using A10 GPUs.

For the online method, we must use a custom virtual cloud network (VCN) with access to the public internet. The GitHub samples repository has a complete walkthrough, including samples on how to build, push and easily deploy with the Oracle Accelerated Data Science Library. Custom networking is currently in limited availability in model deployment.

Testing the model

When the model is deployed, we want to test and use it. We use a Gradio Chat app, that uses the example chatbot app from Hugging Face. After configuring the application to use the predict URL, it’s ready to take in a prompt with the following parameters:

-

max_tokens: The maximum number of tokens to generate (the size of the output sequence, not including the tokens in the prompt)

-

temperature: Controls randomness in the model. The value is used to modulate the next token probabilities. (0.2–2.0)

-

top_p: Nucleus sampling, only the smallest set of most probable tokens with probabilities that add up to top_p or higher are kept for generation. (0–1.0)

The Gradio app uses the config.yaml file to identify for any model the properties of each model, such as the endpoint to use. The format of the config.yaml uses the following code block:

models:

meta-llama/Llama-2-7b-chat-hf:

endpoint: https://modeldeployment.us-ashburn-1.oci.customer-oci.com/ocid1.datasciencemodeldeployment.<placeholder-ocid>/predict

template: prompt-templates/llama.txtTo run the app specifying the model, use the following command:

MODEL=meta-llama/Llama-2-7b-chat-hf gradio app.pyThis command invokes the app and tells it to use the 7b model. Then, the endpoint is derived with the template for the model.

Conclusion

Deploying Llama-2 on OCI Data Science Service offers a robust, scalable, and secure method to harness the power of open source LLMs. With the assistance of an intuitive inference framework, you can seamlessly deploy any LLM into your applications. Plus, it’s all backed by the high-performance capabilities of scalable GPUs on OCI.

In future posts, we demonstrate how to deploy other LLMs and finetune models before deployment. Stay tuned!

Try Oracle Cloud Free Trial! A 30-day trial with US$300 in free credits gives you access to Oracle Cloud Infrastructure Data Science service. For more information, see the following resources:

-

Full sample, including all files in OCI Data Science sample repository on GitHub.

-

Visit our service documentation.

-

Try one of our LiveLabs. Search for “data science.”

-

Got questions? Reach out to us at ask-oci-data-science_grp@oracle.com