When you have a conversation with someone, the person typically remembers the dialogue so that you can use pronouns to refer to entities or make use of context to understand certain terms. For example, I may ask “When did you buy your house?” and later ask “How many rooms does it have?” or infer that “hike” refers to interest rates instead of a rigorous outdoor activity. This conversation memory facilitates smoother and more cohesive human interactions.

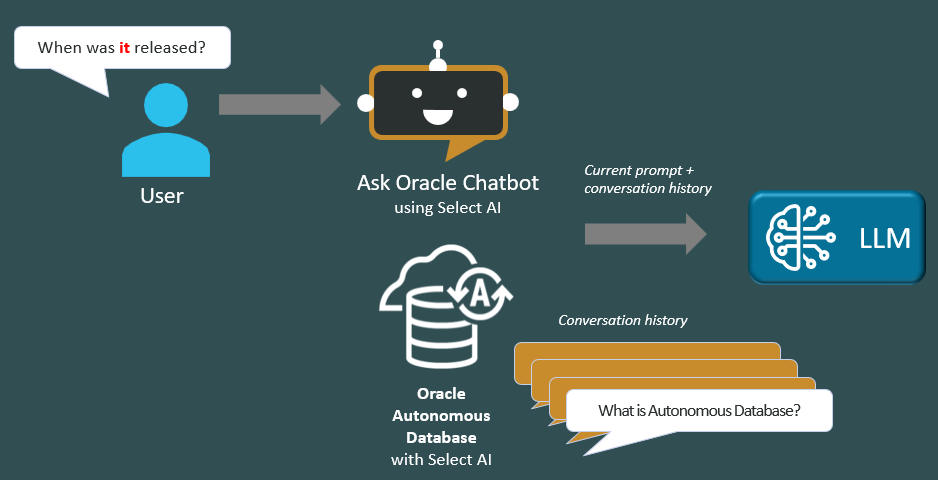

Similarly, AI chatbots also benefit from “memory” of their past interactions, forming a conversation—the interactive dialogue between the user and the AI system supporting that chatbot. In the case of chatbot conversations using Select AI, the sequence of user-provided natural language prompts is stored and managed to support short-term and long-term memory for LLM interactions.

Maintaining different conversations and tracking prompt histories within each conversation is an essential feature for AI chatbot services, as it improves usability and provides contextually relevant generated results. Now, we’re enhancing Select AI with a conversation management API, providing greater functionality and benefits with customizable, long-term conversations using specific procedures or functions and conversation IDs – a significant extension to the original short-term, session-based conversations.

By maintaining multiple conversations and allowing users to switch between them, the new API helps Select AI to generate more relevant, context-aware responses based on prior interactions using the LLM of their choice. This enhances LLM response quality and boosts user workflow efficiency in Select AI. For example, you don’t need to provide context repeatedly and explicitly in your prompts, which reduces prompt writing time. By being able to switch between conversations, you can work on multiple topics without losing or confusing context.

Short and long-term conversations

When you use conversations, Select AI retrieves the available prompt history and sends it to the LLM to generate a response for the current prompt. Each response is then stored in a table for future use. As noted above, Select AI has supported session-based short-term conversations and now also supports customizable conversations.

Session-based short-term conversations: This first-generation capability includes up to 10 prompts from the current database session. You enable session-based conversations, which are associated with the current AI profile, by setting the conversation attribute to ‘true’ in that AI profile. Unlike the customizable conversation capability, session-based conversations store prompts only for the duration of the session – kept in a temporary table and automatically dropped when the session ends. This type of conversation cannot be reused, and users cannot switch between conversations.
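As a minimal sketch of enabling a session-based conversation, the block below sets the conversation attribute on an existing AI profile and then activates that profile for the session. It assumes a profile named GENAI already exists (the name is borrowed from Example 5) and relies on the DBMS_CLOUD_AI set_attribute and set_profile procedures, which are not otherwise shown in this article.

```sql
-- Assumption: an AI profile named GENAI already exists in this schema.
BEGIN
  -- Turn on session-based conversations for the profile
  DBMS_CLOUD_AI.set_attribute(
    profile_name    => 'GENAI',
    attribute_name  => 'conversation',
    attribute_value => 'true');
END;
/

-- Activate the profile for the current session
EXEC DBMS_CLOUD_AI.set_profile('GENAI');
```

Prompts issued with "select ai <action> <prompt>" after this point would then share context until the session ends.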

Customizable conversations: This next-generation capability supports creating and using multiple named conversations. Customizable conversations let you use Select AI with different topics without mixing context, improving both conversation flexibility and efficiency. You can create, set, delete, and update conversations through the DBMS_CLOUD_AI conversation procedures and functions. See more details on this capability below.

Customizable conversations details

The new customizable conversation capability works with various Select AI actions. It is independent of any specific AI profile and works across multiple AI providers.

When a conversation is active, Select AI retrieves previous communication histories (i.e., prompts and responses) from that conversation and sends them to the LLM to assist in response generation for the current prompt. The generated response is then stored in a persistent table in the local schema for future use.

The new API procedures and functions introduced for this capability include:

- create_conversation: creates a new conversation

- set_conversation_id: activates a conversation within the session, given its conversation_id

- get_conversation_id: gets the conversation id, if any, set for the current session

- clear_conversation_id: clears any conversation id set in the session to disable conversation tracking and use

- drop_conversation: removes the conversation and all associated content

- update_conversation: updates the conversation attributes

- delete_conversation_prompt: deletes a specific conversation prompt in the history

The overloaded function/procedure for this capability is:

- generate: accepts a new argument “params”, which can include a conversation id that associates the prompt and its result with the given conversation.
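To show how these calls fit together, here is a hedged sketch of a full conversation lifecycle in one PL/SQL block. The variable name is illustrative, and the conversation_id parameter name on drop_conversation is an assumption that mirrors the set_conversation_id call shown later in this post.

```sql
SET SERVEROUTPUT ON

DECLARE
  l_conversation_id VARCHAR2(40);  -- illustrative variable name
BEGIN
  -- Create a conversation with default attributes and keep its id
  l_conversation_id := DBMS_CLOUD_AI.create_conversation;

  -- Make it the active conversation for this session
  DBMS_CLOUD_AI.set_conversation_id(conversation_id => l_conversation_id);
  DBMS_OUTPUT.put_line('Active conversation: ' || DBMS_CLOUD_AI.get_conversation_id);

  -- Stop tracking prompts in this session
  DBMS_CLOUD_AI.clear_conversation_id;

  -- Remove the conversation and all associated content
  -- (parameter name assumed to match set_conversation_id)
  DBMS_CLOUD_AI.drop_conversation(conversation_id => l_conversation_id);
END;
/
```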

Using the new Select AI Conversations API

You can use the new Select AI conversation API in two ways:

- Use ‘SELECT AI + <ACTION> + <PROMPT>’ when the conversation id has been set in the session by invoking set_conversation_id.

- Call DBMS_CLOUD_AI.generate with conversation_id provided in the params argument.

Views to access conversation content are:

- user_cloud_ai_conversations

- user_cloud_ai_conversation_prompts
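A quick way to inspect stored content is to query these views directly. The sketch below selects all columns to avoid depending on exact column names; the conversation_id filter column on the prompts view is an assumption, and the id value is the sample id used in the examples in this post.

```sql
-- All conversations owned by the current user
SELECT * FROM user_cloud_ai_conversations;

-- Prompts and responses recorded for one conversation
-- (assumes the view exposes a conversation_id column)
SELECT *
FROM   user_cloud_ai_conversation_prompts
WHERE  conversation_id = '3A3C09EA-7DAB-A9E7-E063-9C6D46647D64';
```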

The conversation management API enables several special capabilities. Users can provide a title for each conversation. If a title is not provided, Select AI will have the LLM generate one when the conversation is used with the first prompt.

You can provide a description for the conversation topic. If not provided, Select AI will have the LLM generate one when the conversation is first used and update it again on the 5th use so that it includes more accurate and relevant information.

You can have Select AI automatically drop conversations. Using the retention_days attribute, you can specify the number of days the conversation will be stored, with 7 days being the default. A value of 0 indicates that the conversation should not be removed unless it is manually deleted by drop_conversation.

You can also customize the maximum conversation length. Using the conversation_length attribute, only the specified number of latest prompts and results in the conversation will be sent to the LLM with the current prompt. The default value is 10, with a maximum allowed value of 999.
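As an illustration of adjusting these two attributes on an existing conversation, the following sketch calls update_conversation. The parameter names conversation_id and attributes are assumptions that mirror set_conversation_id and create_conversation as used in this post; the id is the sample id from the examples.

```sql
BEGIN
  DBMS_CLOUD_AI.update_conversation(
    conversation_id => '3A3C09EA-7DAB-A9E7-E063-9C6D46647D64',
    -- 0 keeps the conversation until it is dropped manually;
    -- 20 sends the latest 20 prompts and results to the LLM
    attributes      => '{"retention_days":0,
                         "conversation_length":20}');
END;
/
```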

Let’s look at some examples of how you can use the new API.

Example 1: Enable a conversation in a single step

You can create the conversation and set its id in a single step using the procedure create_conversation. This allocates a new id that can be used implicitly when running subsequent “select ai <action> <prompt>” statements on the SQL command line.

EXEC DBMS_CLOUD_AI.create_conversation;

Example 2: Create a new conversation id

Use the corresponding create_conversation function to create a conversation id that you can use later—for example, to explicitly set the conversation id using set_conversation_id for use with the SQL command line or to use it in DBMS_CLOUD_AI.generate.

-- Create conversation with default attributes

SQL> SELECT DBMS_CLOUD_AI.create_conversation AS conversation_id;

CONVERSATION_ID

--------------------------------------------------------------------------------

3A3C09EA-7DAC-A9E7-E063-9C6D46647D64

-- Create conversation with custom attributes

SQL> SELECT DBMS_CLOUD_AI.create_conversation(

attributes => '{"title":"Conversation 1",

"description":"this is a description",

"retention_days":5,

"conversation_length":5}')

AS conversation_id FROM dual;

CONVERSATION_ID

--------------------------------------------------------------------------------

3A3C09EA-7DAB-A9E7-E063-9C6D46647D64

Example 3: Set and use the conversation id at the SQL command line

Specify a conversation id from a previously created conversation. If there are prompts already in this conversation, new prompts will be added in sequence. Call set_conversation_id so that subsequent Select AI statements on the SQL command line use this conversation.

EXEC DBMS_CLOUD_AI.set_conversation_id(conversation_id => '3A3C09EA-7DAB-A9E7-E063-9C6D46647D64');

Once you create the conversation and set its id along with your AI profile, you can interact with your LLM within the context of the specified conversation. In the following example, which uses the ‘chat’ action, the user asks, “What is the difference in weather between Seattle and San Francisco?” and then follows that up with “Explain the difference again in one paragraph only.” The LLM uses the context of the previous prompt to know we’re talking about San Francisco and Seattle.

SELECT AI CHAT What is the difference in weather between Seattle and San Francisco;

RESPONSE

--------------------------------------------------------------------------------

Seattle and San Francisco are both located on the West Coast of the United States, but they have distinct weather patterns due to their unique geography and climate conditions. Here are the main differences:

1. **Rainfall**: Seattle is known for its rainy reputation, with an average annual rainfall of around 37 inches (94 cm). San Francisco, on the other hand, receives significantly less rainfall, with an average of around 20 inches (51 cm) per year.

2. **Cloud Cover**: Seattle is often cloudy, with an average of 226 cloudy days per year. San Francisco is also cloudy, but to a lesser extent, with an average of 165 cloudy days per year.

...

SELECT AI CHAT Explain the difference again in one paragraph only;

RESPONSE

--------------------------------------------------------------------------------

Seattle and San Francisco have different weather patterns despite both experiencing a mild oceanic climate. San Francisco tends to be slightly warmer, with average temperatures ranging from 45°F to 67°F, and receives less rainfall, around 20 inches per year, mostly during winter. In contrast, Seattle is cooler, with temperatures ranging from 38°F to 64°F, and rainier, with around 37 inches of rainfall per year, distributed throughout the year. San Francisco is also known for its fog, particularly during summer, and receives more sunshine, around 160 sunny days per year, although it's often filtered through the fog. Overall, San Francisco's weather is warmer and sunnier, with more pronounced seasonal variations, while Seattle's is cooler and rainier, with more consistent temperatures throughout the year.

Example 4: Get and clear the conversation id

You can get the currently set conversation id to know which conversation is active in the current session. You can also clear any conversation so that subsequent prompts are not associated with any conversation – until a new conversation id is set. As shown in the output below, the second get_conversation_id returns no conversation id since it was previously cleared.

-- Get the conversation id that is set in the session

SQL> SELECT DBMS_CLOUD_AI.get_conversation_id;

GET_CONVERSATION_ID

--------------------------------------

3A88BFF0-1D7E-B3B8-E063-9C6D46640ECD

-- Clear the conversation id

SQL> EXEC DBMS_CLOUD_AI.clear_conversation_id;

-- The conversation id is removed from the session and the NULL result is returned

SQL> SELECT DBMS_CLOUD_AI.get_conversation_id;

GET_CONVERSATION_ID

--------------------------------------

Example 5: Use a conversation id in DBMS_CLOUD_AI.generate

You can also specify the conversation id in the params argument to generate. Each invocation of generate can potentially use a different conversation id, if desired. In this example the prompt is associated with the specified conversation id.

SQL> SELECT DBMS_CLOUD_AI.generate(

prompt => 'what is Oracle Autonomous Database? Describe in one paragraph',

profile_name => 'GENAI',

action => 'CHAT',

params => '{"conversation_id":"3A3C09EA-7DAB-A9E7-E063-9C6D46647D64"}') AS RESPONSE;

RESPONSE

--------------------------------------------------------------------------------

Oracle Autonomous Database is a self-driving, self-securing, and self-repairing

cloud database service powered by machine learning. It automates routine databas

e tasks like patching, tuning, upgrades, and backups, freeing up database admini

strators to focus on higher-value activities. By leveraging machine learning, it

optimizes performance, enhances security by automatically applying security pat

ches and detecting threats, and minimizes downtime through proactive failure pre

diction and automatic recovery. This ultimately results in reduced operational c

osts, improved security, and enhanced performance compared to traditional databa

se management approaches.
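Because each call to generate can carry its own conversation id, a single session can keep two topics apart without ever calling set_conversation_id. The following is a rough sketch of that pattern, assuming the GENAI profile from the example above; the variable names and the second and third prompts are illustrative only.

```sql
SET SERVEROUTPUT ON

DECLARE
  l_db_topic   VARCHAR2(40);  -- illustrative: conversation about databases
  l_city_topic VARCHAR2(40);  -- illustrative: conversation about weather
  l_response   CLOB;
BEGIN
  l_db_topic   := DBMS_CLOUD_AI.create_conversation;
  l_city_topic := DBMS_CLOUD_AI.create_conversation;

  -- First prompt goes into the database conversation
  l_response := DBMS_CLOUD_AI.generate(
                  prompt       => 'what is Oracle Autonomous Database? Describe in one paragraph',
                  profile_name => 'GENAI',
                  action       => 'CHAT',
                  params       => '{"conversation_id":"' || l_db_topic || '"}');
  DBMS_OUTPUT.put_line(DBMS_LOB.substr(l_response, 4000, 1));

  -- A prompt on an unrelated topic goes into the second conversation
  l_response := DBMS_CLOUD_AI.generate(
                  prompt       => 'What is the difference in weather between Seattle and San Francisco',
                  profile_name => 'GENAI',
                  action       => 'CHAT',
                  params       => '{"conversation_id":"' || l_city_topic || '"}');
  DBMS_OUTPUT.put_line(DBMS_LOB.substr(l_response, 4000, 1));

  -- A follow-up in the first conversation relies on its stored context only
  l_response := DBMS_CLOUD_AI.generate(
                  prompt       => 'Summarize that in one sentence',
                  profile_name => 'GENAI',
                  action       => 'CHAT',
                  params       => '{"conversation_id":"' || l_db_topic || '"}');
  DBMS_OUTPUT.put_line(DBMS_LOB.substr(l_response, 4000, 1));
END;
/
```

The output is truncated to the first 4000 characters of each response to keep DBMS_OUTPUT lines within its limits.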

Comparing conversation capabilities

The following table compares the first- and next-generation conversation capabilities.

| Comparison Point | First-generation conversations | Next-generation conversations |
|---|---|---|
| How to enable? | Defined as an AI profile attribute: {"conversation": true or false} | Enabled using create_conversation or set_conversation_id, or specified in DBMS_CLOUD_AI.generate. |
| How many conversations are allowed? | In each database session, there is at most one conversation. | Multiple conversations can be created and used. |
| Where are prompts stored? | In a temporary table in the user schema. | Prompts are stored in a separate permanent table. |
| How many prompts will be stored? | Maximum of 10. Users cannot customize this limit. | All prompts can be stored up to the user-specified limit. |
| How long will prompts be stored? | Once the database session ends, all prompts in the conversation are dropped. | Prompts are stored until the conversation’s retention period expires, at which point both the conversation and prompts are deleted. Individual conversations and prompts can also be manually deleted. |
| Can users remove individual prompts? | No. | Yes, using the delete_conversation_prompt procedure. |
| What prompts will be sent to LLM? | Only prompts belonging to the current user, profile, and action, provided conversation is enabled in the AI profile. | Prompts associated with the currently set conversation will be sent to the LLM. |
| Does the AI profile affect the conversation capability? | Yes. Only prompts and responses generated using the same AI profile are used. | No. While Select AI associates the AI profile with prompts and responses, it does not restrict based on the profile. All conversation content, up to the length specified, is sent regardless of the AI profile that generated the prompts and responses. |
| Does the action affect conversation behavior? | Yes. Only prompts and responses generated by the same action are included. | No. While Select AI associates the action used, all conversation content, up to the length specified, will be sent to the LLM regardless of which action was used to produce it. |
| How many prompts will be sent to LLM? | A maximum of 10 prompts, which is not customizable. | Customizable using the conversation_length parameter, which defaults to 10, with a maximum of 999. |
| Can users view the prompts in a conversation? | No. Prompts are not accessible for querying. | Yes. The user_cloud_ai_conversations and user_cloud_ai_conversation_prompts views allow users to query and review conversations and prompts. |
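The per-prompt cleanup mentioned in the table might look like the sketch below. Both the conversation_prompt_id column name and the conversation_prompt_id parameter name are assumptions, not confirmed by this article, and the prompt id is a placeholder to replace with a value returned by the query.

```sql
-- Find the prompt to remove
-- (assumes conversation_prompt_id, conversation_id and prompt columns)
SELECT conversation_prompt_id, prompt
FROM   user_cloud_ai_conversation_prompts
WHERE  conversation_id = '3A3C09EA-7DAB-A9E7-E063-9C6D46647D64';

-- Delete that single prompt from the conversation history
-- (parameter name is an assumption; substitute a real prompt id)
BEGIN
  DBMS_CLOUD_AI.delete_conversation_prompt(
    conversation_prompt_id => '<prompt id from the query above>');
END;
/
```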

Resources

- Try Select AI for free on OCI: Autonomous Database Free Trial

- Video: Getting Started with Oracle Select AI

- Documentation

- LiveLabs