In previous installments of this series, we saw that Oracle Exadata's high availability for compute nodes and storage servers goes well beyond redundancy at the component level.

In this blog post, I will explore the resiliency features Exadata brings to its internal Remote Direct Memory Access (RDMA) over Converged Ethernet switches and Host Channel Adapters (HCAs), and how Exadata helps to avoid human error.

In the introduction, we briefly discussed RDMA over Converged Ethernet (a.k.a. RoCE), so let's recap.

RoCE is the latest generation of Exadata's RDMA Network Fabric. It runs the RDMA protocol on top of Ethernet; before Exadata X8M, the internal fabric ran the RDMA protocol on InfiniBand (IB). Because the RoCE API infrastructure is identical to InfiniBand's, all existing Exadata performance features continue to benefit from RoCE. In addition, RoCE combines the scalability and bandwidth of Ethernet with the speed of RDMA, an incredible combination that has been further optimized in Exadata. The RoCE protocol is defined and maintained by the InfiniBand Trade Association (IBTA), an open consortium of companies. It is an open standard whose Linux support is maintained upstream, and it is supported by most major network card and switch vendors.

RDMA allows one computer to access data in another computer's memory directly, without operating system or CPU involvement, and provides high bandwidth and low latency. The network card reads from and writes to memory directly, without extra copying or buffering, which keeps latency low. RDMA is an integral part of the Exadata high-performance architecture. It has been tuned and enhanced over the past decade and underpins several Exadata-only technologies, such as the Exafusion Direct-to-Wire Protocol.

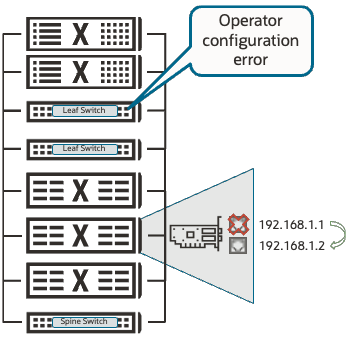

Each Exadata rack has at least two RoCE switches; if multiple Exadata racks are interconnected, an extra spine switch is added to each rack. Each compute node and storage cell is redundantly connected to the RoCE switches for maximum resiliency. Let me describe a few examples of how Exadata resiliency ensures business continuity when failures occur.

What happens if a port breaks?

Compute nodes and storage servers are redundantly connected, which makes active-active communication possible. The HCA ports are connected to the RoCE switches in an active-active configuration with integrated failover; if one port fails, the other port takes over.

All good, but dead components are easy to diagnose; what about sick components?

Oracle Exadata has extensive capabilities to diagnose sick network components, including detection of symbol errors and port flapping. Exadata System Software 24ai further improved these capabilities with the introduction of Exadata Port Monitor (ExaPortMon). This process runs on every Exadata compute node and storage server and continuously monitors Exadata's private network, which is crucial to the system's operation.

A misconfiguration or outage of a RoCE leaf switch may lead to a stalled port, meaning that the port appears online but has no network traffic flowing. ExaPortMon automatically detects stalled switch ports and moves the IP address of the stalled port to the other active RoCE port on the database or storage server, enabling network traffic to flow. The IP address is moved back to its original port when the switch port issue is resolved.
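To make the stalled-port behavior more concrete, here is a minimal Python sketch of the fail-over and fail-back logic described above. It is not ExaPortMon's implementation: the `PortSample` and `StalledPortMonitor` names, the port names `re0`/`re1`, the IP addresses, and the rule that a stalled port is one whose link is up but which saw zero packets since the last sample are all assumptions made for illustration. The sketch also assumes the monitor keeps some probe traffic flowing on a port after its IP address has moved away, so that recovery can be detected.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortSample:
    """One observation of a host RoCE port (hypothetical model)."""
    link_up: bool
    # Packets seen since the previous sample; assumed to include monitor probe
    # traffic, so a healthy port always shows some activity.
    packets_delta: int


def is_stalled(sample: PortSample) -> bool:
    # Stalled: the port appears online, but no traffic is flowing.
    return sample.link_up and sample.packets_delta == 0


def is_healthy(sample: PortSample) -> bool:
    return sample.link_up and sample.packets_delta > 0


class StalledPortMonitor:
    """Moves an IP to the peer port while its home port is stalled, then back."""

    def __init__(self, home: dict[str, str]):
        self.home = dict(home)      # IP address -> port that normally owns it
        self.current = dict(home)   # IP address -> port currently hosting it
        ports = sorted(set(home.values()))
        if len(ports) != 2:
            raise ValueError("this sketch assumes exactly two RoCE ports")
        self.peer = {ports[0]: ports[1], ports[1]: ports[0]}

    def observe(self, samples: dict[str, PortSample]) -> dict[str, str]:
        """Apply one round of samples and return the resulting IP placement."""
        for ip, home_port in self.home.items():
            peer = self.peer[home_port]
            if (self.current[ip] == home_port
                    and is_stalled(samples[home_port])
                    and is_healthy(samples[peer])):
                self.current[ip] = peer        # fail over to the active port
            elif (self.current[ip] != home_port
                    and is_healthy(samples[home_port])):
                self.current[ip] = home_port   # fail back once the issue clears
        return dict(self.current)


if __name__ == "__main__":
    mon = StalledPortMonitor({"192.168.10.1": "re0", "192.168.10.2": "re1"})
    ok = PortSample(link_up=True, packets_delta=500)
    stalled = PortSample(link_up=True, packets_delta=0)
    print(mon.observe({"re0": stalled, "re1": ok}))
    # {'192.168.10.1': 're1', '192.168.10.2': 're1'}
    print(mon.observe({"re0": ok, "re1": ok}))
    # {'192.168.10.1': 're0', '192.168.10.2': 're1'}
```

The address stays on the peer port for as long as its home port remains stalled, and returns as soon as the home port carries traffic again, mirroring the fail-back described above.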

ExaPortMon also monitors online RoCE switch ports that have network traffic flowing but may send corrupted network packets (or exhibit other symptomatic behavior). In this case, ExaPortMon identifies the sick switch and shuts off the port(s) connected to it on the database or storage server. This continuous monitoring, together with the detection and avoidance of more failure scenarios, increases Exadata's overall availability.
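The sick-switch case can be sketched in the same way. This is again a hypothetical model, not ExaPortMon's actual logic: it assumes a switch is sick when the share of corrupted packets arriving on the host port cabled to it exceeds an invented threshold (`MAX_ERROR_RATIO`), and it only covers corrupted packets, not other symptoms. The `PortCounters` fields, the switch names, and the guard that refuses to shut off every port are also additions of this sketch.

```python
from dataclasses import dataclass

# Hypothetical threshold: share of received packets that may be corrupted
# before the sending switch port is treated as sick. Not an Exadata setting.
MAX_ERROR_RATIO = 1e-4


@dataclass(frozen=True)
class PortCounters:
    """Cumulative counters of a host RoCE port (illustrative fields)."""
    rx_packets: int
    rx_corrupt: int


def is_sick(prev: PortCounters, curr: PortCounters,
            max_ratio: float = MAX_ERROR_RATIO) -> bool:
    """Traffic is flowing, but too large a share of it arrives corrupted."""
    packets = curr.rx_packets - prev.rx_packets
    corrupt = curr.rx_corrupt - prev.rx_corrupt
    return packets > 0 and corrupt / packets > max_ratio


def ports_to_shut(prev: dict[str, PortCounters],
                  curr: dict[str, PortCounters],
                  switch_of: dict[str, str],
                  max_ratio: float = MAX_ERROR_RATIO) -> set[str]:
    """Return the local ports to shut off because their switch looks sick.

    switch_of maps each local port to the leaf switch it is cabled to.
    """
    sick = {switch_of[p] for p in curr if is_sick(prev[p], curr[p], max_ratio)}
    affected = {p for p, sw in switch_of.items() if sw in sick}
    # Guard added for this sketch: never shut every port, since that would cut
    # the server off from the private network entirely.
    if affected == set(switch_of):
        return set()
    return affected


if __name__ == "__main__":
    switch_of = {"re0": "leaf-1", "re1": "leaf-2"}
    before = {"re0": PortCounters(1_000_000, 10),
              "re1": PortCounters(1_000_000, 10)}
    after = {"re0": PortCounters(2_000_000, 5_010),
             "re1": PortCounters(2_000_000, 12)}
    print(ports_to_shut(before, after, switch_of))  # {'re0'}
```

Here 5,000 of the last 1,000,000 packets on `re0` were corrupted, well above the assumed threshold, so only the port facing `leaf-1` is shut off and traffic continues over `re1`.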

What about switch maintenance?

Switches are redundant and support rolling updates, eliminating downtime during planned maintenance periods.

Exadata effectively protects against all sorts of software and hardware failures, but what about human error?

Oracle Automatic Storage Management (ASM) high redundancy maintains a primary copy and two mirror copies of the data to provide high availability.

During ASM maintenance events, Exadata further ensures resiliency by alerting administrators with an LED indicator that signals that redundancy could be compromised if the storage server is taken offline. This functionality is aware of ASM partnering, which makes it easy for data center support personnel to see the potential impact of shutting down other storage servers.
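The partner-aware check behind the LED can be illustrated with a short Python sketch. It simplifies ASM partnering to a list of extents, each recorded as the set of storage cells holding its three high-redundancy copies. The cell names, the function names, and the policy that every extent must keep at least two online copies after a cell goes offline are assumptions for illustration, not Exadata's actual rule.

```python
# Hypothetical policy for this sketch: after a cell goes offline, every extent
# should still have at least two online copies, i.e. remain mirrored.
MIN_REMAINING_COPIES = 2


def would_compromise_redundancy(cell: str,
                                extents: list[frozenset[str]],
                                offline: set[str],
                                min_remaining: int = MIN_REMAINING_COPIES) -> bool:
    """True if taking `cell` offline leaves some extent with too few copies."""
    for copies in extents:
        if cell in copies:
            remaining = copies - offline - {cell}
            if len(remaining) < min_remaining:
                return True
    return False


def warning_leds(cells: list[str],
                 extents: list[frozenset[str]],
                 offline: set[str],
                 min_remaining: int = MIN_REMAINING_COPIES) -> dict[str, bool]:
    """Map each cell to whether its (hypothetical) warning LED should be lit."""
    return {
        cell: cell not in offline
        and would_compromise_redundancy(cell, extents, offline, min_remaining)
        for cell in cells
    }


if __name__ == "__main__":
    cells = ["cel01", "cel02", "cel03", "cel04", "cel05", "cel06"]
    # Each extent's three high-redundancy copies, by cell (illustrative layout).
    extents = [frozenset({"cel01", "cel02", "cel03"}),
               frozenset({"cel04", "cel05", "cel06"}),
               frozenset({"cel01", "cel04", "cel05"})]
    print(warning_leds(cells, extents, offline={"cel02"}))
    # {'cel01': True, 'cel02': False, 'cel03': True, 'cel04': False,
    #  'cel05': False, 'cel06': False}
```

With `cel02` already offline, only its partners `cel01` and `cel03` light the LED; `cel04` through `cel06` could be serviced without leaving any extent unmirrored.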

Apart from all these Exadata-specific MAA features, there is a rich set of Oracle Clusterware (CRS) and Oracle Database features, such as Flashback, which further protects against human error by providing the ability to rewind changes at the database, table, or transaction level.

Conclusion:

This blog post aimed to expand upon the previous Exadata MAA posts in this series covering compute and storage redundancy (links to previous posts below) to illustrate how this resiliency is also a core part of the integrated networking components that make up Exadata. As this series has made clear, Exadata MAA is much more than just redundant components. The Oracle Exadata and MAA teams continue to innovate and improve the Exadata software and hardware stack to further increase resiliency, so please stay tuned for further blog posts in this series as we roll towards more chapters on Exadata resiliency and, of course, the newly released Exadata X11M.

References and further reading:

Oracle Maximum Availability Architecture

What’s new in Oracle Exadata Database Machine

Blog posts in this series:

More than Just Redundant Hardware: Exadata MAA and HA Explained – Introduction

More than Just Redundant Hardware: Exadata MAA and HA Explained – Part I, the Compute Node

More than Just Redundant Hardware: Exadata MAA and HA Explained – Part II, the Exadata Storage Cell

More than Just Redundant Hardware: Exadata MAA and HA Explained – Part III, RoCE Fabric / Human Error