Introduction

While working on SR-IOV live migration of Windows servers, I investigated several implementations: the M-to-N MUX intermediate driver, NIC Teaming, and the Hyper-V 2-netdev model. This article introduces all three, focuses primarily on the 2-netdev model, and explains how I implemented the 2-netdev model of SR-IOV live migration in the Windows VirtIO driver.

M-to-N MUX Intermediate Driver

The M-to-N MUX intermediate driver (also known as the 3-netdev model) exposes one lower protocol driver edge that communicates with the miniport driver of the network device, and one upper miniport driver edge that communicates with the top-layer protocol drivers. Any upper virtual adapter driven by the MUX model binds internally to the lower protocol interface. The MUX model also requires a COM object (notify object) that processes notifications during network setup and configuration. Implementing the MUX driver and the notify object is complicated, and restoring the network offloads requires a lot of work.

NIC Teaming

Microsoft has supported NIC Teaming since Windows Server 2012 (similar to a 1:N/Team bond in Linux). NIC Teaming can be configured in userspace. For example, a service can be implemented to configure the network automatically through NIC Teaming. However, this does not match our preference for a kernel-level implementation.

Hyper-V 2-netdev Model

The Hyper-V 2-netdev model implements both a protocol driver and a Hyper-V virtual network device driver in one driver binary. The protocol driver only binds to the VF device driver in 1:1 mode. This significantly simplifies the SR-IOV live migration implementation when compared to the MUX intermediate driver.

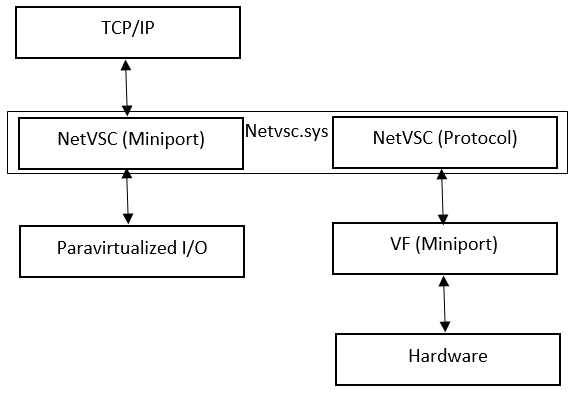

Hyper-V implementation

In Hyper-V, a specific protocol driver serves as a pipe or bridge between the Hyper-V Ethernet miniport driver (NetVSC) and the VF miniport driver. The network flow goes from the VF miniport driver through the Hyper-V protocol driver to the Hyper-V miniport driver.

The driver binary of the Hyper-V 2-netdev model is netvsc.sys. It serves not only as the Hyper-V Ethernet device miniport driver, but also as the protocol driver. That is, both the NetVSC miniport driver and the protocol driver sit in the same netvsc.sys binary, but they have separate INF installation files: wnetvsc.inf and wnetvsc_vfpp.inf (refer to the contents of these two files shown below for more details).

In wnetvsc_vfpp.inf, netvsc.sys is the ServiceBinary of the NetVSC protocol driver. Generally, this driver binary is located in the folder %SystemRoot%\system32\drivers, and its INF file is located in the %SystemRoot%\INF folder.

wnetvsc_vfpp.inf (Hyper-V protocol installation file):

[netvscvfpp.AddService]

ServiceType = 1 ;SERVICE_KERNEL_DRIVER

StartType = 3 ;SERVICE_DEMAND_START

ErrorControl = 1 ;SERVICE_ERROR_NORMAL

ServiceBinary = %12%\netvsc.sys

In the wnetvsc.inf file, netvsc.sys is the ServiceBinary of the NetVSC miniport driver.

wnetvsc.inf (Hyper-V Network Adapter installation file):

[netvscvfpp.AddService]

ServiceType = 1 ; SERVICE_KERNEL_DRIVER

StartType = 3 ; SERVICE_DEMAND_START

ErrorControl = 1 ; SERVICE_ERROR_NORMAL

ServiceBinary = %12%\netvsc.sys

The Hyper-V NetVSC protocol driver only binds with the VF miniport driver, and this protocol driver only exposes LowerRange as “ndisvf”, as seen in wnetvsc_vfpp.inf below.

wnetvsc_vfpp.inf (Hyper-V protocol installation file):

[netvscvfpp.ndi.AddReg]

HKR, Ndi, HelpText, 0, "%netvscvfpp_Help%"

HKR, Ndi, Service, 0, "netvscvfpp"

HKR, Ndi\Interfaces, UpperRange, 0, "noupper"

HKR, Ndi\Interfaces, LowerRange, 0, "ndisvf"

In Hyper-V, all VF network device drivers expose the UpperRange as “ndisvf”. As a result, the VF network device can only bind with the NetVSC protocol driver. No other protocol drivers get involved.

For example, the VF device ID (0x1530) of the Intel X540 VF is advertised by the PF driver in Hyper-V (refer to the INF file netvxx64.inf below).

netvxx64.inf (Intel VF miniport installation file):

[Intel.NTamd64.6.1]

%E1530VF.DeviceDesc% = E1530, PCI\VEN_8086&DEV_1530

[E1530]

AddReg = Interfaces_iov.reg, Default.reg

[Interfaces_iov.reg]

HKR, Ndi\Interfaces, UpperRange, 0, "ndisvf"

; Device Description Strings

E1530VF.DeviceDesc = "Intel(R) X540 Virtual Function"

This mechanism significantly reduces the work in the Hyper-V 2-netdev model. The VF device binds to the NetVSC protocol driver in 1:1 mode, which eliminates the involvement of other protocol drivers such as TCP/IP. This ensures that all network traffic of the VF device is handled only by the NetVSC protocol driver. The NetVSC protocol driver then communicates with the Hyper-V NetVSC miniport driver, which talks to all the protocol drivers that sit on top of it.
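The effect of the "ndisvf" range can be illustrated with a small user-space model of the matching rule: a protocol can bind to a miniport only when the miniport's UpperRange and the protocol's LowerRange share an interface name. This is a sketch, not NDIS code. The function names `range_contains` and `can_bind` are hypothetical, and the model assumes exact, case-sensitive token matching with no whitespace trimming.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

/* Returns true when the comma-separated list `range` contains `name`
 * as a whole token. Matching is exact and case-sensitive (an assumption
 * made to keep the sketch short). */
bool range_contains(const char *range, const char *name)
{
    assert(range != NULL && name != NULL);

    size_t name_len = strlen(name);
    const char *p = range;

    while (*p != '\0') {
        size_t token_len = strcspn(p, ",");
        if (token_len == name_len && strncmp(p, name, name_len) == 0)
            return true;
        p += token_len;
        if (*p == ',')
            p++;
    }
    return false;
}

/* Models the binding rule: the miniport's UpperRange and the protocol's
 * LowerRange must have at least one interface name in common.
 * Tokens of 64 characters or more are ignored by this sketch. */
bool can_bind(const char *miniport_upper, const char *protocol_lower)
{
    assert(miniport_upper != NULL && protocol_lower != NULL);

    const char *p = miniport_upper;

    while (*p != '\0') {
        char token[64];
        size_t token_len = strcspn(p, ",");

        if (token_len > 0 && token_len < sizeof(token)) {
            memcpy(token, p, token_len);
            token[token_len] = '\0';
            if (range_contains(protocol_lower, token))
                return true;
        }
        p += token_len;
        if (*p == ',')
            p++;
    }
    return false;
}
```

With these helpers, `can_bind("ndisvf", "ndisvf")` holds for the VF miniport and the NetVSC protocol driver, while a protocol whose LowerRange lists only names such as "ndis5" never matches a miniport that exposes only "ndisvf".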

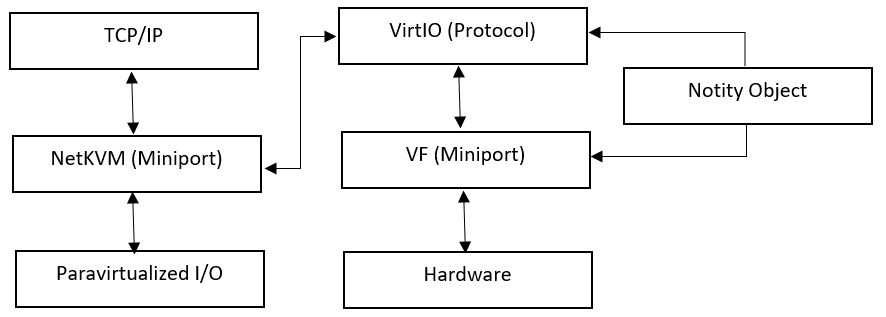

2-netdev model in KVM

VF device ID in KVM

As mentioned above, the 2-netdev model is simpler and cleaner. However, a 2-netdev implementation in VirtIO is more complicated than it is in Hyper-V. A COM object (notify object) is needed to handle binding and unbinding between the VirtIO protocol driver, the VF miniport driver, and all other miniport drivers that have the UpperRange “ndis5”. This is necessary because the PF and VF communicate through a different mechanism in KVM, and the PF advertises the VF with a different device ID from the one used in Hyper-V. This directly results in a different UpperRange binding.

For example, Intel switched to mailbox VF-PF communication starting with the X722 Ethernet network adapter, but it has been using the Hyper-V VF-PF mechanism for 1G, 10G, and some 40G network cards.

Take the Intel X540 VF as an example. The device ID of this VF is 0x1515 in a Windows guest in KVM, versus 0x1530 in Hyper-V. Let us examine the INF file of the Intel VF miniport driver (netvxx64.inf) in KVM:

netvxx64.inf (Intel VF miniport installation file):

[Intel.NTamd64.6.1]

%E1515VF.DeviceDesc% = E1515, PCI\VEN_8086&DEV_1515

[E1515]

AddReg = Interfaces.reg, Default.reg, VLAN.reg

[Interfaces.reg]

HKR, Ndi\Interfaces, UpperRange, 0, "ndis5"

As you can see, the UpperRange is “ndis5” for the miniport driver of the VF with device ID 0x1515. This means that this VF device could bind itself with all protocol drivers that have “ndis5” as the LowerRange. However, this conflicts with the idea of binding the VF miniport driver with the VirtIO protocol driver exclusively. In order to bind them in 1:1 mode, the COM object (notify object) is required.

Notify Object

A notify object is a COM object that processes notifications of the network configuration subsystem, and it sits in a DLL binary. The notify object provides different methods for different purposes. Here, a NotifyBindingPath method is introduced because it is the key part of handling binding and unbinding.

In the NotifyBindingPath method, the UpperID (protocol) and LowerID (miniport) of every binding can be obtained. By comparing the ID strings of the protocol and miniport drivers, the notify object decides whether to allow the binding between that specific protocol driver and miniport driver.
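The decision made inside NotifyBindingPath can be sketched as a pure function over the two ID strings. This is a portable model of the comparison only. It does not touch the COM interfaces, and the protocol component ID `vioprot`, the helper names, and the prefix-matching rule are assumptions made for illustration. The VF hardware ID reuses the X540 VF device ID 0x1515 mentioned above.

```c
#include <assert.h>
#include <ctype.h>
#include <stdbool.h>
#include <string.h>

/* Hypothetical IDs, used only for illustration. */
#define VIO_PROTOCOL_ID "vioprot"
#define SUPPORTED_VF_ID "PCI\\VEN_8086&DEV_1515"

/* Case-insensitive check that `id` starts with `prefix`. */
bool id_has_prefix(const char *id, const char *prefix)
{
    assert(id != NULL && prefix != NULL);

    while (*prefix != '\0') {
        if (tolower((unsigned char)*id) != tolower((unsigned char)*prefix))
            return false;
        id++;
        prefix++;
    }
    return true;
}

/* Case-insensitive equality of two ID strings. */
bool id_equals(const char *a, const char *b)
{
    return strlen(a) == strlen(b) && id_has_prefix(a, b);
}

/* Returns true when the binding path (upper protocol, lower miniport)
 * should stay enabled:
 *  - the VirtIO protocol may only bind to the supported VF, and
 *  - the supported VF may only bind to the VirtIO protocol.
 * Binding paths that involve neither component are left alone. */
bool should_keep_binding(const char *upper_id, const char *lower_id)
{
    bool upper_is_vio = id_equals(upper_id, VIO_PROTOCOL_ID);
    bool lower_is_vf = id_has_prefix(lower_id, SUPPORTED_VF_ID);

    if (upper_is_vio || lower_is_vf)
        return upper_is_vio && lower_is_vf;
    return true;
}
```

In a real notify object, a `false` result would correspond to disabling that binding path. The sketch only captures the string comparison that drives the decision.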

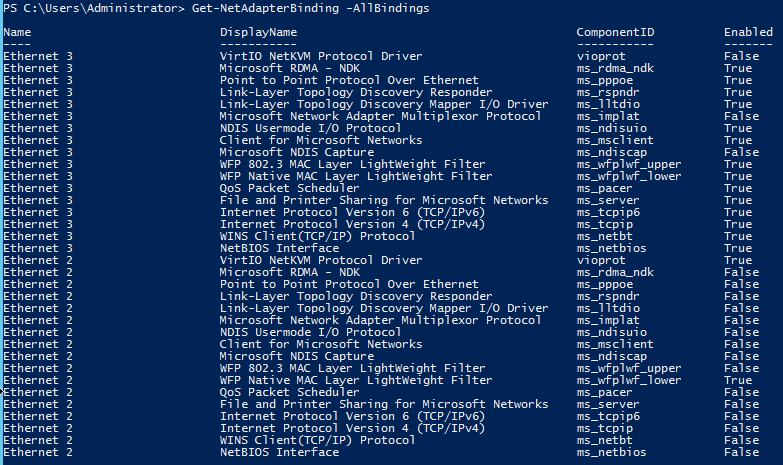

The following command shows how the protocol binding looks after unbinding other protocols from the VF miniport driver,

The notify object is installed through a protocol INF, vioprot.inf.

vioprot.inf (VirtIO protocol installation file):

[CpyFiles_Dll]

netkvmno.dll,,,2

VirtIO Protocol Driver

The VirtIO protocol driver works as a bridge between the VirtIO and VF miniport drivers. Since the protocol driver sits in the same binary as the VirtIO miniport driver, and Windows does not allow two DriverEntry routines in one binary, the protocol driver has to be registered in the NetKVM driver’s DriverEntry code.

After the protocol driver is registered and a new VF device binds to it, the protocol driver needs to detect the MAC address of the attached VF device and compare it with the MAC address of the VirtIO device. If the two MAC addresses match, the protocol driver saves the BindingHandle of the VF miniport device in the corresponding VirtIO NetKVM device’s context. This can be done in the function ndisprotCreateBinding(), and the VirtIO NetKVM driver can then communicate with the VF driver through this BindingHandle.
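The MAC comparison and the handle bookkeeping described above can be modelled in a few lines of portable C. This is a simplified sketch under stated assumptions: `struct virtio_adapter` and `attach_vf` are hypothetical names, the binding handle is represented as an opaque pointer, and the real driver keeps considerably more state per adapter.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAC_LEN 6

/* Minimal stand-in for a NetKVM adapter context (hypothetical layout). */
struct virtio_adapter {
    unsigned char mac[MAC_LEN];
    void *vf_binding_handle; /* NULL while no VF is attached */
};

/* Looks for the VirtIO adapter whose MAC equals the VF's MAC and stores
 * the VF binding handle in its context.
 * Returns the index of the matching adapter, or -1 if none matches. */
int attach_vf(struct virtio_adapter *adapters, size_t count,
              const unsigned char vf_mac[MAC_LEN], void *binding_handle)
{
    assert(adapters != NULL && vf_mac != NULL && binding_handle != NULL);

    for (size_t i = 0; i < count; i++) {
        if (memcmp(adapters[i].mac, vf_mac, MAC_LEN) == 0) {
            adapters[i].vf_binding_handle = binding_handle;
            return (int)i;
        }
    }
    return -1;
}
```

A VF whose MAC address matches no VirtIO adapter leaves every context untouched, so the data path of those adapters keeps using VirtIO only.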

After the protocol driver gets installed, all network devices that have the “ndis5” UpperRange will bind to it. The notify object should unbind all network miniport devices, except for the supported VF miniport device, from this protocol driver.

The protocol driver sits in the same NetKVM driver binary, and it is installed by the vioprot.inf file. However, this INF file isn’t responsible for adding the NetKVM driver binary into the Windows system path during installation. Instead, the driver binary is copied by the NetKVM driver INF file, i.e. when the NetKVM miniport driver gets installed. Nothing needs to be modified in the NetKVM driver installation file. The notify object is installed by vioprot.inf, as shown in the following:

vioprot.inf (VirtIO protocol installation file):

[Inst_Ndi]

HKR, Ndi, ClsID, 0, {5a3db745-e424-4402-a575-af5e4bc65b1d}

HKR, Ndi, ComponentDll, , netkvmno.dll

HKR,Ndi,Service,,"Ndisprot"

HKR,Ndi,HelpText,,%NDISPROT_HelpText%

HKR,Ndi\Interfaces, UpperRange,, noupper

HKR,"Ndi\Interfaces","LowerRange",,"ndis5,ndis4,ndis5_prot"

[NDISPROT_Service_Inst]

DisplayName = %NDISPROT_Desc%

ServiceType = 1 ;SERVICE_KERNEL_DRIVER

StartType = 3 ;SERVICE_DEMAND_START

ErrorControl = 1 ;SERVICE_ERROR_NORMAL

ServiceBinary = %12%\netkvm.sys

netkvm.inf (VirtIO network driver installation file):

[kvmnet6.CopyFiles]

netkvm.sys,,,2

[kvmnet6.Service]

DisplayName = %kvmnet6.Service.DispName%

ServiceType = 1 ;%SERVICE_KERNEL_DRIVER%

StartType = 3 ;%SERVICE_DEMAND_START%

ErrorControl = 1 ;%SERVICE_ERROR_NORMAL%

ServiceBinary = %12%\netkvm.sys

Communication between VirtIO and VF

Communication between the VirtIO device and the VF device mainly occurs in the TX path, the RX path, and OID requests. The BindingHandle of the VF miniport driver is the key to this communication.

TX path (SendNetBufferListsHandler / CancelSendHandler)

When the NetKVM driver forwards a TX packet to the VF miniport driver, the NetBufferList of the TX packet contains the SourceHandle that was originally provided by NDIS. This handle should be saved in the protocol driver. After the packets are sent out by the VF miniport, the protocol driver has to complete the NetBufferList through the original SourceHandle. Otherwise, things will not function properly, because NDIS expects the original SourceHandle in the completion routine. To send packets, the NetKVM miniport driver calls NdisSendNetBufferLists with the VF miniport binding handle. Cancellation goes through CancelSendNetBufferListsToVF.
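The save-and-restore handling of SourceHandle can be modelled without NDIS. The sketch below uses a stripped-down stand-in for a NetBufferList. `struct nbl`, `tx_forward_to_vf`, and `tx_complete_from_vf` are hypothetical names, and storing the saved handle inside the list itself is an assumption made for brevity (a real driver would keep it in its own per-list context).

```c
#include <assert.h>
#include <stddef.h>

/* Simplified stand-in for a NetBufferList: only the fields this sketch needs. */
struct nbl {
    void *source_handle;       /* handle the list must be completed with */
    void *saved_source_handle; /* original handle, kept while the VF owns the list */
};

/* Before handing the list to the VF miniport: remember the handle that
 * NDIS originally provided, then stamp the list with the VF binding handle. */
void tx_forward_to_vf(struct nbl *list, void *vf_binding_handle)
{
    assert(list != NULL && vf_binding_handle != NULL);

    list->saved_source_handle = list->source_handle;
    list->source_handle = vf_binding_handle;
}

/* In the send-complete path: restore the original handle and return it,
 * so the caller can complete the list to NDIS with the handle it expects. */
void *tx_complete_from_vf(struct nbl *list)
{
    assert(list != NULL);

    list->source_handle = list->saved_source_handle;
    list->saved_source_handle = NULL;
    return list->source_handle;
}
```

The important property is symmetry: whatever handle the list carried when it entered the NetKVM send handler is the handle it carries again when the send is completed upward.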

RX path (ReturnNetBufferListsHandler)

ReturnNetBufferListsHandler returns packets through the VF miniport’s binding handle. NdisMIndicateReceiveNetBufferLists provides the NetBufferLists to the upper protocols through the appropriate NDIS handle. When the underlying VF miniport driver calls NdisMIndicateReceiveNetBufferLists to indicate NetBufferLists to the VirtIO protocol driver, the protocol driver’s ReceiveNetBufferListsHandler is triggered. It then has to call NdisMIndicateReceiveNetBufferLists with the NetKVM NDIS handle to pass the NetBufferLists to all protocol drivers that sit on top of the NetKVM miniport driver.

Request OIDs (OidRequestHandler)

Currently, the NetKVM driver forwards all OIDs to the VF miniport driver in the MPForwardOidRequest function when the VF binding handle is valid. The VF miniport driver processes these OIDs just as it did before. It does not behave differently whether the OIDs are forwarded by the VirtIO driver or come from NDIS in the normal case (the non-2-netdev model). The protocol driver provides callback functions to complete these OIDs. MPQueryInformation and MPSetInformation call MPForwardOidRequest to forward the OID request from NetKVM to the VF.
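The forwarding rule ("forward when the VF binding handle is valid") can be sketched as a small dispatch function. This is a user-space model only. `struct oid_adapter`, `dispatch_oid`, and the counters are hypothetical, and handling the OID in NetKVM itself when no VF is bound is an assumption about the fallback, not something taken from the driver source.

```c
#include <assert.h>
#include <stddef.h>

enum oid_target {
    OID_HANDLED_BY_NETKVM, /* no VF bound: NetKVM answers the request itself */
    OID_FORWARDED_TO_VF    /* VF bound: request is passed down to the VF */
};

/* Minimal adapter state for the sketch (hypothetical layout). */
struct oid_adapter {
    void *vf_binding_handle;          /* NULL when no VF is bound */
    unsigned long last_forwarded_oid; /* last OID handed to the VF */
    unsigned forwarded_count;
    unsigned local_count;
};

/* Decides where an OID request goes, based only on whether a VF binding
 * handle is currently available. */
enum oid_target dispatch_oid(struct oid_adapter *adapter, unsigned long oid)
{
    assert(adapter != NULL);

    if (adapter->vf_binding_handle != NULL) {
        adapter->last_forwarded_oid = oid;
        adapter->forwarded_count++;
        return OID_FORWARDED_TO_VF;
    }
    adapter->local_count++;
    return OID_HANDLED_BY_NETKVM;
}
```

Because the decision depends only on the binding handle, clearing the handle when the VF is unbound is enough to switch OID handling back to the VirtIO path in this model.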

Summary

The Oracle development source code tree is available here:

https://github.com/oracle/kvm-guest-drivers-windows/tree/sriov

This source code tree contains our original implementation of 2-netdev SR-IOV live migration. We shared this code tree and documentation with the upstream maintainers, and after evaluation they decided to re-implement this 2-netdev model from scratch. Their implementation is available here: