Introduction

In scalable cloud virtualized environments, we often increase or decrease the number of virtual CPUs (vCPUs) of a guest virtual machine (VM) to match dynamic workload requirements, which saves resources and reduces costs.

- vCPU Hotplug: The operation to increase vCPUs for a live/running guest is called vCPU Hotplug.

- vCPU Hotunplug: The operation to decrease vCPUs for a live/running guest is called vCPU Hotunplug.

We will delve into how libvirt performs these operations behind the scenes for a running guest VM. Unless otherwise mentioned, this blog uses the qemu-kvm hypervisor and libvirt version 9.0.0-3, available with Oracle Linux 8 in the virt:kvm_utils3 module stream. To follow this blog in a hands-on approach, feel free to use the steps in https://blogs.oracle.com/linux/post/using-systemtap-for-tracing-libvirt to prepare your setup.

On the host machine running libvirtd, we can see the vCPU threads running on our system and associated with the guest by running the following command (use PID of the guest with -p option):

$ ps -p $(pgrep qemu-kvm) -L

PID LWP TTY TIME CMD

57793 57793 ? 00:00:00 qemu-kvm

57793 57808 ? 00:00:00 qemu-kvm

57793 57813 ? 00:00:00 IO mon_iothread

57793 57814 ? 00:00:12 CPU 0/KVM

57793 57815 ? 00:00:07 CPU 1/KVM

57793 58627 ? 00:00:03 CPU 2/KVM

57793 58628 ? 00:00:00 CPU 3/KVM

57793 58629 ? 00:00:00 CPU 4/KVM

57793 59612 ? 00:00:00 CPU 5/KVM

After a successful vCPU hotplug operation, there will be more vCPU threads in the above output and, similarly, after a successful vCPU hotunplug operation, there will be fewer vCPU threads in the above output.
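To compare the thread list before and after an operation, we can extract the vCPU threads from the ps output programmatically. The following is a minimal sketch, assuming the vCPU threads are named "CPU N/KVM" as in the output above; the helper name vcpu_thread_ids is our own and not part of libvirt or qemu.

```python
import re

# Thread name used for KVM vCPU threads in the ps output above, e.g. "CPU 3/KVM".
VCPU_THREAD = re.compile(r"CPU (\d+)/KVM\s*$")


def vcpu_thread_ids(ps_output: str) -> dict:
    """Map vCPU index -> LWP (thread ID) from `ps -L` output.

    Hypothetical helper for illustration only.
    """
    threads = {}
    for line in ps_output.splitlines():
        match = VCPU_THREAD.search(line)
        if match:
            # Columns of `ps -L` are: PID LWP TTY TIME CMD
            threads[int(match.group(1))] = int(line.split()[1])
    return threads


sample = """\
PID LWP TTY TIME CMD
57793 57793 ? 00:00:00 qemu-kvm
57793 57813 ? 00:00:00 IO mon_iothread
57793 57814 ? 00:00:12 CPU 0/KVM
57793 57815 ? 00:00:07 CPU 1/KVM
"""
print(vcpu_thread_ids(sample))
```

Running the helper before and after a hotplug and comparing the dictionaries shows which vCPU index and thread ID were added.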

When performing multiple vCPU hotplugs or hotunplugs at one time, the operation is actually performed one vCPU at a time. As such, and for the sake of simplicity, we will now describe first how one vCPU hotplug operation works and then how one vCPU hotunplug works.

vCPU Hotplug

If a running guest named ol8 has 5 vCPUs (and a maximum of at least 6 vCPUs), we can perform a vCPU hotplug operation using a simple command:

$ virsh setvcpus ol8 --count 6 --live

Since the target vCPU count is greater than the current vCPU count by 1, the above operation performs 1 vCPU hotplug. That is, it will perform 1 vCPU thread creation and, after the operation, the guest will have a total of 6 vCPU threads.
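The arithmetic behind this decision can be sketched in a few lines. This is an illustration under our own assumptions, not libvirt's code: plan_vcpu_change is a hypothetical helper, and the checks are limited to the comparison between the current, target, and maximum vCPU counts described in this blog.

```python
def plan_vcpu_change(current: int, target: int, maximum: int):
    """Return (operation, count) for a `virsh setvcpus --live` style request.

    Hypothetical helper: each unit of `count` is one single-vCPU operation.
    """
    if target < 1:
        raise ValueError("a guest needs at least one vCPU")
    if target > maximum:
        raise ValueError(f"target {target} exceeds maximum {maximum}")
    if target > current:
        return ("hotplug", target - current)
    if target < current:
        return ("hotunplug", current - target)
    return ("none", 0)


print(plan_vcpu_change(current=5, target=6, maximum=6))
```

A request from 5 to 8 vCPUs would yield three hotplug operations, which matches the one-vCPU-at-a-time behavior described earlier.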

The trace of communication between libvirt and qemu during a hotplug operation using systemtap shows the following:

$ stap /usr/share/doc/libvirt-docs/examples/systemtap/qemu-monitor.stp

2.106 > 0x7f2680005740 {"execute":"device_add","arguments":{"driver":"Broadwell-IBRS-x86_64-cpu","id":"vcpu5","core-id":0,"thread-id":0,"socket-id":5},"id":"libvirt-472"}

2.109 ! 0x7f2680005740 {"timestamp": {"seconds": 1716988519, "microseconds": 57036}, "event": "ACPI_DEVICE_OST", "data": {"info": {"device": "vcpu5", "source": 1, "status": 0, "slot": "5", "slot-type": "CPU"}}}

2.158 < 0x7f2680005740 {"return": {}, "id": "libvirt-472"}

2.159 > 0x7f2680005740 {"execute":"query-hotpluggable-cpus","id":"libvirt-473"}

2.160 < 0x7f2680005740 {"return": [{"props": {"core-id": 0, "thread-id": 0, "socket-id": 19}, "vcpus-count": 1, "type": "Broadwell-IBRS-x86_64-cpu"}, {"props": {"core-id": 0, "thread-id": 0, "socket-id": 18}, "vcpus-count": 1, "type": "Broadwell-IBRS-x86_64-cpu"}, {"props": {"core-id": 0, "thread-id": 0, "socket-id": 17}, "vcpus-count": 1, "type": "Broadwell-IBRS-x86_64-cpu"}, {"props": {"core-id": 0, "thread-id": 0, "socket-id": 16}, "vcpus-count": 1, "type": "Broadwell-IBRS-x86_64-cpu"}, {"props": {"core-id":

2.160 > 0x7f2680005740 {"execute":"query-cpus-fast","id":"libvirt-474"}

2.161 < 0x7f2680005740 {"return": [{"thread-id": 55152, "props": {"core-id": 0, "thread-id": 0, "socket-id": 0}, "qom-path": "/machine/unattached/device[0]", "cpu-index": 0, "target": "x86_64"}, {"thread-id": 55154, "props": {"core-id": 0, "thread-id": 0, "socket-id": 1}, "qom-path": "/machine/unattached/device[2]", "cpu-index": 1, "target": "x86_64"}, {"thread-id": 55187, "props": {"core-id": 0, "thread-id": 0, "socket-id": 2}, "qom-path": "/machine/peripheral/vcpu2", "cpu-index": 2, "target": "x86_64"}, {"thre

(Please read https://blogs.oracle.com/linux/post/using-systemtap-for-tracing-libvirt to learn how to trace this communication.)

As can be seen from the above trace logs, libvirt prepares a device_add QMP (QEMU Machine Protocol) command and sends it through the qemu monitor socket to the qemu process (guest VM), which performs the actual vCPU hotplug.

The unique monitor address (like 0x7f2680005740 in the above trace logs) helps differentiate between the monitors of multiple guests. By default, libvirt creates only one monitor socket per domain (guest) during its creation at /var/lib/libvirt/qemu/domain-ID-NAME/monitor.sock. We can use a libvirt command such as virsh qemu-monitor-command ol8 --hmp "info cpus" to send commands to qemu via this default monitor socket (the --hmp option sends the command in the human monitor protocol instead of as QMP JSON).
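When a trace covers several guests, it helps to split each line into its fields. Below is a minimal sketch, assuming the line layout seen in the trace above: elapsed time, a direction marker, the monitor address, and the JSON payload. The interpretation of the markers (> for a command, < for a reply, ! for an event) is inferred from the trace itself, and the names TraceLine and parse_trace_line are hypothetical.

```python
import json
from typing import NamedTuple


class TraceLine(NamedTuple):
    elapsed: float    # seconds since the trace started
    direction: str    # "command", "reply", or "event"
    monitor: str      # monitor address, unique per guest monitor
    payload: dict     # decoded JSON message


# Marker meanings inferred from the trace output shown above.
DIRECTIONS = {">": "command", "<": "reply", "!": "event"}


def parse_trace_line(line: str) -> TraceLine:
    """Split one complete trace line; truncated JSON will raise ValueError."""
    elapsed, marker, monitor, payload = line.strip().split(" ", 3)
    return TraceLine(float(elapsed), DIRECTIONS[marker], monitor, json.loads(payload))


line = '2.158 < 0x7f2680005740 {"return": {}, "id": "libvirt-472"}'
parsed = parse_trace_line(line)
print(parsed.direction, parsed.monitor, parsed.payload["id"])
```

Grouping the parsed lines by the monitor field separates the conversations of different guests.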

libvirt does more than relay commands: it validates the data exchanged with the user, qemu, and other parts of the operating system, and it handles many details automatically to simplify the process.

Besides sending a command to qemu to perform a hot(un)plug operation, libvirt also performs the following steps:

- It validates the input and output data received from various components, such as the user and qemu. For example, for hotplug it compares the target and maximum vCPU counts.

- It provides and implements various authentication and security mechanisms such as ACL (Access Control List), DAC (Discretionary Access Control), PAM (Pluggable Authentication Modules), MAC (Mandatory Access Control, like SELinux), etc.

- It checks which capabilities and features qemu supports, so that both legacy and modern qemu versions can be handled.

- It handles other tasks such as cgroup, process affinity, scheduler tuning, etc.

- It properly logs the operation’s resource change to audit logs.

A user who just wants an additional vCPU for a guest only needs to specify the target vCPU count, without worrying about the specific CPU driver, CPU topology, etc.

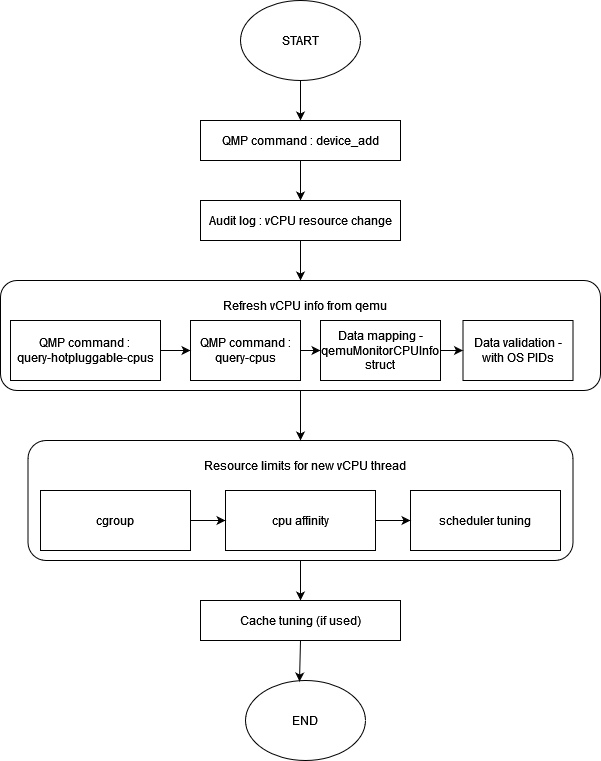

Next, let’s discuss, step by step, the operations done by libvirt during a hotplug operation, as shown in the diagram above:

1. QMP command – device_add

> {"execute":"device_add","arguments":{"driver":"Broadwell-IBRS-x86_64-cpu","id":"vcpu5","core-id":0,"thread-id":0,"socket-id":5},"id":"libvirt-472"}

! {"timestamp": {"seconds": 1716988519, "microseconds": 57036}, "event": "ACPI_DEVICE_OST", "data": {"info": {"device": "vcpu5", "source": 1, "status": 0, "slot": "5", "slot-type": "CPU"}}}

< {"return": {}, "id": "libvirt-472"}

qemuMonitorAddDeviceProps() in libvirt sends a device_add QMP command to qemu (via qemu monitor socket) requesting it to hotplug/add a new vCPU (which, according to qemu, is a device).
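To make the message structure concrete, here is a minimal sketch that assembles the same device_add command as in the trace. The function build_device_add and its parameters are our own illustration and do not mirror libvirt's internal API; the field names and values are taken from the trace above.

```python
import json


def build_device_add(driver: str, alias: str, props: dict, serial: int) -> str:
    """Assemble a device_add QMP command as a compact JSON string.

    Hypothetical helper: `props` carries the topology of the slot to fill,
    and `serial` becomes the transaction id ("libvirt-<serial>").
    """
    arguments = {"driver": driver, "id": alias}
    arguments.update(props)
    message = {"execute": "device_add", "arguments": arguments,
               "id": f"libvirt-{serial}"}
    return json.dumps(message, separators=(",", ":"))


command = build_device_add(
    driver="Broadwell-IBRS-x86_64-cpu",
    alias="vcpu5",
    props={"core-id": 0, "thread-id": 0, "socket-id": 5},
    serial=472,
)
print(command)
```

The driver and topology values are exactly what query-hotpluggable-cpus reports for the free slot, which is why the user never has to supply them.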

qemu receives this request and creates a vCPU thread on the host operating system. This improves performance of the guest (if utilized correctly), as the guest now has more CPUs and thus more execution time on the host.

If qemu does not support the QEMU_CAPS_QUERY_HOTPLUGGABLE_CPUS capability, which is checked by libvirt when it is initially started, libvirt sends a cpu-add QMP command instead. This capability check helps to support running VMs with legacy/old versions of qemu.

libvirt reads the reply from qemu for the device_add command, identified based on the transaction id (e.g., “libvirt-472” in the hotplug operation trace logs), and performs validation of the reply.
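Matching a reply to its command can be sketched as follows. This is a simplified illustration, not libvirt's implementation: find_reply is a hypothetical helper that skips asynchronous events (messages with an "event" key) and picks the message whose id matches. The error branch assumes the standard QMP error reply shape with a "desc" field.

```python
import json


def find_reply(messages, command_id: str):
    """Return the "return" payload for `command_id`, or None if not seen yet.

    Hypothetical helper: `messages` is an iterable of JSON strings read
    from the monitor, in arrival order.
    """
    for raw in messages:
        message = json.loads(raw)
        if "event" in message:
            continue  # asynchronous event such as ACPI_DEVICE_OST, not a reply
        if message.get("id") != command_id:
            continue  # reply to some other command
        if "error" in message:
            raise RuntimeError(message["error"].get("desc", "unknown QMP error"))
        return message["return"]
    return None


stream = [
    '{"timestamp": {"seconds": 1716988519, "microseconds": 57036}, '
    '"event": "ACPI_DEVICE_OST", "data": {"info": {"device": "vcpu5", '
    '"source": 1, "status": 0, "slot": "5", "slot-type": "CPU"}}}',
    '{"return": {}, "id": "libvirt-472"}',
]
print(find_reply(stream, "libvirt-472"))
```

Note that in the trace the ACPI_DEVICE_OST event arrives before the reply, so the reader has to tolerate events interleaved with replies.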

2. Audit log – vCPU resource change

virDomainAuditVcpu() in libvirt logs an audit message about the vCPU resource change that was performed earlier in the hotplug operation (by sending a device_add QMP command to qemu). This system log helps indicate that an operation through libvirt attempted to increase vCPU resources for a domain.

By default, audit logs are available at /var/log/audit/audit.log. Here is a sample audit log for VM ‘ol8’ when the vCPUs increased from 5 to 6:

type=VIRT_RESOURCE msg=audit(1714646418.288:8707): pid=788976 uid=0 auid=4294967295 ses=4294967295 subj=system_u:system_r:virtd_t:s0-s0:c0.c1023 msg='virt=kvm resrc=vcpu reason=update vm="ol8" uuid=ccec7a0f-a621-410f-a1a1-7d3c42af8080 old-vcpu=5 new-vcpu=6 exe="/usr/sbin/libvirtd" hostname=? addr=? terminal=? res=success'^]UID="root" AUID="unset"
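For scripted checks, the interesting fields can be pulled out of such a record with a regular expression. The sketch below assumes the field order seen in the sample record above; parse_vcpu_audit is a hypothetical helper, and the record in the example is a shortened copy of that sample.

```python
import re

# Field order follows the sample VIRT_RESOURCE record shown above.
VCPU_CHANGE = re.compile(
    r'resrc=vcpu reason=(\S+) vm="([^"]+)" .*?old-vcpu=(\d+) new-vcpu=(\d+)'
)


def parse_vcpu_audit(record: str):
    """Extract the vCPU change from one audit record, or None if not a match."""
    if not record.startswith("type=VIRT_RESOURCE"):
        return None
    match = VCPU_CHANGE.search(record)
    if match is None:
        return None
    reason, vm, old, new = match.groups()
    return {"vm": vm, "reason": reason, "old": int(old), "new": int(new),
            "success": "res=success" in record}


# Shortened from the sample record above.
record = (
    "type=VIRT_RESOURCE msg=audit(1714646418.288:8707): pid=788976 uid=0 "
    "msg='virt=kvm resrc=vcpu reason=update vm=\"ol8\" "
    "uuid=ccec7a0f-a621-410f-a1a1-7d3c42af8080 old-vcpu=5 new-vcpu=6 "
    "exe=\"/usr/sbin/libvirtd\" hostname=? addr=? terminal=? res=success'"
)
print(parse_vcpu_audit(record))
```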

3. Refresh vCPU info from qemu

qemuDomainRefreshVcpuInfo() in libvirt refreshes the vCPU data of libvirt with qemu using the following sub-operations:

- 3.1 QMP command – query-hotpluggable-cpus

- 3.2 QMP command – query-cpus-fast

- 3.3 Data mapping into qemuMonitorCPUInfo struct

- 3.4 Validating OS level PID values

- 3.5 Updating the global vCPU information

Operations 3.1 and 3.2 are done in qemuMonitorGetCPUInfo() in libvirt, which takes a lock on the qemu monitor before fetching this information from qemu (via qemu monitor socket) and unlocks it immediately after receiving the reply for the sent QMP commands.

3.1 QMP command – query-hotpluggable-cpus

$ stap /usr/share/doc/libvirt-docs/examples/systemtap/qemu-monitor.stp

> {"execute":"query-hotpluggable-cpus","id":"libvirt-473"}

< {"return": [{"props": {"core-id": 0, "thread-id": 0, "socket-id": 19}, "vcpus-count": 1, "type": "Broadwell-IBRS-x86_64-cpu"}, {"props": {"core-id": 0, "thread-id": 0, "socket-id": 18}, "vcpus-count": 1, "type": "Broadwell-IBRS-x86_64-cpu"}, {"props": {"core-id": 0, "thread-id": 0, "socket-id": 17}, "vcpus-count": 1, "type": "Broadwell-IBRS-x86_64-cpu"}, {"props": {"core-id": 0, "thread-id": 0, "socket-id": 16}, "vcpus-count": 1, "type": "Broadwell-IBRS-x86_64-cpu"}, {"props": {"core-id":

qemuMonitorJSONGetHotpluggableCPUs() in libvirt sends a query-hotpluggable-cpus command to qemu (via qemu monitor socket) to fetch the following information:

- type: The CPU architecture model/type being emulated.

- vcpus-count: The number of vCPUs represented by 1 vCPU device/thread.

- props (aka properties): The indices of socket-id, core-id, and thread-id.

- qom-path: The qemu object model (qom) path notation used by qemu for identifying the device/vCPU. For example, /machine/unattached/device[0] for non-hotpluggable vCPUs, and /machine/peripheral/vcpu2 for hotpluggable vCPUs.

This command returns vCPU/device entities at the hotpluggable level, each of which may describe more than one guest logical vCPU/device. Each entity is represented by a qemuMonitorQueryHotpluggableCpusEntry struct, as shown below:

struct qemuMonitorQueryHotpluggableCpusEntry {

char *type; /* name of the cpu to use with device_add */

unsigned int vcpus; /* count of virtual cpus in the guest this entry adds */

char *qom_path; /* full device qom path only present for online cpus */

char *alias; /* device alias, may be NULL for non-hotpluggable entities */

/* verbatim copy of the JSON data representing the CPU which must be used for hotplug */

virJSONValuePtr props;

/* topology information -1 if qemu didn't report given parameter */

int node_id;

int socket_id;

int core_id;

int thread_id;

/* internal data */

int enable_id;

};

For offline vCPUs, libvirt receives type, props, and vcpus-count from qemu, filled in a qemuMonitorQueryHotpluggableCpusEntry struct.

For online vCPUs, libvirt receives type, props, vcpus-count, and qom_path, filled in a qemuMonitorQueryHotpluggableCpusEntry struct.

The list of struct qemuMonitorQueryHotpluggableCpusEntry entries is sorted in ascending order, using the quick sort algorithm, by socket_id, then core_id, then thread_id.
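The ordering itself is easy to illustrate. The sketch below uses a reduced, hypothetical HotpluggableEntry with only the three topology fields, and relies on Python's built-in sort (which is not quick sort) with a tuple key; only the resulting order is meant to match the description above.

```python
from dataclasses import dataclass


@dataclass
class HotpluggableEntry:
    # Reduced stand-in for qemuMonitorQueryHotpluggableCpusEntry.
    socket_id: int
    core_id: int
    thread_id: int


def sort_entries(entries):
    """Sort ascending by socket_id, then core_id, then thread_id."""
    return sorted(entries, key=lambda e: (e.socket_id, e.core_id, e.thread_id))


# qemu reports the highest socket first in the trace above (19, 18, 17, ...).
reported = [HotpluggableEntry(s, 0, 0) for s in (19, 18, 17, 16)]
print([e.socket_id for e in sort_entries(reported)])
```

After sorting, the position of an entry in the list lines up with the vCPU index that libvirt uses for it.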

3.2 QMP command – query-cpus-fast

$ stap /usr/share/doc/libvirt-docs/examples/systemtap/qemu-monitor.stp

> {"execute":"query-cpus-fast","id":"libvirt-474"}

< {"return": [{"thread-id": 55152, "props": {"core-id": 0, "thread-id": 0, "socket-id": 0}, "qom-path": "/machine/unattached/device[0]", "cpu-index": 0, "target": "x86_64"}, {"thread-id": 55154, "props": {"core-id": 0, "thread-id": 0, "socket-id": 1}, "qom-path": "/machine/unattached/device[2]", "cpu-index": 1, "target": "x86_64"}, {"thread-id": 55187, "props": {"core-id": 0, "thread-id": 0, "socket-id": 2}, "qom-path": "/machine/peripheral/vcpu2", "cpu-index": 2, "target": "x86_64"}, {"thre

qemuMonitorJSONQueryCPUs() in libvirt then sends a query-cpus-fast QMP command to qemu (via qemu monitor socket) which fetches the following information:

- thread-id: The OS-level thread ID of the vCPU thread.

- props (aka properties): The indices of socket-id, core-id, and thread-id.

- qom-path: The qemu object model (qom) path notation used by qemu for identifying the device/vCPU. For example, /machine/unattached/device[0] for non-hotpluggable vCPUs, and /machine/peripheral/vcpu2 for hotpluggable vCPUs.

- cpu-index: The index/order of vCPUs (i.e., the order in which vCPUs were enabled/inserted).

- target: The target CPU architecture to emulate (e.g., x86_64).

This command returns one device/vCPU entry for each enabled guest vCPU/device, and each entry is stored in a qemuMonitorQueryCpusEntry struct as follows:

struct qemuMonitorQueryCpusEntry {

int qemu_id; /* id of the cpu as reported by qemu */

pid_t tid;

char *qom_path;

bool halted;

};

3.3 Data mapping – qemuMonitorCPUInfo struct

qemuMonitorGetCPUInfoHotplug() in libvirt maps data obtained from the query-hotpluggable-cpus and query-cpus-fast QMP commands called earlier, and then stores this information together into a qemuMonitorCPUInfo struct in libvirt, as shown below:

struct _qemuMonitorCPUInfo {

pid_t tid;

int id; /* order of enabling of the given cpu */

int qemu_id; /* identifier of the cpu as reported by query-cpus */

/* state data */

bool online;

bool hotpluggable;

/* topology info for hotplug purposes. Hotplug of given vcpu impossible if all entries are -1 */

int socket_id;

int die_id;

int core_id;

int thread_id;

int node_id;

unsigned int vcpus; /* number of vcpus added if given entry is hotplugged */

/* name of the qemu type to add in case of hotplug */

char *type;

/* verbatim copy of the returned data from qemu which should be used when plugging */

virJSONValue *props;

/* alias of an hotpluggable entry. Entries with alias can be hot-unplugged */

char *alias;

char *qom_path;

bool halted;

};

typedef struct _qemuMonitorCPUInfo qemuMonitorCPUInfo;

qom_path is the key attribute for mapping data stored in the qemuMonitorQueryHotpluggableCpusEntry and qemuMonitorQueryCpusEntry lists. The order in which hotpluggable entries were inserted is stored in (qemuMonitorQueryHotpluggableCpusEntry*)->enable_id. To calculate it, libvirt iterates over the qemuMonitorQueryHotpluggableCpusEntry list for each entry in the qemuMonitorQueryCpusEntry list and assigns the order serially, starting from 1, but only when the qom-path element of both entries matches.
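The nested iteration described above can be sketched as follows. This is a simplified model based only on the description in this section: HotplugEntry and assign_enable_ids are hypothetical names, and the match is done with plain string equality on the qom path.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HotplugEntry:
    socket_id: int
    qom_path: Optional[str] = None  # only present for online vCPUs
    enable_id: int = 0              # 0 means no order assigned (offline)


def assign_enable_ids(cpu_qom_paths, hotplug_entries):
    """Number hotpluggable entries in the order query-cpus-fast lists them."""
    order = 1
    for cpu_path in cpu_qom_paths:          # one entry per enabled vCPU
        for entry in hotplug_entries:
            if cpu_path is None or entry.qom_path != cpu_path:
                continue
            if entry.enable_id == 0:
                entry.enable_id = order
                order += 1
            break                           # matched, go to the next vCPU
    return hotplug_entries


entries = [
    HotplugEntry(0, "/machine/unattached/device[0]"),
    HotplugEntry(1, "/machine/unattached/device[2]"),
    HotplugEntry(2, "/machine/peripheral/vcpu2"),
    HotplugEntry(3),                        # offline slot, no qom path
]
enabled = [e.qom_path for e in entries[:3]]
print([e.enable_id for e in assign_enable_ids(enabled, entries)])
```

Offline slots keep an enable_id of 0, so the field doubles as a marker for entries that have not been enabled.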

3.4 Validate OS-level PID values

For KVM, there is a 1-1 mapping between vCPU thread-ids and the host OS PIDs.

TCG (Tiny Code Generator) is an exception to the above mapping rule. In the case of TCG:

- In some cases, all vCPUs have same PID as main emulator thread.

- In other cases, the first vCPU has a distinct PID, while other vCPUs have the same PID as the main emulator thread.

For MTTCG (Multi-Threaded TCG), there is a 1-1 mapping between vCPUs and the host OS PIDs, just like with KVM.

An OS-level PID is used by libvirt for reporting data and vCPU pinning (i.e., running vCPU on certain pCPUs (physical CPUs)). Thus, in the case of TCG, which can have the same PID for multiple vCPUs, it becomes especially important to validate this data.

libvirt validates that there are no duplicate OS-level thread PIDs among the vCPUs and that they are all different from the PID of the main emulator thread.
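The check boils down to two conditions, which the following sketch expresses directly. validate_vcpu_tids is a hypothetical helper written for this blog; it only reports whether the data is usable and does not model what libvirt does when the check fails.

```python
def validate_vcpu_tids(vcpu_tids, emulator_pid: int) -> bool:
    """True if every vCPU thread ID is unique and differs from the emulator PID."""
    seen = set()
    for tid in vcpu_tids:
        if tid == emulator_pid or tid in seen:
            return False
        seen.add(tid)
    return True


# KVM-like case: one distinct thread per vCPU.
print(validate_vcpu_tids([57814, 57815, 58627], emulator_pid=57793))
# TCG-like case: all vCPUs report the main emulator thread.
print(validate_vcpu_tids([57793, 57793], emulator_pid=57793))
```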

3.5 Update guest’s vCPU data

virDomainDef struct in libvirt represents a guest domain as shown below:

# Guest VM struct

struct _virDomainDef {

...

virDomainVcpuDef **vcpus;

...

};

typedef struct _virDomainDef virDomainDef;

A virDomainDef struct (representing the domain) has the vcpus member element, which is a double pointer to a virDomainVcpuDef struct, and stores an array of vCPUs with a size equal to maxvcpus. That is, vcpus[0] to vcpus[maxvcpus-1], each being a pointer to a virDomainVcpuDef struct which represents a vCPU as shown below:

# Definition for each vcpu

struct _virDomainVcpuDef {

...

virObject *privateData;

};

typedef struct _virDomainVcpuDef virDomainVcpuDef;

The QEMU_DOMAIN_VCPU_PRIVATE() macro returns the privateData of a particular vCPU, typecasting the virObject struct pointer to a pointer to the qemuDomainVcpuPrivate struct shown below:

struct _qemuDomainVcpuPrivate {

virObject parent;

pid_t tid; /* vcpu thread id */

int enable_id; /* order in which the vcpus were enabled in qemu */

int qemu_id; /* ID reported by qemu as 'CPU' in query-cpus */

char *alias;

virTristateBool halted;

virJSONValue *props; /* copy of the data that qemu returned */

/* information for hotpluggable cpus */

char *type;

int socket_id;

int core_id;

int thread_id;

int node_id;

int vcpus;

char *qomPath;

};

typedef struct _qemuDomainVcpuPrivate qemuDomainVcpuPrivate;

The qemuDomainVcpuPrivate struct in libvirt stores the global vCPU data for the domain that is fetched from qemu and was stored in qemuMonitorCPUInfo after the mapping. This data is stored for each vCPU, whether online or offline.

When the guest VM is started, libvirt allocates memory for (virDomainDef)->vcpus as an array of pointers to virDomainVcpuDef structs, with a size equal to maxvcpus.

4. Setting resource limits for the new vCPU thread

qemuProcessSetupPid() sets up the following resource management settings for the new vCPU thread:

- cgroup

- cpu affinity

- scheduler setting

If a guest domain’s xml does not contain a CPU affinity element explicitly, the default CPU affinity for the vCPU thread is set to all online pCPUs (even if libvirtd itself is not running on all pCPUs), read from the /sys/devices/system/cpu/online file in Linux.

4.1 cgroup settings

The paths to the guest’s cgroup hierarchy relating to hot(un)plug operations are under the cpu and cpuset controllers (for libvirt version > 7.1.0):

CPU controller path: /sys/fs/cgroup/cpu,cpuacct/machine.slice/machine-qemu\x2d7\x2dol8.scope/libvirt

CPUSET controller path: /sys/fs/cgroup/cpuset/machine.slice/machine-qemu\x2d7\x2dol8.scope/libvirt

- cgroup creation: ‘CPU(SET)/vcpu5/’ directories are created for the new vCPU under both the cpu and cpuset controller paths.

- cpu affinity: ‘CPU(SET)/vcpu5/cpuset.cpus’ files are updated to the same value as the $CPU(SET)/cpuset.cpus files under the parent cgroup.

- numa affinity: ‘CPU(SET)/vcpu5/cpuset.mems’ files are updated to the same value as the $CPU(SET)/cpuset.mems file under the parent cgroup.

- cpu bandwidth: virDomainCgroupSetupVcpuBW() sets up the CPU period and CPU quota for the vcpu5 cgroup (if specified). ‘cpu bandwidth’ means that a process may consume up to $QUOTA microseconds of CPU time in each $PERIOD microseconds.

  cgroup v1 – Period and Quota are stored in the following files:

  Period: cpu.cfs_period_us (min = 1000, max = 1000000)

  Quota: cpu.cfs_quota_us (min = 1000, max = 17592186044415) (negative value means no restriction)

  cgroup v2 – The cpu.max file, of the format $QUOTA $PERIOD, contains both the quota and the period (default is “max 100000”, where “max” means no bandwidth limit).

  Period: $PERIOD in cpu.max file (min=1000, max=1000000)

  Quota: $QUOTA in cpu.max file (min=1000, max=17592186044415 (1ULL << MAX_BW_BITS based on kernel code)). If only one number is written to the cpu.max file, the CPU quota is updated.

- Apply cgroup settings to vCPU thread: virCgroupAddThread() finally adds the vCPU thread to the vcpu5 cgroup under both controllers (i.e., CPU/vcpu5 and CPUSET/vcpu5).

  cgroup v1: write pid to $CPU(SET)/vcpu5/tasks file under both CPU and CPUSET controllers.

  cgroup v2: write pid to $CPU(SET)/vcpu5/cgroup.threads file under both CPU and CPUSET controllers.
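To get a feel for the bandwidth values, here is a minimal sketch that formats and interprets a cgroup v2 cpu.max value using the limits listed above. The helpers format_cpu_max and cpu_share are our own illustration; they only build and parse strings and do not write to any cgroup file.

```python
MIN_PERIOD, MAX_PERIOD = 1000, 1_000_000
MIN_QUOTA, MAX_QUOTA = 1000, 17_592_186_044_415  # equals 2**44 - 1


def format_cpu_max(quota, period: int) -> str:
    """Build a "$QUOTA $PERIOD" string; None or a negative quota means no limit."""
    if not MIN_PERIOD <= period <= MAX_PERIOD:
        raise ValueError(f"period {period} out of range")
    if quota is None or quota < 0:
        return f"max {period}"
    if not MIN_QUOTA <= quota <= MAX_QUOTA:
        raise ValueError(f"quota {quota} out of range")
    return f"{quota} {period}"


def cpu_share(cpu_max: str):
    """Fraction of one CPU allowed by a cpu.max value, or None if unlimited."""
    quota, period = cpu_max.split()
    return None if quota == "max" else int(quota) / int(period)


value = format_cpu_max(quota=50_000, period=100_000)
print(value, cpu_share(value))
```

With a quota of 50000 and a period of 100000 (both in microseconds), the vCPU thread may use at most half of one physical CPU in each period.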

4.2 cpu affinity

virProcessSetAffinity() is called to set up pCPU affinity of the new vCPU thread (i.e., the CPU cores on which the guest’s newly created vCPU thread will run).

Based on the platform for which libvirt was built, libvirt calls one of the following functions to set the CPU affinity for the thread:

- sched_setaffinity() (WITH_DECL_CPU_SET_T on Linux systems)

- cpuset_setaffinity() (WITH_BSD_CPU_AFFINITY on BSD systems)

This function call may fail if:

- libvirtd does not have the CAP_SYS_NICE capability.

- libvirtd does not run on all CPUs.

This can happen if libvirtd is run inside a container, for example.
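The same Linux system call is exposed in Python as os.sched_setaffinity(), which makes it easy to experiment with. The sketch below is only an illustration of the call, not libvirt's code: pin_thread is a hypothetical helper, and the example re-applies the affinity the calling thread already has so that it does not need extra privileges.

```python
import os


def pin_thread(tid: int, cpus) -> set:
    """Restrict thread `tid` (0 = calling thread) to `cpus`; return the result.

    Raises OSError if the kernel rejects the request, for example when the
    caller lacks permission or the CPU set is not allowed in this cgroup.
    """
    os.sched_setaffinity(tid, cpus)
    return os.sched_getaffinity(tid)


if hasattr(os, "sched_setaffinity"):  # available on Linux only
    allowed = os.sched_getaffinity(0)
    print(sorted(pin_thread(0, allowed)))
```

Inside a container, os.sched_getaffinity(0) often returns fewer CPUs than the host has, which is the situation in which setting a wider affinity fails.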

4.3 scheduler tuning

virProcessSetScheduler() calls sched_setscheduler() to set the scheduler type and priority of the new vCPU thread.

5. Cache tuning (optional)

If the Linux feature resctrl is supported, available, and used (check if /sys/fs/resctrl is mounted and if CONFIG_X86_CPU_RESCTRL is present in kernel config), libvirt also performs the L2/L3 “cache tunings” for the newly created vCPU thread.

qemuProcessSetup() in libvirt calls qemuProcessSetupPid(), discussed above, which adds the vCPU pid to /sys/fs/resctrl/monitorID/tasks, where monitorID is a unique id generated by libvirt.

In this way, libvirt provides cache tuning for the guest’s vCPU threads, which in turn provides higher CPU performance for the guest. A sample cachetune configuration in the domain XML looks like this:

<domain>

<cputune>

...

<cachetune vcpus='0-3'>

<cache id='0' level='3' type='both' size='3' unit='MiB'/>

<cache id='1' level='3' type='both' size='3' unit='MiB'/>

<monitor level='3' vcpus='1'/>

<monitor level='3' vcpus='0-3'/>

</cachetune>

...

</cputune>

</domain>
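To see which vCPUs such a configuration covers, the XML can be inspected with Python's standard library. This is a minimal sketch under our own assumptions: parse_cpu_list and cachetune_vcpus are hypothetical helpers, and the list parser handles only comma-separated numbers and ranges such as 0-3.

```python
import xml.etree.ElementTree as ET


def parse_cpu_list(spec: str) -> set:
    """Expand a list such as "0-3" or "0,2,4-5" into a set of integers."""
    cpus = set()
    for part in spec.split(","):
        if "-" in part:
            low, high = part.split("-")
            cpus.update(range(int(low), int(high) + 1))
        else:
            cpus.add(int(part))
    return cpus


def cachetune_vcpus(domain_xml: str) -> list:
    """Return the vCPU set of every <cachetune> element under <cputune>."""
    root = ET.fromstring(domain_xml)
    return [parse_cpu_list(tune.get("vcpus"))
            for tune in root.findall("./cputune/cachetune")]


xml = """
<domain>
  <cputune>
    <cachetune vcpus='0-3'>
      <cache id='0' level='3' type='both' size='3' unit='MiB'/>
      <monitor level='3' vcpus='0-3'/>
    </cachetune>
  </cputune>
</domain>
"""
print(cachetune_vcpus(xml))
```

A hotplugged vCPU whose index falls inside one of these sets is the case in which the cache tuning step applies.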

vCPU Hotunplug

If a running guest named ‘ol8’ has 6 vCPUs (and at least one hotpluggable vCPU), we can perform a vCPU hotunplug operation using a simple command like:

$ virsh setvcpus ol8 --count 5 --live

Since the target vCPU count is less than the current vCPU count by 1 (and assuming we have hotpluggable vCPUs), the above operation performs 1 vCPU hotunplug. That is, it will perform 1 vCPU thread deletion and, after the operation, the guest will have a total of 5 vCPU threads.

Using systemtap we can trace the communication between libvirt and qemu during a vCPU hotunplug operation:

$ stap /usr/share/doc/libvirt-docs/examples/systemtap/qemu-monitor.stp

2.041 > 0x7f2680005740 {"execute":"device_del","arguments":{"id":"vcpu5"},"id":"libvirt-475"}

2.041 < 0x7f2680005740 {"return": {}, "id": "libvirt-475"}

2.042 ! 0x7f2680005740 {"timestamp": {"seconds": 1716989193, "microseconds": 700509}, "event": "ACPI_DEVICE_OST", "data": {"info": {"device": "vcpu5", "source": 3, "status": 132, "slot": "5", "slot-type": "CPU"}}}

2.058 ! 0x7f2680005740 {"timestamp": {"seconds": 1716989193, "microseconds": 716821}, "event": "DEVICE_DELETED", "data": {"path": "/machine/peripheral/vcpu5/lapic"}}

2.059 ! 0x7f2680005740 {"timestamp": {"seconds": 1716989193, "microseconds": 717019}, "event": "DEVICE_DELETED", "data": {"device": "vcpu5", "path": "/machine/peripheral/vcpu5"}}

2.059 > 0x7f2680005740 {"execute":"query-hotpluggable-cpus","id":"libvirt-476"}

2.059 ! 0x7f2680005740 {"timestamp": {"seconds": 1716989193, "microseconds": 717164}, "event": "ACPI_DEVICE_OST", "data": {"info": {"source": 3, "status": 0, "slot": "5", "slot-type": "CPU"}}}

2.060 < 0x7f2680005740 {"return": [{"props": {"core-id": 0, "thread-id": 0, "socket-id": 19}, "vcpus-count": 1, "type": "Broadwell-IBRS-x86_64-cpu"}, {"props": {"core-id": 0, "thread-id": 0, "socket-id": 18}, "vcpus-count": 1, "type": "Broadwell-IBRS-x86_64-cpu"}, {"props": {"core-id": 0, "thread-id": 0, "socket-id": 17}, "vcpus-count": 1, "type": "Broadwell-IBRS-x86_64-cpu"}, {"props": {"core-id": 0, "thread-id": 0, "socket-id": 16}, "vcpus-count": 1, "type": "Broadwell-IBRS-x86_64-cpu"}, {"props": {"core-id":

2.060 > 0x7f2680005740 {"execute":"query-cpus-fast","id":"libvirt-477"}

2.061 < 0x7f2680005740 {"return": [{"thread-id": 55152, "props": {"core-id": 0, "thread-id": 0, "socket-id": 0}, "qom-path": "/machine/unattached/device[0]", "cpu-index": 0, "target": "x86_64"}, {"thread-id": 55154, "props": {"core-id": 0, "thread-id": 0, "socket-id": 1}, "qom-path": "/machine/unattached/device[2]", "cpu-index": 1, "target": "x86_64"}, {"thread-id": 55187, "props": {"core-id": 0, "thread-id": 0, "socket-id": 2}, "qom-path": "/machine/peripheral/vcpu2", "cpu-index": 2, "target": "x86_64"}, {"thre

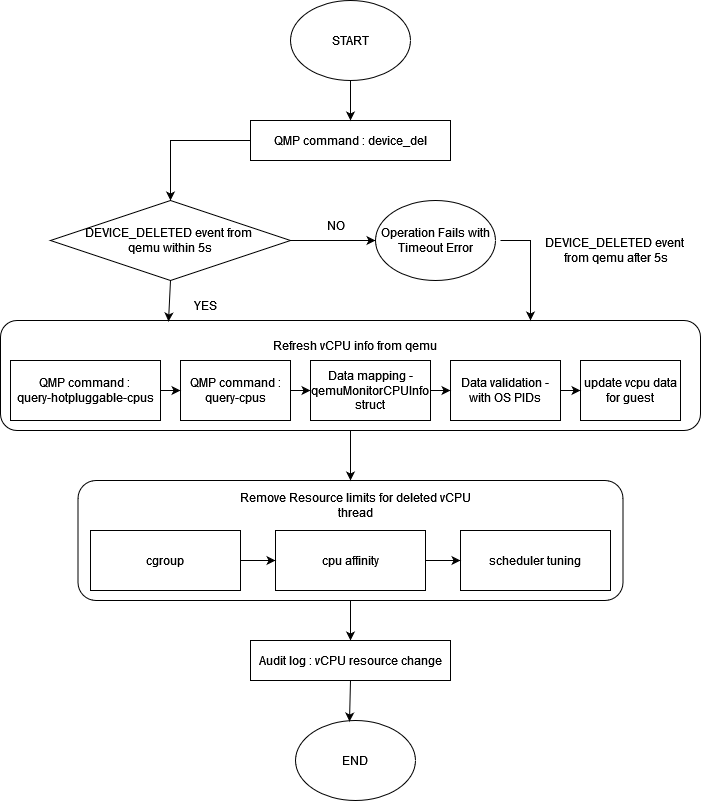

The sequence of communication between libvirt and qemu during a hotunplug operation is similar to the sequence during a vCPU hotplug operation.

The vCPU hotunplug operation is mostly just the opposite of the vCPU hotplug operation, with a few differences, such as:

- The device_del QMP command is sent to qemu instead of the device_add command used in hotplug.

- cgroup and other resource limits set for the vCPU (during hotplug or guest boot) are removed.

- libvirt waits for TIMEOUT seconds (5s generally, which is big enough!) to receive the DEVICE_DELETED event from qemu, and reports an error to the user if this event is not received within that time.

libvirt may time out during a hotunplug operation because qemu may take a while to return the DEVICE_DELETED event and, if that happens, we do not want to block the operation and keep the user who ran it waiting. Before a vCPU device can be deleted (i.e., before the vCPU thread is removed on the host), the tasks running on that vCPU must be migrated to other vCPUs. If there are heavy workloads running on the guest and the destination vCPUs are already very busy, this can get delayed. To handle this scenario, libvirt enforces a timeout in the synchronous path, and the operation appears to end with a timeout error (however, this is not the end of the story yet!).

libvirt has already sent the device_del QMP command to qemu, so a DEVICE_DELETED event will eventually arrive from qemu even though the operation itself has timed out. The per-domain event handler thread processes this event in processDeviceDeletedEvent(), which uses qemuDomainRemoveVcpuAlias() to find the vCPU to remove based on the vCPU alias (e.g., vcpu5). For the matching vCPU of the domain, qemuDomainRemoveVcpu() is called, which resumes the vCPU removal (just as in the synchronous operation), and the timed-out operation is completed in this asynchronous fashion.

Using both these synchronous and asynchronous approaches, libvirt handles vCPU hotunplug operations seamlessly.
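The interplay of the two paths can be modeled with a small sketch. This is a toy model built on Python's threading.Event, not libvirt's implementation: the class UnplugWaiter and its methods are hypothetical, and the timeout in the example is shortened so that it runs quickly.

```python
import threading


class UnplugWaiter:
    """Toy model: a synchronous wait with an asynchronous fallback."""

    def __init__(self):
        self._deleted = threading.Event()
        self._lock = threading.Lock()
        self.removed = False

    def _finish_removal(self):
        # Cleanup must run only once, whichever path gets here first.
        with self._lock:
            self.removed = True

    def wait(self, timeout: float) -> bool:
        """Synchronous path: True if the event arrived within `timeout`."""
        if self._deleted.wait(timeout):
            self._finish_removal()
            return True
        return False  # the caller reports a timeout error to the user

    def on_device_deleted(self):
        """Asynchronous path: called when DEVICE_DELETED finally arrives."""
        self._deleted.set()
        self._finish_removal()


waiter = UnplugWaiter()
print(waiter.wait(timeout=0.01), waiter.removed)  # timed out, not removed yet
waiter.on_device_deleted()                        # late event completes the removal
print(waiter.removed)
```

The user sees a timeout error from the first call, yet the vCPU still disappears once the late event is handled, which matches the behavior described above.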

After qemuDomainRefreshVcpuInfo(), virCgroupDelThread() deletes the cgroup for the vCPU (created during hotplug or first boot) under both the cpu and cpuset controllers.

virDomainAuditVcpu() logs an audit message, just as in a hotplug event, to celebrate the removal of the vCPU.

The rest of the operations performed in a vCPU hotunplug are similar to what we have already discussed for the vCPU hotplug operation sequence flow, as can be seen from the code flow diagram.