As a Product Manager at Oracle, I’m excited to collaborate with David Ruthven from Snowflake on this blog post. Together, we’re addressing one of the biggest challenges organizations face in today’s data-driven world: breaking down data silos.

Data silos are one of the biggest obstacles organizations face. Data is generated in various systems, and accessing it across platforms is often cumbersome and inefficient. Snowflake and Oracle Autonomous Database are two industry-leading platforms, but accessing data between them has historically required custom integration.

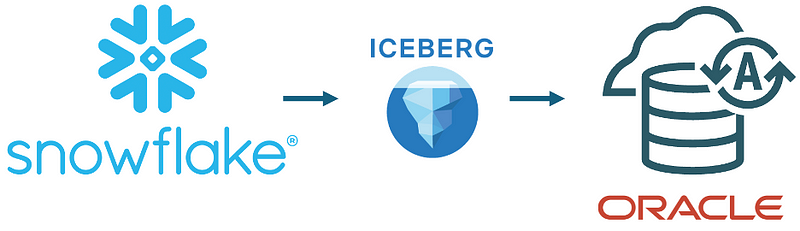

This blog demonstrates how to bridge this gap using Apache Iceberg, a modern Open Table Format for analytical data. With Iceberg, data generated in Snowflake can be easily accessed by Oracle Autonomous Database (ADB), enabling seamless cross-platform analytics.

Accessing Snowflake Iceberg Tables in Oracle Autonomous Database

Imagine this: your data is stored in Snowflake but accessing it from Oracle Autonomous Database feels like jumping through hoops. Traditionally, this meant dealing with complex ETL pipelines that drained time and resources, copying data from one system to the other.

Now, here’s the game-changer: Apache Iceberg. This modern table format allows Snowflake to store data in a way that Oracle Autonomous Database can directly access. The result? No more silos. Just seamless data sharing and simplified workflows.

Snowflake can publish and maintain an Iceberg table version of any shareable data, including any Snowflake Marketplace data, for consumption by, say, an Oracle Autonomous Data Warehouse (ADW). Using a Snowflake Dynamic Iceberg Table with CDC allows for efficient incremental maintenance of the Iceberg Table. The Iceberg table is stored in a Cloud Storage Volume where both Snowflake and Oracle ADW have been granted access.

Building Iceberg Tables in Snowflake: A Simple Three-Step Process

To enable seamless integration between Snowflake and Oracle Autonomous Database, we leverage Apache Iceberg. This three-step process outlines how to create and maintain Iceberg tables in Snowflake for cross-platform analytics.

Step 1: Create a Cloud Storage Volume

The first step is to set up a cloud storage volume to store your Iceberg tables in Parquet format. This volume serves as the shared storage layer that enables interoperability between Snowflake and Oracle Autonomous Database.

CREATE OR REPLACE EXTERNAL VOLUME ice_ext_vol STORAGE_LOCATIONS = (( NAME = ‘ice-us-east-2’ STORAGE_PROVIDER = ‘AZURE’ STORAGE_BASE_URL = ‘azure://<bucket>[/<path>/’ AZURE_TENANT_ID = ‘<tenant_id>’ ));

Step 2: Create a Synchronized Iceberg Table in Snowflake

Next, create a synchronized Iceberg table in Snowflake. This table dynamically maintains a copy of a local or shared Snowflake table, ensuring that changes are efficiently propagated to the Iceberg table.

CREATE OR REPLACE DYNAMIC ICEBERG TABLE iceberg_table TARGET_LAG = ’20 minutes’ WAREHOUSE = ice_wh CATALOG = ‘SNOWFLAKE’ EXTERNAL_VOLUME = ‘ice_ext_vol’ BASE_LOCATION = ‘iceberg_table’ AS SELECT * FROM <database>.<schema>.<table>;

Step 3: Write Metadata for External Tooling (Optional)

In some cases, you may want to export the Iceberg table metadata for use with other tools. This metadata provides essential details about the table structure and versioning.

SELECT SYSTEM$GET_ICEBERG_TABLE_INFORMATION(‘iceberg_table’);

And just like that, Snowflake is ready to share Iceberg tables with Oracle Autonomous Database. With this setup, you’re no longer limited by traditional ETL pipelines or manual data transfer methods. Instead, you have a modern, streamlined way to enable cross-platform analytics, with both platforms accessing a single source of truth.

Seamlessly Access Iceberg Tables from Oracle Autonomous Database

Let’s dive into how to make the data accessible from Oracle Autonomous Database. It’s a straightforward process, simple and straightforward. No need to wrangle with complex pipelines or custom integrations — just a few commands, and you’re good to go!

First, let’s create a credential to securely connect to the Azure storage where the Iceberg table is maintained by Snowflake. This credential allows the Oracle Autonomous Database to access the Iceberg table stored in Azure Data Lake (DFS).

BEGIN DBMS_CLOUD.CREATE_CREDENTIAL( credential_name => ‘MY_CREDENTIAL’, username => ‘your-storage-account’, password => ‘your-access-key’ ); END;

Next, we define an external table in the Oracle Autonomous Database. This step points directly to the Iceberg manifest file created by Snowflake. With just one command, Oracle knows exactly where to find and interpret the data.

BEGIN

DBMS_CLOUD.CREATE_EXTERNAL_TABLE(

table_name => ‘my_sales_iceberg’,

credential_name => ‘MY_CREDENTIAL’,

file_uri_list => ‘https://<storage_account_name>.dfs.core.windows.net/<filesystem_name>/my_sales_iceberg/metadata/95...d.metadata.json’,

format => ‘{“access_protocol”:{“protocol_type”:”iceberg”}}’

);

END;

And there you have it! Your table is ready for action. Want to explore the data? A simple SELECT statement does the trick:

SELECT * FROM my_sales_iceberg;

With just a couple of steps, you’ve turned what used to be a headache into a breeze. No more jumping through hoops or building custom ETL processes to bridge platforms. Plus, there’s no need to worry about data duplication or stale data — both Oracle Autonomous Database and Snowflake always access the latest data seamlessly. The integration is not only easy but also incredibly efficient, letting you focus on analyzing your data.

What’s Next?

Currently, accessing Iceberg tables from Oracle Autonomous Database requires specifying the manifest file. Soon, this process will become even more seamless with Polaris Iceberg REST Catalog integration. Polaris Catalog will maintain the latest version of Iceberg tables automatically, eliminating the need to manually reference manifest files and ensuring up-to-date data across platforms.

Key Takeaways

Breaking data silos is no longer just a dream. By enabling cross-platform data access with Apache Iceberg, organizations can seamlessly integrate Snowflake and Oracle Autonomous Database. Here’s what makes this solution stand out:

- Seamless Integration: Say goodbye to complex ETL pipelines and hello to simplified workflows.

- One Source of Truth: Avoid data duplication with a single, unified copy of your data.

- Always Fresh: No more stale data — both platforms always work with the latest version.

- Effortless Access: Iceberg allows both platforms to access shared data without headaches.

With these benefits, Snowflake and Oracle Autonomous Database working together through Apache Iceberg unlock a new level of data potential. Why wait? Start breaking silos today!

Learn More

Ready to dive deeper? Check out the official documentation and hands-on Live Labs to see this integration in action:

Oracle Documentation: Query Apache Iceberg Tables

Oracle Live Labs: Build a Data Lake with Autonomous Database

Snowflake Documentation: Create Dynamic Iceberg Tables

Snowflake Quickstarts: Getting started with Snowflake Dynamic Tables; Getting started with Iceberg Tables